At the latest UEFA Champions League Final, one of the world’s most anticipated annual soccer events, pop stars Marshmello, Khalid and Selena Gomez shared the stage for a dazzling opening ceremony at Portugal’s third-largest football stadium, without ever setting foot in it.

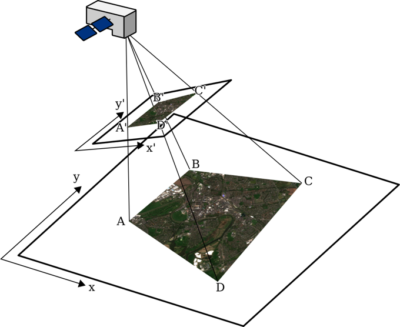

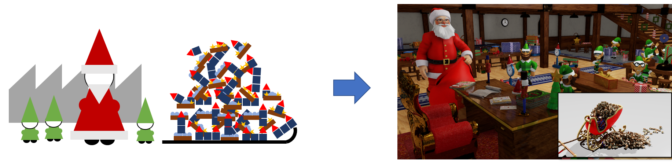

The stunning video performance took place in a digital twin of the Estádio do Dragão, or Dragon Stadium, rendered by Madrid-based MR Factory, a company that specializes in virtual production.

The studio, which has been at the forefront of virtual production for film and television since the 1990s, now brings its virtual sets to life with the help of NVIDIA Studio, RTX GPUs and Omniverse, a real-time collaboration and simulation platform.

MR Factory’s previous projects include Netflix’s popular series Money Heist and Sky Rojo, and feature films like Jeepers Creepers: Reborn.

With NVIDIA RTX technology and real-time rendering, MR Factory can create stunning visuals and 3D models faster than before. And with NVIDIA Omniverse Enterprise, MR Factory enables remote designers and artists to collaborate in one virtual space.

These advanced solutions help the company accelerate design workflows and take virtual productions to the next level.

“NVIDIA is powering this new workflow that allows us to improve creative opportunities while reducing production times and costs,” said Óscar Olarte, co-founder and CTO of MR Factory. “Instead of traveling to places like Australia or New York, we can create these scenarios virtually — you go from creating content to creating worlds.”

Setting the Virtual Stage for UEFA Champions

MR Factory took on the UEFA Champions League Final opening ceremony project while the pandemic had placed heavy restrictions on travel. The event was initially set to take place at Istanbul’s Ataturk Stadium, the largest sporting arena in Turkey.

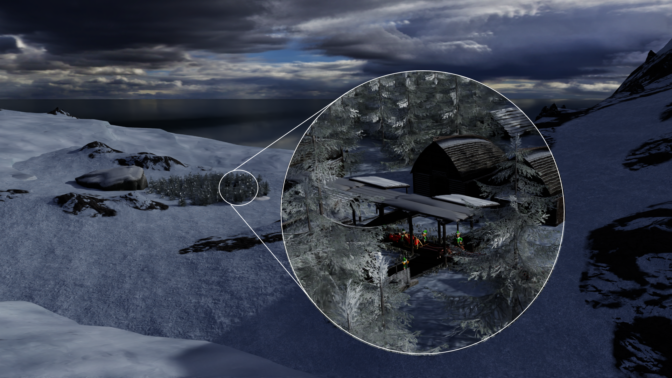

MR Factory captured images of the stadium and used them to create a 3D model for the music video. But as pandemic conditions shifted, UEFA moved the event to a stadium in Porto, on Portugal’s coast, with just two weeks left before the project’s deadline.

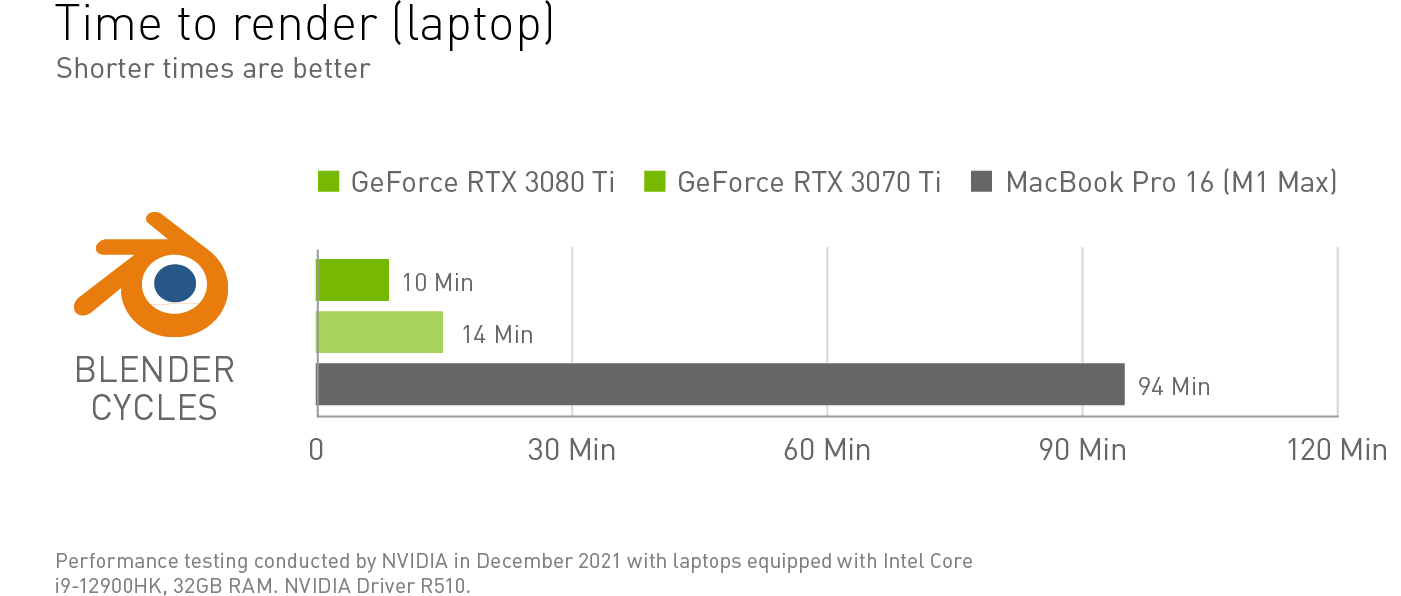

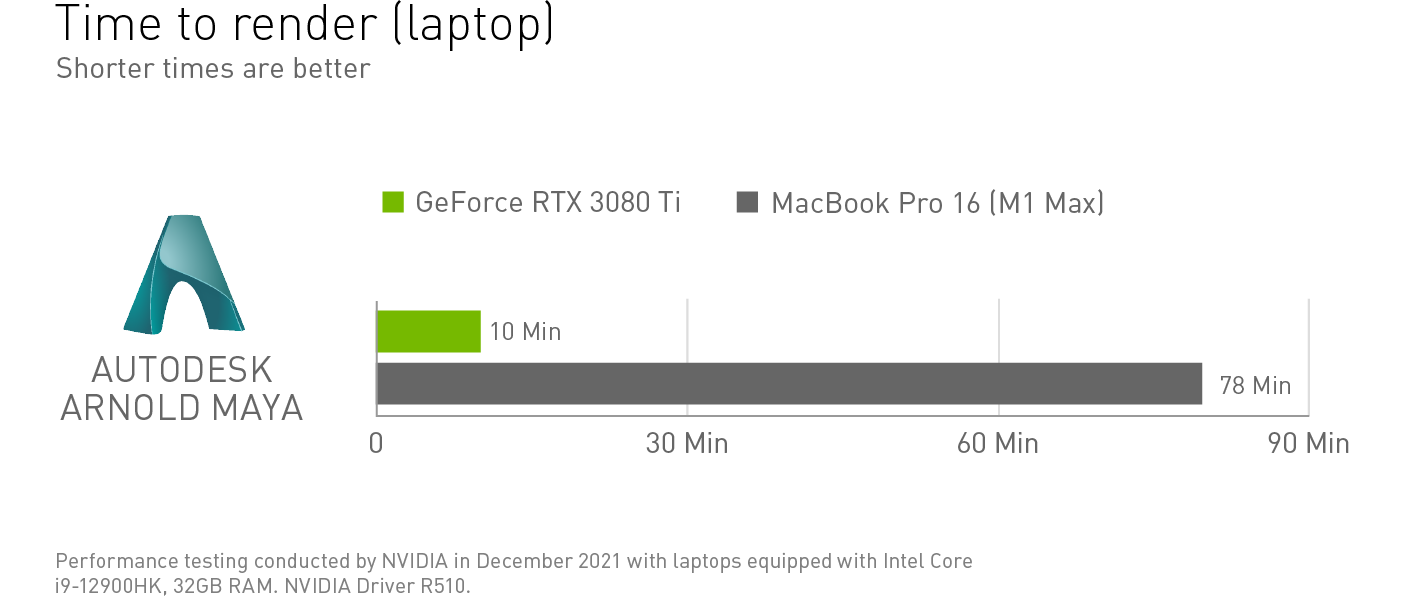

MR Factory had to quickly build a 3D model of the new stadium, relying on NVIDIA technology and real-time rendering tools to speed up its creative workflows. To create the stunning graphics and set up the scenes for virtual production, MR Factory uses leading applications such as Autodesk Arnold, DaVinci Resolve, OctaneRender and Unreal Engine.

“One of the most exciting technologies for us right now is NVIDIA RTX because it allows us to render faster, and in real time,” said Olarte. “We can mix real elements with virtual elements instantly.”

MR Factory also uses camera-tracking technology, which captures all camera and lens movements on stage. The team then uses that tracking data to combine live elements with the virtual production environment in real time.

Over 80 people across Spain worked on the virtual opening ceremony and, with the help of NVIDIA RTX, the team was able to complete the integrations from scratch, render all the visuals and finish the project in time for the event.

Making Vast Virtual Worlds

One of MR Factory’s core philosophies is enabling remote work, as this provides the company with more opportunities to hire talent from anywhere. The studio then empowers that talent with the best creative tools.

Additionally, MR Factory has been developing the metaverse as a way to produce films and television scenes. The pandemic accentuated the need for real-time collaboration and interaction between remote teams, and NVIDIA Omniverse Enterprise helps MR Factory achieve this.

With Omniverse Enterprise, MR Factory can drastically reduce production times, since multiple people can work simultaneously on the same project. Instead of completing a scene in a week, five artists can work in Omniverse and have the scene ready in a day, Olarte said.

“For us, virtual production is a way of creating worlds — and from these worlds come video games and movies,” he added. “So we’re building a library of content while we’re producing it, and the library is compatible with NVIDIA Omniverse Enterprise.”

MR Factory uses a render farm with 200 NVIDIA RTX A6000 GPUs, which provide artists with the GPU memory they need to quickly produce stunning work, deliver high-quality virtual productions and render in real time.

MR Factory plans to use Omniverse Enterprise and the render farm on future projects to streamline creative workflows and bring virtual worlds together.

The same tools that MR Factory uses to create the virtual worlds of tomorrow are also available at no cost to millions of individual NVIDIA Studio creators with GeForce RTX and NVIDIA RTX GPUs.

Learn more about NVIDIA RTX, Omniverse and other powerful technologies behind the latest virtual productions by registering for free for GTC, taking place March 21-24.

The post Peak Performance: Production Studio Sets the Stage for Virtual Opening Ceremony at European Football Championship appeared first on The Official NVIDIA Blog.