Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks and demonstrates how NVIDIA Studio technology improves creative workflows.

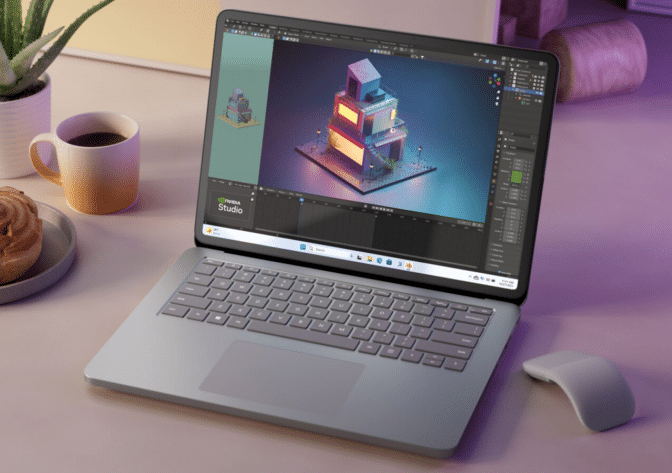

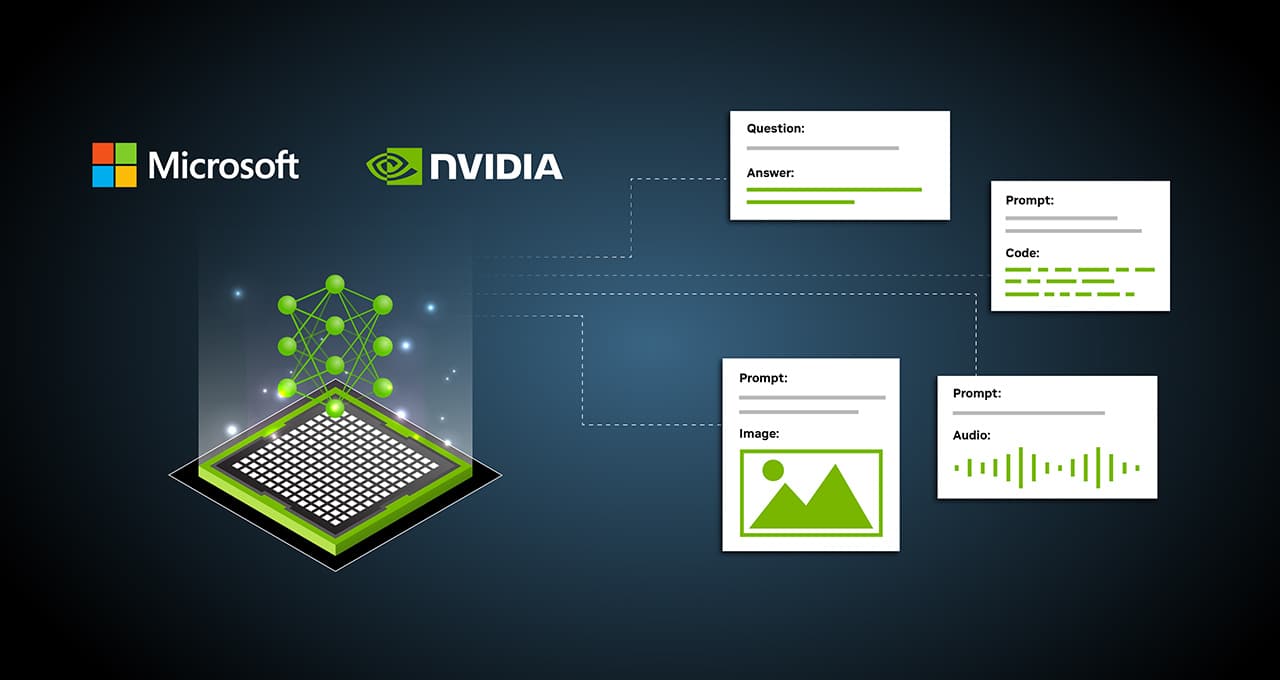

The NVIDIA Studio laptop lineup is expanding with the new Microsoft Surface Laptop Studio 2, powered by GeForce RTX 4060, GeForce RTX 4050 or NVIDIA RTX 2000 Ada Generation Laptop GPUs, providing powerful performance and versatility for creators.

Backed by the NVIDIA Studio platform, the Surface Laptop Studio 2, announced today, offers maximum stability with preinstalled Studio Drivers, plus exclusive tools to accelerate professional and creative workflows.

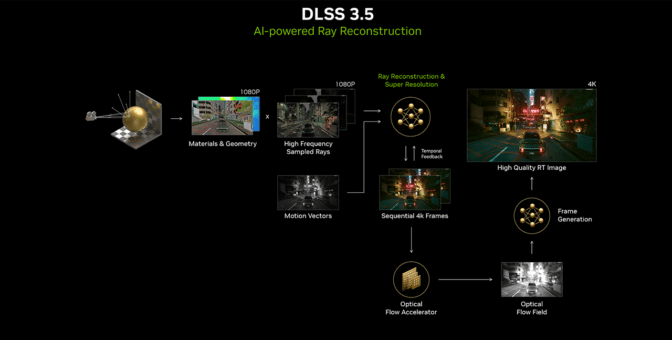

NVIDIA today also launched DLSS 3.5, adding Ray Reconstruction to the suite of AI-powered DLSS technologies. The latest feature puts a powerful new AI neural network at the fingertips of creators on RTX PCs, producing higher-quality, lifelike ray-traced images in real time before generating a full render.

Chaos Vantage is the first creative application to integrate Ray Reconstruction. For gamers, Ray Reconstruction is now available in Cyberpunk 2077 and is slated for the Phantom Liberty expansion on Sept. 26.

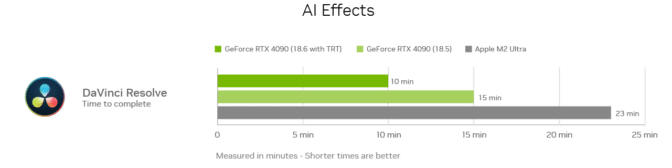

Blackmagic Design has adopted NVIDIA TensorRT acceleration in update 18.6 for its popular DaVinci Resolve software for video editing, color correction, visual effects, motion graphics and audio post-production. By integrating TensorRT, the software now runs AI tools like Magic Mask, Speed Warp and Super Scale over 50% faster than before. With this acceleration, AI runs up to 2.3x faster on GeForce RTX and NVIDIA RTX GPUs compared to Macs.

The September Studio Driver is now available for download — providing support for the Surface Laptop Studio 2, new app releases and more.

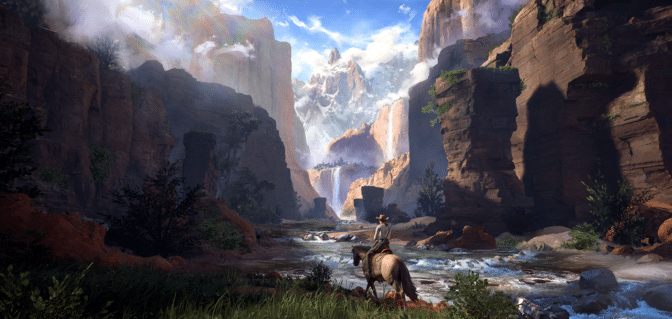

This week’s In the NVIDIA Studio installment features Studio Spotlight artist Gavin O’Donnell, who created a Wild West-inspired piece using a workflow streamlined with AI and RTX acceleration in Blender and Unreal Engine running on a GeForce RTX 3090 GPU.

Create Without Compromise

The Surface Laptop Studio 2, when configured with NVIDIA laptop GPUs, delivers up to 2x the graphics performance of the previous generation and is NVIDIA Studio validated.

In addition to NVIDIA graphics, the Surface Laptop Studio 2 comes with 13th Gen Intel Core processors, up to 64GB of RAM and a 2TB SSD. It features a bright, vibrant 14.4-inch PixelSense Flow touchscreen, with true-to-life color and up to 120Hz refresh rate, and now comes with Dolby Vision IQ and HDR to deliver sharper colors.

The system’s unique design adapts to fit any workflow. It instantly transitions from a pro-grade laptop to a perfectly angled display for entertainment to a portable creative canvas for drawing and sketching with the Surface Slim Pen 2.

NVIDIA Studio systems deliver upgraded performance thanks to dedicated ray tracing, AI and video encoding hardware. They also provide AI app acceleration, advanced rendering and Ray Reconstruction with NVIDIA DLSS, plus exclusive software like NVIDIA Omniverse, NVIDIA Broadcast, NVIDIA Canvas and RTX Video Super Resolution — helping creators go from concept to completion faster.

The Surface Laptop Studio 2 will be available beginning Oct. 3.

An AI for RTX

NVIDIA GeForce and RTX GPUs feature powerful local accelerators that are critical for AI performance, supercharging creativity by unlocking AI capabilities on Windows 11 PCs — including the Surface Laptop Studio 2.

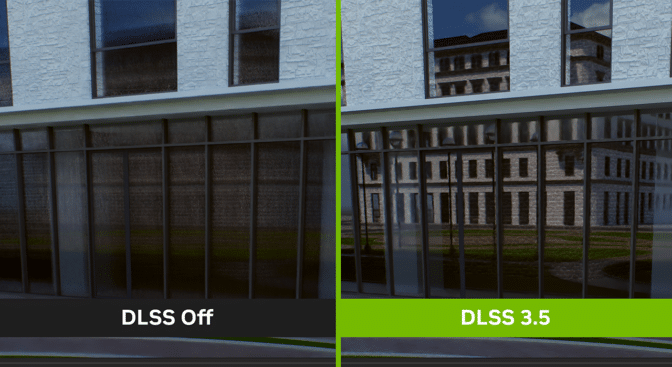

With the launch of DLSS 3.5 with Ray Reconstruction, an NVIDIA supercomputer-trained AI network replaces hand-tuned denoisers to generate higher-quality pixels between sampled rays.

Ray Reconstruction improves the real-time editing experience by sharpening images and reducing noise — even while panning around a scene. The benefits of Ray Reconstruction shine in the viewport, as rendering during camera movement is notoriously difficult.

By adding DLSS 3.5 support, Chaos Vantage will enable creators to explore large scenes in a fully ray-traced environment at high frame rates with improved image quality. Ray Reconstruction joins other AI-accelerated features in Vantage, including the NVIDIA OptiX denoiser and Super Resolution.

DLSS 3.5 and AI-powered Ray Reconstruction today join performance-multiplying AI Frame Generation in Cyberpunk 2077 with the new 2.0 update. On Sept. 26, Cyberpunk 2077: Phantom Liberty will launch with full ray tracing and DLSS 3.5.

NVIDIA TensorRT, the high-performance inference optimizer that delivers low latency and high throughput for deep learning inference applications, has been added to DaVinci Resolve in version 18.6.

The added acceleration dramatically increases performance. AI effects now run on a GeForce RTX 4090 GPU up to 2.3x faster than on an Apple M2 Ultra and 5.4x faster than on an AMD Radeon RX 7900 XTX.

Stay tuned in the weeks ahead for more AI on RTX updates, including DLSS 3.5 support coming to Omniverse this October.

Welcome to the Wild, Wild West

Gavin O’Donnell — an Ireland-based senior environment concept artist at Disruptive Games — is no stranger to interactive entertainment.

On the side, he also does freelance work, including promo art, environment design and matte painting, for an impressive client list that includes Disney, Giant Animation, ImagineFX, Netflix and more.

O’Donnell’s series of Western-themed artwork, the Wild West Project, was directly inspired by the critically acclaimed open-world adventure game Red Dead Redemption 2.

“I really enjoyed how immersive the storyline and the world in general was, so I wanted to create a scene that might exist in that fictional world,” said O’Donnell. The project also gave him an opportunity to practice new workflows in the 3D apps Blender and Unreal Engine.

He was especially interested in workflows that combine NVIDIA technologies, such as RTX GPU acceleration and AI, all accelerated by his GeForce RTX 3090 GPU.

In Blender, O’Donnell tried AI-powered, RTX-accelerated OptiX ray tracing in Blender Cycles, which enables interactive, photoreal rendering in the viewport while modeling and animating.

In Unreal Engine, O’Donnell tried NVIDIA DLSS to make the viewport more interactive, using AI to upscale frames rendered at a lower resolution while retaining high-fidelity detail. Combined with RTX-accelerated rendering for high-fidelity visualization of 3D designs, virtual production and game development, this let the artist create better, more detailed artwork faster and more easily.

O’Donnell credits his success to constantly evaluating his creative process, from his content creation techniques and sources of inspiration to his technological knowledge, so that he can produce the highest-quality artwork possible while working efficiently.

In that spirit, O’Donnell recently upgraded to an NVIDIA Studio laptop equipped with a GeForce RTX 4090 GPU, with spectacular results: his rendering in Blender, already very fast, sped up by 73%, a massive time savings for the artist.

Check out O’Donnell’s portfolio on ArtStation.

And finally, don’t forget to enter the #StartToFinish community challenge! Show us a photo or video of how one of your art projects started, and then one of the final result, using the hashtag #StartToFinish and tagging @NVIDIAStudio for a chance to be featured! Submissions will be considered through Sept. 30.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

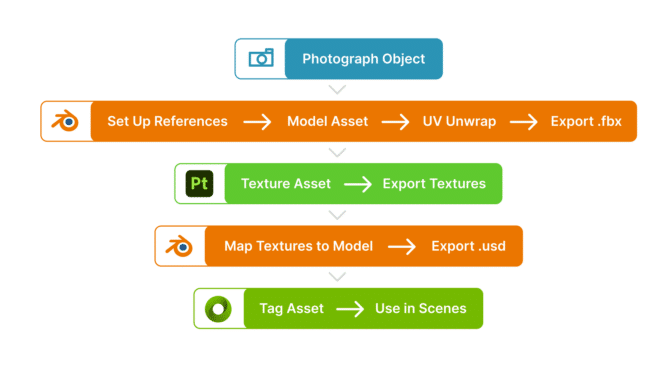
