A new AI-powered, imaging-based technology that creates accurate three-dimensional models of tumors, veins and other soft tissue offers a promising new method to help surgeons operate on, and better treat, breast cancers.

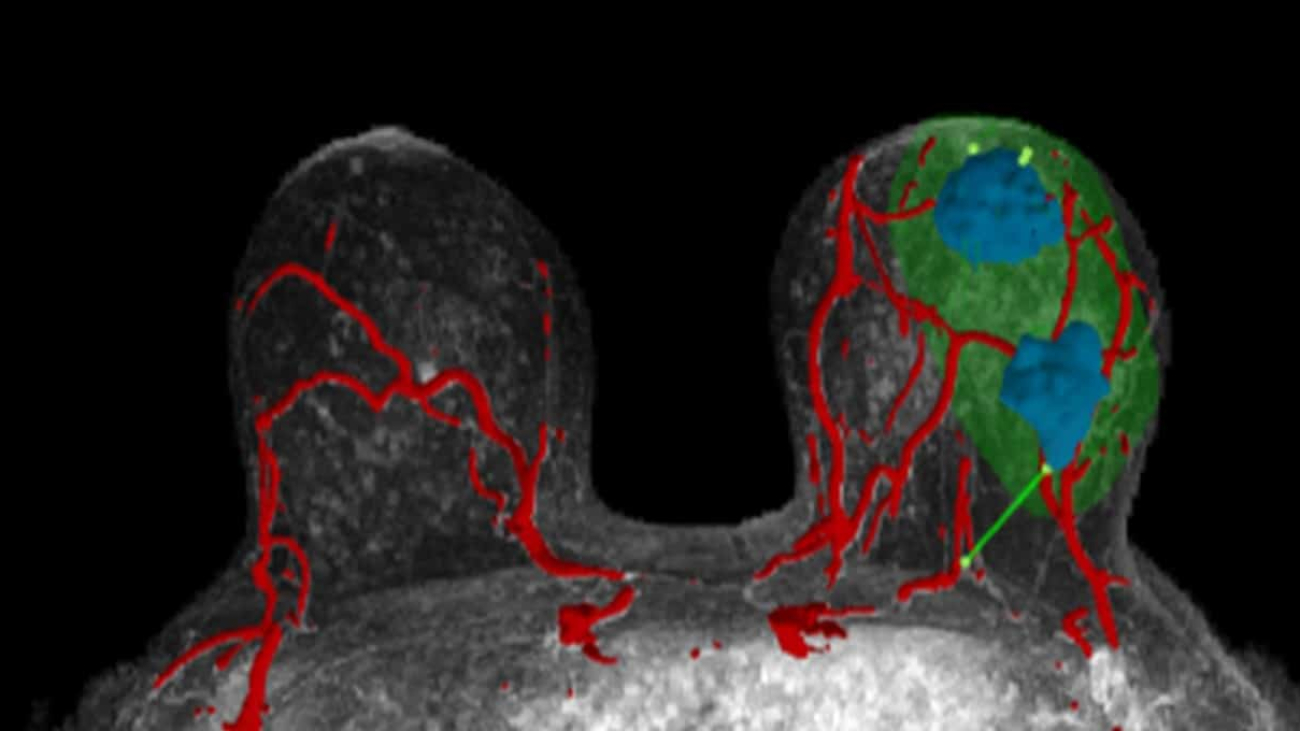

The technology, from Illinois-based startup SimBioSys, converts routine black-and-white MRI images into spatially accurate, volumetric images of a patient’s breasts. It then color-codes the breast’s structures: the vascular system, or veins, may be red; tumors are shown in blue; surrounding tissue is gray.

Surgeons can then easily manipulate the 3D visualization on a computer screen, gaining important insight to help guide surgeries and influence treatment plans. The technology, called TumorSight, calculates key surgery-related measurements, including a tumor’s volume and how far tumors are from the chest wall and nipple.

It also reports the tumor’s volume relative to the breast’s overall volume. Before a procedure begins, this ratio can help determine whether surgeons should try to preserve the breast or opt for a mastectomy, which often carries cosmetic consequences and painful side effects. Last year, TumorSight received FDA clearance.
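To make the measurements described above concrete, here is a minimal sketch of how tumor volume, tumor-to-breast volume ratio and distance to a structure such as the chest wall could be derived once an MRI scan has been segmented. It is not SimBioSys’s implementation. It assumes each structure is already available as a set of voxel indices and that the voxel spacing in millimeters is known. The names `Spacing`, `volume_cm3`, `min_distance_mm` and `tumor_to_breast_ratio` are hypothetical.

```python
import math
from dataclasses import dataclass

# Hypothetical representation: a segmented structure is a set of (i, j, k) voxel indices.
Voxel = tuple[int, int, int]


@dataclass
class Spacing:
    """Assumed physical size of one voxel along each axis, in millimeters."""
    dx: float
    dy: float
    dz: float

    @property
    def voxel_volume_mm3(self) -> float:
        return self.dx * self.dy * self.dz

    def to_mm(self, v: Voxel) -> tuple[float, float, float]:
        """Convert a voxel index to a physical position in millimeters."""
        i, j, k = v
        return (i * self.dx, j * self.dy, k * self.dz)


def volume_cm3(mask: set[Voxel], spacing: Spacing) -> float:
    """Structure volume = voxel count x voxel volume, converted from mm^3 to cm^3."""
    return len(mask) * spacing.voxel_volume_mm3 / 1000.0


def min_distance_mm(a: set[Voxel], b: set[Voxel], spacing: Spacing) -> float:
    """Smallest Euclidean distance (mm) between any voxel of `a` and any voxel of `b`.

    Brute force over all pairs: fine for a sketch, too slow for full-resolution scans.
    """
    b_mm = [spacing.to_mm(v) for v in b]
    return min(math.dist(spacing.to_mm(v), w) for v in a for w in b_mm)


def tumor_to_breast_ratio(tumor: set[Voxel], breast: set[Voxel], spacing: Spacing) -> float:
    """Fraction of the breast volume occupied by the tumor."""
    return volume_cm3(tumor, spacing) / volume_cm3(breast, spacing)


if __name__ == "__main__":
    # Toy volume: 1 mm x 1 mm in-plane resolution, 2 mm slices (made-up values).
    spacing = Spacing(1.0, 1.0, 2.0)
    breast = {(i, j, k) for i in range(50) for j in range(50) for k in range(20)}
    tumor = {(i, j, k) for i in range(10, 15) for j in range(10, 15) for k in range(5, 10)}
    chest_wall = {(i, j, 0) for i in range(50) for j in range(50)}

    print(volume_cm3(tumor, spacing))                          # 0.25
    print(f"{tumor_to_breast_ratio(tumor, breast, spacing):.2%}")  # 0.25%
    print(min_distance_mm(tumor, chest_wall, spacing))         # 10.0
```

Working in physical units (millimeters via the voxel spacing) rather than raw voxel counts matters because MRI slices are often thicker than the in-plane resolution. Ignoring the spacing would distort both volumes and distances.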

Across the world, nearly 2.3 million women are diagnosed with breast cancer each year, according to the World Health Organization. Every year, breast cancer is responsible for the deaths of more than 500,000 women. Around 100,000 women in the U.S. undergo some form of mastectomy each year, according to Brigham and Women’s Hospital.

According to Jyoti Palaniappan, chief commercial officer at SimBioSys, the company’s visualization technology offers a step-change improvement over the kind of data surgeons typically see before they begin surgery.

“Typically, surgeons will get a radiology report, which tells them, ‘Here’s the size and location of the tumor,’ and they’ll get one or two pictures of the patient’s tumor,” said Palaniappan. “If the surgeon wants to get more information, they’ll need to find the radiologist and have a conversation with them — which doesn’t always happen — and go through the case with them.”

Dr. Barry Rosen, the company’s chief medical officer, said one of the technology’s primary goals is to raise the quality and consistency of presurgical imaging, which he believes can broadly improve outcomes.

“We’re trying to move the surgical process from an art to a science by harnessing the power of AI to improve surgical planning,” Dr. Rosen said.

SimBioSys uses NVIDIA A100 Tensor Core GPUs in the cloud to pretrain its models. It also uses NVIDIA MONAI to work with its training and validation data, and NVIDIA CUDA-X libraries including cuBLAS and MONAI Deploy to run its imaging technology. SimBioSys is part of the NVIDIA Inception program for startups.

SimBioSys is already working on additional AI use cases it hopes can improve breast cancer survival rates.

It has developed a novel technique that takes MRI images of a patient’s breasts, captured while the patient lies face down, and converts them into realistic virtual 3D visualizations. These show how the tumor and surrounding tissue will appear during surgery, when the patient is lying face up.

This 3D visualization is especially useful because it shows surgeons what the breast and any tumors will look like once surgery begins.

To create this imagery, the technology calculates gravity’s effect on different kinds of breast tissue and accounts for how differences in skin elasticity change the breast’s shape when a patient is lying on the operating table.

The startup is also developing an AI-based approach that quickly provides insights to help prevent cancer recurrence.

Currently, hospital labs run pathology tests on tumors that surgeons have removed. Tissue samples are then sent to an outside lab, which conducts a more comprehensive molecular analysis.

This process can take up to six weeks. Without knowing how aggressive the cancer in the removed tumor is, or how it might respond to different treatments, patients and doctors cannot quickly chart out treatment plans to prevent recurrence.

SimBioSys’s new technology uses an AI model to analyze the 3D volumetric features of the just-removed tumor, the hospital’s initial tumor pathology report and a patient’s demographic data. From that information, SimBioSys generates — in a matter of hours — a risk analysis for that patient’s cancer, which helps doctors quickly determine the best treatment to avoid recurrence.

According to SimBioSys’s Palaniappan, the startup’s internal studies show that its new method matches or exceeds traditional methods at scoring the risk of recurrence. It also takes a fraction of the time and costs far less.
