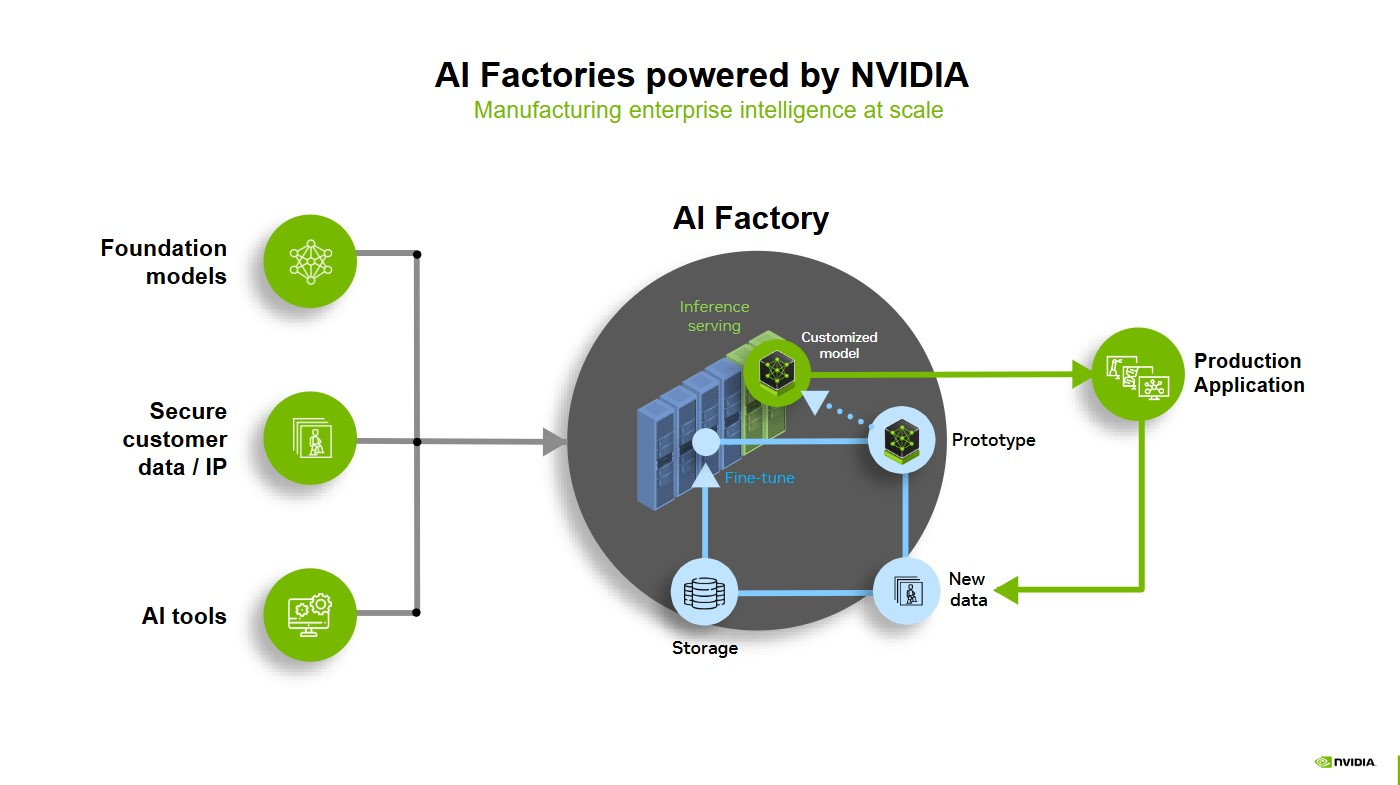

The world’s leading storage and server manufacturers are combining their design and engineering expertise with the NVIDIA AI Data Platform — a customizable reference design for building a new class of AI infrastructure — to provide systems that enable a new generation of agentic AI applications and tools.

The reference design is now being harnessed by storage system leaders globally to support AI reasoning agents and unlock the value of information stored in the millions of documents, videos and PDFs enterprises use.

NVIDIA-Certified Storage partners DDN, Dell Technologies, Hewlett Packard Enterprise, Hitachi Vantara, IBM, NetApp, Nutanix, Pure Storage, VAST Data and WEKA are introducing products and solutions built on the NVIDIA AI Data Platform, which includes NVIDIA accelerated computing, networking and software.

In addition, AIC, ASUS, Foxconn, Quanta Cloud Technology, Supermicro, Wistron and other original design manufacturers (ODMs) are developing new storage and server hardware platforms that support the NVIDIA reference design. These platforms feature NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, NVIDIA BlueField DPUs and NVIDIA Spectrum-X Ethernet networking, and are optimized to run NVIDIA AI Enterprise software.

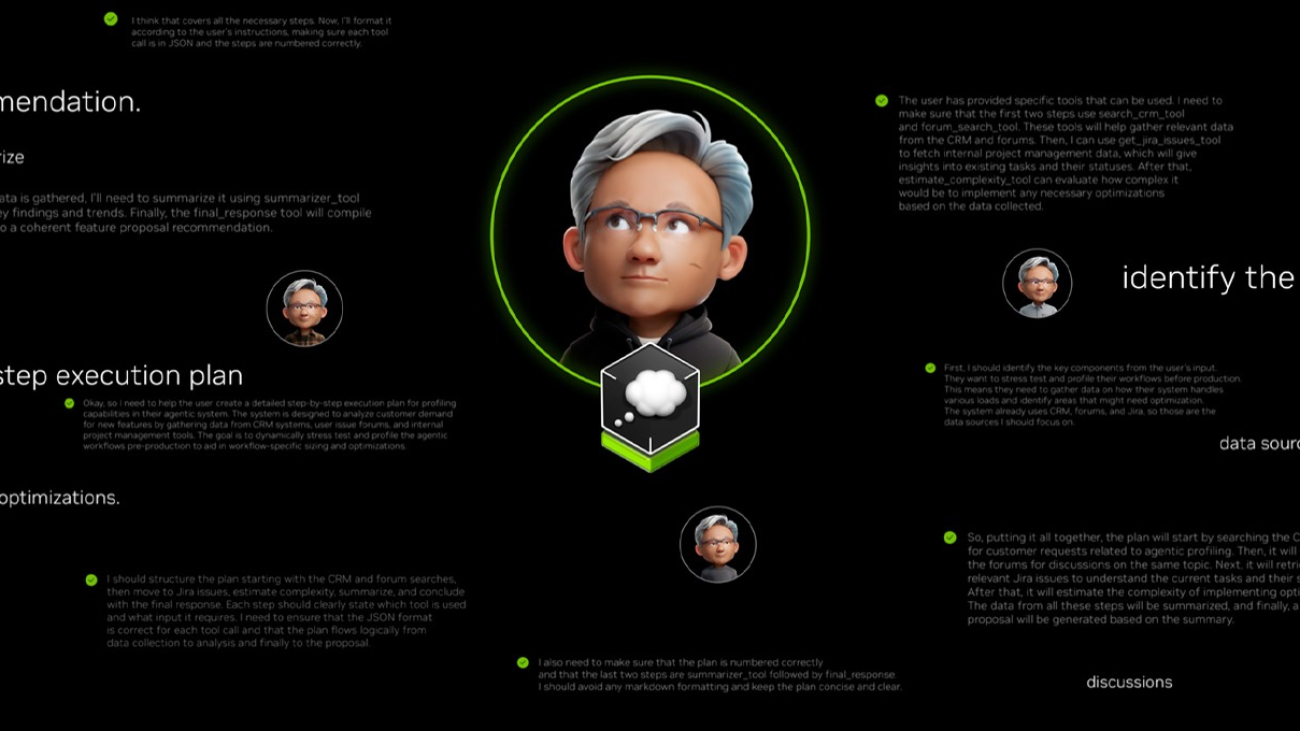

Such integrations allow enterprises across industries to quickly deploy storage and data platforms that scan, index, classify and retrieve large stores of private and public documents in real time. This augments AI agents as they reason and plan to solve complex, multistep problems.

Building agentic AI infrastructure with these new AI Data Platform-based solutions can help enterprises turn data into actionable knowledge using retrieval-augmented generation (RAG) software, including NVIDIA NeMo Retriever microservices and the AI-Q NVIDIA Blueprint.

Storage systems built with the NVIDIA AI Data Platform reference design turn data into knowledge, boosting agentic AI accuracy across many use cases. This can help AI agents and customer service representatives provide quicker, more accurate responses.

With more access to data, agents can also generate interactive summaries of complex documents — and even videos — for researchers of all kinds. Plus, they can assist cybersecurity teams in keeping software secure.

Leading Storage Providers Showcase AI Data Platform to Power Agentic AI

Storage system leaders play a critical role in providing the AI infrastructure that runs AI agents.

Embedding NVIDIA GPUs, networking and NIM microservices closer to storage enhances AI queries by bringing compute closer to critical content. Storage providers can integrate their document-security and access-control expertise into content-indexing and retrieval processes, improving security and data privacy compliance for AI inference.
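To make the access-control point concrete, here is a minimal sketch of permission-aware retrieval, in which documents a user may not read are dropped before ranking. It is an illustration only, not the NeMo Retriever API or any storage vendor's implementation. The `Document`, `AclAwareIndex`, `allowed_groups` and `tokenize` names are hypothetical, and simple keyword overlap stands in for a real embedding model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # hypothetical ACL: groups permitted to read this document


def tokenize(text):
    """Lowercase and split on whitespace (a stand-in for a real embedding model)."""
    return set(text.lower().split())


class AclAwareIndex:
    """Toy in-memory index that enforces read permissions at query time."""

    def __init__(self):
        self._entries = []  # list of (Document, token set)

    def add(self, doc):
        # Tokenize once at indexing time so queries only compare sets.
        self._entries.append((doc, tokenize(doc.text)))

    def search(self, query, user_groups, top_k=3):
        query_tokens = tokenize(query)
        user_groups = set(user_groups)
        scored = []
        for doc, tokens in self._entries:
            # Access check comes first: unreadable documents are never scored.
            if not (doc.allowed_groups & user_groups):
                continue
            score = len(query_tokens & tokens)
            if score > 0:
                scored.append((score, doc.doc_id))
        # Highest overlap first; ties broken by document ID for stable output.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [doc_id for _, doc_id in scored[:top_k]]


index = AclAwareIndex()
index.add(Document("hr-001", "salary bands for engineering staff", frozenset({"hr"})))
index.add(Document("kb-042", "engineering onboarding guide for new staff",
                   frozenset({"hr", "engineering"})))

print(index.search("engineering staff", {"engineering"}))  # only kb-042 is readable
print(index.search("engineering staff salary", {"hr"}))    # both readable, hr-001 ranks first
```

Filtering before scoring, rather than after, means restricted content never reaches the ranking step or the model's context, which is the property a storage-side access-control integration would aim to preserve.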

Data platform leaders such as IBM, NetApp and VAST Data are using the NVIDIA reference design to scale their AI technologies.

IBM Fusion, a hybrid cloud platform for running virtual machines, Kubernetes and AI workloads on Red Hat OpenShift, offers content-aware storage services that unlock the meaning of unstructured enterprise data, enhancing inferencing so AI assistants and agents can deliver better, more relevant answers. Content-aware storage enables faster time to insights for AI applications using RAG when combined with NVIDIA GPUs, NVIDIA networking, the AI-Q NVIDIA Blueprint and NVIDIA NeMo Retriever microservices — all part of the NVIDIA AI Data Platform.

NetApp is advancing enterprise storage for agentic AI with the NetApp AIPod solution built with the NVIDIA reference design. NetApp incorporates NVIDIA GPUs in data compute nodes to run NVIDIA NeMo Retriever microservices and connects these nodes to scalable storage with NVIDIA networking.

VAST Data is embedding NVIDIA AI-Q with the VAST Data Platform to deliver a unified, AI-native infrastructure for building and scaling intelligent multi-agent systems. With high-speed data access, enterprise-grade security and continuous learning loops, organizations can now operationalize agentic AI systems that drive smarter decisions, automate complex workflows and unlock new levels of productivity.

ODMs Innovate on AI Data Platform Hardware

Drawing on their extensive experience in server and storage design and manufacturing, ODMs are working with storage system leaders to bring innovative AI Data Platform hardware to enterprises more quickly.

ODMs provide the chassis design, GPU integration, cooling innovation and storage media connections needed to build AI Data Platform servers that are reliable, compact, energy efficient and affordable.

Manufacturers based or colocated in Taiwan account for a high percentage of the ODM industry’s market share, making the region a crucial hub for the hardware that runs scalable agentic AI, inference and AI reasoning.

AIC, based in Taoyuan City, Taiwan, is building flash storage servers, powered by NVIDIA BlueField DPUs, that enable higher throughput and greater power efficiency than traditional storage designs. These servers are deployed in many AI Data Platform-based designs.

ASUS partnered with WEKA and IBM to showcase a next-generation unified storage system for AI and high-performance computing workloads, addressing a broad spectrum of storage needs. The RS501A-E12-RS12U, a WEKA-certified software-defined storage solution, overcomes traditional hardware limitations to deliver exceptional flexibility — supporting file, object and block storage, as well as all-flash, tiering and backup capabilities.

Foxconn, based in New Taipei City, builds many of the manufacturing industry’s accelerated servers and storage platforms used for AI Data Platform solutions. Its subsidiary Ingrasys offers NVIDIA-accelerated GPU servers that support the AI Data Platform.

Supermicro is using the reference design to build its intelligent all-flash storage arrays powered by the NVIDIA Grace CPU Superchip or BlueField-3 DPU. The Supermicro Petascale JBOF and Petascale All-Flash Array Storage Server deliver high performance and power efficiency when running software from software-defined storage vendors, and they support AI Data Platform solutions.

Quanta Cloud Technology, also based in Taiwan, is designing and building accelerated server and storage appliances that include NVIDIA GPUs and networking. They’re well-suited to run NVIDIA AI Enterprise software and support AI Data Platform solutions.

Taipei-based Wistron and Wiwynn offer innovative hardware designs compatible with the AI Data Platform, incorporating NVIDIA GPUs, NVIDIA BlueField DPUs and NVIDIA Ethernet SuperNICs for accelerated compute and data movement.

Learn more about the latest agentic AI advancements at NVIDIA GTC Taipei, running May 21-22 at COMPUTEX.
