OpenAI Blog

DALL·E Now Available Without Waitlist

New users can start creating straight away. Lessons learned from deployment and improvements to our safety systems make wider availability possible.

Starting today, we are removing the waitlist for the DALL·E beta so users can sign up and start using it immediately. More than 1.5M users are now actively creating over 2M images a day with DALL·E—from artists and creative directors to authors and architects—with over 100K users sharing their creations and feedback in our Discord community.

Responsibly scaling a system as powerful and complex as DALL·E—while learning about all the creative ways it can be used and misused—has required an iterative deployment approach.

Since we first previewed the DALL·E research in April, users have helped us discover new uses for DALL·E as a powerful creative tool. Artists, in particular, have provided important input on DALL·E’s features.

Their feedback inspired us to build features like Outpainting, which lets users continue an image beyond its original borders and create bigger images of any size, and collections, so users can create in all-new ways and expedite their creative processes.

Learning from real-world use has allowed us to improve our safety systems, making wider availability possible today. In the past months, we’ve made our filters more robust at rejecting attempts to generate sexual, violent, and other content that violates our content policy, and we’ve built new detection and response techniques to stop misuse.

We are currently testing a DALL·E API with several customers and are excited to soon offer it more broadly to developers and businesses so they can build apps on this powerful system.

We can’t wait to see what users from around the world create with DALL·E. Sign up today and start creating.

Our journey over the past few months includes:

- DALL·E beta is available without a waitlist, with over 1.5M users creating more than 2M images per day

- Outpainting is added to the Edit tool

- DALL·E available in beta with pricing, which enabled onboarding over 1 million users

- 1,000 users per week onboarded to research preview

- DALL·E 2 paper published and Research Preview available to 200 artists, researchers, and trusted users

- First version of GLIDE paper is published

- Diffusion models outperform GANs on ImageNet synthesis

- OpenAI announces the first DALL·E and open-sources CLIP

Introducing Whisper

We’ve trained and are open-sourcing a neural net called Whisper that approaches human-level robustness and accuracy on English speech recognition.

Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web. We show that the use of such a large and diverse dataset leads to improved robustness to accents, background noise and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English. We are open-sourcing models and inference code to serve as a foundation for building useful applications and for further research on robust speech processing.

The Whisper architecture is a simple end-to-end approach, implemented as an encoder-decoder Transformer. Input audio is split into 30-second chunks, converted into a log-Mel spectrogram, and then passed into an encoder. A decoder is trained to predict the corresponding text caption, intermixed with special tokens that direct the single model to perform tasks such as language identification, phrase-level timestamps, multilingual speech transcription, and to-English speech translation.
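The first preprocessing step described above, cutting the input into 30-second chunks, can be sketched in a few lines. This is a minimal illustration and not the released Whisper code: the 16 kHz sampling rate, the zero-padding of the final chunk, and the function name `split_into_chunks` are assumptions made for the example. The log-Mel spectrogram step is left out.

```python
SAMPLE_RATE = 16_000   # assumed sampling rate in Hz (not stated in the post)
CHUNK_SECONDS = 30     # chunk length given in the post


def split_into_chunks(samples, sample_rate=SAMPLE_RATE, chunk_seconds=CHUNK_SECONDS):
    """Split a sequence of audio samples into fixed-length chunks.

    The final chunk is zero-padded so that every chunk has the same length
    (padding is an assumption of this sketch).
    """
    chunk_len = sample_rate * chunk_seconds
    chunks = []
    for start in range(0, len(samples), chunk_len):
        chunk = list(samples[start:start + chunk_len])
        chunk.extend([0.0] * (chunk_len - len(chunk)))  # pad a short last chunk
        chunks.append(chunk)
    return chunks


# 70 seconds of silence becomes three 30-second chunks, the last one padded.
audio = [0.0] * (70 * SAMPLE_RATE)
chunks = split_into_chunks(audio)
print(len(chunks), len(chunks[0]) // SAMPLE_RATE)
```

Each chunk would then be converted to a spectrogram and passed to the encoder independently.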

Other existing approaches frequently use smaller, more closely paired audio-text training datasets, or use broad but unsupervised audio pretraining. Because Whisper was trained on a large and diverse dataset and was not fine-tuned to any specific one, it does not beat models that specialize in LibriSpeech performance, a famously competitive benchmark in speech recognition. However, when we measure Whisper’s zero-shot performance across many diverse datasets we find it is much more robust and makes 50% fewer errors than those models.
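To make a claim like "50% fewer errors" concrete, here is a small sketch of word error rate (WER), the usual error measure in speech recognition, together with a relative error reduction. The post does not say exactly how errors were counted, so treating them as WER is an assumption, and the function names and example sentences are invented for illustration.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # (one fewer than the current row) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(baseline_wer, new_wer):
    """Fraction of the baseline's errors that the new system avoids."""
    return (baseline_wer - new_wer) / baseline_wer


reference = "the cat sat on the mat"
print(word_error_rate(reference, "the cat sat on mat"))  # one dropped word out of six
print(relative_error_reduction(0.20, 0.10))              # halving WER is a 50% reduction
```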

About a third of Whisper’s audio dataset is non-English, and it is alternately given the task of transcribing in the original language or translating to English. We find this approach is particularly effective at learning speech-to-text translation and outperforms the supervised SOTA on CoVoST2 to English translation zero-shot.

We hope Whisper’s high accuracy and ease of use will allow developers to add voice interfaces to a much wider set of applications. Check out the paper, model card, and code to learn more details and to try out Whisper.

DALL·E: Introducing Outpainting

Extend creativity and tell a bigger story with DALL·E images of any size.

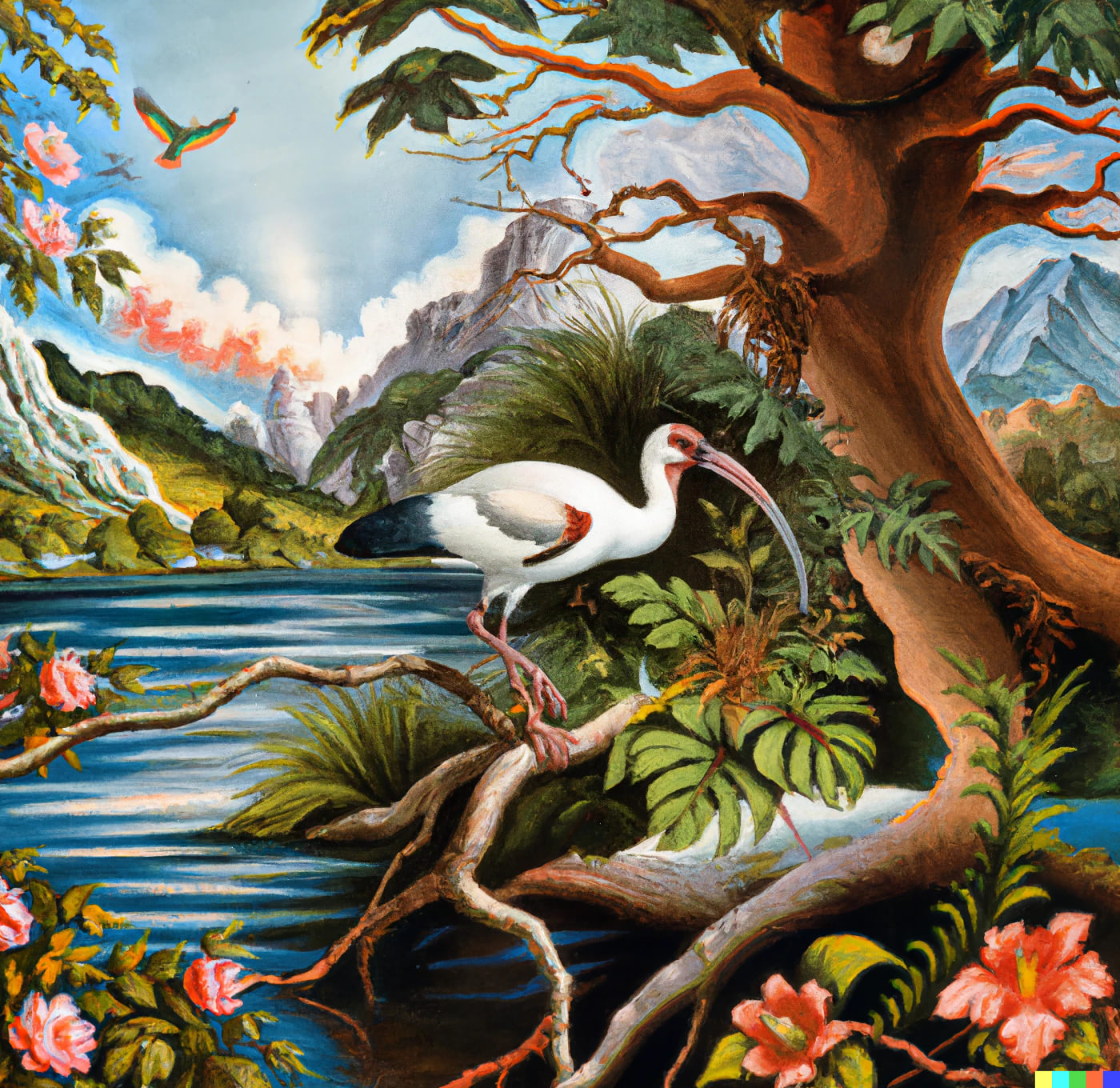

Today we’re introducing Outpainting, a new feature which helps users extend their creativity by continuing an image beyond its original borders — adding visual elements in the same style, or taking a story in new directions — simply by using a natural language description.

Outpainting: August Kamp

DALL·E’s Edit feature already enables changes within a generated or uploaded image — a capability known as Inpainting. Now, with Outpainting, users can extend the original image, creating large-scale images in any aspect ratio. Outpainting takes into account the image’s existing visual elements — including shadows, reflections, and textures — to maintain the context of the original image.
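One way to picture the input to an extension step is an enlarged canvas plus a mask marking which pixels are new. The sketch below illustrates only that framing. It is not a description of how DALL·E implements Outpainting, and the function name `extend_canvas`, the padding parameters, and the list-of-rows image format are all assumptions made for the example.

```python
def extend_canvas(image, pad_left, pad_right, pad_top, pad_bottom, fill=0):
    """Place `image` (a list of pixel rows) on a larger canvas.

    Returns (canvas, mask). The mask is True for new, still-empty pixels that
    a generative model would be asked to fill, and False for original pixels.
    """
    width = len(image[0]) if image else 0
    new_width = pad_left + width + pad_right
    canvas, mask = [], []
    for _ in range(pad_top):
        canvas.append([fill] * new_width)
        mask.append([True] * new_width)
    for row in image:
        canvas.append([fill] * pad_left + list(row) + [fill] * pad_right)
        mask.append([True] * pad_left + [False] * width + [True] * pad_right)
    for _ in range(pad_bottom):
        canvas.append([fill] * new_width)
        mask.append([True] * new_width)
    return canvas, mask


# Extend a 2x2 image to the right and downward, changing its aspect ratio.
canvas, mask = extend_canvas([[1, 2], [3, 4]], pad_left=0, pad_right=3, pad_top=0, pad_bottom=1)
for row in canvas:
    print(row)
```

Because the original pixels stay in place on the canvas, a model filling the masked region can condition on them, which is the kind of context the paragraph above describes.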

More than one million people are using DALL·E, the AI system that generates original images and artwork from a natural language description, as a creative tool today. Artists have already created remarkable images with the new Outpainting feature, and helped us better understand its capabilities in the process.

Outpainting is now available to all DALL·E users on desktop. To discover new realms of creativity, visit labs.openai.com or join the waitlist.

Our approach to alignment research

Our approach to aligning AGI is empirical and iterative. We are improving our AI systems’ ability to learn from human feedback and to assist humans at evaluating AI. Our goal is to build a sufficiently aligned AI system that can help us solve all other alignment problems.

Introduction

Our alignment research aims to make artificial general intelligence (AGI) aligned with human values and follow human intent. We take an iterative, empirical approach: by attempting to align highly capable AI systems, we can learn what works and what doesn’t, thus refining our ability to make AI systems safer and more aligned. Using scientific experiments, we study how alignment techniques scale and where they will break.

We tackle alignment problems in our most capable AI systems as well as alignment problems that we expect to encounter on our path to AGI. Our main goal is to push current alignment ideas as far as possible, and to understand and document precisely how they can succeed or why they will fail. We believe that even without fundamentally new alignment ideas, we can likely build sufficiently aligned AI systems to substantially advance alignment research itself.

Unaligned AGI could pose substantial risks to humanity and solving the AGI alignment problem could be so difficult that it will require all of humanity to work together. Therefore we are committed to openly sharing our alignment research when it’s safe to do so: We want to be transparent about how well our alignment techniques actually work in practice and we want every AGI developer to use the world’s best alignment techniques.

At a high level, our approach to alignment research focuses on engineering a scalable training signal for very smart AI systems that is aligned with human intent. It has three main pillars:

- Training AI systems using human feedback

- Training AI systems to assist human evaluation

- Training AI systems to do alignment research

Aligning AI systems with human values also poses a range of other significant sociotechnical challenges, such as deciding to whom these systems should be aligned. Solving these problems is important to achieving our mission, but we do not discuss them in this post.

Training AI systems using human feedback

RL from human feedback is our main technique for aligning our deployed language models today. We train a class of models called InstructGPT derived from pretrained language models such as GPT-3. These models are trained to follow human intent: both explicit intent given by an instruction as well as implicit intent such as truthfulness, fairness, and safety.
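In RL from human feedback, a reward model is commonly trained on pairs of responses that a human has ranked. The post does not spell out the objective, so the sketch below shows one widely used pairwise formulation, the negative log-sigmoid of the reward margin. The function name and the example reward values are hypothetical.

```python
import math


def preference_loss(reward_preferred, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_preferred - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response well above the rejected one, and large when the order is wrong.
    """
    margin = reward_preferred - reward_rejected
    # Numerically stable evaluation of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward scores for two responses to the same prompt.
print(preference_loss(2.0, 0.0))  # model agrees with the human ranking
print(preference_loss(0.0, 2.0))  # model disagrees, so the loss is larger
```

A policy can then be optimized with reinforcement learning against the learned reward, which is the step the "RL" in the name refers to.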

Our results show that there is a lot of low-hanging fruit on alignment-focused fine-tuning right now: InstructGPT is preferred by humans over a 100x larger pretrained model, while its fine-tuning costs <2% of GPT-3’s pretraining compute and about 20,000 hours of human feedback. We hope that our work inspires others in the industry to increase their investment in alignment of large language models and that it raises the bar on users’ expectations about the safety of deployed models.

Our natural language API is a very useful environment for our alignment research: It provides us with a rich feedback loop about how well our alignment techniques actually work in the real world, grounded in a very diverse set of tasks that our customers are willing to pay money for. On average, our customers already prefer to use InstructGPT over our pretrained models.

Yet today’s versions of InstructGPT are quite far from fully aligned: they sometimes fail to follow simple instructions, aren’t always truthful, don’t reliably refuse harmful tasks, and sometimes give biased or toxic responses. Some customers find InstructGPT’s responses significantly less creative than the pretrained models’, something we hadn’t realized from running InstructGPT on publicly available benchmarks. We are also working on developing a more detailed scientific understanding of RL from human feedback and how to improve the quality of human feedback.

Aligning our API is much easier than aligning AGI since most tasks on our API aren’t very hard for humans to supervise and our deployed language models aren’t smarter than humans. We don’t expect RL from human feedback to be sufficient to align AGI, but it is a core building block for the scalable alignment proposals that we’re most excited about, and so it’s valuable to perfect this methodology.

Training models to assist human evaluation

RL from human feedback has a fundamental limitation: it assumes that humans can accurately evaluate the tasks our AI systems are doing. Today humans are pretty good at this, but as models become more capable, they will be able to do tasks that are much harder for humans to evaluate (e.g. finding all the flaws in a large codebase or a scientific paper). Our models might learn to tell our human evaluators what they want to hear instead of telling them the truth. In order to scale alignment, we want to use techniques like recursive reward modeling (RRM), debate, and iterated amplification.

Currently our main direction is based on RRM: we train models that can assist humans at evaluating our models on tasks that are too difficult for humans to evaluate directly. For example:

- We trained a model to summarize books. Evaluating book summaries takes a long time for humans if they are unfamiliar with the book, but our model can assist human evaluation by writing chapter summaries.

- We trained a model to assist humans at evaluating factual accuracy by browsing the web and providing quotes and links. On simple questions, this model’s outputs are already preferred to responses written by humans.

- We trained a model to write critical comments on its own outputs: On a query-based summarization task, assistance with critical comments increases the flaws humans find in model outputs by 50% on average. This holds even if we ask humans to write plausible-looking but incorrect summaries.

- We are creating a set of coding tasks selected to be very difficult to evaluate reliably for unassisted humans. We hope to release this data set soon.

Our alignment techniques need to work even if our AI systems are proposing very creative solutions (like AlphaGo’s move 37), so we are especially interested in training models to help humans distinguish correct solutions from misleading or deceptive ones. We believe the best way to learn as much as possible about how to make AI-assisted evaluation work in practice is to build AI assistants.

Training AI systems to do alignment research

There is currently no known indefinitely scalable solution to the alignment problem. As AI progress continues, we expect to encounter a number of new alignment problems that we don’t observe yet in current systems. Some of these problems we anticipate now and some of them will be entirely new.

We believe that finding an indefinitely scalable solution is likely very difficult. Instead, we aim for a more pragmatic approach: building and aligning a system that can make faster and better alignment research progress than humans can.

As we make progress on this, our AI systems can take over more and more of our alignment work and ultimately conceive, implement, study, and develop better alignment techniques than we have now. They will work together with humans to ensure that their own successors are more aligned with humans.

We believe that evaluating alignment research is substantially easier than producing it, especially when provided with evaluation assistance. Therefore human researchers will focus more and more of their effort on reviewing alignment research done by AI systems instead of generating this research by themselves. Our goal is to train models to be so aligned that we can off-load almost all of the cognitive labor required for alignment research.

Importantly, we only need “narrower” AI systems that have human-level capabilities in the relevant domains to do as well as humans on alignment research. We expect these AI systems are easier to align than general-purpose systems or systems much smarter than humans.

Language models are particularly well-suited for automating alignment research because they come “preloaded” with a lot of knowledge and information about human values from reading the internet. Out of the box, they aren’t independent agents and thus don’t pursue their own goals in the world. To do alignment research they don’t need unrestricted access to the internet. Yet a lot of alignment research tasks can be phrased as natural language or coding tasks.

Future versions of WebGPT, InstructGPT, and Codex can provide a foundation as alignment research assistants, but they aren’t sufficiently capable yet. While we don’t know when our models will be capable enough to meaningfully contribute to alignment research, we think it’s important to get started ahead of time. Once we train a model that could be useful, we plan to make it accessible to the external alignment research community.

Limitations

We’re very excited about this approach towards aligning AGI, but we expect that it needs to be adapted and improved as we learn more about how AI technology develops. Our approach also has a number of important limitations:

- The path laid out here underemphasizes the importance of robustness and interpretability research, two areas OpenAI is currently underinvested in. If this fits your profile, please apply for our research scientist positions!

- Using AI assistance for evaluation has the potential to scale up or amplify even subtle inconsistencies, biases, or vulnerabilities present in the AI assistant.

- Aligning AGI likely involves solving very different problems than aligning today’s AI systems. We expect the transition to be somewhat continuous, but if there are major discontinuities or paradigm shifts, then most lessons learned from aligning models like InstructGPT might not be directly useful.

- The hardest parts of the alignment problem might not be related to engineering a scalable and aligned training signal for our AI systems. Even if this is true, such a training signal will be necessary.

- It might not be fundamentally easier to align models that can meaningfully accelerate alignment research than it is to align AGI. In other words, the least capable models that can help with alignment research might already be too dangerous if not properly aligned. If this is true, we won’t get much help from our own systems for solving alignment problems.

We’re looking to hire more talented people for this line of research! If this interests you, we’re hiring Research Engineers and Research Scientists!
