Posted by Daniel Freedman and Ehud Rivlin, Research Scientists, Google Health

Colorectal cancer (CRC) is a global health problem, resulting in an estimated 900K deaths per year worldwide, and is the second deadliest cancer in the United States. While deadly, CRC can be prevented by removing small precancerous lesions in the colon, called polyps, before they become cancerous. In fact, it is estimated that a 1% increase in the adenoma detection rate (ADR, defined as the fraction of procedures in which a physician discovers at least one polyp) can lead to a 6% decrease in the rate of interval CRCs (a CRC that is diagnosed within 60 months of a negative colonoscopy).

Colonoscopy is considered the gold standard procedure for the detection and removal of polyps. Unfortunately, the literature indicates that endoscopists miss on average 22% to 28% of polyps during colonoscopies; furthermore, 20% to 24% of polyps that have the potential to become cancerous (adenomas) are missed. Two major factors that may cause an endoscopist to miss a polyp are (1) the polyp appears in the field of view, but the endoscopist misses it, perhaps due to its small size or flat shape; and (2) the polyp does not appear in the field of view, as the endoscopist has not fully covered the relevant area during the procedure.

In “Detecting Deficient Coverage in Colonoscopies”, we introduce the Colonoscopy Coverage Deficiency via Depth algorithm, or C2D2, a machine learning-based approach to improving colonoscopy coverage. The C2D2 algorithm performs a local 3D reconstruction of the colon as images are captured during the procedure, and on that basis, identifies which areas of the colon were covered and which remained outside of the field of view. C2D2 can then indicate in real time whether a particular area of the colon has suffered from deficient coverage so the endoscopist can return to that area. Our work proposes a novel approach to computing coverage in real time, in which 3D reconstruction is done using a calibration-free, unsupervised learning method, and evaluates it at large scale.

The C2D2 Algorithm

When considering colon coverage, it is important to estimate the coverage fraction — what percentage of the relevant regions were covered by a complete procedure. While a retrospective analysis is useful for the physician and could provide general guidance for future procedures, it is more useful to have real-time estimation of coverage fraction, on a segment by segment basis, i.e. knowledge of what fraction of the current segment has been covered while traversing the colon. The helpfulness of such functionality is clear: during the procedure itself, a physician may be alerted to segments with deficient coverage, and can immediately return to review these areas. Higher coverage will result in a higher proportion of polyps being seen.
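The alerting idea described above can be sketched in a few lines. This is a minimal illustration, not the C2D2 implementation: the `SegmentCoverage` type, the `deficient_segments` helper, and the 0.5 threshold are all hypothetical, since the post does not say what coverage level counts as deficient. The coverage values in the usage lines are the ones reported for the three real sequences shown later in this post.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical cut-off: the post does not state what coverage counts as "deficient".
DEFICIENT_THRESHOLD = 0.5


@dataclass
class SegmentCoverage:
    """Coverage estimate for one colon segment (hypothetical container type)."""
    segment_id: int
    coverage: float  # fraction in [0, 1]: 0 = completely uncovered, 1 = completely covered

    def __post_init__(self) -> None:
        if not 0.0 <= self.coverage <= 1.0:
            raise ValueError("coverage must lie in [0, 1]")


def deficient_segments(
    segments: Iterable[SegmentCoverage],
    threshold: float = DEFICIENT_THRESHOLD,
) -> List[int]:
    """Return the ids of segments whose coverage falls below the threshold."""
    return [s.segment_id for s in segments if s.coverage < threshold]


# Coverage scores reported for the three example sequences in this post.
examples = [
    SegmentCoverage(0, 0.931),
    SegmentCoverage(1, 0.427),
    SegmentCoverage(2, 0.227),
]
print(deficient_segments(examples))  # [1, 2]
```

In a real-time setting, a check like this would presumably run each time a segment's coverage estimate is updated, so that the physician can be prompted before moving on.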

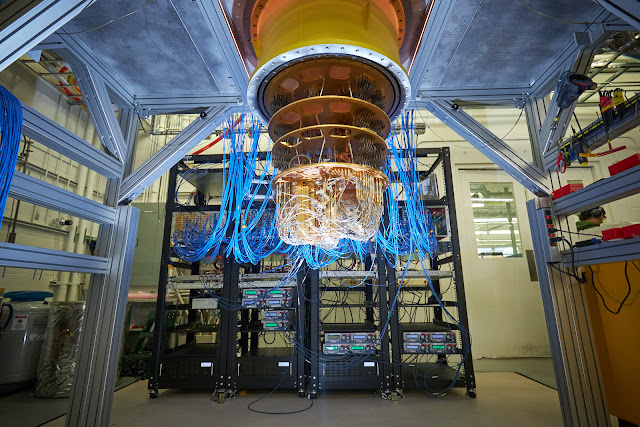

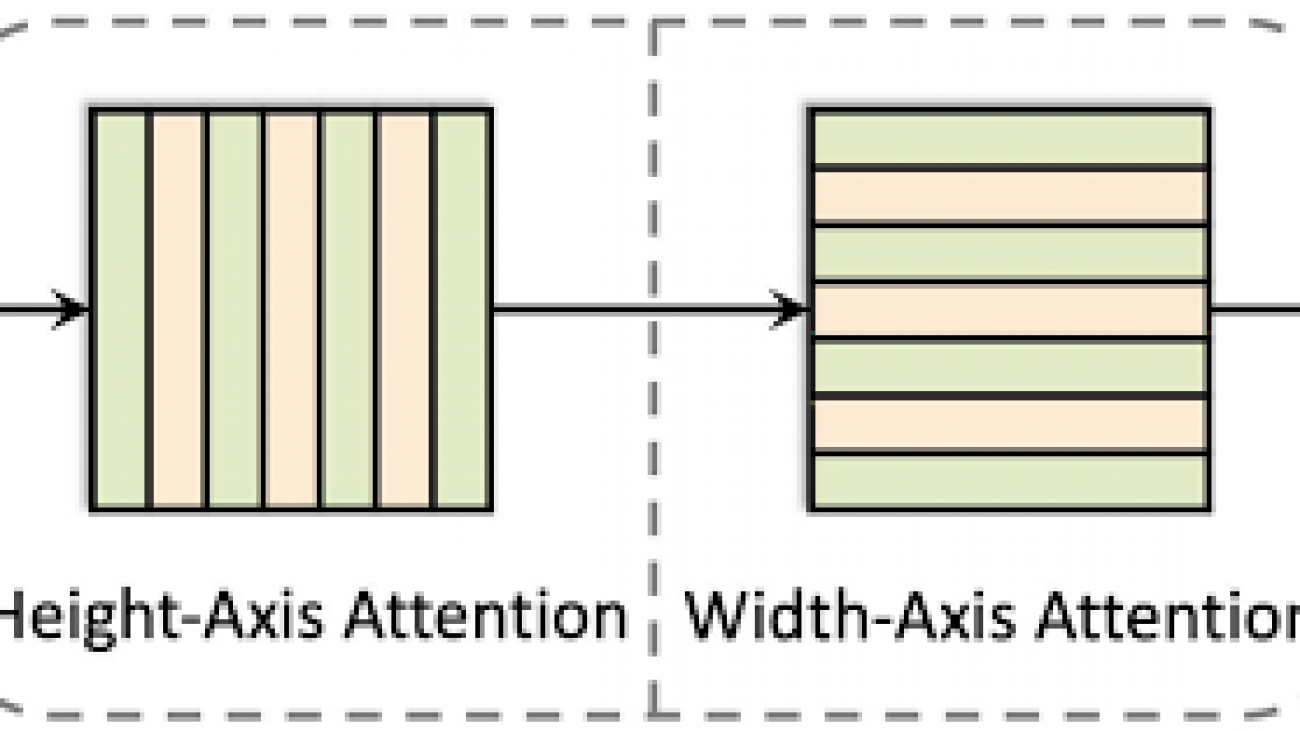

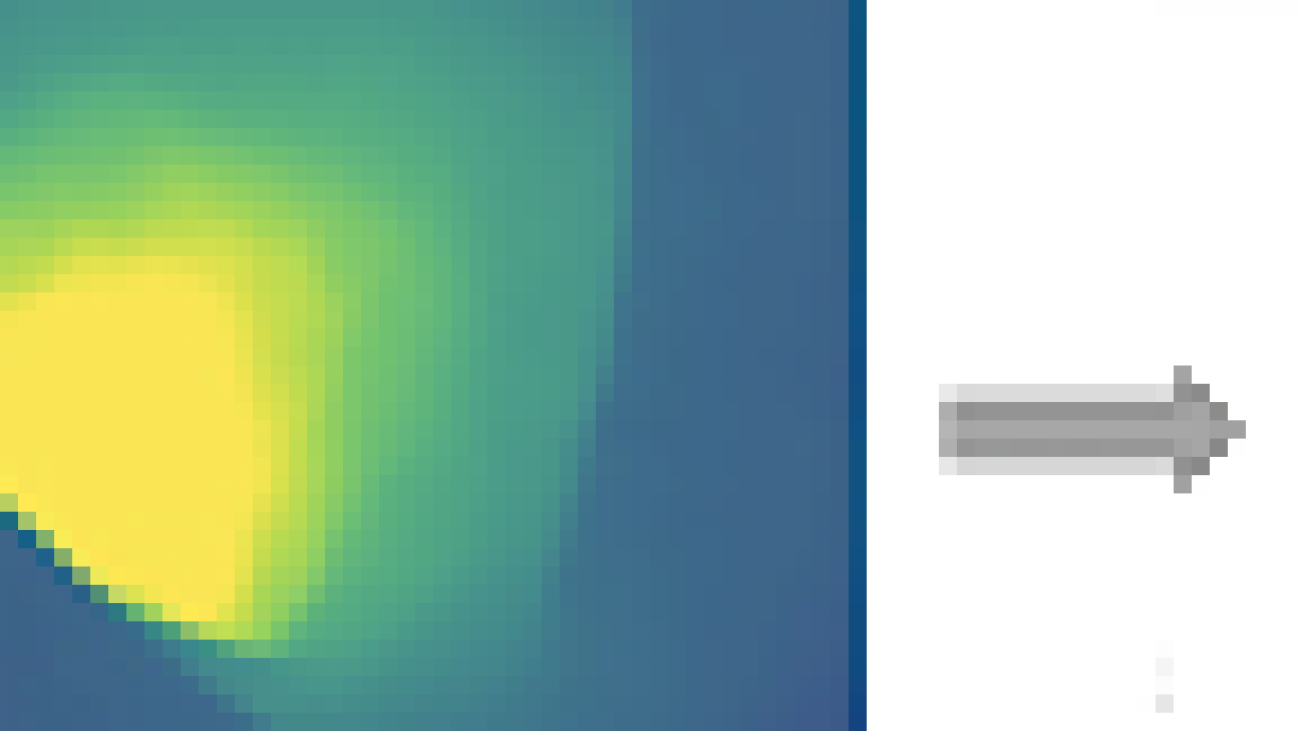

The C2D2 algorithm is designed to compute such a segment-by-segment coverage in two phases: computing depth maps for each frame of the colonoscopy video, followed by computation of coverage based on these depth maps.

C2D2 computes a depth image from a single RGB image. Then, based on the computed depth images for a video sequence, C2D2 calculates local coverage, so it can detect where the coverage has been deficient and a second look is required.

Depth map creation consists of both depth estimation as well as pose estimation — the localization of where the endoscope is in space, as well as the direction it is pointing. In addition to the detection of deficient coverage, depth and pose estimation are useful for a variety of other interesting tasks. For example, depth can be used for improved detection of flat polyps, while pose estimation can be used for relocalizing areas of the colon (including polyps) that the endoscopist wishes to revisit, and both together can be used for visualization and navigation.

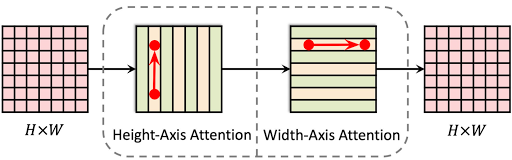

Top row: RGB image, from which the depth is computed. Bottom row: Depth image as computed by C2D2. Yellow is deeper, blue is shallower. Note that the “tunnel” structure is captured, as well as the haustral ridges.

In order to compute coverage fractions from these depth maps, we trained C2D2 on two sources of data: synthetic sequences and real sequences. We generated the synthetic videos using a graphical model of a colon. For each synthetic video, ground truth coverage is available in the form of a number between 0 (completely uncovered) and 1 (completely covered). For real sequences, we analyzed de-identified colonoscopy videos, for which ground truth coverage is unavailable.

Performance on Synthetic Videos

When using synthetic videos, the availability of ground truth coverage enables the direct measurement of C2D2’s performance. We quantify this using the mean absolute error (MAE), which indicates how much the algorithm’s prediction differs, on average, from the ground truth. We find that C2D2’s MAE = 0.075, meaning that, on average, its prediction is within 0.075 (7.5 percentage points) of the ground truth. By contrast, a group of physicians given the same task achieved MAE = 0.177, i.e., within 17.7 percentage points of the ground truth. Thus, C2D2’s error on synthetic sequences was roughly 2.4 times lower than that of the physicians.
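For readers who want the metric spelled out, here is a minimal sketch of the MAE computation and of the ratio between the two reported errors. The `mean_absolute_error` helper and the three predicted and ground truth values are made up for illustration; only 0.075 and 0.177 come from the results above.

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], ground_truth: Sequence[float]) -> float:
    """Average of |prediction - truth| over all sequences."""
    if len(predicted) != len(ground_truth):
        raise ValueError("inputs must have the same length")
    if not predicted:
        raise ValueError("inputs must be non-empty")
    return sum(abs(p - g) for p, g in zip(predicted, ground_truth)) / len(predicted)


# Made-up coverage values on the 0-to-1 scale, purely illustrative.
predicted = [0.9, 0.5, 0.2]
ground_truth = [1.0, 0.4, 0.3]
print(round(mean_absolute_error(predicted, ground_truth), 3))  # 0.1

# Ratio of the reported errors: physicians (0.177) versus C2D2 (0.075).
print(round(0.177 / 0.075, 2))  # 2.36
```

Because coverage is a fraction between 0 and 1, an MAE of 0.075 corresponds to an average miss of 7.5 percentage points, and the ratio 2.36 is the source of the "roughly 2.4 times" comparison.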

Performance on Real Videos

Of course, what matters most is performance on videos of real colonoscopies. The challenge in this case is the absence of ground truth labelling: we don’t know what the actual coverage is. Additionally, one cannot use labels provided by experts directly as they are not always accurate, due to the challenges described earlier. However, C2D2 can still perform inference on real colonoscopy videos. Indeed, the learning pipeline is designed to perform equally well on synthetic and real colonoscopy videos.

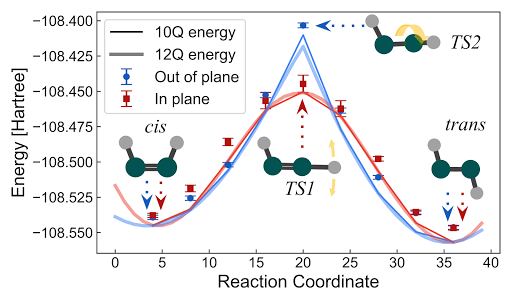

To verify performance on real sequences, we used a variant of a technique common in the generative modelling literature, which involves providing video sequences to human experts along with C2D2’s coverage scores for those sequences. We then asked the experts to assess whether C2D2’s score was correct. The idea is that while it is difficult for experts to assign a score directly, the task of verifying a given score is considerably easier. (This is similar to the fact that verifying a proposed solution to an algorithmic problem is generally much easier than computing that solution.) Using this methodology, experts verified C2D2’s score 93% of the time. In a more qualitative sense, C2D2’s output also seems to pass the “eyeball test”, as the figure below shows.

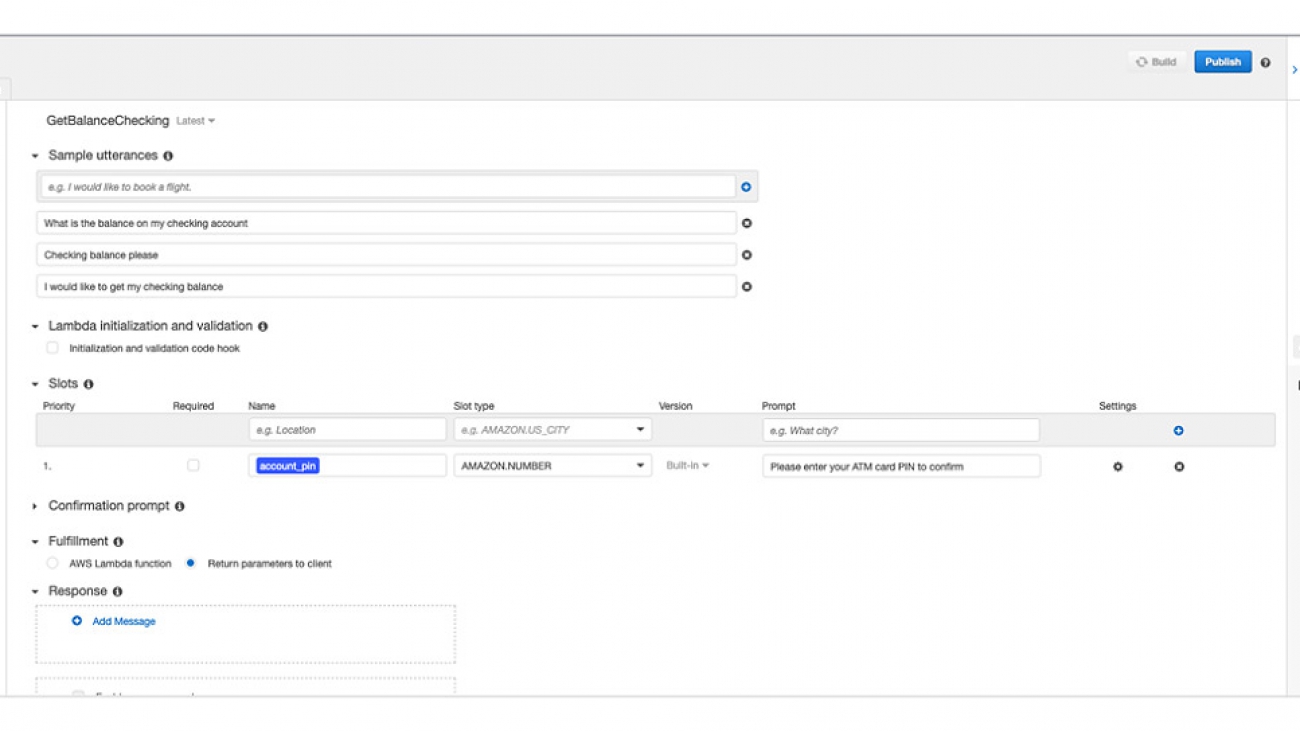

Coverage on real colonoscopy sequences. Top row: Frames from a well covered sequence, in which the entire “tunnel” down the lumen may be seen; C2D2 coverage = 0.931. Middle row: A partially covered sequence, in which the bottom may be seen but the top is not as visible; C2D2 coverage = 0.427. Bottom row: A poorly covered sequence, in which much of what is seen is the wall; C2D2 coverage = 0.227.

Next steps

By alerting physicians to missed regions of the colon wall, C2D2 promises to lead to the discovery of more adenomas, thereby increasing the ADR and concomitantly decreasing the rate of interval CRC. This would be of tremendous benefit to patients.

In addition to this work that addresses colonoscopy coverage, we are concurrently conducting research to improve polyp detection by combining C2D2 with an automatic, real-time polyp detection algorithm. This study adds to the mounting evidence that physicians may use machine learning methods to augment their efforts, especially during procedures, to improve the quality of care for patients.

Acknowledgements

This research was conducted by Daniel Freedman, Yochai Blau, Liran Katzir, Amit Aides, Ilan Shimshoni, Danny Veikherman, Tomer Golany, Ariel Gordon, Greg Corrado, Yossi Matias, and Ehud Rivlin, with support from Verily. We would like to thank all of our team members and collaborators who worked on this project with us, including Nadav Rabani, Chen Barshai, Nia Stoykova, David Ben-Shimol, Jesse Lachter, Ori Segol, 3D-Systems, and many others. We’d also like to thank Yossi Matias for support and guidance. The research was conducted by teams from Google Health and Google Research, Israel.
