Amazon Translate is a neural machine translation service that delivers fast, high-quality, and affordable language translation. Neural machine translation is a form of language translation automation that uses deep learning models to deliver more accurate and natural-sounding translation than traditional statistical and rule-based translation algorithms. The translation service is trained on a wide variety of content across different use cases and domains to perform well on many kinds of content.

The Amazon Translate asynchronous batch processing capability enables organizations to translate a large collection of text or HTML documents from one language to another with a single API call. The ability to process data at scale is becoming important to organizations across all industries. In this post, we demonstrate how to build a notification mechanism that messages you when a batch translation job is complete. You can extend the same mechanism for end-to-end automation, for example by triggering other AWS Lambda functions or integrating with Amazon Simple Queue Service (Amazon SQS) for postprocessing steps.

Solution overview

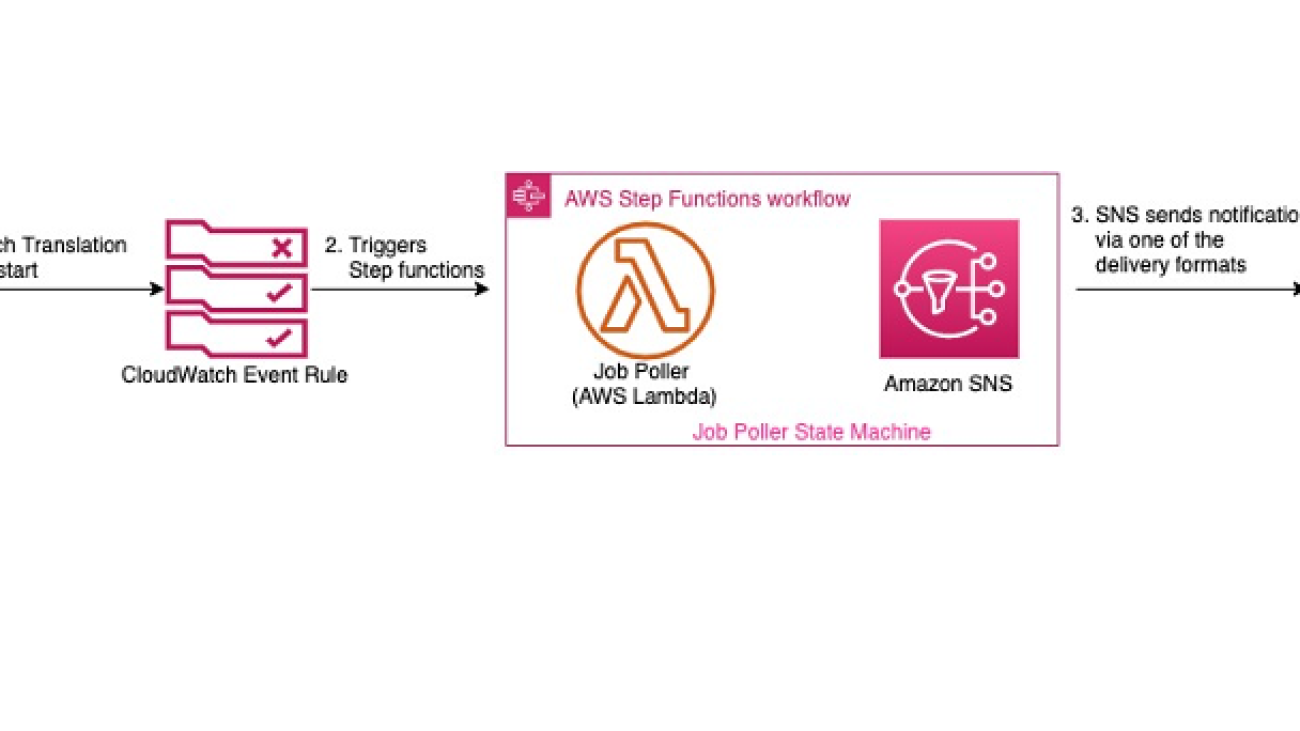

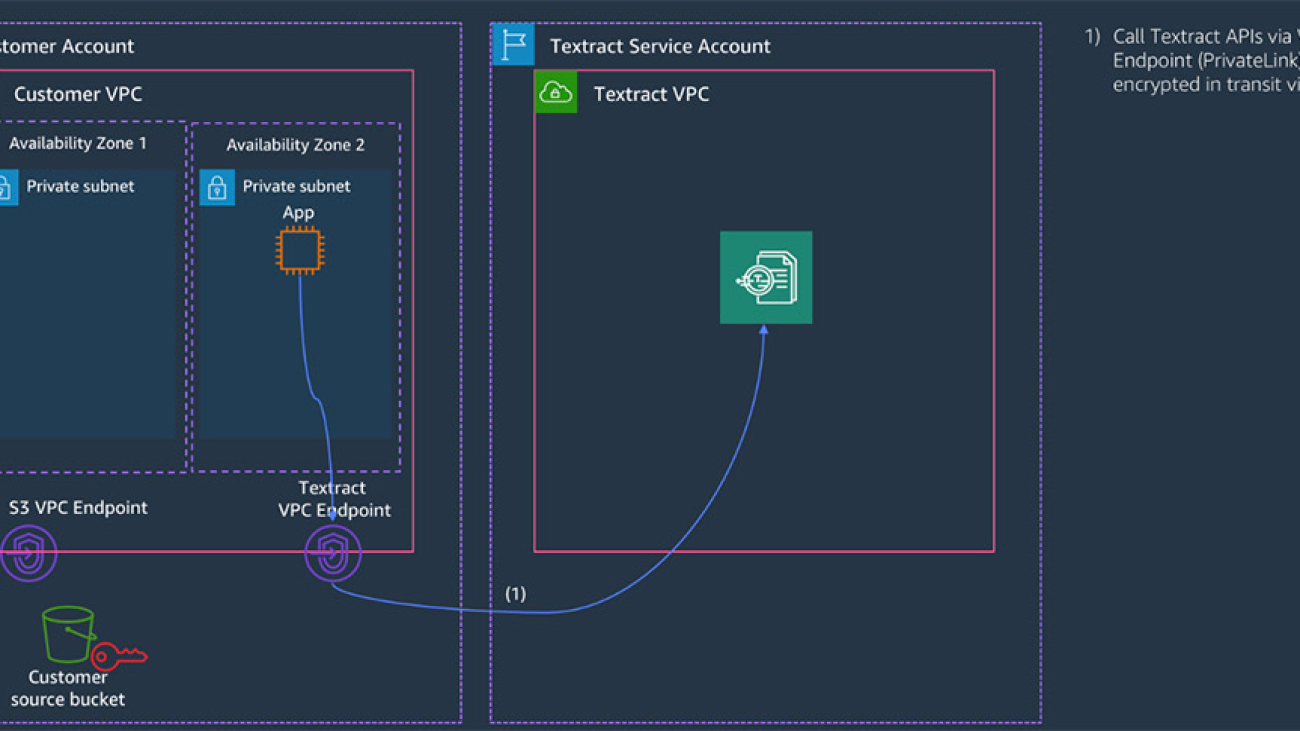

The following diagram illustrates the high-level architecture of the solution.

The solution contains the following steps:

- A user starts a batch translation job.

- An Amazon CloudWatch Events rule picks up the StartTextTranslationJob API call and starts an AWS Step Functions state machine.

- The state machine invokes the Job Poller AWS Lambda function to check the job status, waiting 60 seconds between checks until the job reaches a final state.

- When the Amazon Translate batch job finishes (whether it completes, fails, or is stopped), an email notification is sent through an Amazon Simple Notification Service (Amazon SNS) topic.

To implement this solution, you must create the following:

- An SNS topic

- An AWS Identity and Access Management (IAM) role

- A Lambda function

- A Step Functions state machine

- A CloudWatch Events rule

Creating an SNS topic

To create an SNS topic, complete the following steps:

- On the Amazon SNS console, create a new topic.

- For Topic name, enter a name (for example, TranslateJobNotificationTopic).

- Choose Create topic.

You can now see the TranslateJobNotificationTopic page. The Details section displays the topic's name, ARN, display name (optional), and the AWS account ID of the topic owner.

- In the Details section, copy the topic ARN to the clipboard (for example, arn:aws:sns:us-east-1:123456789012:TranslateJobNotificationTopic).

- On the left navigation pane, choose Subscriptions.

- Choose Create subscription.

- On the Create subscription page, for Topic ARN, enter the ARN of the topic you created earlier (for example, arn:aws:sns:us-east-1:123456789012:TranslateJobNotificationTopic).

- For Protocol, select Email.

- For Endpoint, enter an email address that can receive notifications.

- Choose Create subscription.

For email subscriptions, you must confirm the subscription before it receives notifications: choose the Confirm subscription link in the email that Amazon SNS sends to the endpoint address.
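If you prefer to script this step, the following is a minimal sketch using the AWS SDK for Python (Boto3), assuming credentials and a default Region are already configured. The helper name `create_notification_topic` and the email address are hypothetical; the client is passed in so the logic can be exercised without calling AWS. The subscription still has to be confirmed from the email, as in the console flow.

```python
def create_notification_topic(sns_client, topic_name, email_address):
    """Create (or reuse) an SNS topic and subscribe an email address to it.

    Returns the topic ARN. create_topic is idempotent: calling it with the
    name of a topic you already own returns that topic's ARN.
    """
    topic_arn = sns_client.create_topic(Name=topic_name)["TopicArn"]
    # The email subscription stays pending until the recipient chooses
    # the Confirm subscription link in the message SNS sends.
    sns_client.subscribe(
        TopicArn=topic_arn,
        Protocol="email",
        Endpoint=email_address,
    )
    return topic_arn


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   create_notification_topic(boto3.client("sns"),
#                             "TranslateJobNotificationTopic",
#                             "you@example.com")  # hypothetical address
```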

Creating an IAM role for the Lambda function

To create an IAM role, complete the following steps. For more information, see Creating an IAM Role.

- On the IAM console, choose Policies.

- Choose Create Policy.

- On the JSON tab, enter the following IAM policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": "translate:DescribeTextTranslationJob",
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:us-east-1:123456789012:log-group:/aws/lambda/TranslateJobStatusPoller:*"
    }
  ]
}
```

Update the Resource property of the CloudWatch Logs statement to reflect your Region, AWS account ID, and Lambda function name.

- Choose Review policy.

- Enter a name for this policy (for example, MyLambdaPolicy) and choose Create policy.

- Record the name of this policy for later steps.

- On the left navigation pane, choose Roles.

- Choose Create role.

- For the trusted entity, choose AWS service, and then choose Lambda as the use case.

- Choose Next: Permissions.

- Filter policies by the name of the policy you just created, and select its check box.

- Choose Next: Tags.

- (Optional) Add tags.

- Choose Next: Review.

- Enter a name for this IAM role, and note it for later use.

- Choose Create role.
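The same policy and role can also be created programmatically. The following sketch assumes Boto3 and permissions to create IAM policies and roles; `build_lambda_policy`, `create_poller_role`, and the role name in the comments are hypothetical. It generates a policy equivalent to the one shown earlier from your Region, account ID, and function name, so the CloudWatch Logs resource stays in sync with the function, and attaches it to a role whose trust policy lets the Lambda service assume it.

```python
import json


def build_lambda_policy(region, account_id, function_name):
    """Return the poller's permissions policy as a dict."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:"
        f"log-group:/aws/lambda/{function_name}:*"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "translate:DescribeTextTranslationJob",
                "Resource": "*",
            },
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": log_group_arn,
            },
        ],
    }


# Trust policy that allows the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_poller_role(iam_client, role_name, policy_name, policy_document):
    """Create the policy and the role, attach one to the other, return the role ARN."""
    policy_arn = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy_document),
    )["Policy"]["Arn"]
    role_arn = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )["Role"]["Arn"]
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return role_arn


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   policy = build_lambda_policy("us-east-1", "123456789012", "TranslateJobStatusPoller")
#   create_poller_role(boto3.client("iam"), "TranslatePollerRole",  # hypothetical name
#                      "MyLambdaPolicy", policy)
```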

Creating a Lambda function

To create a Lambda function, complete the following steps. For more information, see Create a Lambda Function with the Console.

- On the Lambda console, choose Author from scratch.

- For Function Name, enter the name of your function (for example, TranslateJobStatusPoller).

- For Runtime, choose Python 3.8.

- For Execution role, select Use an existing role.

- Choose the IAM role you created in the previous step.

- Choose Create Function.

- Replace the default code in the Function code editor with the following code:

```python
# Copyright 2020 Amazon.com, Inc. or its affiliates. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License").
# You may not use this file except in compliance with the License.
# A copy of the License is located at
#
#     http://aws.amazon.com/apache2.0/
#
# or in the "license" file accompanying this file.
# This file is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND,
# either express or implied. See the License for the specific language governing permissions
# and limitations under the License.
#
# Description: This Lambda function is part of a Step Functions state machine
# that checks the status of an Amazon Translate batch job.
# Author: Sudhanshu Malhotra

import logging

import boto3
from botocore.exceptions import ClientError

logger = logging.getLogger(__name__)
logger.setLevel(logging.DEBUG)

# Create the client once so warm invocations reuse it.
translate = boto3.client("translate")


def get_job_status(job_id):
    """Return the JobStatus of the given batch translation job."""
    try:
        response = translate.describe_text_translation_job(JobId=job_id)
    except ClientError as e:
        logger.error("An error occurred: %s", e)
        # Re-raise so the Retry policy on the LambdaPoll state handles the error,
        # instead of returning None and looping through the Choice state's Default.
        raise
    status = response["TextTranslationJobProperties"]["JobStatus"]
    logger.debug("Job status is: %s", status)
    return status


def lambda_handler(event, context):
    # The state machine's InputPath ($.detail.responseElements.jobId)
    # makes the event the job ID string itself.
    logger.debug("Job ID is: %s", event)
    return get_job_status(event)
```

- Choose Save.
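Before wiring up Step Functions, you can check the function by invoking it directly with the ID of an existing batch job. Because the state machine's InputPath passes only the job ID, the payload is a bare JSON string. The following is a minimal sketch, assuming Boto3; `invoke_poller` is a hypothetical helper name, and the client is passed in so the logic can be tested without AWS.

```python
import json


def invoke_poller(lambda_client, function_name, job_id):
    """Invoke the poller synchronously and return the job status it reports."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        # Same shape the function receives from Step Functions: a JSON string.
        Payload=json.dumps(job_id).encode("utf-8"),
    )
    body = response["Payload"].read()
    # FunctionError is present when the handler raised (for example, a ClientError).
    if "FunctionError" in response:
        raise RuntimeError(body.decode("utf-8"))
    # The payload holds the handler's return value, JSON-encoded.
    return json.loads(body)


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   invoke_poller(boto3.client("lambda"), "TranslateJobStatusPoller", "<your job ID>")
```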

Creating a state machine

To create a state machine, complete the following steps. For more information, see Create a State Machine.

- On the Step Functions console, on the Define state machine page, choose Start with a template.

- Choose Hello world.

- Under Type, choose Standard.

- Under Definition, enter the following Amazon States Language definition. Replace the placeholder Lambda function ARN and SNS topic ARN with your own.

```json
{
  "Comment": "Polling step function for translate job complete",
  "StartAt": "LambdaPoll",
  "States": {
    "LambdaPoll": {
      "Type": "Task",
      "Resource": "<ARN of the Lambda Function created in step 3>",
      "InputPath": "$.detail.responseElements.jobId",
      "ResultPath": "$.detail.responseElements.jobStatus",
      "Next": "Job Complete?",
      "Retry": [
        {
          "ErrorEquals": [
            "States.ALL"
          ],
          "IntervalSeconds": 1,
          "MaxAttempts": 3,
          "BackoffRate": 2
        }
      ]
    },
    "Job Complete?": {
      "Type": "Choice",
      "Choices": [
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "IN_PROGRESS",
          "Next": "Wait X Seconds"
        },
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "SUBMITTED",
          "Next": "Wait X Seconds"
        },
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "COMPLETED",
          "Next": "Notify"
        },
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "COMPLETED_WITH_ERROR",
          "Next": "Notify"
        },
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "FAILED",
          "Next": "Notify"
        },
        {
          "Variable": "$.detail.responseElements.jobStatus",
          "StringEquals": "STOPPED",
          "Next": "Notify"
        }
      ],
      "Default": "Wait X Seconds"
    },
    "Wait X Seconds": {
      "Type": "Wait",
      "Seconds": 60,
      "Next": "LambdaPoll"
    },
    "Notify": {
      "Type": "Task",
      "Resource": "arn:aws:states:::sns:publish",
      "Parameters": {
        "Subject": "Translate Batch Job Notification",
        "Message": {
          "JobId.$": "$.detail.responseElements.jobId",
          "S3OutputLocation.$": "$.detail.requestParameters.outputDataConfig.s3Uri",
          "JobStatus.$": "$.detail.responseElements.jobStatus"
        },
        "MessageAttributes": {
          "JobId": {
            "DataType": "String",
            "StringValue.$": "$.detail.responseElements.jobId"
          },
          "S3OutputLocation": {
            "DataType": "String",
            "StringValue.$": "$.detail.requestParameters.outputDataConfig.s3Uri"
          }
        },
        "TopicArn": "<ARN of the SNS topic created in step 1>"
      },
      "End": true
    }
  }
}
```

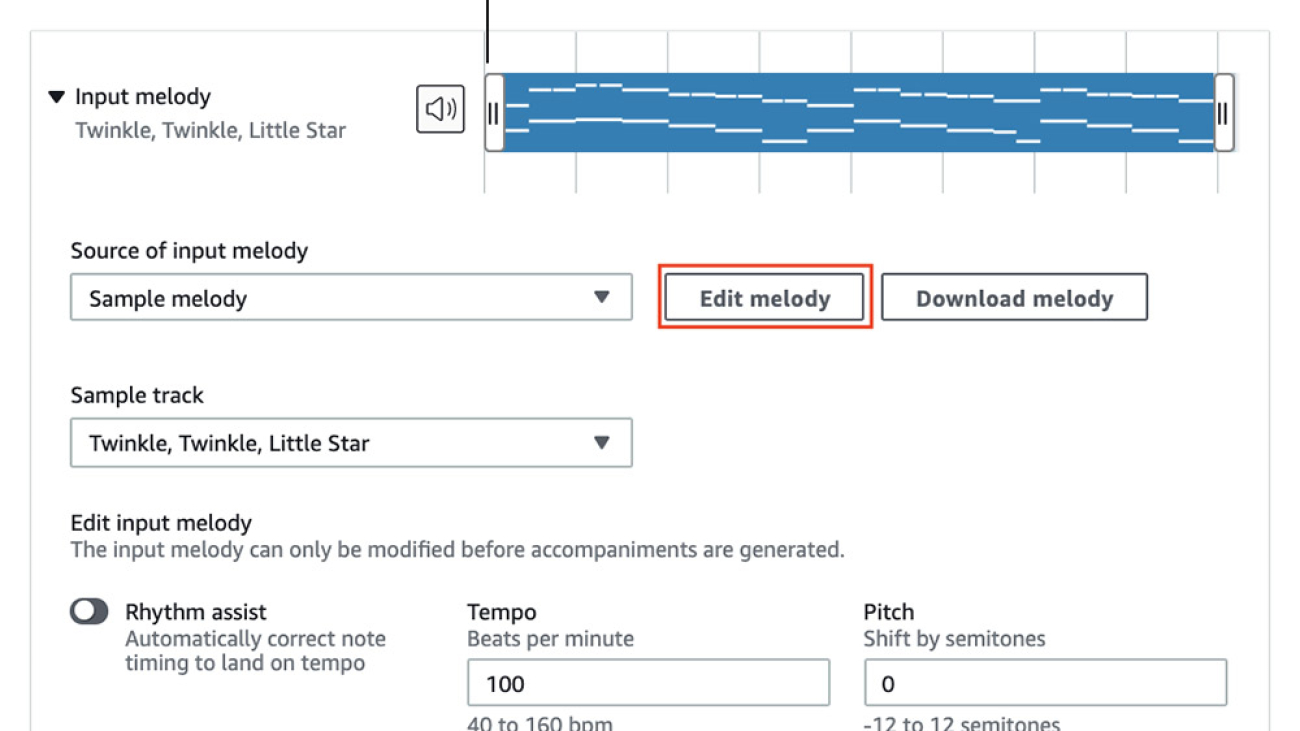

- Use the graph in the Visual Workflow pane to check that your Amazon States Language code describes your state machine correctly. You should see something like the following screenshot.

- Choose Next.

- For Name, enter a name for the state machine.

- Under Permissions, select Create new role.

You now see an info block with the details of the role and the associated permissions.

- Choose Create state machine.
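To see how InputPath, ResultPath, and the Choice state interact, the following sketch simulates the polling loop in plain Python. It is an illustration, not Step Functions itself: the event is a trimmed, hypothetical version of the CloudTrail event that keeps only the fields the state machine reads, and the helper names are made up. It treats COMPLETED_WITH_ERROR as terminal alongside COMPLETED, FAILED, and STOPPED, because Amazon Translate can also report that status; any other status (for example, STOP_REQUESTED) falls through to the wait-and-poll branch, as the Default rule does.

```python
# Statuses that the Choice state routes to Notify; everything else waits and polls again.
TERMINAL_STATUSES = {"COMPLETED", "COMPLETED_WITH_ERROR", "FAILED", "STOPPED"}


def apply_poll_result(event, status):
    """Mimic ResultPath: store the Lambda result at $.detail.responseElements.jobStatus."""
    event["detail"]["responseElements"]["jobStatus"] = status
    return event


def next_state(event):
    """Mimic the "Job Complete?" Choice state and return the next state's name."""
    status = event["detail"]["responseElements"]["jobStatus"]
    return "Notify" if status in TERMINAL_STATUSES else "Wait X Seconds"


def simulate(event, polled_statuses):
    """Feed a sequence of polled statuses through the loop; return the states visited."""
    visited = []
    for status in polled_statuses:
        state = next_state(apply_poll_result(event, status))
        visited.append(state)
        if state == "Notify":
            break
    return visited


# Hypothetical, trimmed event with only the paths the state machine uses.
sample_event = {
    "detail": {
        "requestParameters": {"outputDataConfig": {"s3Uri": "s3://example-bucket/output/"}},
        "responseElements": {"jobId": "example-job-id"},
    }
}
```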

Creating a CloudWatch Events rule

To create a CloudWatch Events rule, complete the following steps. This rule matches StartTextTranslationJob API calls and starts the state machine, which you set as the rule's target.

- On the CloudWatch console, choose Rules.

- Choose Create rule.

- On the Step 1: Create rule page, under Event Source, select Event Pattern.

- Choose Build custom event pattern from the drop-down menu.

- Enter the following code into the preview pane:

```json
{
  "source": [ "aws.translate" ],
  "detail-type": [ "AWS API Call via CloudTrail" ],
  "detail": {
    "eventSource": [ "translate.amazonaws.com" ],
    "eventName": [ "StartTextTranslationJob" ]
  }
}
```

- For Targets, select Step Functions state machine.

- Select the state machine you created earlier.

- For permission to send events to Step Functions, select Create a new role for this specific resource.

- Choose Configure details.

- On the Step 2: Configure rule details page, enter a name and description for the rule.

- For State, select Enabled.

- Choose Create rule.
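For scripted setups, the following sketch creates the same rule and target with Boto3's `events` client (CloudWatch Events rules are managed through the Amazon EventBridge API). It assumes you already have the state machine ARN and an IAM role that CloudWatch Events can assume to call states:StartExecution on it; the function name, rule description, and target `Id` are hypothetical.

```python
import json

# Same event pattern as the rule created in the console.
EVENT_PATTERN = {
    "source": ["aws.translate"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["translate.amazonaws.com"],
        "eventName": ["StartTextTranslationJob"],
    },
}


def create_translate_job_rule(events_client, rule_name, state_machine_arn, role_arn):
    """Create an enabled rule that starts the state machine; return the rule ARN."""
    rule_arn = events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(EVENT_PATTERN),
        State="ENABLED",
        Description="Start the Translate job poller when a batch job starts",
    )["RuleArn"]
    result = events_client.put_targets(
        Rule=rule_name,
        Targets=[{
            "Id": "TranslateJobPoller",  # hypothetical target ID
            "Arn": state_machine_arn,
            "RoleArn": role_arn,  # role that allows states:StartExecution
        }],
    )
    # put_targets reports per-target failures instead of raising.
    if result.get("FailedEntryCount", 0):
        raise RuntimeError(f"Failed to add target: {result['FailedEntries']}")
    return rule_arn


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   create_translate_job_rule(boto3.client("events"), "TranslateJobStartedRule",
#                             "<state machine ARN>", "<events role ARN>")
```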

Validating the solution

To test this solution, I first create an Amazon Translate batch job, providing the Amazon Simple Storage Service (Amazon S3) location of the input text, the output S3 location, the target language, and the ARN of the data access service role. For instructions on creating a batch translation job, see Asynchronous Batch Processing or Translating documents with Amazon Translate, AWS Lambda, and the new Batch Translate API.

The following screenshot shows my batch job on the Translation jobs page.

The CloudWatch Events rule picks up the StartTextTranslationJob API call and starts the state machine. When the job is complete, I receive an email notification through Amazon SNS.
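A batch job can also be started from code, which is convenient for repeated end-to-end tests. The following sketch uses Boto3's `start_text_translation_job`; the helper name `start_batch_job` and every argument value are placeholders you would supply, and the plain-text content type is an assumption (use `text/html` for HTML input). The rule reacts to the StartTextTranslationJob call whether it comes from the console or the SDK.

```python
def start_batch_job(translate_client, job_name, input_s3_uri, output_s3_uri,
                    data_access_role_arn, source_language, target_language,
                    content_type="text/plain"):
    """Start a batch translation job and return its JobId."""
    response = translate_client.start_text_translation_job(
        JobName=job_name,
        InputDataConfig={"S3Uri": input_s3_uri, "ContentType": content_type},
        OutputDataConfig={"S3Uri": output_s3_uri},
        # Role that lets Amazon Translate read the input and write the output.
        DataAccessRoleArn=data_access_role_arn,
        SourceLanguageCode=source_language,
        TargetLanguageCodes=[target_language],
    )
    return response["JobId"]


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   start_batch_job(boto3.client("translate"), "notification-test",
#                   "s3://<input bucket>/input/", "s3://<output bucket>/output/",
#                   "<data access role ARN>", "en", "es")
```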

Conclusion

In this post, we demonstrated how you can use Step Functions to poll for an Amazon Translate batch job. For this use case, we configured an email notification to send when a job is complete; however, you can use this framework to trigger other Lambda functions or integrate with Amazon Simple Queue Service (Amazon SQS) for automated postprocessing steps, enabling you to build an end-to-end automated workflow. For further reading, see the following:

- Translating documents with Amazon Translate, AWS Lambda, and the new Batch Translate API

- Designing human review workflows with Amazon Translate and Amazon Augmented AI

- Translating your website or application automatically with Amazon Translate in your CI/CD pipeline

About the Authors

Sudhanshu Malhotra is a Boston-based Enterprise Solutions Architect for AWS. He is a technology enthusiast who enjoys helping customers find innovative solutions to complex business challenges. His core areas of focus are DevOps, Machine Learning, and Security. When he’s not working with customers on their journey to the cloud, he enjoys reading, hiking, and exploring new cuisines.

Siva Rajamani is a Boston-based Enterprise Solutions Architect for AWS. He enjoys working closely with customers, supporting their digital transformation and AWS adoption journey. His core areas of focus are Serverless, Application Integration, and Security. Outside of work, he enjoys outdoor activities and watching documentaries.

Maryam Rezapoor is a Senior Product Manager with AWS AI Ecosystem team. As a former biomedical researcher and entrepreneur, she finds her passion in working backward from customers’ needs to create new impactful solutions. Outside of work, she enjoys hiking, photography, and gardening.

Chaitanya Bapat is a Software Engineer with the AWS Deep Learning team. He works on Apache MXNet and integrating the framework with Sagemaker, DLC and DLAMI. In his spare time, he loves watching sports and enjoys reading books and learning Spanish.

Karan Jariwala is a Software Development Engineer on the AWS Deep Learning team. His work focuses on training deep neural networks. Outside of work, he enjoys hiking, swimming, and playing tennis.

Raj Copparapu is a Product Manager focused on putting machine learning in the hands of every developer.

Thomas joined Amazon Web Services in 2016 initially working on Application Auto Scaling before moving into this current role at Textract. Before joining AWS, he worked in engineering roles in the domains of computer graphics and networking. Thomas holds a master’s degree in engineering from the university of Leuven in Belgium.

Rahul Suresh is an Engineering Manager with the AWS AI org, where he has been working on AI based products for making machine learning accessible for all developers. Prior to joining AWS, Rahul was a Senior Software Developer at Amazon Devices and helped launch highly successful smart home products. Rahul is passionate about building machine learning systems at scale and is always looking for getting these advanced technologies in the hands of customers. In addition to his professional career, Rahul is an avid reader and a history buff.
