In this post, we show you how to select the right metadata for your use case when building a recommendation engine using Amazon Personalize. The aim is to help you optimize your models to generate more user-relevant recommendations. We look at which metadata is most relevant to include for different use cases, and where you may get better results by excluding other metadata. We also highlight a specific use case from Pulselive, one of our customers that recently used Amazon Personalize to enhance the recommendation capabilities of their customer’s websites, resulting in a 20% increase in video consumption.

Introducing Amazon Personalize

Amazon Personalize is a managed service that enables you to improve customer engagement by powering personalized product and content recommendations, and targeted marketing promotions. Amazon Personalize uses machine learning (ML) to create high-quality recommendations that you can use to personalize your user experience across digital channels such as websites, applications, and email systems. You can get started without any prior ML experience using simple APIs to easily build sophisticated personalization capabilities in just a few clicks. Amazon Personalize automatically processes and examines your metadata, identifies what is meaningful, allows you to pick an ML algorithm, and trains and optimizes a custom model based on your metadata. All your data is encrypted to be private and secure, and is only used to create recommendations for your users.

Let’s dive into how important that metadata is to get a performant model.

The role metadata selection plays in recommendations

The goal of metadata selection in recommendation engines is to select the right data to aid the training algorithm to discover valuable information about the similarities in user preferences and behavior, in addition to the properties and similarity of the items you’re trying to recommend through the engine. The ultimate goal is to provide a personalized experience, uniquely tailored for each user, and present them with the items that are the most relevant to them.

Companies now have so many data sources for capturing user behavior that it has become challenging to decide which metadata to use and which to ignore. Irrespective of the use case, a commercial website can collect large amounts of data about every aspect of each user’s behavior, such as which items they frequently interact with (watching a video or ordering an item), how long they spend on each item’s page, or even how erratic or smooth their cursor movement is while scrolling through a page. All this information can reveal a lot about a user’s preferences and which items would be ideal to recommend to them.

There are two main categories of approaches to recommendation engines: collaborative filtering and content-based filtering.

Collaborative filtering compares the behavior of the users with each other and tries to calculate the similarity between them to find shared interests. Therefore, the recommendation engine knows that if user A has very similar behavior to user B, then user A would likely be interested in some of the items that user B has interacted with, and vice versa.
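As a rough illustration of the idea (not the algorithm Amazon Personalize uses internally), the following sketch finds the user most similar to a target user with cosine similarity and suggests the items that the similar user has interacted with. The interaction counts, user names, and function names are all hypothetical.

```python
from math import sqrt

# Hypothetical interaction counts: user -> {item: number of interactions}
interactions = {
    "user_a": {"video_1": 3, "video_2": 1},
    "user_b": {"video_1": 2, "video_2": 1, "video_3": 4},
    "user_c": {"video_4": 5},
}


def cosine_similarity(u, v):
    """Cosine similarity between two sparse interaction vectors."""
    shared = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend_from_similar_user(target, interactions):
    """Suggest items that the most similar other user has seen and the target has not."""
    others = [u for u in interactions if u != target]
    best = max(
        others,
        key=lambda u: cosine_similarity(interactions[target], interactions[u]),
    )
    return sorted(set(interactions[best]) - set(interactions[target]))


print(recommend_from_similar_user("user_a", interactions))
```

Here user_a shares two videos with user_b and none with user_c, so the suggestion comes from user_b’s history.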

Content-based filtering looks at the actual items the users interact with. If a user has interacted with items A and B, and item C is very similar to A and B, then item C will likely be of interest to the user.
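A simplified sketch of this item-centric view, assuming each item is described by a set of tags, ranks unseen items by how much their tags overlap with the items a user has already interacted with. The tags, item names, and the choice of Jaccard similarity are illustrative assumptions, not how Amazon Personalize computes similarity.

```python
# Hypothetical item metadata: item -> set of descriptive tags
item_tags = {
    "item_a": {"interview", "player"},
    "item_b": {"interview", "coach"},
    "item_c": {"interview", "player", "coach"},
    "item_d": {"highlights", "goal"},
}


def jaccard(a, b):
    """Share of tags two items have in common (0.0 when both tag sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_candidates(seen, item_tags):
    """Rank unseen items by their average tag overlap with the items already seen."""
    scores = {}
    for item, tags in item_tags.items():
        if item in seen:
            continue
        scores[item] = sum(jaccard(tags, item_tags[s]) for s in seen) / len(seen)
    return sorted(scores, key=scores.get, reverse=True)


print(rank_candidates(["item_a", "item_b"], item_tags))
```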

We also have hybrid models that use both user behavior and item-related data to find the underlying patterns that reveal the ideal items to recommend to each user.

Each method requires a different approach to metadata selection because they require different types of data to be collected and used for the training. For example, building collaborative filtering engines requires data related to the behavior of users on the website, whereas building a content-based engine requires more data related to the items (item-specific metadata and which users interacted with which items). A hybrid solution requires data related to both the users and the items.

As a general rule, authenticated experiences work best. When your users have personal accounts that they log in to, you can provide them with a more personalized experience tailored to their needs because you can easily track and record every aspect of their behavior (along with additional metadata), whereas it’s harder to track anonymous or guest users and map them to their previous sessions.

The problems that can occur if metadata selection isn’t done right

If metadata selection isn’t done correctly, it can lead to poor recommendations that are either too generic (showing most users the most popular and commonly interacted-with items) or not relevant (showing items that have nothing to do with the individual user’s preferences).

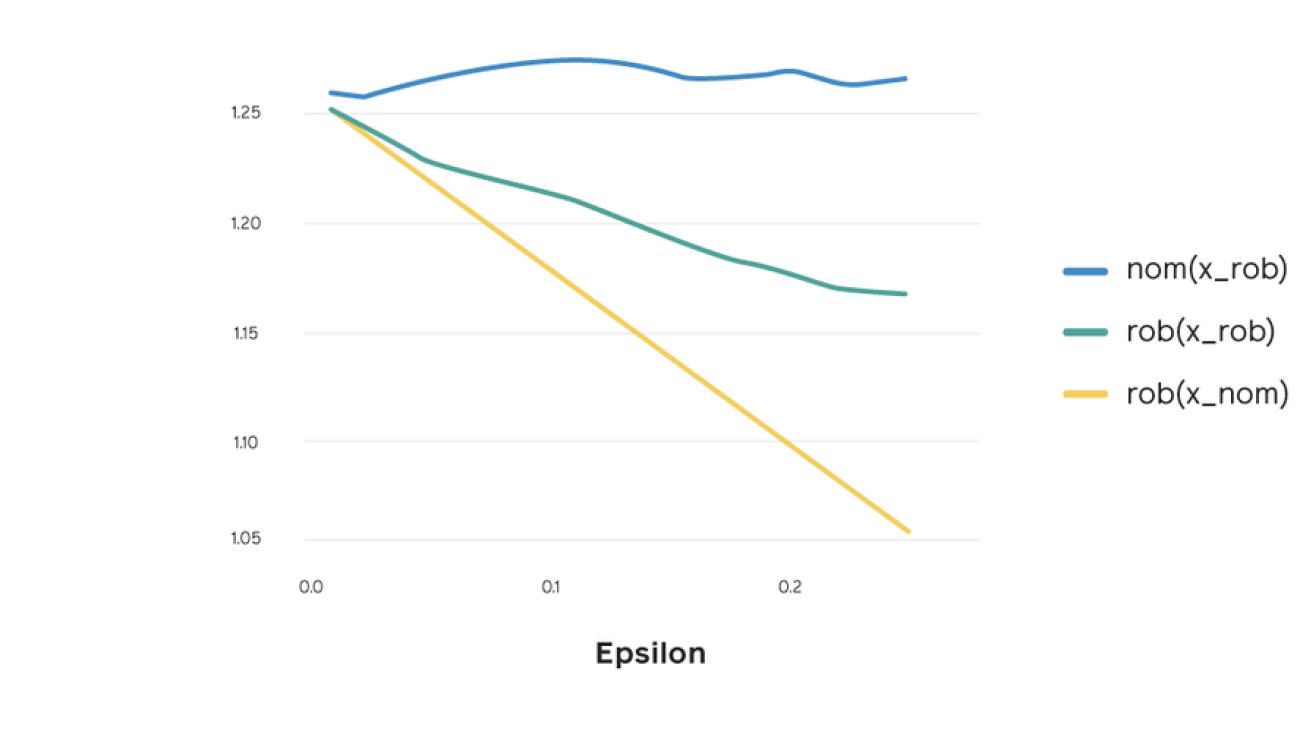

When too much information is included in training a recommendation model, it can lead to noise in the model. Metadata that has no correlation with user preferences but was included in training skews the model and makes it harder for the algorithm to find the valuable underlying patterns that allow for a successful recommender system.

This can also apply to the depth (amount of history) of the data that is used to train a model. Perhaps relevant metadata has been selected, but the freshness of the data in many cases is a stronger indicator of relevance—the most recent metadata is more relevant than historical data for the same kind of interactions. This is because user behavior and preferences vary over time and people’s interests can change rather quickly; therefore, presenting a user with a recommendation that was considered relevant to them a few months ago doesn’t guarantee that the recommendation is relevant to them today. This is why it’s important to keep your recommender system up to date with current user behavior.
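One simple way to act on this is to trim older interactions before training. The following is a minimal sketch, assuming each interaction is a dictionary with a Unix TIMESTAMP in seconds; the 90-day window and the function name are arbitrary placeholders that you would tune for your own data.

```python
from datetime import datetime, timedelta, timezone


def keep_recent(interactions, now, max_age_days=90):
    """Keep only interactions whose Unix TIMESTAMP falls within the last max_age_days."""
    cutoff = (now - timedelta(days=max_age_days)).timestamp()
    return [row for row in interactions if row["TIMESTAMP"] >= cutoff]


# Hypothetical data: one interaction from 10 days ago, one from 200 days ago
now = datetime(2021, 1, 1, tzinfo=timezone.utc)
rows = [
    {"USER_ID": "u1", "ITEM_ID": "v1", "TIMESTAMP": int((now - timedelta(days=10)).timestamp())},
    {"USER_ID": "u1", "ITEM_ID": "v2", "TIMESTAMP": int((now - timedelta(days=200)).timestamp())},
]

recent = keep_recent(rows, now)
print([row["ITEM_ID"] for row in recent])
```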

Conversely, if too little information is included, the recommendation model under-performs. If you don’t include valuable information that can aid the performance of the model, the recommendation model makes suboptimal suggestions.

A wrong approach to metadata selection can make it harder for the algorithms to find the underlying patterns that connect users and items. This means that the recommendations that the users are presented with aren’t personalized as expected.

Terminology of recommendation engines

To introduce the topic further, let’s dive into some of the terminology associated with Amazon Personalize:

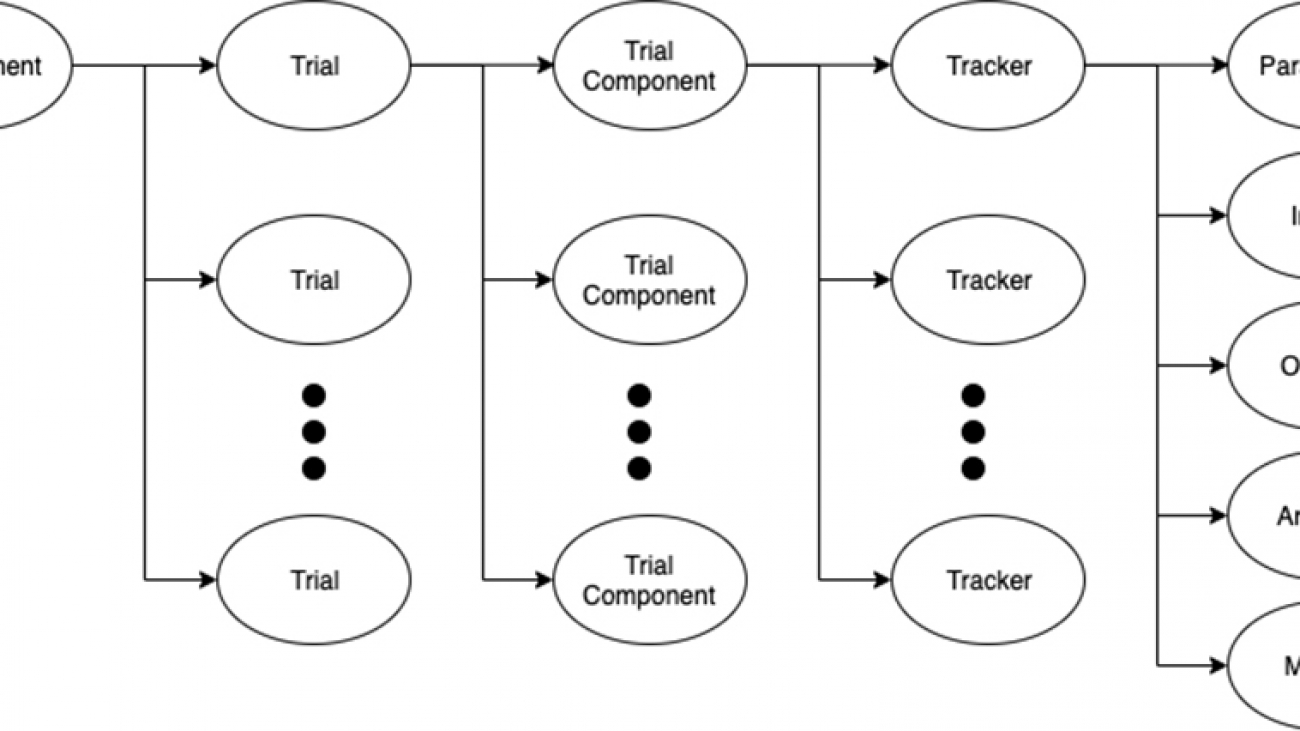

- Datasets and dataset groups – Datasets contain the data used to train a recommendation model. You can use different dataset groups to serve different purposes. For example, separate applications, with their own users and items, can have their own dataset groups.

- Recipes and solutions – Amazon Personalize uses recipes, which combine a learning algorithm with hyperparameters and the datasets used. Training a model with different recipes leads to different results. The resulting trained model is referred to as a solution version.

- Campaigns – A deployed solution version is known as a campaign. A campaign allows Amazon Personalize to make recommendations for your users.

Metadata types are defined by the datasets used to train a model. In the following section, we look at how to select them.

Selecting metadata

Amazon Personalize uses different recipes that are aimed towards either of the two main categories of recommendation engines—collaborative filtering and content-based filtering—and also the hybrid methods. For more information about pre-defined recipes, see Choosing a Recipe.

No matter which recipe you choose to work with, Amazon Personalize has three main types of datasets that it can use to build models (solutions), and each is related to one of the following categories:

- Users

- Items

- Interactions

The users and items dataset types are known as metadata types, and are only used by certain recipes. As their names imply, their metadata has unique fields that describe each individual user or item. User metadata could be age, gender, and geography. Typical item metadata is color, category, shape, and price (in the case of products), or content category, ratings, and genre (if the item we’re trying to recommend is a video or movie).

The interactions dataset captures the direct interactions of a user with an item, which is usually the most revealing information about the relationship between users and items. Some examples of interactions data are clicks (user A clicked on item X), purchases (a user actually purchased an item), the amount of time spent on an item’s webpage, the addition of an item to a user’s wishlist, or even the fact that the user hovered their cursor for a few milliseconds more than usual over a certain item.

The minimum number of interactions Amazon Personalize expects in order to start making recommendations is 1,000 interactions from a minimum of 25 users. User and item metadata datasets are optional, and their importance depends on your use case and the algorithm (recipe) you’re using.
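A quick local check against these minimums might look like the following sketch. It assumes the interactions are available as a list of dictionaries with a USER_ID key; the function name is hypothetical, and the defaults simply restate the numbers above.

```python
def meets_minimum(interactions, min_interactions=1000, min_users=25):
    """Return True when the data has enough interactions from enough distinct users."""
    users = {row["USER_ID"] for row in interactions}
    return len(interactions) >= min_interactions and len(users) >= min_users


# Hypothetical data: 1,000 interactions spread evenly across 25 users
sample = [{"USER_ID": f"user-{i % 25}", "ITEM_ID": f"item-{i}"} for i in range(1000)]
print(meets_minimum(sample))
```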

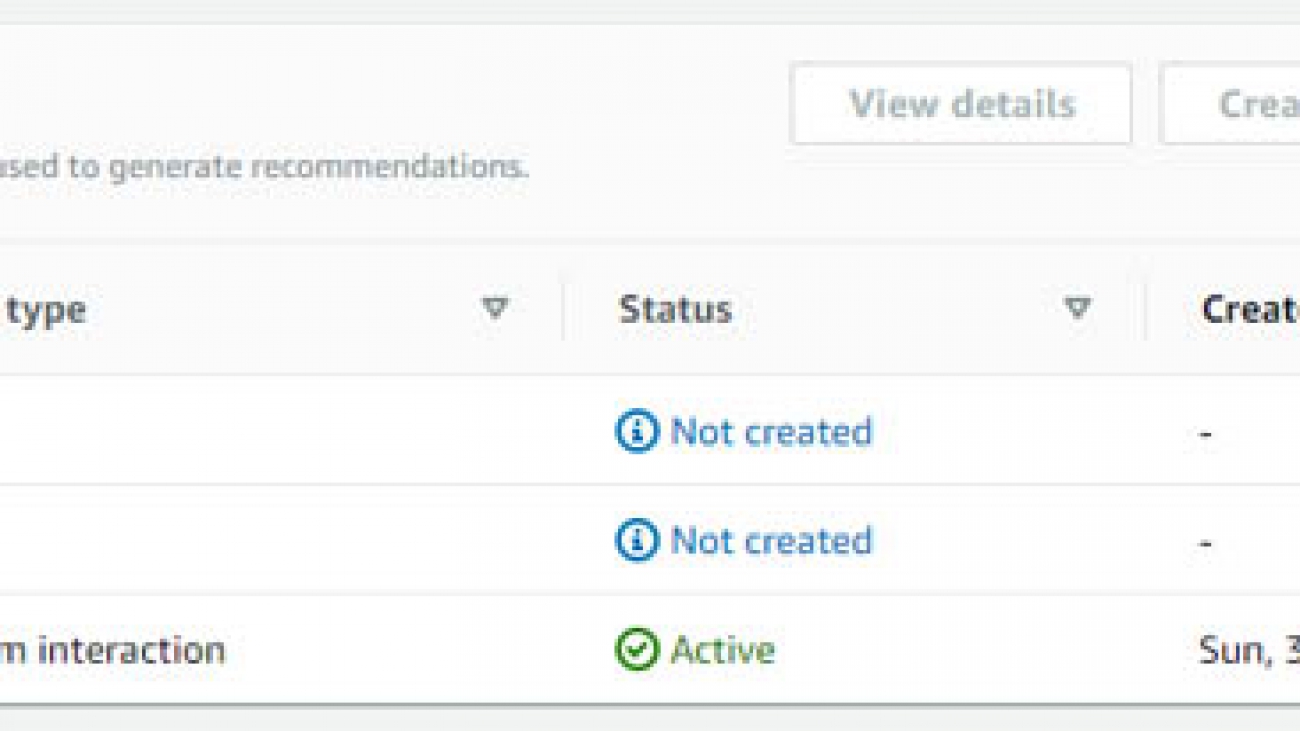

The following screenshot shows the Datasets page on the Amazon Personalize console.

What data types are supported by each category?

Each dataset has a set of required fields, reserved keywords, and required datatypes, as shown in the following table.

| Dataset Type | Required Fields | Reserved Keywords |
| --- | --- | --- |
| Users | USER_ID (string), one metadata field | |
| Items | ITEM_ID (string), one metadata field | |
| Interactions | USER_ID (string), ITEM_ID (string), TIMESTAMP (long) | EVENT_TYPE (string), IMPRESSION (string), EVENT_VALUE (float, null) |

Before you add a dataset to Amazon Personalize, you must define a schema for that dataset. Each dataset type has specific requirements. Schemas in Amazon Personalize are defined in the Avro format.

The following example code shows an interactions schema. The EVENT_TYPE and EVENT_VALUE fields are optional, and are reserved keywords recognized by Amazon Personalize. LOCATION and DEVICE are optional contextual metadata fields.

    {
      "type": "record",
      "name": "Interactions",
      "namespace": "com.amazonaws.personalize.schema",
      "fields": [
        {
          "name": "USER_ID",
          "type": "string"
        },
        {
          "name": "ITEM_ID",
          "type": "string"
        },
        {
          "name": "EVENT_TYPE",
          "type": "string"
        },
        {
          "name": "EVENT_VALUE",
          "type": "float"
        },
        {
          "name": "LOCATION",
          "type": "string",
          "categorical": true
        },
        {
          "name": "DEVICE",
          "type": "string",
          "categorical": true
        },
        {
          "name": "TIMESTAMP",
          "type": "long"
        }
      ],
      "version": "1.0"
    }
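Before registering a schema, it can help to check locally that it declares the required fields. The following is a minimal sketch that is not part of the Amazon Personalize SDK: the helper name missing_required_fields is hypothetical, and the required fields and types are the ones listed for the interactions dataset in the preceding table.

```python
import json

# Required interactions fields and their types, as listed in the table above
REQUIRED_INTERACTIONS_FIELDS = {
    "USER_ID": "string",
    "ITEM_ID": "string",
    "TIMESTAMP": "long",
}


def missing_required_fields(schema_json):
    """Return the required fields that are absent or declared with a different type."""
    schema = json.loads(schema_json)
    declared = {field["name"]: field["type"] for field in schema.get("fields", [])}
    return sorted(
        name
        for name, expected_type in REQUIRED_INTERACTIONS_FIELDS.items()
        if declared.get(name) != expected_type
    )


# A schema that forgot the TIMESTAMP field
incomplete_schema = json.dumps({
    "type": "record",
    "name": "Interactions",
    "namespace": "com.amazonaws.personalize.schema",
    "fields": [
        {"name": "USER_ID", "type": "string"},
        {"name": "ITEM_ID", "type": "string"},
    ],
    "version": "1.0",
})

print(missing_required_fields(incomplete_schema))
```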

Creating a schema using the AWS Python SDK

To create a schema using the AWS Python SDK, complete the following steps:

- Define the Avro format schema that you want to use.

- Save the schema in a JSON file (for example, schema.json) in the directory you run your Python code from.

- Create the schema using the following code:

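    import boto3

    personalize = boto3.client('personalize')

    # Read the Avro schema from the JSON file and register it with Amazon Personalize
    with open('schema.json') as f:
        createSchemaResponse = personalize.create_schema(
            name = 'YourSchema',
            schema = f.read()
        )

    # Keep the ARN that Amazon Personalize returns for the new schema
    schema_arn = createSchemaResponse['schemaArn']
    print('Schema ARN:' + schema_arn)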

Amazon Personalize returns the ARN of the new schema.

- Store the ARN for later use.

Filtering your metadata

Amazon Personalize allows you to experiment with building different models (or solutions) based on different metadata by enabling you to filter records from your interactions dataset and set a threshold for each event type, or simply select and leave out certain event types. You can filter records from an interactions dataset in two ways:

- Set a threshold to exclude records based on a specific value by specifying an event value threshold when you create your solution. If the records include a value that is associated with a specific event (for example, the price a user paid is associated with the purchase of an item), you can set a specific value as a threshold to exclude records from training. This value is called an event value.

- Exclude records of a certain type by specifying an event type when you create your solution. A dataset often includes specific types of activities, for example, purchase, click, or wishlisted. These are called event types. To include only records for specific event types in training, filter your dataset by event type.

To filter your metadata, call the CreateSolution API. To specify an event type, for example purchase, set it in the eventType parameter. To specify an event value, for example 10, set it in the eventValueThreshold parameter. You can specify an eventType alone, both an eventType and an eventValueThreshold, or neither, but you can’t specify an eventValueThreshold on its own. See the following code:

    import boto3

    personalize = boto3.client('personalize')

    # dataset_group_arn and recipe_arn must already hold the ARNs of your
    # dataset group and the recipe you chose

    # Create the solution, filtering on purchase events with an event value threshold of 10
    create_solution_response = personalize.create_solution(
        name = "your-solution-name",
        datasetGroupArn = dataset_group_arn,
        recipeArn = recipe_arn,
        eventType = "purchase",
        solutionConfig = {
            "eventValueThreshold": "10"
        }
    )

    # Store the solution ARN
    solution_arn = create_solution_response['solutionArn']

    # Use the solution ARN to get the solution status
    solution_description = personalize.describe_solution(solutionArn = solution_arn)['solution']
    print('Solution status: ' + solution_description['status'])
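To get a feel for what these filters keep, you can mimic them locally before training. The following sketch runs on plain Python dictionaries and doesn’t call Amazon Personalize. It assumes that records with an event value at or above the threshold are kept, which you should confirm against the service documentation; the function name and sample records are hypothetical.

```python
def filter_events(records, event_type=None, event_value_threshold=None):
    """Locally mimic filtering by event type and event value threshold."""
    if event_value_threshold is not None and event_type is None:
        # Mirrors the rule that a threshold can't be specified on its own
        raise ValueError("an event value threshold requires an event type")
    kept = []
    for record in records:
        if event_type is not None and record["EVENT_TYPE"] != event_type:
            continue
        if event_value_threshold is not None and record["EVENT_VALUE"] < event_value_threshold:
            continue
        kept.append(record)
    return kept


# Hypothetical interaction records
records = [
    {"USER_ID": "u1", "ITEM_ID": "i1", "EVENT_TYPE": "purchase", "EVENT_VALUE": 25.0},
    {"USER_ID": "u1", "ITEM_ID": "i2", "EVENT_TYPE": "purchase", "EVENT_VALUE": 5.0},
    {"USER_ID": "u2", "ITEM_ID": "i1", "EVENT_TYPE": "click", "EVENT_VALUE": 12.0},
]

kept = filter_events(records, event_type="purchase", event_value_threshold=10)
print([r["ITEM_ID"] for r in kept])
```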

When selecting metadata for a recommendation engine, it’s helpful to ask the following questions to help guide your decisions:

- What is likely to be the strongest indicator of a good recommendation—similar users, similar items, or their combined interactions? This can help determine which metadata to select and tag in the datasets. As described, the interactions dataset is the minimum that Amazon Personalize expects, so you have to choose wisely which types of interactions (or events) you want to capture. A combination of interactions and metadata is typically recommended, but choosing which types of interactions to record is important.

- What is the temporal value of the data? Is old data less potent? How much less? How can you use real-time APIs with real-time data to get the most relevant recommendations that reflect the users’ change of preferences over time?

- Which metrics best show whether the recommendation engine is working well? Can you align Amazon Personalize metrics with your own KPIs? Can you construct an A/B test with live customers?

The answers to these questions can be a good guide to improve the recommendation system.

Applying metadata selection: Pulselive use case

In a recent engagement with Pulselive, an AWS customer that builds and hosts solutions for large sports organizations, we were asked to aid them in prototyping a personalized recommendation engine for one of their customers, a renowned European football club, to suggest videos to the visitors of their website according to their preferences and past behavior. Their goal was to use all the data they could to provide the website’s visitors with a tailored, highly personalized experience by recommending videos relevant to each user to increase engagement with the content.

Our initial approach was to use some of their existing recorded historical data to extract the minimum required information needed to start building Amazon Personalize solutions that can recommend the right videos to the right users. Therefore, the metadata we initially selected was the simplest form of user-video interactions—clicks—from a historical dataset of which users had clicked on which videos and at what time. We started with 30,000 user interactions.

That allowed us to build a baseline solution that used that information to evaluate the relevance of each video to each user and considered it as our starting point. The next goal was to enrich the dataset by selecting the right metadata and observing the impact that the new models had on user engagement.

At this point, we have to mention that it’s somewhat challenging to predict how well the recommendation system will do when deployed into production. Amazon Personalize provides some standard out-of-the-box metrics when a model has finished training to give you an idea of how well it did at recommending the most relevant items higher on the recommendations list (such as having a high precision or coverage). But you can only evaluate the true impact on your customers when deploying the system into production.
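To make these two metrics concrete, the following sketch computes simplified versions of precision at K and coverage on hypothetical data. The definitions are textbook simplifications and may differ in detail from the values Amazon Personalize reports.

```python
def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommended items that the user actually found relevant."""
    top_k = recommended[:k]
    return sum(1 for item in top_k if item in relevant) / k


def coverage(all_recommendations, catalog):
    """Fraction of the catalog that appears in at least one recommendation list."""
    recommended = set()
    for recommendation_list in all_recommendations:
        recommended.update(recommendation_list)
    return len(recommended & set(catalog)) / len(catalog)


# Hypothetical example: two of the four recommended videos were relevant
print(precision_at_k(["a", "b", "c", "d"], {"a", "c"}, k=4))

# Hypothetical example: three of the four catalog videos were ever recommended
print(coverage([["a", "b"], ["b", "c"]], ["a", "b", "c", "d"]))
```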

Pulselive chose to do A/B testing, comparing the results of their existing recommendation methods to those produced from an Amazon Personalize campaign. They started with redirecting 5% of their traffic through the Amazon Personalize campaign. After seeing good results, they eventually rolled out to 50% of the traffic being redirected to Amazon Personalize. For more information, see Increasing engagement with personalized online sports content.
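One common way to implement such a traffic split is to hash each user ID into a stable bucket, so that the same user always sees the same variant and raising the percentage only adds users. The following sketch illustrates that general technique; it is an assumption for illustration and not a description of how Pulselive routed their traffic.

```python
import hashlib


def assigned_to_personalize(user_id, percent):
    """Deterministically place a user in the test group for the given traffic percentage."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return bucket < percent


# Hypothetical user IDs; start at 5% of traffic, later raise to 50%
users = [f"user-{i}" for i in range(10000)]
share_at_5 = sum(assigned_to_personalize(u, 5) for u in users) / len(users)
share_at_50 = sum(assigned_to_personalize(u, 50) for u in users) / len(users)
print(share_at_5, share_at_50)
```

Because the bucket depends only on the user ID, every user in the 5% group stays in the test group when the split grows to 50%.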

Regarding metadata selection, we quickly realized that the users and items in the initial historical dataset weren’t very recent, and most of their IDs didn’t correspond to users and items that had recent activity on their production website.

Luckily, apart from an initial historical dataset, Amazon Personalize can also enrich its models in real time by allowing you to feed in interaction data from your live website. Through the use of the Amazon Personalize PutEvents API, you can record any action users take on the website and feed it into Amazon Personalize in near-real time, updating the model with the most recent user behavior and preferences. This is an important capability because it’s natural for user preferences to change over time, and you don’t want to risk presenting them with items that are either out of date or not relevant to them anymore.
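The following sketch shows how a single click event could be packaged for the PutEvents API. It only builds the request and doesn’t send it, so it runs without AWS credentials. It assumes you have already created an event tracker; the tracking ID, user, session, and item values are placeholders, and the property names are hypothetical.

```python
import json
from datetime import datetime, timezone


def build_put_events_request(tracking_id, user_id, session_id, item_id,
                             event_type, properties=None):
    """Build the keyword arguments for a PutEvents call describing one event."""
    event = {
        "eventType": event_type,
        "itemId": item_id,
        "sentAt": datetime.now(timezone.utc),
    }
    if properties:
        # Additional event properties are passed as a JSON-encoded string
        event["properties"] = json.dumps(properties)
    return {
        "trackingId": tracking_id,
        "userId": user_id,
        "sessionId": session_id,
        "eventList": [event],
    }


request = build_put_events_request(
    tracking_id="your-tracking-id",   # placeholder
    user_id="user-123",               # placeholder
    session_id="session-456",         # placeholder
    item_id="video-789",              # placeholder
    event_type="click",
    properties={"source": "recommended_list"},  # hypothetical property
)
print(request["eventList"][0]["eventType"])

# To send the event (requires boto3 and AWS credentials):
#   import boto3
#   boto3.client("personalize-events").put_events(**request)
```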

This also means that you can directly connect Amazon Personalize to your website, with no historical data or any models trained, and start feeding in events. After a while, Amazon Personalize has gathered enough data to start making accurate recommendations. For more information, see Recording Events.

We spent some time discussing what other relevant user behavior metadata we could capture, and decided to start recording some to observe whether these would result in a more accurate recommendation system that would impact user engagement on the site. Two simple measures for this were seeing if recommended videos were more frequently visited and watched for longer periods.

We started recording the source of the clicks (recommended list vs. other links in the website), the amount of time a user spent on a clicked video in seconds, and the percentage of the video that time represented (because it’s different for someone to spend 1 minute on a 20-minute video, compared to spending the same time on a 1-minute video to watch it in its entirety). These additions proved to be very important because after a while, user engagement started improving. We discussed and investigated providing more detailed information about user behavior on the website, but decided to pay more attention to the item and user metadata.
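The watched percentage is simple arithmetic, sketched below with a hypothetical function name. Capping the result at 100% is an assumption to handle viewers who replay part of a video.

```python
def watched_percentage(seconds_watched, video_length_seconds):
    """Percentage of a video that the time spent represents, capped at 100."""
    if video_length_seconds <= 0:
        raise ValueError("video length must be positive")
    return min(100.0, 100.0 * seconds_watched / video_length_seconds)


# One minute spent on a 20-minute video versus on a 1-minute video
print(watched_percentage(60, 20 * 60))
print(watched_percentage(60, 60))
```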

Items metadata was important because it allowed Amazon Personalize to have more context on the nature of each video. This ranged from general and broad video categories, such as interviews and games, to more specific categories, such as “Leagues” and “Friendly games,” to more specific metadata, such as which players are featured in a video. Adding metadata about the content for each video significantly improved the personalized recommendations because the solution had a notion of context that helped determine what type of content each user preferred to watch.

Equally, on the user metadata side, more detailed information was provided, trying to capture the demographics and preferences of each user. Of course, in the case of the users, we had to deal with the cold-start problem (new users or guest users for which the system didn’t have any information yet). Luckily, the Amazon Personalize HRNN-Coldstart recipe proved to be very effective in solving this problem by quickly linking the new user’s behavior to existing ones. The more time a guest or new user spends on the platform, the more Amazon Personalize understands about their preferences and adjusts its recommendations accordingly.

We had many options of what type of metadata to include in the interactions dataset, but it was important to make sure we only used relevant metadata, and we had to pay attention to the balance between providing too much information to a model and providing too little.

For example, we considered recording the movement of each user’s cursor on the website and sending this as well to Amazon Personalize, which in theory could provide a marginal improvement to the performance of the recommendation system. But doing so proved to be expensive and taxing both on the front end (it impacted website performance) and the back end (the volume of data the system had to record, store, and send to Amazon Personalize significantly increased). Therefore, after careful consideration, we decided that cursor movement metadata wasn’t worth keeping.

After a few months, Pulselive rolled out the Amazon Personalize-based recommendation system to nearly half of their customer’s website visitors, and saw that that group’s engagement with their videos increased by 20%.

Conclusion

Recommendation engines can provide more pertinent results to users based on metadata about a user’s historical selections, or on the types of items of interest.

In this post, we looked at how to select the right metadata to get the best results when training a recommendation engine on Amazon Personalize by evaluating which metadata to include and which to exclude. We also looked at a specific use case and how an AWS customer, Pulselive, increased engagement with videos on their customer’s website by providing personalized recommendations to users.

For more information on creating recommendation engines with Amazon Personalize and metadata selection, see the following:

- Creating a recommendation engine using Amazon Personalize

- Choosing a Recipe

- Increasing engagement with personalized online sports content

About the Authors

Andrew Hood is a Prototyping Engagement Manager at AWS.


Ion Kleopas is an ML Prototyping Architect at AWS.


Ivan Kopas is a Machine Learning Engineer for AWS Professional Services, based out of the United States. Ivan is passionate about working closely with AWS customers from a variety of industries and helping them leverage AWS services to spearhead their toughest AI/ML challenges. In his spare time, he enjoys spending time with his family, working out, hanging out with friends and diving deep into the fascinating realms of economics, psychology and philosophy.


Claire Mitchell is a Design Consultant with the AWS Professional Services Conversational AI team. Occasionally she spends time exploring speculative design practices, textiles, and playing the drums.

Brian Yost is a Senior Consultant with the AWS Professional Services Conversational AI team. In his spare time, he enjoys mountain biking, home brewing, and tinkering with technology.

As a Product Manager on the Amazon Lex team, Harshal Pimpalkhute spends his time trying to get machines to engage (nicely) with humans.