Even in ordinary times, the scientific process is stressful, with its demand for open-ended exploration and persistence in the face of failure. But the pandemic has added to the strain. In this new world of physical isolation, there are fewer opportunities for spontaneity and connection, and fewer distractions and events to mark the passage of time. Days pass in a numbing blur of sameness.

Working from home this summer, students participating in MIT’s Undergraduate Research Opportunities Program (UROP) did their best to overcome these challenges. Checking in with their advisors over Zoom and Slack, from as far west as Los Angeles, California, and as far east as Skopje, North Macedonia, they completed two dozen projects sponsored by the MIT Quest for Intelligence. Four student projects are highlighted here.

Defending code-processing AI models against adversarial attacks

Computer vision models have famously been fooled into classifying turtles as rifles, and planes as pigs, simply by making subtle changes to the objects and images the models are asked to interpret. But models that analyze computer code, which are a part of recent efforts to build automated tools to design programs efficiently, are also susceptible to so-called adversarial examples.

The lab of Una-May O’Reilly, a principal research scientist at MIT, is focused on finding and fixing the weaknesses in code-processing models that can cause them to misbehave. As automated programming methods become more common, researchers are looking for ways to make this class of deep learning model more secure.

“Even small changes like giving a different name to a variable in a computer program can completely change how the model interprets the program,” says Tamara Mitrovska, a third-year student who worked on a UROP project this summer with Shashank Srikant, a graduate student in O’Reilly’s lab.

The lab is investigating two types of models used to summarize bits of a program as part of a broader effort to use machine learning to write new programs. One such model is Google’s seq2seq, originally developed for machine translation. A second is code2seq, which creates abstract representations of programs. Both are vulnerable to attacks due to a simple programming quirk: captions that let humans know what the code is doing, like the names assigned to variables, give attackers an opening to exploit the model. Simply changing a variable name in a program or adding a print statement can leave the program functioning normally, yet force the model processing it to give an incorrect answer.
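To make the idea concrete, here is a minimal Python sketch of a behavior-preserving edit of the kind described: it renames one variable and adds a print call that writes nothing. The helper names (`RenameVariable`, `perturb`), the sample function, and the replacement name are all hypothetical, and this is not the lab's attack code. It only illustrates that a program's text can change while its results stay the same.

```python
import ast


class RenameVariable(ast.NodeTransformer):
    """Rename every occurrence of one identifier (hypothetical helper)."""

    def __init__(self, old, new):
        self.old, self.new = old, new

    def visit_Name(self, node):
        # Covers reads and writes of the variable.
        if node.id == self.old:
            node.id = self.new
        return node

    def visit_arg(self, node):
        # Covers function parameters with the same name.
        if node.arg == self.old:
            node.arg = self.new
        return node


def perturb(source, old, new):
    """Return `source` with `old` renamed to `new` and a silent print added."""
    tree = RenameVariable(old, new).visit(ast.parse(source))
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef):
            # Printing an empty string with end="" produces no visible output.
            silent_print = ast.parse('print("", end="")').body[0]
            node.body.insert(0, silent_print)
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # ast.unparse requires Python 3.9 or later


original = """
def total(values):
    result = 0
    for v in values:
        result += v
    return result
"""

# A misleading name: the variable holds a sum, not a file name.
perturbed = perturb(original, "result", "filename")
print(perturbed)
```

Both versions compute the same sum, but a model that leans on identifier names as hints may now summarize the function as something to do with files.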

This summer, from her home near Skopje, in North Macedonia, Mitrovska learned how to sift through a database of more than 100,000 programs in Java and Python and modify them algorithmically to try to fool seq2seq and code2seq. “These systems are challenging to implement,” she says. “Finding even the smallest bug can take a significant amount of time. But overall, I’ve been having fun and the project has been a very good learning experience for me.”

One exploit that she uncovered: Both models could be tricked by inserting “print” commands in the programs they process. That exploit, and others discovered by the lab, will be used to update the models to make them more robust.

What everyday adjectives can tell us about human reasoning

Embedded in the simplest of words are assumptions about the world that vary even among closely related languages. Take the word “biggest.” Like other superlatives in English, this adjective has no equivalent in French or Spanish. Speakers simply use the comparative form, “bigger” — plus grand in French or más grande in Spanish — to differentiate among objects of various sizes.

To understand what these words mean and how they are actually used, Helena Aparicio, formerly a postdoc at MIT and now a professor at Cornell University, devised a set of psychology experiments with MIT Associate Professor Roger Levy and Boston University Professor Elizabeth Coppock. Curtis Chen, a second-year student at MIT interested in the four topics that converge in Levy’s lab — computer science, psychology, linguistics, and cognitive science — joined on as a UROP student.

From his home in Hillsborough, New Jersey, Chen orchestrated experiments to identify why English speakers prefer superlatives in some cases and comparatives in others. He found that the more similar in size the objects in a scene were, the more likely his human subjects were to prefer the word “biggest” to describe the largest object in the set. When objects appeared to fall within two clearly defined groups, subjects preferred the less-precise “bigger.” Chen also built an AI model to simulate the inferences made by his human subjects and found that it showed a similar preference for the superlative in ambiguous situations.

Designing a successful experiment can take several tries. To ensure consistency among the shapes that subjects were asked to describe, Chen generated them on the computer using HTML Canvas and JavaScript. “This way, the size differentials were exact, and we could simply report the formula used to make them,” he says.
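As a rough illustration of what generating stimuli from a formula can look like, the sketch below computes a row of circles whose sizes follow a fixed ratio and writes them out as SVG markup. Everything here is an assumption made for the example: the study used HTML Canvas and JavaScript, and the article does not give the formula, so the geometric ratio, the circle shape, and the function names `circle_radii` and `render_svg` are hypothetical.

```python
def circle_radii(base_radius, ratio, count):
    """Radii where each circle is `ratio` times the previous one (assumed formula)."""
    return [base_radius * ratio ** i for i in range(count)]


def render_svg(radii, gap=20):
    """Lay the circles out left to right on a shared baseline; return SVG markup."""
    height = 2 * max(radii) + 2 * gap
    x = gap
    shapes = []
    for r in radii:
        cx = x + r
        cy = height - gap - r  # align the bottoms of all circles
        shapes.append(
            f'<circle cx="{cx:.2f}" cy="{cy:.2f}" r="{r:.2f}" fill="black"/>'
        )
        x += 2 * r + gap
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{x:.2f}" height="{height:.2f}">' + "".join(shapes) + "</svg>"
    )


radii = circle_radii(base_radius=20, ratio=1.25, count=4)
svg = render_svg(radii)
print(radii)
```

Because the sizes come from two numbers, a base radius and a ratio, the whole stimulus set can be reported and regenerated exactly.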

After discovering that some subjects seemed confused by rectangle and line shapes, he replaced them with circles. He also removed the default option on his reporting scale after realizing that some subjects were using it to breeze through the tasks. Finally, he switched to the crowdsourcing platform Prolific after a number of participants on Amazon’s Mechanical Turk failed at tasks designed to ensure they were taking the experiments seriously.

“It was discouraging, but Curtis went through the process of exploring the data and figuring out what was going wrong,” says his mentor, Aparicio.

In the end, he wound up with strong results and promising ideas for follow-up experiments this fall. “There’s still a lot to be done,” he says. “I had a lot of fun cooking up and tweaking the model, designing the experiment, and learning about this deceptively simple puzzle.”

Levy says he looks forward to the results. “Ultimately, this line of inquiry helps us understand how different vocabularies and grammatical resources of English and thousands of other languages support flexible communication by their native speakers,” he says.

Reconstructing real-world scenes from sensor data

AI systems that have become expert at sizing up scenes in photos and video may soon be able to do the same for real-world scenes. It’s a process that involves stitching together snapshots of a scene from varying viewpoints into a coherent picture. The brain performs these calculations effortlessly as we move through the world, but computers require sophisticated algorithms and extensive training.

MIT Associate Professor Justin Solomon focuses on developing methods to help computers understand 3D environments. He and his lab look for new ways to take point cloud data gathered by sensors — essentially, reflections of infrared light bounced off the surfaces of objects — to create a holistic representation of a real-world scene. Three-dimensional scene analysis has many applications in computer graphics, but the one that drove second-year student Kevin Shao to join Solomon’s lab was its potential as a navigation tool for self-driving cars.

“Working on autonomous cars has been a childhood dream for me,” says Shao.

In the first phase of his UROP project, Shao downloaded the most important papers on 3D scene reconstruction and tried to reproduce their results. This improved his knowledge of PyTorch, a Python library that provides tools for training, testing, and evaluating models. It also gave him a deep understanding of the literature. In the second phase of the project, Shao worked with his mentor, PhD student Yue Wang, to improve on existing methods.

“Kevin implemented most of the ideas, and explained in detail why they would or wouldn’t work,” says Wang. “He didn’t give up on an idea until we had a comprehensive analysis of the problem.”

One idea they explored was the use of computer-drawn scenes to train a multi-view registration model. So far, the method works in simulation, but not on real-world scenes. Shao is now trying to incorporate real-world data to bridge the gap, and will continue the work this fall.
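One appeal of computer-generated scenes is that the true alignment between views is known in advance. The sketch below shows this in the simplest possible setting, and it is an assumption-laden stand-in, not the lab's learned model: it builds a random 2D point set, moves it by a known rotation and translation, and recovers that motion with the classical closed-form least-squares solution, assuming point correspondences are given. The function names `transform` and `register` are hypothetical.

```python
import math
import random


def transform(points, angle, tx, ty):
    """Rotate points by `angle` (radians) about the origin, then translate."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def register(source, target):
    """Least-squares rigid motion mapping `source` onto `target`.

    Assumes 2D points and that source[i] corresponds to target[i].
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    qx = sum(q[0] for q in target) / n
    qy = sum(q[1] for q in target) / n

    dot = cross = 0.0
    for (x, y), (u, v) in zip(source, target):
        x, y, u, v = x - sx, y - sy, u - qx, v - qy  # center both sets
        dot += x * u + y * v
        cross += x * v - y * u

    angle = math.atan2(cross, dot)  # rotation that best aligns the centered sets
    c, s = math.cos(angle), math.sin(angle)
    tx = qx - (c * sx - s * sy)  # translation that matches the centroids
    ty = qy - (s * sx + c * sy)
    return angle, tx, ty


random.seed(0)
scene = [(random.uniform(-5, 5), random.uniform(-5, 5)) for _ in range(50)]
second_view = transform(scene, angle=0.3, tx=1.5, ty=-2.0)  # known ground truth

est_angle, est_tx, est_ty = register(scene, second_view)
print(est_angle, est_tx, est_ty)
```

Real sensor data adds noise, missing points, and unknown correspondences, which is part of why a method that succeeds on simulated scenes can struggle on real ones.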

Wang is excited to see the results. “It sometimes takes PhD students a year to have a reasonable result,” he says. “Although we are still in the exploration phase, I think Kevin has made a successful transition from a smart student to a well-qualified researcher.”

When do infants become attuned to speech and music?

The ability to perceive speech and music has been traced to specialized parts of the brain, with infants as young as four months old showing sensitivity to speech-like sounds. MIT Professor Nancy Kanwisher and her lab are investigating how this special ear for speech and music arises in the infant brain.

Somaia Saba, a second-year student at MIT, was introduced to Kanwisher’s research last year in an intro to neuroscience class and immediately wanted to learn more. “The more I read up about cortical development, the more I realized how little we know about the development of the visual and auditory pathways,” she says. “I became very excited and met with [PhD student] Heather Kosakowski, who explained the details of her projects.”

Signing on for a project, Saba plunged into the “deep end” of cortical development research. Initially overwhelmed, she says she gained confidence through regular Zoom meetings with Kosakowski, who helped her to navigate MATLAB and other software for analyzing brain-imaging data. “Heather really helped motivate me to learn these programs quickly, which has also primed me to learn more easily in the future,” she says.

Before the pandemic shut down campus, Kanwisher’s lab collected functional magnetic resonance imaging (fMRI) data from two- to eight-week-old sleeping infants exposed to different sounds. This summer, from her home on Long Island, New York, Saba helped to analyze the data. She is now learning how to process fMRI data for awake infants, looking toward the study’s next phase. “This is a crucial and very challenging task that’s harder than processing child and adult fMRI data,” says Kosakowski. “Discovering how these specialized regions emerge in infants may be the key to unlocking mysteries about the origin of the mind.”

MIT Quest for Intelligence summer UROP projects were funded, in part, by the MIT-IBM Watson AI Lab and by Eric Schmidt, technical advisor to Alphabet Inc., and his wife, Wendy.


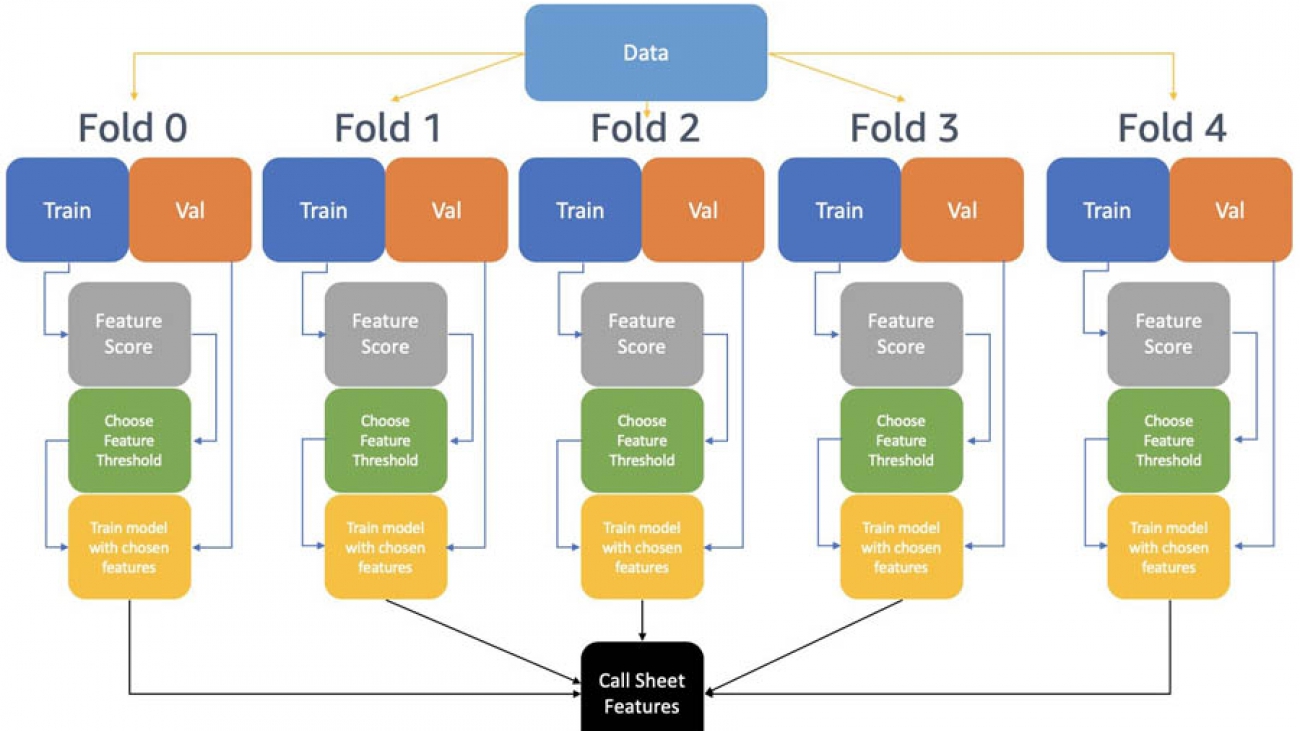

Ninad Kulkarni is a Data Scientist in the Amazon Machine Learning Solutions Lab. He helps customers adopt ML and AI by building solutions to address their business problems. Most recently, he has built predictive models for sports and automotive customers.

Daliana Zhen Liu is a Data Scientist in the Amazon Machine Learning Solutions Lab. She has built ML models to help customers accelerate their business in sports, media and education. She is passionate about introducing data science to more people.

Tianyu Zhang is a Data Scientist in the Amazon Machine Learning Solutions Lab. He helps customers solve business problems by applying ML and AI techniques. Most recently, he has built NLP and predictive models for procurement and sports.
