Content creation is booming at an unprecedented rate.

Whether it’s a 3D artist sculpting a beautiful piece of art or an aspiring influencer editing their next hit TikTok, more than 110 million professional and hobbyist artists worldwide are creating content on laptops and desktops.

NVIDIA Studio is meeting the moment with new GeForce RTX 40 Series GPUs, 110 RTX-accelerated apps, as well as the Studio suite of software, validated systems, dedicated Studio Drivers for creators, and software development kits to help artists create at the speed of their imagination.

On display during today’s NVIDIA GTC keynote, new GeForce RTX 40 Series GPUs and incredible AI tools — powered by the ultra-efficient NVIDIA Ada Lovelace architecture — are making content creation faster and easier than ever.

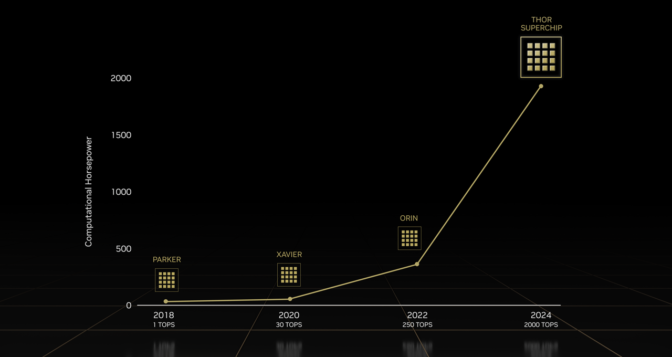

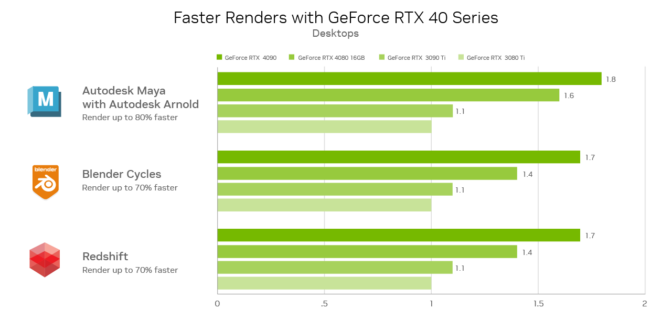

The new GeForce RTX 4090 brings a massive boost in performance, third-generation RT Cores, fourth-generation Tensor Cores, an eighth-generation NVIDIA Dual AV1 Encoder and 24GB of Micron G6X memory capable of reaching 1TB/s bandwidth. The GeForce RTX 4090 is up to 2x faster than a GeForce RTX 3090 Ti in 3D rendering, AI and video exports.
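The ~1TB/s figure follows from a simple formula: peak bandwidth is the per-pin data rate multiplied by the memory bus width, divided by 8 bits per byte. The sketch below assumes a 21 Gbit/s data rate and a 384-bit bus, which come from NVIDIA's published RTX 4090 specifications rather than from this article.

```python
# Peak memory bandwidth = per-pin data rate (Gbit/s) x bus width (bits) / 8 bits per byte.
# ASSUMPTION: the 21 Gbit/s data rate and the 384-bit bus width are taken from NVIDIA's
# published RTX 4090 specifications; neither figure appears in this article.

def peak_bandwidth_gb_per_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Return theoretical peak memory bandwidth in gigabytes per second."""
    return data_rate_gbps * bus_width_bits / 8


rtx_4090 = peak_bandwidth_gb_per_s(data_rate_gbps=21.0, bus_width_bits=384)
print(f"{rtx_4090:.0f} GB/s")  # 21 * 384 / 8 = 1008 GB/s, i.e. roughly 1 TB/s
```

This is a theoretical ceiling; sustained bandwidth in real workloads is lower.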

The GeForce RTX 4080 comes in two variants, 16GB and 12GB, so creators can choose the optimal memory capacity based on their projects’ needs. The GeForce RTX 4080 16GB is up to 1.5x faster than the GeForce RTX 3080 Ti.

The keynote kicked off with a breathtaking demo, NVIDIA Racer RTX, built in NVIDIA Omniverse, an open platform for virtual collaboration and real-time photorealistic simulation. The demo showcases the latest NVIDIA technologies with real-time full ray tracing — in 4K resolution at 60 frames per second (FPS), running with new DLSS 3 technology — and hyperrealistic physics.

It’s a blueprint for the next generation of content creation and game development, where worlds are no longer prebaked, but physically accurate, full simulations.

Take a deep dive into the making of Racer RTX in this special edition of the In the NVIDIA Studio blog series.

In addition to benefits for 3D creators, we’ve introduced near-magical RTX and AI tools for game modders — the creators of the PC gaming community — with the Omniverse application RTX Remix, which has been used to turn RTX ON in Portal with RTX, free downloadable content for Valve’s classic hit, Portal.

Video editors and livestreamers are getting a massive boost, too. New dual encoders cut video export times nearly in half. Livestreamers get encoding benefits from the eighth-generation NVIDIA Encoder, including support for AV1 encoding.

Groundbreaking AI technologies, like AI image generators and new video-editing tools in DaVinci Resolve, are ushering in a new wave of creativity. Beyond-fast GeForce RTX 4090 and 4080 graphics cards will power the next step in the AI revolution, delivering up to a 2x increase in AI performance over the previous generation.

A New Paradigm for 3D

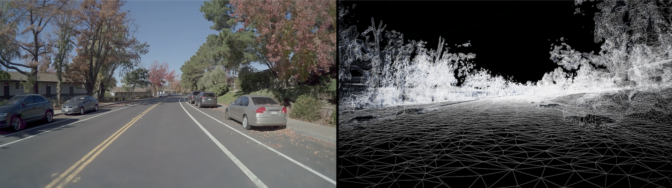

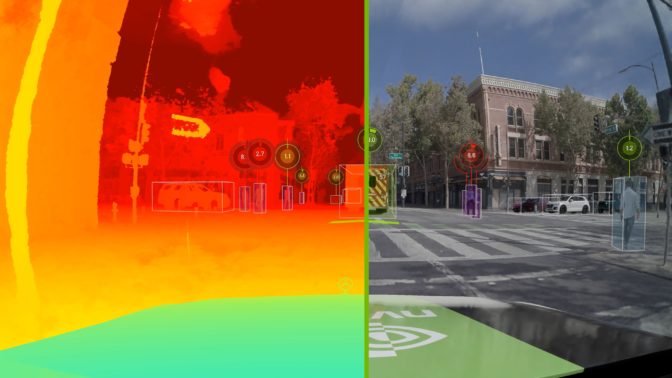

GeForce RTX 40 Series GPUs and DLSS 3 deliver big boosts in performance for 3D artists, so they can create in fully ray-traced environments with accurate physics and realistic materials all in real time, without proxies.

DLSS 3 uses AI-powered fourth-generation RTX Tensor Cores, and a new Optical Flow Accelerator on GeForce RTX 40 Series GPUs, to generate additional frames and dramatically increase FPS. This improves smoothness and speeds up movement in the viewport for those working in 3D applications such as NVIDIA Omniverse, Unity and Unreal Engine 5.
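To see why generating frames raises FPS, consider inserting one synthesized frame between every pair of rendered frames. The sketch below is a deliberately naive stand-in: it simply blends neighbouring frames pixel by pixel, whereas DLSS 3 uses the Optical Flow Accelerator and a neural network. The flat-list `Frame` type and the function names are hypothetical, chosen only for illustration.

```python
# Naive frame interpolation: a simplified stand-in for frame generation.
# NOTE: DLSS 3 uses optical flow and AI, not plain blending; this only illustrates
# how inserting generated frames between rendered ones increases the frame count.

from typing import List

Frame = List[float]  # hypothetical: a frame as a flat list of pixel intensities


def blend(a: Frame, b: Frame, t: float = 0.5) -> Frame:
    """Linearly interpolate two frames pixel by pixel (t=0 gives a, t=1 gives b)."""
    return [(1.0 - t) * pa + t * pb for pa, pb in zip(a, b)]


def with_generated_frames(rendered: List[Frame]) -> List[Frame]:
    """Insert one blended frame between every pair of consecutive rendered frames."""
    if not rendered:
        return []
    out: List[Frame] = []
    for current, nxt in zip(rendered, rendered[1:]):
        out.append(current)
        out.append(blend(current, nxt))
    out.append(rendered[-1])
    return out


rendered = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
displayed = with_generated_frames(rendered)
print(len(displayed))  # 5: three rendered frames plus two generated ones
print(displayed[1])    # [0.5, 1.0]
```

Over a long sequence, N rendered frames become 2N - 1 displayed frames, so the displayed frame rate approaches twice the rendered rate.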

NVIDIA Omniverse, included in the NVIDIA Studio software suite, takes creativity and collaboration even further. A new Omniverse application, RTX Remix, is putting powerful new tools in the hands of game modders.

Modders — the millions of creators in the gaming world who are responsible for driving billions of game mod downloads annually — can use the app to remaster a large library of compatible DirectX 8 and 9 titles, including one of the world’s most modded games, The Elder Scrolls III: Morrowind. As a test, NVIDIA artists updated a Morrowind scene in stunning ray tracing with DLSS 3 and enhanced assets.

It starts with the magical capture tool. With one click, capture geometry, materials, lighting and cameras in the Universal Scene Description format. AI texture tools bring assets up to date, with super resolution and physically based materials. Assets are easily customizable in real time using Omniverse Connectors for Autodesk Maya, Blender and more.

Modders can collaborate in Omniverse connected apps and view changes in RTX Remix to replace assets throughout entire games. RTX Remix features a state-of-the-art ray tracer, DLSS 3 and more, making it easy to reimagine classics with incredible graphics.

RTX mods work alongside existing mods, meaning a large breadth of content on sites like Nexus Mods is ready to be updated with dazzling RTX. Sign up to be notified when RTX Remix is available.

The robust capabilities of this new modding platform were used to create Portal with RTX.

Portal fans can wishlist the Portal with RTX downloadable content on Steam and experience the first-ever RTX Remix-modded game in November.

Fast Forward to the Future of Video Production

Video production is getting a significant boost with GeForce RTX 40 Series GPUs. The feeling of being stuck on pause while waiting for videos to export gets dramatically reduced with the GeForce RTX 40 Series’ new dual encoders, which slash export times nearly in half.

The dual encoders can work in tandem, dividing work automatically between them to double output. They’re also capable of recording up to 8K, 60 FPS content in real time via GeForce Experience and OBS Studio to make stunning gameplay videos.
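The article does not say how the two encoders divide a job. The sketch below assumes the simplest possible scheme: cut the clip into contiguous segments, encode them in parallel, and join the results in order. `encode_segment` is a hypothetical placeholder, not an NVENC API, and a real pipeline would also need each segment to begin on a keyframe so the pieces can be concatenated into a valid stream.

```python
# Conceptual sketch: splitting one export across two encoders.
# ASSUMPTION: encode_segment is a hypothetical stand-in for a hardware encode call;
# this is not how NVIDIA's driver or any specific app actually schedules the work.

from concurrent.futures import ThreadPoolExecutor
from typing import List


def encode_segment(encoder_id: int, segment: List[int]) -> bytes:
    """Hypothetical encoder: records which encoder handled which frame range."""
    return f"enc{encoder_id}:{segment[0]}-{segment[-1]};".encode()


def split(frames: List[int], n_segments: int) -> List[List[int]]:
    """Cut the frame list into at most n_segments contiguous, roughly equal chunks."""
    size = -(-len(frames) // n_segments)  # ceiling division
    return [frames[i:i + size] for i in range(0, len(frames), size)]


def dual_encode(frames: List[int], num_encoders: int = 2) -> bytes:
    if not frames:
        return b""
    segments = split(frames, num_encoders)
    with ThreadPoolExecutor(max_workers=num_encoders) as pool:
        # Each segment goes to its own encoder; map() yields results in segment
        # order, so the outputs can be joined directly into one stream.
        results = pool.map(encode_segment, range(len(segments)), segments)
        return b"".join(results)


print(dual_encode(list(range(10))))  # b'enc0:0-4;enc1:5-9;'
```

With two encoders each handling half the frames in parallel, wall-clock time falls toward half, which matches the "nearly in half" framing above; overhead for splitting and joining keeps it from reaching exactly half.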

Blackmagic Design’s DaVinci Resolve, the popular Voukoder plugin for Adobe Premiere Pro, and Jianying, the top video editing app in China, are all adding AV1 support, as well as dual-encoder support through encode presets. Expect dual-encoder and AV1 availability for these apps in October. And we’re working with popular video-effects app Notch to enable AV1, as well as Topaz to enable AV1 and the dual encoder.

AI tools are changing the speed at which video work gets done. Professionals can now automate tedious tasks, while aspiring filmmakers can add stylish effects with ease. Rotoscoping — the process of highlighting a part of motion footage, typically done frame by frame — can now be done nearly instantaneously with the AI-powered “Object Select Mask” tool in Blackmagic Design’s DaVinci Resolve. With GeForce RTX 40 Series GPUs, this feature is 70% faster than with the previous generation.

“The new GeForce RTX 40 Series GPUs are going to supercharge the speed at which our users are able to produce video through the power of AI and dual encoding — completing their work in a fraction of the time,” said Rohit Gupta, director of software development at Blackmagic Design.

Content creators using GeForce RTX 40 Series GPUs also benefit from speedups to existing integrations in top video-editing apps. GPU-accelerated effects and decoding save immeasurable time by enabling work with ultra-high-resolution RAW footage in real time in REDCINE-X PRO, DaVinci Resolve and Adobe Premiere Pro, without the need for proxies.

AV1 Brings Encoding Benefits to Livestreamers and More

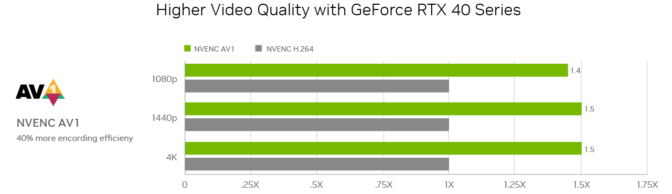

The open video encoding format AV1 is the biggest leap in encoding since H.264 was released nearly two decades ago. The GeForce RTX 40 Series features the eighth-generation NVIDIA video encoder, NVENC for short, now with support for AV1.

For livestreamers, the new AV1 encoder delivers 40% better efficiency. This means livestreams will appear as if bandwidth were increased by 40%, a big boost in image quality. AV1 also adds support for advanced features like high dynamic range.
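The 40% claim can be read two ways, and both are simple arithmetic. The sketch below assumes the baseline is H.264 (the article only says "40% better efficiency") and uses a hypothetical 6,000 kbit/s upload cap; the function names are illustrative.

```python
# Illustrative arithmetic for "40% better efficiency".
# ASSUMPTIONS: the baseline codec is H.264, and 6,000 kbit/s is a hypothetical
# streaming bitrate; real gains vary with content and encoder settings.

EFFICIENCY_GAIN = 0.40


def effective_bitrate_kbps(actual_kbps: float, gain: float = EFFICIENCY_GAIN) -> float:
    """Baseline-codec bitrate that an AV1 stream at actual_kbps would look like."""
    return actual_kbps * (1 + gain)


def bitrate_for_same_quality_kbps(baseline_kbps: float, gain: float = EFFICIENCY_GAIN) -> float:
    """AV1 bitrate needed to match the quality of a baseline stream."""
    return baseline_kbps / (1 + gain)


print(round(effective_bitrate_kbps(6000)))         # 8400: looks like an 8.4 Mbit/s stream
print(round(bitrate_for_same_quality_kbps(6000)))  # 4286: same quality at ~4.3 Mbit/s
```

The first reading matches the article's framing (same bandwidth, better picture); the second shows the equivalent saving when picture quality is held constant.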

NVIDIA collaborated with OBS Studio to add AV1, on top of the recently released HEVC and HDR support, in its next software release, expected in October. OBS is also optimizing encoding pipelines to reduce overhead by 35% for all NVIDIA GPUs. The new release will additionally feature updated NVIDIA Broadcast effects, including noise and room echo removal, as well as improvements to Virtual Background.

We’ve also worked with Discord to enable end-to-end livestreams with AV1. In an update releasing later this year, Discord will enable its users to use AV1 to dramatically improve screen sharing, be it for gameplay, schoolwork or hangouts with friends.

To make the deployment of AV1 seamless for developers, NVIDIA is making it available in the NVIDIA Video Codec SDK 12 in October. Developers can also access NVENC AV1 encoding directly with Microsoft Media Foundation, through Google Chrome and Chromium, as well as in FFmpeg.
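As an example of the FFmpeg route, the sketch below builds (but does not run) a command that encodes with FFmpeg's `av1_nvenc` encoder. It assumes an FFmpeg build compiled with NVENC AV1 support, an RTX 40 Series GPU, and the hypothetical file names `input.mp4` and `output.mkv`; the bitrate and preset values are illustrative, not recommendations.

```python
# Builds, but does not execute, an FFmpeg command that uses the NVENC AV1 encoder.
# ASSUMPTIONS: an FFmpeg build that includes av1_nvenc, an RTX 40 Series GPU, and
# the hypothetical file names input.mp4 / output.mkv.

import shlex
from typing import List


def av1_nvenc_command(src: str, dst: str, bitrate: str = "6M", preset: str = "p5") -> List[str]:
    """Return an argument list for an AV1 hardware encode with FFmpeg."""
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "av1_nvenc",  # NVENC AV1 hardware encoder
        "-preset", preset,    # NVENC presets range from p1 (fastest) to p7 (slowest, highest quality)
        "-b:v", bitrate,      # target video bitrate
        "-c:a", "copy",       # pass the audio stream through without re-encoding
        dst,
    ]


cmd = av1_nvenc_command("input.mp4", "output.mkv")
print(shlex.join(cmd))
# To run the encode: subprocess.run(cmd, check=True)
```

Passing a list rather than a single shell string avoids quoting problems with file names that contain spaces.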

Benefits to livestreamers go beyond AV1 encoding on GeForce RTX 40 Series GPUs. The SDKs that power NVIDIA Broadcast are available to developers, enabling native feature support for Logitech, Corsair and Elgato devices, or advanced workflows in OBS and Notch software. At GTC, NVIDIA updated existing AI-powered effects and introduced new ones:

- The popular Virtual Background feature now includes temporal information, so random objects in the background will no longer create distractions by flashing in and out. It will be available in the next version of OBS Studio.

- Face Expression Estimation is a new feature that allows apps to accurately track facial expressions for face meshes, even with the simplest of webcams. It’s hugely beneficial to VTubers and can be found in the next version of VTube Studio.

- Eye Contact allows creators, like podcasters, to appear as if they’re looking directly at the camera — highly useful for when the user is reading a script or looking away to engage with viewers in the chat window.
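Using temporal information, as the updated Virtual Background does, means a pixel's foreground/background decision depends on recent frames, not just the current one. The sketch below shows one generic way to do that, an exponential moving average of per-pixel foreground probabilities. NVIDIA has not said its effect works this way; the function, the `alpha` and `threshold` parameters, and the toy two-pixel frames are all hypothetical.

```python
# Temporal smoothing of a segmentation mask with an exponential moving average (EMA).
# ASSUMPTION: a generic illustration only; NVIDIA's Virtual Background model and its
# use of temporal information are not described in this article.

from typing import List, Optional


def smooth_masks(frames: List[List[float]], alpha: float = 0.3,
                 threshold: float = 0.5) -> List[List[bool]]:
    """Return a binary foreground mask per frame from smoothed probabilities.

    alpha is the weight given to the newest frame: smaller values suppress
    one-frame flicker more strongly but react more slowly to real motion.
    """
    state: Optional[List[float]] = None
    masks: List[List[bool]] = []
    for probs in frames:
        if state is None:
            state = list(probs)
        else:
            state = [alpha * p + (1 - alpha) * s for p, s in zip(probs, state)]
        masks.append([s >= threshold for s in state])
    return masks


# Pixel 0 is the person (always detected); pixel 1 is a background object that
# the raw per-frame segmentation wrongly flags as foreground for a single frame.
raw = [[0.9, 0.1], [0.9, 0.95], [0.9, 0.1]]
for mask in smooth_masks(raw):
    print(mask)  # [True, False] for every frame: the one-frame spike never flashes in
```

The trade-off is latency: a heavily smoothed mask also takes a few frames to follow genuine movement, which is why a learned temporal model can do better than a fixed average.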

The Making of ‘Racer RTX’

To showcase the technological advancements made possible by GeForce RTX 40 Series GPUs, a global team of NVIDIANs, led by creative director Gabriele Leone, created a stunning new technology demo, Racer RTX.

Leone and team set out to one-up the fully playable, physics-based Marbles at Night RTX demo. With improved GPU performance and breakthrough advancements in NVIDIA Omniverse, Racer RTX lets the user explore different sandbox environments, highlighting the amazing 3D worlds that artists are now able to create.

The demo is a look into the next generation of content creation, “where virtually everything is simulated,” Leone said. “Soon, there’s going to be no need to bake lighting — content will be fully simulated, aided by incredibly powerful GeForce RTX 40 Series GPUs.”

The Omniverse real-time editor empowered the artists on the project to create lights, design materials, rig physics, adjust elements and see updates immediately. They moved objects, added new geometry, changed surface types and tweaked physics.

In a traditional rasterized workflow, levels and lighting need to be baked. And in a typical art environment, only one person can work on a level at a time, leading to painstaking iteration that greatly slows the creation process. These challenges were overcome with Omniverse.

Animating behavior is also a complex and manual process for creators. Using NVIDIA MDL-based materials, Leone turned on PhysX in Omniverse, and each surface and object was automatically textured and modeled to behave as it would in real life. Ram a baseball, for example, and it’ll roll away and interact with other objects until it runs out of momentum.

Working with GeForce RTX 40 Series GPUs and DLSS 3 meant the team could modify its worlds through a fully ray-traced design viewport in 4K at 60 FPS — 4x the FPS of a GeForce RTX 3090 Ti.

And the team is just getting started. The Racer RTX demo will be available for developers and creators to download, explore and tweak in November. Get familiar with Omniverse ahead of the release.

With all these astounding advancements in AI and GPU-accelerated features for the NVIDIA Studio platform, it’s the perfect time to upgrade for gamers, 3D artists, video editors and livestreamers. And battle-tested monthly NVIDIA Studio Drivers help any creator feel like a professional. Download the September Driver.

Stay Tuned for the Latest Studio News

Keep up to date on the latest creator news, creative app updates, AI-powered workflows and featured In the NVIDIA Studio artists by visiting the NVIDIA Studio blog.

GeForce RTX 4090 GPUs launch on Wednesday, Oct. 12, followed by the GeForce RTX 4080 graphics cards in November. Visit GeForce.com for further information.

Follow NVIDIA Studio on Instagram, Twitter and Facebook. Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the NVIDIA Studio newsletter.

The post Creativity Redefined: New GeForce RTX 40 Series GPUs and NVIDIA Studio Updates Accelerate AI Revolution appeared first on NVIDIA Blog.