DeepMind’s latest research at NeurIPS 2022

NeurIPS is the world’s largest conference in artificial intelligence (AI) and machine learning (ML), and we’re proud to support the event as Diamond sponsors, helping foster the exchange of research advances in the AI and ML community. Teams from across DeepMind are presenting 47 papers, including 35 external collaborations in virtual panels and poster sessions.

Turn Black Friday Into Green Thursday With New GeForce NOW Deal

Black Friday is now Green Thursday with a great deal on GeForce NOW this week.

For a limited time, get a free $20-value GeForce NOW membership gift card with every purchase of a $50-value GeForce NOW membership gift card. Treat yourself and a buddy to high-performance cloud gaming — there’s never been a better time to share the love of GeForce NOW.

Plus, kick off a gaming-filled weekend with four new titles joining the GeForce NOW library.

Instant Streaming, Instant Savings

For one week only, from Nov. 23-Dec. 2, purchase a $50-value gift card — good toward a three-month RTX 3080 membership or a six-month Priority membership — and get a bonus $20-value GeForce NOW membership gift card for free, which is good toward a one-month RTX 3080 membership or a two-month Priority membership.

Recipients will be able to redeem these gift cards for the GeForce NOW membership level of their choice. The $20-value free gift card will be delivered as a digital code, providing instant savings for instant streaming.

With a paid membership, gamers get access to stream over 1,400 PC games with longer gaming sessions and real-time ray tracing for supported games across nearly all devices, even those that aren’t game ready. Priority members can stream up to 1080p at 60 frames per second, and RTX 3080 members can stream up to 4K at 60 FPS or 1440p at 120 FPS.

This special offer is valid on $50-value digital or physical gift card purchases, making it a perfect stocking stuffer or last-minute gift. Snag the deal to make Black Friday shopping stress-free this year.

Time to Play

The best way to celebrate a shiny new GeForce NOW membership is with the new games available to stream this GFN Thursday. Start out with Evil West from Focus Entertainment, a vampire-hunting third-person action game set in a fantasy version of the Old West. Play as a lone hunter or co-op with a buddy to explore and eradicate the vampire threat while upgrading weapons and tools along the way.

Check out this week’s new games here:

- Evil West (New release on Steam)

- Ship of Fools (New release on Steam)

- Crysis 2 Remastered (Steam)

- Crysis 3 Remastered (Steam)

Before you dig into your weekend gaming, we’ve got a question for you. Let us know your answer on Twitter or in the comments below.

You’re having a dinner party and can bring any two video game characters…

Who are you inviting?

—

NVIDIA GeForce NOW (@NVIDIAGFN) November 23, 2022

The post Turn Black Friday Into Green Thursday With New GeForce NOW Deal appeared first on NVIDIA Blog.

How JPMorgan Chase & Co. uses AWS DeepRacer events to drive global cloud adoption

This is a guest post by Stephen Carrad, Vice President at JPMorgan Chase & Co.

JPMorgan Chase & Co. started its cloud journey four years ago, building the integrations required to deploy cloud-native applications into the cloud in a resilient and secure manner. In the first year, three applications tentatively dipped their toes into the cloud; today, we have an ambitious cloud-first agenda.

Operating in the cloud requires a change in culture and a fundamental reeducation towards a new normal. An on-premises server is like your car: you own it, power it, maintain it, and upgrade it. In the cloud, a server is like a rideshare: you press a few buttons, the car appears, you use it for a certain time, and when you’ve finished with it you walk away and someone else uses it. To adapt to a cloud-first agenda, our engineers are learning a new operating model, new tools, and new processes.

JPMorgan Chase’s AWS DeepRacer learning program was born in Chicago in 2019. Led by the Chicago Innovation team, it’s designed to upskill our employees in an enjoyable way by allowing them to compete internally with their local peer groups, globally against other cities, and externally against other firms, universities, and individuals. We started with physical tracks in Chicago and London, and now have tracks in most of our 20+ technology centers around the globe, with several racers participating in the DeepRacer Championship Cup at AWS re:Invent.

It started small, but immediately provided value and, more importantly, entertainment to the participants of the program. People who had never used the AWS Management Console before logged on and learned how it worked, played with AWS DeepRacer, and started to write code and learn about reinforcement learning. They also started to collaborate with one another: someone would have an idea to reduce costs or provide visualization of the log analysis, and other people would partner with them to build new tools. The program grew beyond teaching people about AWS products and machine learning into a community of people across the world collaborating, building tools, and creating quizzes. We also have the JPMorgan Chase International Speedway, developed in Tampa, where we host our companywide annual finals.

Our AWS DeepRacer learning program now runs in 20 cities and 3,500 people have participated over the past two years. They have gained knowledge of the AWS console, Python, Amazon SageMaker, Jupyter notebooks, and reinforcement learning. Our biggest success is watching people change roles due to their participation.

We recently introduced the AWS DeepRacer Driving License, so hiring managers can see that applicants have attained a recognized standard. It includes a training curriculum that people can follow that enables them to both be knowledgeable and competitive. They also need to attain a certain lap time to prove they have been able to apply the knowledge they have gained.

JPMorgan Chase is now a cloud-first organization. With the excitement and interest in the Driving License, application teams have started to look towards the cloud and have found they are more likely to have technologists on their team with AWS skills. These individuals have then been able to apply their new skills in their day-to-day work.

In 2021, more than 80,000 people from over 150 countries participated in AWS DeepRacer. As a testament to the work our employees have done with AWS DeepRacer, seven of the 40 racers in AWS’s global championships were JPMorgan Chase technologists. When the dust had settled, our employees topped the podium with first, second, and seventh place finishes. This was a huge achievement against some excellent competitors, and I apologize to anyone sitting near us in the arena at AWS re:Invent for all the shouting and screams of excitement.

This year’s entry to the AWS Championship finals can be achieved by racing on either virtual or physical tracks. We’re looking to get our tracks out and invite our competitors to come and learn, share ideas, enjoy pizza and practice on our tracks. We have also open-sourced two tools that we have created:

- DeepRacer on the Spot – This tool placed third in our Annual Hackathon in Houston. It allows teams to train models on Amazon Elastic Compute Cloud (Amazon EC2) instances using Spot pricing, which can be up to 90% cheaper than training on the console.

- Guru – Developed by one of our participants in London, this log analysis tool provides visualization of what the car is doing on the track at any point and how it is being rewarded.

Racing this year is going to be particularly interesting as we continue to expand our presence with top racers. Yousef, Roger, and Tyler will be trying to knock Sairam off the podium, and a couple of groups of MDs are forming their own teams, so look out for Managing Directions! I would say that my money is on our graduate talent, but that might be career limiting. We look forward to collaborating with our fellow racers on the tools we are releasing and invite you to race on our tracks.

AWS DeepRacer is at the forefront of making us a cloud-ready organization. To learn more about how you can drive collaboration and ML learning like JPMorgan Chase with AWS DeepRacer, join my session on Wednesday, November 30th at 2:30 PM.

About the author

Stephen Carrad is a DevOps Manager at JPMorgan Chase. He also leads the JPMorgan Chase DeepRacer Learning Program to grow his team building skills and support the firm’s widespread public cloud adoption. Outside of work, Stephen enjoys trying to keep up with his teenage children whilst skiing or cycling and coaching his local under-16 rugby team.

Apply fine-grained data access controls with AWS Lake Formation and Amazon EMR from Amazon SageMaker Studio

Amazon SageMaker Studio is a fully integrated development environment (IDE) for machine learning (ML) that enables data scientists and developers to perform every step of the ML workflow, from preparing data to building, training, tuning, and deploying models. Studio comes with built-in integration with Amazon EMR so that data scientists can interactively prepare data at petabyte scale using open-source frameworks such as Apache Spark, Hive, and Presto right from within Studio notebooks. Data is often stored in data lakes managed by AWS Lake Formation, enabling you to apply fine-grained access control through a simple grant or revoke mechanism. We’re excited to announce that Studio now supports applying this fine-grained data access control with Lake Formation when accessing data through Amazon EMR.

Until now, when you ran multiple data processing jobs on an EMR cluster, all the jobs used the same AWS Identity and Access Management (IAM) role for accessing data, namely the cluster’s Amazon Elastic Compute Cloud (Amazon EC2) instance profile. Therefore, to run jobs that needed access to different data sources such as different Amazon Simple Storage Service (Amazon S3) buckets, you had to configure the EC2 instance profile with policies that allowed access to the union of all such data sources. Additionally, to give groups of users different levels of access to data, you had to create multiple separate clusters, one for each group, resulting in operational overhead. Separately, jobs submitted to Amazon EMR from Studio notebooks were unable to apply fine-grained data access control with Lake Formation.

Starting with the release of Amazon EMR 6.9, when you connect to EMR clusters from Studio notebooks, you can visually browse and choose an IAM role, called the runtime IAM role, on the fly. Subsequently, all your Apache Spark, Apache Hive, or Presto jobs created from Studio notebooks access only the data and resources permitted by policies attached to the runtime role. Also, when data is accessed from data lakes managed with Lake Formation, you can enforce table-level and column-level access using policies attached to the runtime role.

With this new capability, multiple Studio users can connect to the same EMR cluster, each using a runtime IAM role scoped with permissions matching their individual level of access to data. Their user sessions are also completely isolated from one another on the shared cluster. With this ability to control fine-grained access to data on the same shared cluster, you can simplify provisioning of EMR clusters, thereby reducing operational overhead and saving costs.

In this post, we demonstrate how to use a Studio notebook to connect to an EMR cluster using runtime roles. We provide a sample Studio Lifecycle Configuration that can help configure the EMR runtime roles that a Studio user profile has access to. Additionally, we manage data access in a data lake via Lake Formation by enforcing row-level and column-level permissions to the EMR runtime roles.

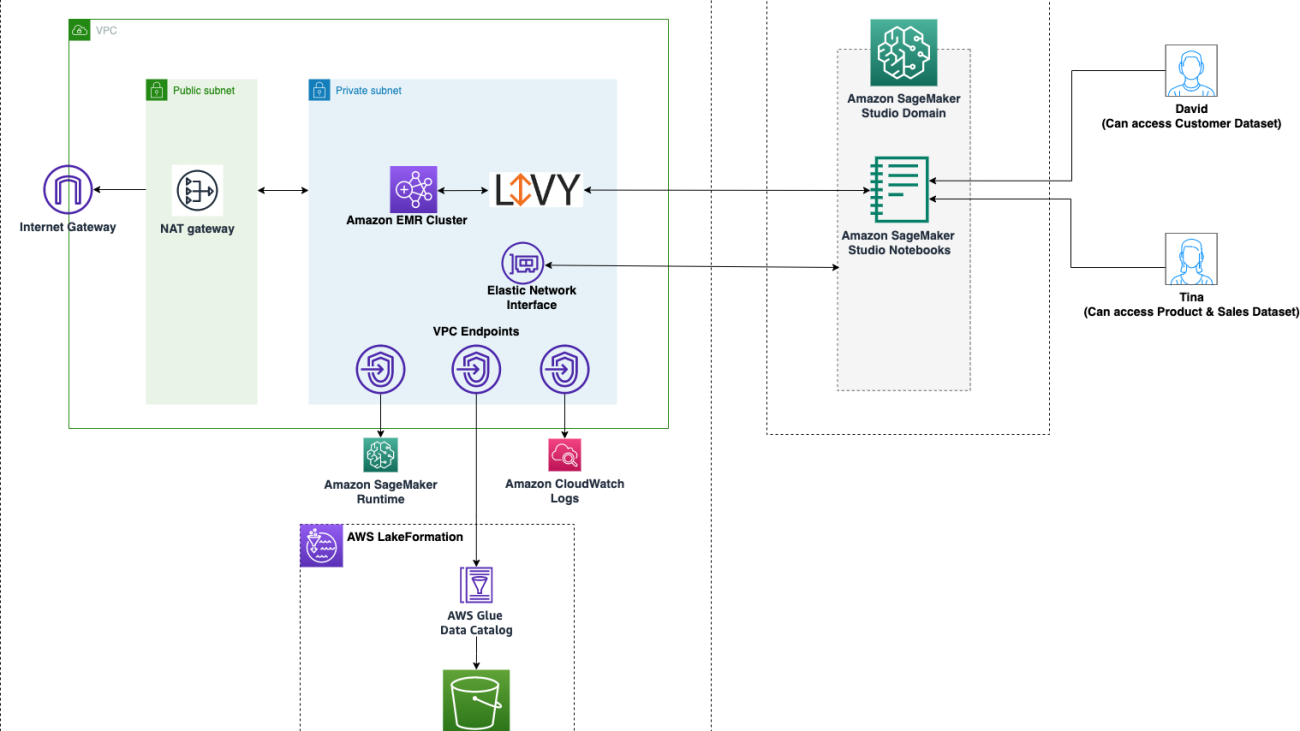

Solution overview

We demonstrate this solution with an end-to-end use case using a sample dataset, the TPC data model. This data represents transaction data for products and includes information such as customer demographics, inventory, web sales, and promotions. To demonstrate fine-grained data access permissions, we consider the following two users:

- David, a data scientist on the marketing team. He is tasked with building a model on customer segmentation, and is only permitted to access non-sensitive customer data.

- Tina, a data scientist on the sales team. She is tasked with building the sales forecast model, and needs access to sales data for the particular region. She is also helping the product team with innovation, and therefore needs access to product data as well.

The architecture is implemented as follows:

- Lake Formation manages the data lake, and the raw data is available in S3 buckets

- Amazon EMR is used to query the data from the data lake and perform data preparation using Spark

- IAM roles are used to manage data access using Lake Formation

- Studio is used as the single visual interface to interactively query and prepare the data

The following diagram illustrates this architecture.

The following sections walk through the steps required to enable runtime IAM roles for Amazon EMR integration with an existing Studio domain. You can use the provided AWS CloudFormation stack in the Deploy the solution section below to set up the architectural components for this solution.

Prerequisites

Before you get started, make sure you have the following prerequisites:

- An AWS account

- An IAM user with administrator access

Set up Amazon EMR with runtime roles

The EMR cluster should be created with IAM runtime roles enabled. For more details on using runtime roles with Amazon EMR, see Configure runtime roles for Amazon EMR steps. Associating runtime roles with EMR clusters is supported in Amazon EMR 6.9 and later. Make sure the following configuration is in place:

- The EMR runtime role’s trust policy should allow the EMR EC2 instance profile to assume the role

- The EMR EC2 instance profile role should be able to assume the EMR runtime roles

- The EMR cluster should be created with encryption in transit
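The first two requirements are ordinary IAM trust and permission policies. As a minimal sketch (not the policies used by the CloudFormation template), the following Python builds both documents with the standard library. The account ID 111122223333 and the role names are placeholders. The EMR documentation describes further actions for some setups, for example sts:SetSourceIdentity when propagating SourceIdentity and sts:TagSession for the Lake Formation integration. This sketch deliberately leaves those out, so check the EMR runtime roles documentation before reusing it.

```python
import json

# Placeholders: replace with your account ID and role names.
INSTANCE_PROFILE_ROLE_ARN = "arn:aws:iam::111122223333:role/EMR_EC2_DefaultRole"
RUNTIME_ROLE_ARNS = [
    "arn:aws:iam::111122223333:role/marketing-data-access-role",
    "arn:aws:iam::111122223333:role/sales-data-access-role",
]


def runtime_role_trust_policy(instance_profile_role_arn: str) -> dict:
    """Trust policy for a runtime role: the EMR EC2 instance profile role may assume it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": instance_profile_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def instance_profile_assume_policy(runtime_role_arns: list) -> dict:
    """Permission policy for the instance profile role: it may assume only these runtime roles."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": list(runtime_role_arns),
            }
        ],
    }


print(json.dumps(runtime_role_trust_policy(INSTANCE_PROFILE_ROLE_ARN), indent=2))
print(json.dumps(instance_profile_assume_policy(RUNTIME_ROLE_ARNS), indent=2))
```

Keeping the two documents separate mirrors the two bullets: the trust policy lives on each runtime role, while the assume-role permission lives on the instance profile role.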

You can optionally choose to pass the SourceIdentity (the Studio user profile name) for monitoring the user resource access. Follow the steps outlined in Monitoring user resource access from Amazon SageMaker Studio to enable SourceIdentity for your Studio domain.

Finally, refer to Prepare Data using Amazon EMR for detailed setup and networking instructions on integrating Studio with EMR clusters.

Create bootstrap action for the cluster

You need to run a bootstrap action on the cluster so that Studio notebooks can connect to EMR through runtime roles. Complete the following steps:

- Download the bootstrap script from s3://emr-data-access-control-<region>/customer-bootstrap-actions/gcsc/replace-rpms.sh, replacing <region> with your region

- Download this RPM file from s3://emr-data-access-control-<region>/customer-bootstrap-actions/gcsc/emr-secret-agent-1.18.0-SNAPSHOT20221121212949.noarch.rpm

- Upload both files to an S3 bucket in your account and region

- When creating your EMR cluster, include the following bootstrap action:

--bootstrap-actions "Path=<S3-URI-of-the-bootstrap-script>,Args=[<S3-URI-of-the-RPM-file>]"
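If you create the cluster programmatically rather than with the AWS CLI, you can express the same bootstrap action as a data structure. The sketch below assumes the boto3 run_job_flow BootstrapActions shape, which is a Name plus a ScriptBootstrapAction with Path and Args. The bucket name, the emr-bootstrap/ key prefix, and the action name replace-rpms are hypothetical.

```python
# Source locations from the steps above; {region} is substituted.
SOURCE_PREFIX = "s3://emr-data-access-control-{region}/customer-bootstrap-actions/gcsc/"
SCRIPT_NAME = "replace-rpms.sh"
RPM_NAME = "emr-secret-agent-1.18.0-SNAPSHOT20221121212949.noarch.rpm"


def source_uris(region: str) -> tuple:
    """S3 URIs of the published bootstrap script and RPM for a region."""
    prefix = SOURCE_PREFIX.format(region=region)
    return prefix + SCRIPT_NAME, prefix + RPM_NAME


def bootstrap_action(bucket: str, key_prefix: str = "emr-bootstrap/") -> dict:
    """Runs your copy of the script with your copy of the RPM as its argument.
    bucket and key_prefix are placeholders for wherever you uploaded the files."""
    script_uri = f"s3://{bucket}/{key_prefix}{SCRIPT_NAME}"
    rpm_uri = f"s3://{bucket}/{key_prefix}{RPM_NAME}"
    return {
        "Name": "replace-rpms",  # hypothetical display name
        "ScriptBootstrapAction": {"Path": script_uri, "Args": [rpm_uri]},
    }


def cli_flag(action: dict) -> str:
    """Renders the same action in the aws emr create-cluster shorthand shown above."""
    sba = action["ScriptBootstrapAction"]
    return f'--bootstrap-actions "Path={sba["Path"]},Args=[{",".join(sba["Args"])}]"'


print(source_uris("us-east-1"))
print(cli_flag(bootstrap_action("my-bucket")))
```

With boto3, the dictionary returned by bootstrap_action would go in the BootstrapActions list passed to run_job_flow.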

Update the Studio execution role

Your Studio user’s execution role needs to be updated to allow the GetClusterSessionCredentials API action. Add the following policy to the Studio execution role, replacing the resource with the cluster ARNs you wish to allow your users to connect to:
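A minimal sketch of such a policy, generated with Python's standard library, follows. The cluster ARN is a placeholder in the standard arn:aws:elasticmapreduce:<region>:<account>:cluster/<cluster-id> format. The Sid is an arbitrary label.

```python
import json

# Placeholder ARN; format is arn:aws:elasticmapreduce:<region>:<account>:cluster/<cluster-id>.
CLUSTER_ARNS = ["arn:aws:elasticmapreduce:us-east-1:111122223333:cluster/j-EXAMPLE12345"]


def session_credentials_policy(cluster_arns: list) -> dict:
    """Lets the Studio execution role call GetClusterSessionCredentials on the listed clusters."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowEMRRuntimeRoleUsage",  # arbitrary statement ID
                "Effect": "Allow",
                "Action": "elasticmapreduce:GetClusterSessionCredentials",
                "Resource": list(cluster_arns),
            }
        ],
    }


print(json.dumps(session_credentials_policy(CLUSTER_ARNS), indent=2))
```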

You can also use conditions to control which EMR execution roles can be used by the Studio execution role.
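As we understand the EMR documentation, one way to express such a condition is the elasticmapreduce:ExecutionRoleArn condition key. The sketch below allows GetClusterSessionCredentials only for matching runtime role ARNs. Verify the key name against the current EMR IAM reference, and treat the ARNs as placeholders.

```python
import json


def restricted_session_credentials_policy(cluster_arns: list, runtime_role_arns: list) -> dict:
    """Allows GetClusterSessionCredentials only when the requested runtime role matches.
    The condition key name is an assumption to verify against the EMR IAM reference."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "elasticmapreduce:GetClusterSessionCredentials",
                "Resource": list(cluster_arns),
                "Condition": {
                    "StringLike": {"elasticmapreduce:ExecutionRoleArn": list(runtime_role_arns)}
                },
            }
        ],
    }


policy = restricted_session_credentials_policy(
    ["arn:aws:elasticmapreduce:us-east-1:111122223333:cluster/j-EXAMPLE12345"],  # placeholder
    ["arn:aws:iam::111122223333:role/*-data-access-role"],  # placeholder; StringLike allows * wildcards
)
print(json.dumps(policy, indent=2))
```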

Alternatively, you can attach a policy such as the following, which restricts access to clusters based on resource tags. This allows for tag-based access control, so you can use the same policy statements across user roles instead of explicitly adding cluster ARNs.
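Here is a sketch of the tag-based variant. It assumes the elasticmapreduce:ResourceTag/<key> condition key. The account ID and the team=marketing tag on the clusters are hypothetical.

```python
import json


def tag_based_session_credentials_policy(account_id: str, tag_key: str, tag_value: str) -> dict:
    """Allows GetClusterSessionCredentials on any cluster in the account tagged tag_key=tag_value.
    The ResourceTag condition key follows our reading of the EMR IAM reference; verify it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "elasticmapreduce:GetClusterSessionCredentials",
                "Resource": f"arn:aws:elasticmapreduce:*:{account_id}:cluster/*",
                "Condition": {
                    "StringEquals": {f"elasticmapreduce:ResourceTag/{tag_key}": tag_value}
                },
            }
        ],
    }


# Hypothetical account and tag: clusters tagged team=marketing.
print(json.dumps(tag_based_session_credentials_policy("111122223333", "team", "marketing"), indent=2))
```

Because the statement names no specific cluster, the same policy can be attached to every user role that should reach clusters carrying that tag.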

Set up role configurations through Studio LCC

By default, the Studio UI uses the Studio execution role to connect to the EMR cluster. If your user can access multiple roles, they can update the EMR cluster connection commands with the role ARN they want to pass as a runtime role. For a better user experience, you can set up a configuration file in the user’s home directory on Amazon Elastic File System (Amazon EFS), which automatically informs the Studio UI of the roles that are available to connect for the user. You can also automate this process through Studio Lifecycle Configurations. We provide the following sample Lifecycle Configuration script to configure the roles:
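As we understand it, such a script writes a small JSON file into the user's home directory that lists the runtime role ARNs per account. Treat the directory name, the file name, and the emr-execution-role-arns key below as assumptions to check against the sample Lifecycle Configuration. A Lifecycle Configuration is normally a shell script; the following sketch expresses the same logic in Python.

```python
import json
import os
import tempfile

# Assumed location and schema, based on our reading of the sample Lifecycle
# Configuration; verify both before relying on them.
CONFIG_SUBDIR = ".sagemaker-analytics-configuration-meta"
CONFIG_FILE = "emr-discovery-iam-role-arns-DONOTDELETE.json"


def write_role_config(home_dir: str, account_id: str, role_arns: list) -> str:
    """Writes the runtime role ARNs the Studio UI should offer, keyed by account ID."""
    directory = os.path.join(home_dir, CONFIG_SUBDIR)
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, CONFIG_FILE)
    config = {"emr-execution-role-arns": {account_id: list(role_arns)}}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=2)
    return path


if __name__ == "__main__":
    # Dry run into a temporary directory; in Studio, home_dir would be the user's
    # home directory on EFS (for example /home/sagemaker-user).
    with tempfile.TemporaryDirectory() as tmp:
        print(write_role_config(tmp, "111122223333", [
            "arn:aws:iam::111122223333:role/sales-data-access-role",
            "arn:aws:iam::111122223333:role/electronics-data-access-role",
        ]))
```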

Connect to clusters from the Studio UI

After the role and Lifecycle Configuration scripts are set up, you can launch the Studio UI and connect to the clusters when you create a new notebook using any of the following kernels:

- DataScience – Python 3 kernel

- DataScience 2.0 – Python 3 kernel

- DataScience 3.0 – Python 3 kernel

- SparkAnalytics 1.0 – SparkMagic and PySpark kernels

- SparkAnalytics 2.0 – SparkMagic and PySpark kernels

- SparkMagic – PySpark kernel

Note: The Studio UI for connecting to EMR clusters using runtime roles works only with JupyterLab version 3. See Jupyter versioning for details on upgrading to JupyterLab 3.

Deploy the solution

To test out the solution end to end, we provide a CloudFormation template that sets up the services included in the architecture, to enable repeatable deployments. This template creates the following resources:

- An S3 bucket for the data lake.

- An EMR cluster with EMR runtime roles enabled.

- IAM roles for accessing the data in the data lake, with fine-grained permissions:

  - Marketing-data-access-role
  - Sales-data-access-role
  - Electronics-data-access-role

- A Studio domain and two user profiles. The Studio execution roles for the users allow the users to assume their corresponding EMR runtime roles.

- A Lifecycle Configuration to enable the selection of the role to use for the EMR connection.

- A Lake Formation database populated with the TPC data.

- Networking resources required for the setup, such as VPC, subnets, and security groups.

To deploy the solution, complete the following steps:

- Choose Launch Stack to launch the CloudFormation stack:

- Enter a stack name and provide the following parameters:

- An idle timeout for the EMR cluster (to avoid paying for the cluster when it’s not being used).

- An S3 URI for the EMR encryption key. Follow the steps in the EMR documentation to generate a key and zip file specific to your region. If you are deploying in US East (N. Virginia), remember to use CN=*.ec2.internal, as specified in that documentation. Make sure to upload the zip file to an S3 bucket in the same region as your CloudFormation stack deployment.

- Select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create stack.
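When generating the encryption key for the stack parameters, the certificate's wildcard CN has to cover the cluster nodes' private DNS names. EC2 private DNS names end in ec2.internal in US East (N. Virginia) and in <region>.compute.internal in other regions. Under that assumption, a small helper picks the CN for each region:

```python
def certificate_common_name(region: str) -> str:
    """Wildcard CN covering EC2 private DNS names in the given region."""
    if region == "us-east-1":
        # US East (N. Virginia) private DNS names look like ip-10-0-0-1.ec2.internal
        return "*.ec2.internal"
    # Other regions look like ip-10-0-0-1.<region>.compute.internal
    return f"*.{region}.compute.internal"


for r in ("us-east-1", "us-east-2", "us-west-2"):
    print(r, certificate_common_name(r))
```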

Once the stack is created, allow Amazon EMR to query Lake Formation by updating the External Data Filtering settings in Lake Formation. Follow the instructions provided in the Lake Formation guide, choose ‘Amazon EMR’ for Session tag values, and enter your AWS account ID under AWS account IDs.
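If you prefer to script this step, the Lake Formation API exposes the same settings. The sketch below assumes boto3's lakeformation client and the DataLakeSettings fields AllowExternalDataFiltering, ExternalDataFilteringAllowList, and AuthorizedSessionTagValueList. Because put_data_lake_settings replaces the whole settings object, the sketch reads the current settings first and only adds to them. Verify the field names against the Lake Formation API reference before relying on this.

```python
import copy


def enable_emr_external_filtering(settings: dict, account_id: str) -> dict:
    """Returns a copy of DataLakeSettings with external filtering enabled for Amazon EMR."""
    updated = copy.deepcopy(settings)
    updated["AllowExternalDataFiltering"] = True
    allow_list = updated.setdefault("ExternalDataFilteringAllowList", [])
    entry = {"DataLakePrincipalIdentifier": account_id}
    if entry not in allow_list:
        allow_list.append(entry)
    tag_values = updated.setdefault("AuthorizedSessionTagValueList", [])
    if "Amazon EMR" not in tag_values:
        tag_values.append("Amazon EMR")
    return updated


def apply_settings(lakeformation_client, account_id: str) -> dict:
    """Read-modify-write, since put_data_lake_settings replaces the whole settings object.
    lakeformation_client is e.g. boto3.client("lakeformation")."""
    current = lakeformation_client.get_data_lake_settings()["DataLakeSettings"]
    updated = enable_emr_external_filtering(current, account_id)
    lakeformation_client.put_data_lake_settings(DataLakeSettings=updated)
    return updated
```

Keeping the transformation in a pure function makes it idempotent: running it twice does not duplicate the account ID or the session tag value.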

Test role-based data access

With the infrastructure in place, you’re ready to test out the fine-grained data access for the two Studio users. To recap, the user David should only be able to access non-sensitive customer data. Tina can access data in two tables: sales and product information. Let’s test each user profile.

David’s user profile

To test your data access with David’s user profile, complete the following steps:

- Log in to the AWS console.

- From the created Studio domain, launch Studio from the user profile david-non-sensitive-customer.

- In the Studio UI, start a notebook with any of the supported kernels, e.g., the SparkMagic image with the PySpark kernel.

The cluster is pre-created in the account.

- Connect to the cluster by choosing Cluster in your notebook and choosing the cluster <StackName>-emr-cluster. In the role selector pop-up, choose <StackName>-marketing-data-access-role.

- Choose Connect.

This will automatically create a notebook cell with magic commands to connect to the cluster. Wait for the cell to execute and the connection to be established before proceeding with the remaining steps.

Now let’s query the marketing table from the notebook.

- In a new cell, enter the following query and run the cell:

After the cell runs successfully, you can view the first 10 records in the table. Note that you can’t view the customers’ names, because column-level filtering limits this user to reading non-sensitive data.
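To make the effect of these permissions concrete, here is a toy Python model of column-level and row-level filtering. It only illustrates the outcome a user sees, not how Lake Formation and Amazon EMR enforce permissions. The column names are borrowed from the TPC-DS customer table for illustration, and the sample rows are hypothetical.

```python
def apply_data_filter(rows, allowed_columns, row_predicate=lambda row: True):
    """Toy model of a data filter: keep rows passing the predicate, then project allowed columns."""
    return [
        {col: row[col] for col in allowed_columns if col in row}
        for row in rows
        if row_predicate(row)
    ]


# Hypothetical sample rows using TPC-DS customer column names.
customers = [
    {"c_customer_id": "A1", "c_first_name": "Ana", "c_last_name": "Diaz", "c_birth_country": "PERU"},
    {"c_customer_id": "B2", "c_first_name": "Ben", "c_last_name": "Ito", "c_birth_country": "JAPAN"},
]

# Column-level filtering: name columns are excluded, as with David's marketing role.
print(apply_data_filter(customers, ["c_customer_id", "c_birth_country"]))

# Row-level filtering on top: only customers from one country.
print(apply_data_filter(customers, ["c_customer_id"], lambda r: r["c_birth_country"] == "JAPAN"))
```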

Let’s test to make sure David can’t read any sensitive customer data.

- In a new cell, run the following query:

This cell should throw an Access Denied error.

Tina’s user profile

Tina’s Studio execution role allows her to access the Lake Formation database using two EMR execution roles. This is achieved by listing the role ARNs in a configuration file in Tina’s home directory. These roles can be set using Studio Lifecycle Configurations to persist the roles across app restarts. To test Tina’s access, complete the following steps:

- Launch Studio from the user profile tina-sales-electronics.

It’s a good practice to close any previous Studio sessions on your browser when switching user profiles. There can only be one active Studio user session at a time.

- In the Studio UI, start a notebook with any of the supported kernels, e.g., SparkMagic image with the PySpark kernel.

- Connect to the cluster by choosing Cluster in your notebook and choosing the cluster <StackName>-emr-cluster.

- Choose Connect.

Because Tina’s profile is set up with multiple EMR roles, you’re prompted with a UI drop-down that lets you choose which role to connect with.

The Studio execution role is also available in the drop-down, because by default the Studio UI connects to clusters using the user’s execution role.

You can directly provide Lake Formation access to the user’s execution role as well.

After you choose a role and connect, Studio automatically creates a notebook cell with magic commands to connect to the cluster, using the chosen role.

Now let’s query the sales table from the notebook.

- In a new cell, enter the following query and run the cell:

After the cell runs successfully, you can view the first 10 records in the table.

Now let’s try accessing the product table.

- Choose Cluster again, and choose the cluster.

- In the role prompt pop-up, choose the role <StackName>-electronics-data-access-role and connect to the cluster.

- After you’re connected successfully to the cluster with the electronics data access role, create a new cell and run the following query:

This cell should complete successfully, and you can view the first 10 records in the products table.

With a single Studio user profile, you have now successfully assumed multiple roles, and queried data in Lake Formation using multiple roles, without the need for restarting the notebooks or creating additional clusters. Now that you’re able to access the data using appropriate roles, you can interactively explore the data, visualize the data, and prepare data for training. You also used different user profiles to provide your users in different teams access to a specific table or columns and rows, without the need for additional clusters.

Clean up

When you’re finished experimenting with this solution, clean up your resources:

- Shut down the Studio apps for the user profiles. See Shut Down and Update SageMaker Studio and Studio Apps for instructions. Ensure that all apps are deleted before deleting the stack.

The EMR cluster will be automatically terminated after the idle timeout period elapses.

- Delete the EFS volume created for the domain. You can find the EFS volume attached to the domain with a DescribeDomain API call.

- Make sure to empty the S3 buckets created by this stack.

- Delete the stack from the AWS CloudFormation console.
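For the EFS clean-up step, here is a sketch of the DescribeDomain lookup with boto3. It assumes a SageMaker client and your domain ID, which is a placeholder below. The DescribeDomain response includes a HomeEfsFileSystemId field. The client is passed in so the parsing logic can be tested without AWS access.

```python
def home_efs_id(describe_domain_response: dict) -> str:
    """Extracts the domain's home EFS file system ID from a DescribeDomain response."""
    return describe_domain_response["HomeEfsFileSystemId"]


def find_domain_efs(sagemaker_client, domain_id: str) -> str:
    """Calls DescribeDomain (client is e.g. boto3.client("sagemaker")) and returns the EFS ID."""
    return home_efs_id(sagemaker_client.describe_domain(DomainId=domain_id))


# Example with a placeholder domain ID:
# find_domain_efs(boto3.client("sagemaker"), "d-xxxxxxxxxxxx")
```

Amazon EFS does not delete a file system that still has mount targets, so remove those first when deleting the volume.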

Conclusion

This post showed you how you can use runtime roles to connect Studio with Amazon EMR to apply fine-grained data access control with Lake Formation. We also demonstrated how multiple Studio users can connect to the same EMR cluster, each using a runtime IAM role scoped with permissions matching their individual level of access to data. We detailed the steps required to manually set up the integration, and provided a CloudFormation template to set up the infrastructure end to end. This feature is available in the following AWS regions: Europe (Paris), US East (N. Virginia and Ohio), and US West (Oregon). The CloudFormation template will deploy in US East (N. Virginia and Ohio) and US West (Oregon).

To learn more about using EMR with SageMaker Studio, visit Prepare Data using Amazon EMR. We encourage you to try out this new functionality, and connect with the Machine Learning & AI community if you have any questions or feedback!

About the authors

Durga Sury is an ML Solutions Architect on the Amazon SageMaker Service SA team. She is passionate about making machine learning accessible to everyone. In her 3 years at AWS, she has helped set up AI/ML platforms for enterprise customers. When she isn’t working, she loves motorcycle rides, mystery novels, and hikes with her four-year-old husky.

Sriharsh Adari is a Senior Solutions Architect at Amazon Web Services (AWS), where he helps customers work backwards from business outcomes to develop innovative solutions on AWS. Over the years, he has helped multiple customers with data platform transformations across industry verticals. His core areas of expertise include technology strategy, data analytics, and data science. In his spare time, he enjoys playing tennis, binge-watching TV shows, and playing tabla.

Maira Ladeira Tanke is an ML Specialist Solutions Architect at AWS. With a background in data science, she has 9 years of experience architecting and building ML applications with customers across industries. As a technical lead, she helps customers accelerate their achievement of business value through emerging technologies and innovative solutions. In her free time, Maira enjoys traveling and spending time with her family someplace warm.

Sumedha Swamy is a Principal Product Manager at Amazon Web Services. He leads the SageMaker Studio team to build it into the IDE of choice for interactive data science and data engineering workflows. He has spent the past 15 years building customer-obsessed consumer and enterprise products using machine learning. In his free time, he likes photographing the amazing geology of the American Southwest.

Jun Lyu is a Software Engineer on the SageMaker Notebooks team. He has a Master’s degree in engineering from Duke University. He has been working for Amazon since 2015 and has contributed to AWS services like Amazon Machine Learning, Amazon SageMaker Notebooks, and Amazon SageMaker Studio. In his spare time, he enjoys spending time with his family, reading, cooking, and playing video games.

AWS Cloud technology for near-real-time cardiac anomaly detection using data from wearable devices

Cardiovascular diseases (CVDs) are the number one cause of death globally: more people die each year from CVDs than from any other cause.

The COVID-19 pandemic made organizations change healthcare delivery to reduce staff contact with sick people and the overall pressure on the healthcare system. Cloud technology enables organizations to deliver telehealth solutions that monitor and detect conditions that can put patient health at risk.

In this post, we present an AWS architecture that processes live electrocardiogram (ECG) feeds from common wearable devices, analyzes the data, and provides near-real-time information via a web dashboard. If a potentially critical condition is detected, it sends real-time alerts to subscribed individuals.

Solution overview

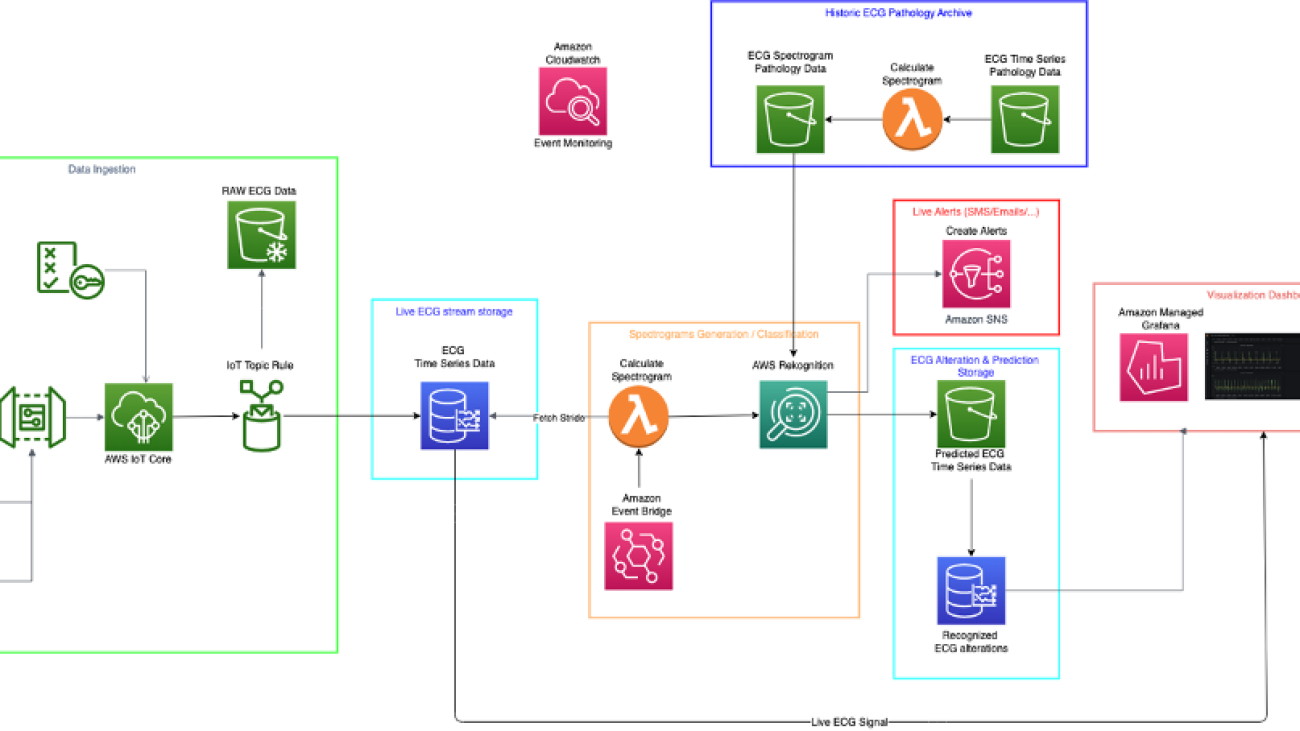

The architecture is divided into six layers:

- Data ingestion

- Live ECG stream storage

- ECG data processing

- Historic ECG pathology archive

- Live alerts

- Visualization dashboard

The following diagram shows the high-level architecture.

In the following sections, we discuss each layer in more detail.

Data ingestion

The data ingestion layer uses AWS IoT Core as the connection point between the remote wearable sensors and the AWS Cloud architecture, which stores, transforms, and analyzes the live feeds acquired from those devices and presents insights from them.

When data from the remote wearable devices reaches AWS IoT Core, it can be routed to other AWS services using an AWS IoT rule and its associated actions.

In the proposed architecture, we use one rule and one action. The rule extracts data from the raw stream using a simple SQL statement, as shown in the following AWS IoT Core rule definition:

SELECT device_id, ecg, ppg, bpm, timestamp() AS timestamp FROM 'dt/sensor/#'

The action writes the data extracted by the rule into an Amazon Timestream database.
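To make the rule's effect concrete, the following Python sketch emulates locally what the rule does to a single message: it checks the MQTT topic against the dt/sensor/# filter and keeps only the selected fields, adding a millisecond timestamp as timestamp() does. The payload shape (one JSON object with device_id, ecg, ppg, and bpm, published to a topic such as dt/sensor/<device_id>) is an assumption for illustration; the post doesn't document the device message format.

```python
import json
import time
from typing import Optional

# Fields projected by the rule's SELECT clause
SELECTED_FIELDS = ("device_id", "ecg", "ppg", "bpm")


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a filter that may use + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent level and everything below it
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


def apply_rule(topic: str, payload: bytes, now_ms: Optional[int] = None) -> Optional[dict]:
    """Local emulation of: SELECT device_id, ecg, ppg, bpm, timestamp() AS timestamp FROM 'dt/sensor/#'."""
    if not topic_matches("dt/sensor/#", topic):
        return None  # the rule is not triggered by other topics
    message = json.loads(payload)
    # In this sketch, fields missing from the message are simply left out
    row = {field: message[field] for field in SELECTED_FIELDS if field in message}
    # timestamp() returns the current time in milliseconds since the Unix epoch (UTC)
    row["timestamp"] = now_ms if now_ms is not None else int(time.time() * 1000)
    return row
```

Under these assumptions, a message published to dt/sensor/dev-1 comes out as the four selected fields plus a timestamp, while any extra fields sent by the device are dropped before the Timestream action sees them.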

For more information on how to implement workloads using AWS IoT Core, refer to Implementing time-critical cloud-to-device IoT message patterns on AWS IoT Core.

Live ECG stream storage

Live data arriving from connected ECG sensors is immediately stored in Timestream, which is purpose-built to store time series data.

From Timestream, data is periodically extracted into shards and subsequently processed by AWS Lambda to generate spectrograms and by Amazon Rekognition to perform ECG spectrogram classification.

You can create and manage a Timestream database via the AWS Management Console, from the AWS Command Line Interface (AWS CLI), or via API calls.
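As an example of the API route, the following sketch creates a database and a table with the timestream-write client from the AWS SDK for Python (Boto3). The database and table names and the retention periods are hypothetical; the post doesn't state them. The client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
from typing import Any

# Hypothetical names; the post doesn't give the database or table it uses
DATABASE = "ecg_monitoring"
TABLE = "ecg_live"


def table_spec(memory_hours: int = 24, magnetic_days: int = 30) -> dict:
    """Arguments for create_table: hot (memory store) and cold (magnetic store) retention."""
    return {
        "DatabaseName": DATABASE,
        "TableName": TABLE,
        "RetentionProperties": {
            "MemoryStoreRetentionPeriodInHours": memory_hours,
            "MagneticStoreRetentionPeriodInDays": magnetic_days,
        },
    }


def create_storage(client: Any, memory_hours: int = 24, magnetic_days: int = 30) -> None:
    """Create the database and the table using a Boto3 timestream-write client."""
    client.create_database(DatabaseName=DATABASE)
    client.create_table(**table_spec(memory_hours, magnetic_days))
```

In practice you would call create_storage(boto3.client("timestream-write")). Recent records, such as the live strides the processing layer reads, are served from the memory store for the configured number of hours before moving to the lower-cost magnetic store.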

On the Timestream console, you can observe and monitor various database metrics, as shown in the following screenshot.

In addition, you can run various queries against a given database.
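For example, the Lambda function in the processing layer needs the most recent minute of ECG samples for a device. The following sketch builds such a query and runs it with the Boto3 timestream-query client, following NextToken until all result pages are read. The data model is an assumption: device_id stored as a dimension, each ECG sample stored as a measure named ecg, and hypothetical database and table names.

```python
from typing import Any, List, Tuple

# Hypothetical names; the post doesn't give the database or table it uses
DATABASE = "ecg_monitoring"
TABLE = "ecg_live"


def last_minute_query(device_id: str) -> str:
    """Timestream SQL for the latest 1-minute ECG stride of one device, oldest sample first."""
    safe_id = device_id.replace("'", "''")  # escape quotes inside the SQL string literal
    return (
        "SELECT time, measure_value::double AS ecg "
        f'FROM "{DATABASE}"."{TABLE}" '
        f"WHERE device_id = '{safe_id}' AND measure_name = 'ecg' "
        "AND time > ago(1m) ORDER BY time ASC"
    )


def fetch_stride(client: Any, device_id: str) -> List[Tuple[str, float]]:
    """Run the query with a Boto3 timestream-query client and collect (time, value) pairs."""
    rows: List[Tuple[str, float]] = []
    request = {"QueryString": last_minute_query(device_id)}
    while True:
        page = client.query(**request)
        for row in page["Rows"]:
            time_cell, ecg_cell = row["Data"]  # one cell per selected column
            rows.append((time_cell["ScalarValue"], float(ecg_cell["ScalarValue"])))
        token = page.get("NextToken")
        if not token:
            return rows
        request["NextToken"] = token  # results can span several pages
```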

ECG data processing

The processing layer is composed of Amazon EventBridge, Lambda, and Amazon Rekognition.

The core of the detection is the ability to create spectrograms from a time series stride. Lambda transforms the incoming live ECG stream into spectrograms, and Amazon Rekognition Custom Labels classifies them. The Custom Labels model is trained with an archive of spectrograms generated from time series strides of ECG data from patients affected by various pathologies.
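The post doesn't publish how the spectrograms are computed. A common approach, shown here as a minimal NumPy sketch, is a short-time Fourier transform: slide a Hann window along the stride, take the FFT of each windowed frame, and express the power in decibels. The 250 Hz sampling rate, 256-sample window, and 128-sample hop are assumptions, not values from the post.

```python
import numpy as np

FS_HZ = 250.0  # assumed ECG sampling rate


def spectrogram_db(signal, window_size: int = 256, hop: int = 128) -> np.ndarray:
    """Hann-windowed STFT power in dB, shape (window_size // 2 + 1, n_frames).

    Rows are frequency bins from 0 Hz up to FS_HZ / 2; columns are time frames.
    """
    x = np.asarray(signal, dtype=float)
    if x.size < window_size:
        raise ValueError("stride is shorter than one analysis window")
    x = x - x.mean()  # remove the DC offset so it doesn't dominate the lowest bin
    window = np.hanning(window_size)
    n_frames = 1 + (x.size - window_size) // hop
    frames = np.stack([x[i * hop : i * hop + window_size] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    return 10.0 * np.log10(power.T + 1e-12)  # small floor avoids log10(0)


def bin_frequencies(window_size: int = 256, fs: float = FS_HZ) -> np.ndarray:
    """Center frequency in Hz of each spectrogram row."""
    return np.fft.rfftfreq(window_size, d=1.0 / fs)
```

With these parameters, a 1-minute stride (15,000 samples) yields a 129 x 116 matrix with a frequency resolution of about 0.98 Hz. The matrix still has to be rendered as an image, because Rekognition Custom Labels works on JPEG or PNG files.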

EventBridge event details

With EventBridge, it’s possible to create event-driven applications at scale across AWS.

For near-real-time ECG analysis, EventBridge is used to create an event (SpectrogramPeriodicGeneration) that periodically triggers a Lambda function, which generates spectrograms from the raw ECG data and sends a request to Amazon Rekognition to analyze them for signs of anomalies.

The following screenshot shows the configuration details of the SpectrogramPeriodicGeneration event.
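As a sketch of an equivalent configuration created with Boto3, the following code assumes SpectrogramPeriodicGeneration is a scheduled rule that fires once a minute, to match the 1-minute stride; the interval, target ID, and permission statement ID are assumptions. Rate expressions use the singular unit for a value of 1, and EventBridge also needs a resource-based permission to invoke the function.

```python
from typing import Any

RULE_NAME = "SpectrogramPeriodicGeneration"


def schedule_expression(minutes: int) -> str:
    """EventBridge rate expression; a value of 1 takes the singular unit."""
    if minutes < 1:
        raise ValueError("rate expressions need a positive whole number")
    unit = "minute" if minutes == 1 else "minutes"
    return f"rate({minutes} {unit})"


def create_schedule(events: Any, lambda_client: Any, function_arn: str, minutes: int = 1) -> str:
    """Create or update the scheduled rule, target the function, and allow EventBridge to invoke it."""
    rule = events.put_rule(
        Name=RULE_NAME,
        ScheduleExpression=schedule_expression(minutes),
        State="ENABLED",
    )
    events.put_targets(
        Rule=RULE_NAME,
        Targets=[{"Id": "generate-spectrograms", "Arn": function_arn}],  # hypothetical target ID
    )
    # Resource-based policy on the function so events.amazonaws.com can call it
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId="allow-spectrogram-schedule",  # hypothetical; must be unique per function
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    return rule["RuleArn"]
```

In practice the clients would be boto3.client("events") and boto3.client("lambda").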

Lambda function details

The Lambda function GenerateSpectrogramsFromTimeSeries, written entirely in Python, acts as the orchestrator of the steps needed to classify an ECG spectrogram. It's a crucial piece of the processing layer that detects whether an incoming ECG signal shows signs of possible anomalies.

The Lambda function has three main purposes:

- Fetch a 1-minute stride from the live ECG stream

- Generate spectrograms from it

- Initiate an Amazon Rekognition job to perform classification of the generated spectrograms
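The post doesn't include the function's source. The following minimal sketch shows only the orchestration of these three steps: for each device it fetches a stride, renders a spectrogram image, and classifies it, skipping devices that didn't send a full minute of data. The three step functions are hypothetical and injected, so in a deployment they could be backed by a Timestream query, an image encoder, and a Rekognition call; the device list and minimum sample count are also assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence


@dataclass
class Pipeline:
    """The three steps of the function; all three callables are hypothetical placeholders."""

    fetch_stride: Callable[[str], Sequence[float]]          # device_id -> last minute of ECG samples
    render_spectrogram: Callable[[Sequence[float]], bytes]  # samples -> PNG image bytes
    classify: Callable[[bytes], Dict[str, Any]]             # PNG -> {"label": ..., "confidence": ...}

    def run(self, device_ids: List[str], min_samples: int) -> List[Dict[str, Any]]:
        results = []
        for device_id in device_ids:
            samples = self.fetch_stride(device_id)
            if len(samples) < min_samples:
                # Incomplete window (for example, the device was offline): don't classify a partial trace
                results.append({"device_id": device_id, "status": "insufficient_data"})
                continue
            image = self.render_spectrogram(samples)
            verdict = self.classify(image)
            results.append({"device_id": device_id, "status": "classified", **verdict})
        return results


def make_handler(pipeline: Pipeline, device_ids: List[str], min_samples: int):
    """Build the Lambda entry point around a configured pipeline."""

    def lambda_handler(event, context):
        # The scheduled EventBridge event only triggers the run; it carries no ECG data
        return {"results": pipeline.run(device_ids, min_samples)}

    return lambda_handler
```

At an assumed 250 Hz sampling rate, a full stride is 15,000 samples, so min_samples=15000 would skip any device with gaps in the last minute.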

Amazon Rekognition details

To detect whether anomalies are present, the ECG analysis classifies spectrograms generated from 1-minute-long strides of the ECG trace.

To accomplish this classification job, we use Rekognition Custom Labels to train a model capable of identifying different cardiac pathologies found in spectrograms generated from ECG traces of people with various cardiac conditions.
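Once such a model is trained and running, classifying a spectrogram is a single DetectCustomLabels call. The following sketch sends the PNG bytes to the model, keeps the highest-confidence label, and publishes an Amazon SNS alert, one way to implement the live alerts layer, when that label isn't the normal class. The label names, the 70 percent confidence threshold, and the message format are assumptions; the clients are injected (in practice boto3.client("rekognition") and boto3.client("sns")).

```python
import json
from typing import Any, Dict, Optional

NORMAL_LABEL = "normal"  # hypothetical folder/label name for healthy traces


def classify_spectrogram(rekognition: Any, model_arn: str, png: bytes,
                         min_confidence: float = 70.0) -> Optional[Dict[str, Any]]:
    """Return the highest-confidence custom label for one spectrogram, or None if none passes."""
    response = rekognition.detect_custom_labels(
        ProjectVersionArn=model_arn,
        Image={"Bytes": png},
        MinConfidence=min_confidence,  # labels below this confidence (0-100) are not returned
    )
    labels = response.get("CustomLabels", [])
    if not labels:
        return None
    best = max(labels, key=lambda label: label["Confidence"])
    return {"label": best["Name"], "confidence": best["Confidence"]}


def alert_if_anomalous(sns: Any, topic_arn: str, device_id: str,
                       verdict: Optional[Dict[str, Any]]) -> bool:
    """Publish an SNS alert when the top label is not the normal class; return True if sent."""
    if verdict is None or verdict["label"] == NORMAL_LABEL:
        return False
    sns.publish(
        TopicArn=topic_arn,
        Subject=f"Possible ECG anomaly on device {device_id}"[:99],  # SNS subjects must stay under 100 characters
        Message=json.dumps({"device_id": device_id, **verdict}),
    )
    return True
```

Whether an inconclusive result (no label above the threshold) should also raise an alert is a design decision; this sketch doesn't alert on it.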

To start using Rekognition Custom Labels, we need to specify the locations of the datasets, which contain the data that Amazon Rekognition uses for labeling, training, and validation.

Inside the defined datasets, you can see more details that Amazon Rekognition has extracted from the given Amazon Simple Storage Service (Amazon S3) bucket.

From this page, we can see the labels that Amazon Rekognition has automatically generated by matching the folder names present in the S3 bucket.

In addition, Amazon Rekognition provides a preview of the labeled images.

The following screenshot shows the details of the S3 bucket used by Amazon Rekognition.
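Because the labels come from folder names, it can help to check the bucket layout before creating the dataset. The following sketch derives each object's label from the folder that directly contains it and counts images per label, which exposes class imbalance between pathologies early. The example keys and label names are hypothetical; in practice you could list keys with the Boto3 list_objects_v2 paginator.

```python
import posixpath
from collections import Counter
from typing import Dict, Iterable

IMAGE_SUFFIXES = (".png", ".jpg", ".jpeg")


def label_for_key(key: str) -> str:
    """Label = name of the folder that directly contains the image."""
    folder = posixpath.basename(posixpath.dirname(key))
    if not folder:
        raise ValueError(f"{key!r} is not inside a folder, so it would get no label")
    return folder


def label_counts(keys: Iterable[str]) -> Dict[str, int]:
    """Count images per label, ignoring objects that aren't JPEG or PNG files."""
    return dict(Counter(label_for_key(k) for k in keys if k.lower().endswith(IMAGE_SUFFIXES)))
```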

After you have defined a dataset, you can use Rekognition Custom Labels to train on your data, and deploy the model for inference afterwards.
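A trained model has to be started before it can serve inference requests, and it's billed for as long as it runs. The following sketch starts a model version with Boto3, waits with the project_version_running waiter until it's ready, and shows the matching stop call. The ARNs, version name, and single inference unit are placeholders.

```python
from typing import Any

MIN_INFERENCE_UNITS = 1  # placeholder; more units increase throughput and cost


def start_model(client: Any, project_arn: str, version_arn: str, version_name: str) -> None:
    """Start a trained Custom Labels model version and block until it is RUNNING."""
    client.start_project_version(
        ProjectVersionArn=version_arn,
        MinInferenceUnits=MIN_INFERENCE_UNITS,
    )
    # Built-in Boto3 waiter that polls DescribeProjectVersions for the given version
    client.get_waiter("project_version_running").wait(
        ProjectArn=project_arn, VersionNames=[version_name]
    )


def stop_model(client: Any, version_arn: str) -> str:
    """Stop the model version to end inference charges; returns the reported status."""
    return client.stop_project_version(ProjectVersionArn=version_arn)["Status"]
```

With client = boto3.client("rekognition"), the start call returns quickly and the waiter does the polling until the model is running.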

The Rekognition Custom Labels project pages provide details about each available project and a tree representation of all the models that have been created.

Moreover, the project pages show the status of the available models and their performance.

You can choose the models on the Rekognition Custom Labels console to see more details of each model, as shown in the following screenshot.

Further details about the model are available on the Model details tab.

For further assessment of model performance, choose View test results. The following screenshot shows an example of test results from our model.
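The test results report per-label metrics such as precision, recall, and F1 score. To make those numbers easier to interpret, the following sketch computes them from (true label, predicted label) pairs with the standard definitions. Rekognition's own evaluation also involves a confidence threshold, so its figures may not match this simplified calculation exactly; the labels used in the test are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_label_metrics(pairs: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Precision, recall, and F1 per label from (true_label, predicted_label) pairs."""
    tp, fp, fn = defaultdict(int), defaultdict(int), defaultdict(int)
    labels = set()
    for true, pred in pairs:
        labels.update((true, pred))
        if true == pred:
            tp[true] += 1
        else:
            fp[pred] += 1  # predicted this label, but it was wrong
            fn[true] += 1  # the real label was missed
    metrics = {}
    for label in sorted(labels):
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics
```

For an alerting use case, recall on the pathological labels deserves particular attention, because a missed anomaly is usually costlier than a false alarm.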

Historic ECG pathology archive

The pathology archive layer receives raw time series ECG data, generates spectrograms, and stores those in a separate bucket that you can use to further train your Rekognition Custom Labels model.

Visualization dashboard

The live visualization dashboard, responsible for showing real-time ECGs, PPG traces, and live BPM, is implemented via Amazon Managed Grafana.

Amazon Managed Grafana is a fully managed service that is developed together with Grafana Labs and based on open-source Grafana. Enhanced with enterprise capabilities, Amazon Managed Grafana makes it easy for you to visualize and analyze your operational data at scale.

On the Amazon Managed Grafana console, you can create workspaces, which are logically isolated Grafana servers where you can create Grafana dashboards. The following screenshot shows a list of our available workspaces.

You can also set up the following on the Workspaces page:

- Users

- User groups

- Data sources

- Notification channels

The following screenshot shows the details of our workspace and its users.

In the Data sources section, we can review and set up all the source feeds that populate the Grafana dashboard.

In the following screenshot, we have three sources configured.

You can choose Configure in Grafana for a given data source to configure it directly in Amazon Managed Grafana.

You're asked to authenticate within Grafana. For this post, we use AWS IAM Identity Center (successor to AWS Single Sign-On).

After you log in, you’re redirected to the Grafana home page. From here, you can view your saved dashboards. As shown in the following screenshot, we can access our Heart Health Monitoring dashboard.

You can also choose the gear icon in the navigation pane and perform various configuration tasks on the following:

- Data sources

- Users

- User groups

- Statistics

- Plugins

- Preferences

For example, if we choose Data Sources, we can add sources that will feed Grafana dashboards.

The following screenshot shows the configuration panel for Timestream.

If we navigate to the Heart Health Monitoring dashboard from the Grafana home page, we can review the widgets and information included within the dashboard.
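The widgets are backed by queries against the Timestream data source. The following sketch generates query text for a raw trace panel (ECG or PPG) and for an averaged BPM panel. It relies on macros of the Grafana Timestream plugin ($__database, $__table, $__timeFilter), which Grafana expands from the data source settings and the dashboard time range; the device dashboard variable, measure names, and bin size are assumptions.

```python
import re

# Timestream interval literal: a whole number followed by a unit, such as 10s, 1m, or 1h
_INTERVAL = re.compile(r"\d+(ns|us|ms|s|m|h|d)")


def trace_query(measure: str) -> str:
    """Raw samples of one measure (for example ecg or ppg) for a time-series panel."""
    if not re.fullmatch(r"[a-z_]+", measure):
        raise ValueError("unexpected measure name")
    return (
        "SELECT time, measure_value::double AS value "
        "FROM $__database.$__table "
        f"WHERE $__timeFilter AND measure_name = '{measure}' "
        "AND device_id = '${device}' "  # hypothetical dashboard variable
        "ORDER BY time"
    )


def bpm_query(bin_size: str = "10s") -> str:
    """Average BPM per time bucket, for a stat or time-series panel."""
    if not _INTERVAL.fullmatch(bin_size):
        raise ValueError("bin size must look like 10s, 1m, or 1h")
    return (
        f"SELECT bin(time, {bin_size}) AS t, avg(measure_value::double) AS bpm "
        "FROM $__database.$__table "
        "WHERE $__timeFilter AND measure_name = 'bpm' AND device_id = '${device}' "
        f"GROUP BY bin(time, {bin_size}) ORDER BY t"
    )
```

Averaging BPM into bins keeps the stat panel stable, while the ECG and PPG panels show raw samples so the waveform shape stays visible.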

Conclusion

With services like AWS IoT Core, Lambda, Amazon SNS, and Grafana, you can build a serverless solution with an event-driven architecture capable of ingesting, processing, and monitoring data streams in near-real time from a variety of devices, including common wearable devices.

In this post, we explored one way to ingest, process, and monitor live ECG data generated from a synthetic wearable device in order to provide insights to help determine if anomalies might be present in the ECG data stream.

To learn more about how AWS is accelerating innovation in healthcare, visit AWS for Health.

About the Author

Benedetto Carollo is a Senior Solution Architect for medical imaging and healthcare at Amazon Web Services in Europe, Middle East, and Africa. His work focuses on helping medical imaging and healthcare customers solve business problems by leveraging technology. Benedetto has over 15 years of experience in technology and medical imaging and has worked for companies such as Canon Medical Research and Vital Images. Benedetto received his MSc in Software Engineering, summa cum laude, from the University of Palermo, Italy.
