Posted by Malaya Jules, Program Manager, Google

This week, the premier conference on Empirical Methods in Natural Language Processing (EMNLP 2022) is being held in Abu Dhabi, United Arab Emirates. We are proud to be a Diamond Sponsor of EMNLP 2022, with Google researchers contributing at all levels. This year we are presenting over 50 papers and are actively involved in 10 different workshops and tutorials.

If you’re registered for EMNLP 2022, we hope you’ll visit the Google booth to learn more about the exciting work across various topics, including language interactions, causal inference, question answering and more. Take a look below to learn more about the Google research being presented at EMNLP 2022 (Google affiliations in bold).

Committees

Organizing Committee includes: Eunsol Choi, Imed Zitouni

Senior Program Committee includes: Don Metzler, Eunsol Choi, Bernd Bohnet, Slav Petrov, Kenton Lee

Papers

Transforming Sequence Tagging Into A Seq2Seq Task

Karthik Raman, Iftekhar Naim, Jiecao Chen, Kazuma Hashimoto, Kiran Yalasangi, Krishna Srinivasan

On the Limitations of Reference-Free Evaluations of Generated Text

Daniel Deutsch, Rotem Dror, Dan Roth

Chunk-based Nearest Neighbor Machine Translation

Pedro Henrique Martins, Zita Marinho, André F. T. Martins

Evaluating the Impact of Model Scale for Compositional Generalization in Semantic Parsing

Linlu Qiu*, Peter Shaw, Panupong Pasupat, Tianze Shi, Jonathan Herzig, Emily Pitler, Fei Sha, Kristina Toutanova

MasakhaNER 2.0: Africa-centric Transfer Learning for Named Entity Recognition

David Ifeoluwa Adelani, Graham Neubig, Sebastian Ruder, Shruti Rijhwani, Michael Beukman, Chester Palen-Michel, Constantine Lignos, Jesujoba O. Alabi, Shamsuddeen H. Muhammad, Peter Nabende, Cheikh M. Bamba Dione, Andiswa Bukula, Rooweither Mabuya, Bonaventure F. P. Dossou, Blessing Sibanda, Happy Buzaaba, Jonathan Mukiibi, Godson Kalipe, Derguene Mbaye, Amelia Taylor, Fatoumata Kabore, Chris Chinenye Emezue, Anuoluwapo Aremu, Perez Ogayo, Catherine Gitau, Edwin Munkoh-Buabeng, Victoire M. Koagne, Allahsera Auguste Tapo, Tebogo Macucwa, Vukosi Marivate, Elvis Mboning, Tajuddeen Gwadabe, Tosin Adewumi, Orevaoghene Ahia, Joyce Nakatumba-Nabende, Neo L. Mokono, Ignatius Ezeani, Chiamaka Chukwuneke, Mofetoluwa Adeyemi, Gilles Q. Hacheme, Idris Abdulmumin, Odunayo Ogundepo, Oreen Yousuf, Tatiana Moteu Ngoli, Dietrich Klakow

T-STAR: Truthful Style Transfer using AMR Graph as Intermediate Representation

Anubhav Jangra, Preksha Nema, Aravindan Raghuveer

Exploring Document-Level Literary Machine Translation with Parallel Paragraphs from World Literature

Katherine Thai, Marzena Karpinska, Kalpesh Krishna, Bill Ray, Moira Inghilleri, John Wieting, Mohit Iyyer

ASQA: Factoid Questions Meet Long-Form Answers

Ivan Stelmakh*, Yi Luan, Bhuwan Dhingra, Ming-Wei Chang

Efficient Nearest Neighbor Search for Cross-Encoder Models using Matrix Factorization

Nishant Yadav, Nicholas Monath, Rico Angell, Manzil Zaheer, Andrew McCallum

CPL: Counterfactual Prompt Learning for Vision and Language Models

Xuehai He, Diji Yang, Weixi Feng, Tsu-Jui Fu, Arjun Akula, Varun Jampani, Pradyumna Narayana, Sugato Basu, William Yang Wang, Xin Eric Wang

Correcting Diverse Factual Errors in Abstractive Summarization via Post-Editing and Language Model Infilling

Vidhisha Balachandran, Hannaneh Hajishirzi, William Cohen, Yulia Tsvetkov

Dungeons and Dragons as a Dialog Challenge for Artificial Intelligence

Chris Callison-Burch, Gaurav Singh Tomar, Lara J Martin, Daphne Ippolito, Suma Bailis, David Reitter

Exploring Dual Encoder Architectures for Question Answering

Zhe Dong, Jianmo Ni, Daniel M. Bikel, Enrique Alfonseca, Yuan Wang, Chen Qu, Imed Zitouni

RED-ACE: Robust Error Detection for ASR using Confidence Embeddings

Zorik Gekhman, Dina Zverinski, Jonathan Mallinson, Genady Beryozkin

Improving Passage Retrieval with Zero-Shot Question Generation

Devendra Sachan, Mike Lewis, Mandar Joshi, Armen Aghajanyan, Wen-tau Yih, Joelle Pineau, Luke Zettlemoyer

MuRAG: Multimodal Retrieval-Augmented Generator for Open Question Answering over Images and Text

Wenhu Chen, Hexiang Hu, Xi Chen, Pat Verga, William Cohen

Decoding a Neural Retriever’s Latent Space for Query Suggestion

Leonard Adolphs, Michelle Chen Huebscher, Christian Buck, Sertan Girgin, Olivier Bachem, Massimiliano Ciaramita, Thomas Hofmann

Hyper-X: A Unified Hypernetwork for Multi-Task Multilingual Transfer

Ahmet Üstün, Arianna Bisazza, Gosse Bouma, Gertjan van Noord, Sebastian Ruder

Offer a Different Perspective: Modeling the Belief Alignment of Arguments in Multi-party Debates

Suzanna Sia, Kokil Jaidka, Hansin Ahuja, Niyati Chhaya, Kevin Duh

Meta-Learning Fast Weight Language Model

Kevin Clark, Kelvin Guu, Ming-Wei Chang, Panupong Pasupat, Geoffrey Hinton, Mohammad Norouzi

Large Dual Encoders Are Generalizable Retrievers

Jianmo Ni, Chen Qu, Jing Lu, Zhuyun Dai, Gustavo Hernández Ábrego, Vincent Y. Zhao, Yi Luan, Keith B. Hall, Ming-Wei Chang, Yinfei Yang

CONQRR: Conversational Query Rewriting for Retrieval with Reinforcement Learning

Zeqiu Wu*, Yi Luan, Hannah Rashkin, David Reitter, Hannaneh Hajishirzi, Mari Ostendorf, Gaurav Singh Tomar

Overcoming Catastrophic Forgetting in Zero-Shot Cross-Lingual Generation

Tu Vu*, Aditya Barua, Brian Lester, Daniel Cer, Mohit Iyyer, Noah Constant

RankGen: Improving Text Generation with Large Ranking Models

Kalpesh Krishna, Yapei Chang, John Wieting, Mohit Iyyer

UnifiedSKG: Unifying and Multi-Tasking Structured Knowledge Grounding with Text-to-Text Language Models

Tianbao Xie, Chen Henry Wu, Peng Shi, Ruiqi Zhong, Torsten Scholak, Michihiro Yasunaga, Chien-Sheng Wu, Ming Zhong, Pengcheng Yin, Sida I. Wang, Victor Zhong, Bailin Wang, Chengzu Li, Connor Boyle, Ansong Ni, Ziyu Yao, Dragomir Radev, Caiming Xiong, Lingpeng Kong, Rui Zhang, Noah A. Smith, Luke Zettlemoyer, Tao Yu

M2D2: A Massively Multi-domain Language Modeling Dataset

Machel Reid, Victor Zhong, Suchin Gururangan, Luke Zettlemoyer

Tomayto, Tomahto. Beyond Token-level Answer Equivalence for Question Answering Evaluation

Jannis Bulian, Christian Buck, Wojciech Gajewski, Benjamin Boerschinger, Tal Schuster

COCOA: An Encoder-Decoder Model for Controllable Code-switched Generation

Sneha Mondal, Ritika Goyal, Shreya Pathak, Preethi Jyothi, Aravindan Raghuveer

Crossmodal-3600: A Massively Multilingual Multimodal Evaluation Dataset (see blog post)

Ashish V. Thapliyal, Jordi Pont-Tuset, Xi Chen, Radu Soricut

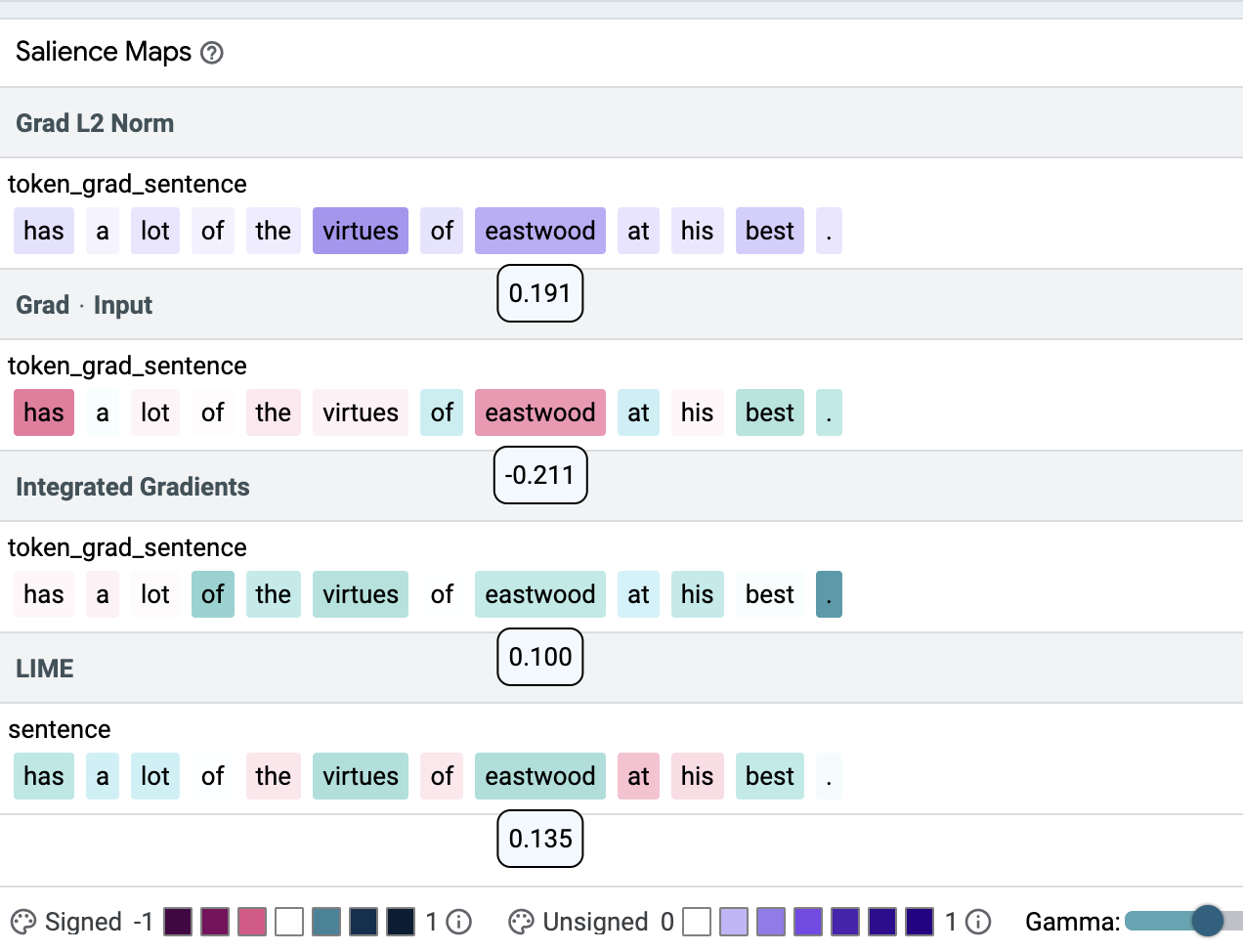

“Will You Find These Shortcuts?” A Protocol for Evaluating the Faithfulness of Input Salience Methods for Text Classification (see blog post)

Jasmijn Bastings, Sebastian Ebert, Polina Zablotskaia, Anders Sandholm, Katja Filippova

Intriguing Properties of Compression on Multilingual Models

Kelechi Ogueji*, Orevaoghene Ahia, Gbemileke A. Onilude, Sebastian Gehrmann, Sara Hooker, Julia Kreutzer

FETA: A Benchmark for Few-Sample Task Transfer in Open-Domain Dialogue

Alon Albalak, Yi-Lin Tuan, Pegah Jandaghi, Connor Pryor, Luke Yoffe, Deepak Ramachandran, Lise Getoor, Jay Pujara, William Yang Wang

SHARE: a System for Hierarchical Assistive Recipe Editing

Shuyang Li, Yufei Li, Jianmo Ni, Julian McAuley

Context Matters for Image Descriptions for Accessibility: Challenges for Referenceless Evaluation Metrics

Elisa Kreiss, Cynthia Bennett, Shayan Hooshmand, Eric Zelikman, Meredith Ringel Morris, Christopher Potts

Just Fine-tune Twice: Selective Differential Privacy for Large Language Models

Weiyan Shi, Ryan Patrick Shea, Si Chen, Chiyuan Zhang, Ruoxi Jia, Zhou Yu

Findings of EMNLP

Leveraging Data Recasting to Enhance Tabular Reasoning

Aashna Jena, Manish Shrivastava, Vivek Gupta, Julian Martin Eisenschlos

QUILL: Query Intent with Large Language Models using Retrieval Augmentation and Multi-stage Distillation

Krishna Srinivasan, Karthik Raman, Anupam Samanta, Lingrui Liao, Luca Bertelli, Michael Bendersky

Adapting Multilingual Models for Code-Mixed Translation

Aditya Vavre, Abhirut Gupta, Sunita Sarawagi

Table-To-Text generation and pre-training with TABT5

Ewa Andrejczuk, Julian Martin Eisenschlos, Francesco Piccinno, Syrine Krichene, Yasemin Altun

Stretching Sentence-pair NLI Models to Reason over Long Documents and Clusters

Tal Schuster, Sihao Chen, Senaka Buthpitiya, Alex Fabrikant, Donald Metzler

Knowledge-grounded Dialog State Tracking

Dian Yu*, Mingqiu Wang, Yuan Cao, Izhak Shafran, Laurent El Shafey, Hagen Soltau

Sparse Mixers: Combining MoE and Mixing to Build a More Efficient BERT

James Lee-Thorp, Joshua Ainslie

EdiT5: Semi-Autoregressive Text Editing with T5 Warm-Start

Jonathan Mallinson, Jakub Adamek, Eric Malmi, Aliaksei Severyn

Autoregressive Structured Prediction with Language Models

Tianyu Liu, Yuchen Eleanor Jiang, Nicholas Monath, Ryan Cotterell, Mrinmaya Sachan

Faithful to the Document or to the World? Mitigating Hallucinations via Entity-Linked Knowledge in Abstractive Summarization

Yue Dong*, John Wieting, Pat Verga

Investigating Ensemble Methods for Model Robustness Improvement of Text Classifiers

Jieyu Zhao*, Xuezhi Wang, Yao Qin, Jilin Chen, Kai-Wei Chang

Topic Taxonomy Expansion via Hierarchy-Aware Topic Phrase Generation

Dongha Lee, Jiaming Shen, Seonghyeon Lee, Susik Yoon, Hwanjo Yu, Jiawei Han

Benchmarking Language Models for Code Syntax Understanding

Da Shen, Xinyun Chen, Chenguang Wang, Koushik Sen, Dawn Song

Large-Scale Differentially Private BERT

Rohan Anil, Badih Ghazi, Vineet Gupta, Ravi Kumar, Pasin Manurangsi

Towards Tracing Knowledge in Language Models Back to the Training Data

Ekin Akyurek, Tolga Bolukbasi, Frederick Liu, Binbin Xiong, Ian Tenney, Jacob Andreas, Kelvin Guu

Predicting Long-Term Citations from Short-Term Linguistic Influence

Sandeep Soni, David Bamman, Jacob Eisenstein

Workshops

Widening NLP

Organizers include: Shaily Bhatt, Sunipa Dev, Isidora Tourni

The First Workshop on Ever Evolving NLP (EvoNLP)

Organizers include: Bhuwan Dhingra

Invited Speakers include: Eunsol Choi, Jacob Eisenstein

Massively Multilingual NLU 2022

Invited Speakers include: Sebastian Ruder

Second Workshop on NLP for Positive Impact

Invited Speakers include: Milind Tambe

BlackboxNLP – Workshop on analyzing and interpreting neural networks for NLP

Organizers include: Jasmijn Bastings

MRL: The 2nd Workshop on Multi-lingual Representation Learning

Organizers include: Orhan Firat, Sebastian Ruder

Novel Ideas in Learning-to-Learn through Interaction (NILLI)

Program Committee includes: Yu-Siang Wang

Tutorials

Emergent Language-Based Coordination In Deep Multi-Agent Systems

Marco Baroni, Roberto Dessi, Angeliki Lazaridou

Tutorial on Causal Inference for Natural Language Processing

Zhijing Jin, Amir Feder, Kun Zhang

Modular and Parameter-Efficient Fine-Tuning for NLP Models

Sebastian Ruder, Jonas Pfeiffer, Ivan Vulic

* Work done while at Google
