Boosting RAG-based intelligent document assistants using entity extraction, SQL querying, and agents with Amazon Bedrock

Conversational AI has come a long way in recent years thanks to the rapid developments in generative AI, especially the performance improvements of large language models (LLMs) introduced by training techniques such as instruction fine-tuning and reinforcement learning from human feedback. When prompted correctly, these models can carry coherent conversations without any task-specific training data. However, they can’t generalize well to enterprise-specific questions because, to generate an answer, they rely on the public data they were exposed to during pre-training. Such data often lacks the specialized knowledge contained in internal documents available in modern businesses, which is typically needed to get accurate answers in domains such as pharmaceutical research, financial investigation, and customer support.

To create AI assistants that are capable of having discussions grounded in specialized enterprise knowledge, we need to connect these powerful but generic LLMs to internal knowledge bases of documents. This method of enriching the LLM generation context with information retrieved from your internal data sources is called Retrieval Augmented Generation (RAG), and produces assistants that are domain specific and more trustworthy, as shown by Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Another driver behind RAG’s popularity is its ease of implementation and the existence of mature vector search solutions, such as those offered by Amazon Kendra (see Amazon Kendra launches Retrieval API) and Amazon OpenSearch Service (see k-Nearest Neighbor (k-NN) search in Amazon OpenSearch Service), among others.

However, the popular RAG design pattern with semantic search can’t answer all types of questions that are possible on documents. This is especially true for questions that require analytical reasoning across multiple documents. For example, imagine that you are planning next year’s strategy of an investment company. One essential step would be to analyze and compare the financial results and potential risks of candidate companies. This task involves answering analytical reasoning questions. For instance, the query “Give me the top 5 companies with the highest revenue in the last 2 years and identify their main risks” requires multiple steps of reasoning, some of which can use semantic search retrieval, whereas others require analytical capabilities.

In this post, we show how to design an intelligent document assistant capable of answering analytical and multi-step reasoning questions in three parts. In Part 1, we review the RAG design pattern and its limitations on analytical questions. Then we introduce you to a more versatile architecture that overcomes these limitations. Part 2 helps you dive deeper into the entity extraction pipeline used to prepare structured data, which is a key ingredient for analytical question answering. Part 3 walks you through how to use Amazon Bedrock LLMs to query that data and build an LLM agent that enhances RAG with analytical capabilities, thereby enabling you to build intelligent document assistants that can answer complex domain-specific questions across multiple documents.

Part 1: RAG limitations and solution overview

In this section, we review the RAG design pattern and discuss its limitations on analytical questions. We also present a more versatile architecture that overcomes these limitations.

Overview of RAG

RAG solutions are inspired by representation learning and semantic search ideas that have been gradually adopted in ranking problems (for example, recommendation and search) and natural language processing (NLP) tasks since 2010.

The popular approach used today is formed of three steps:

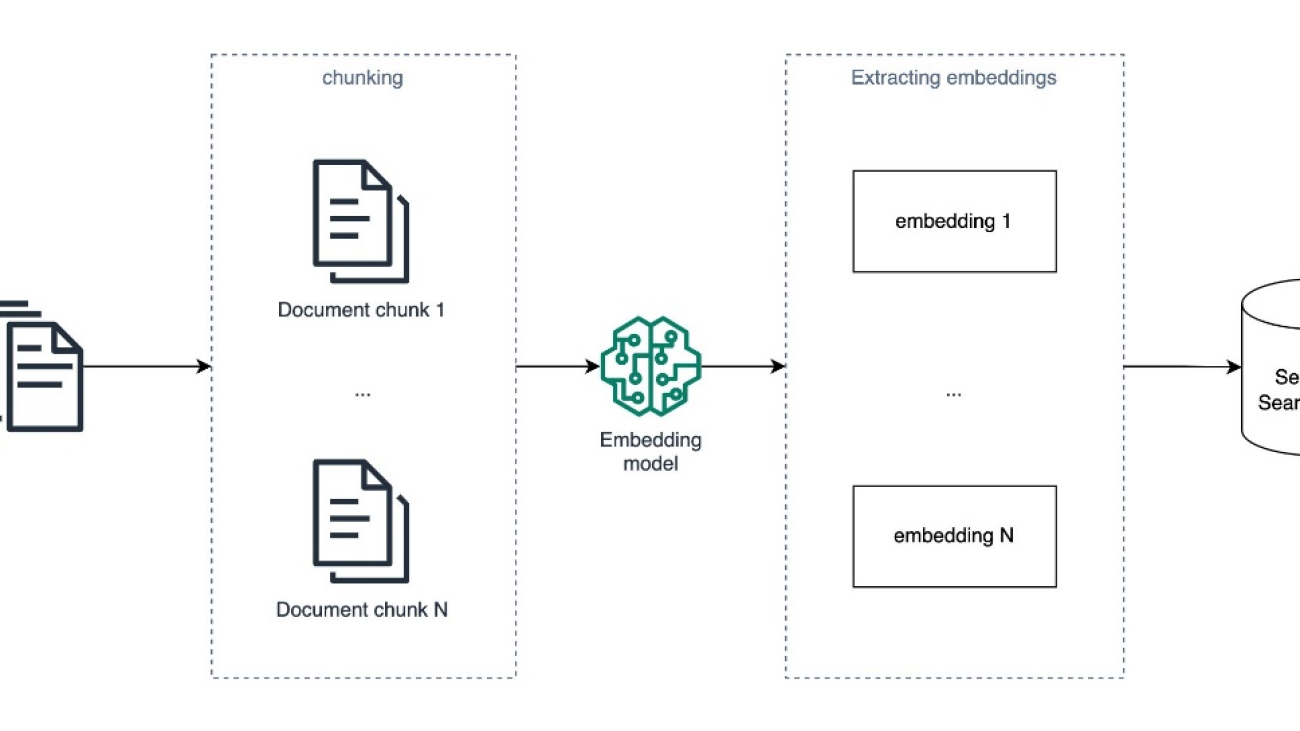

- An offline batch processing job ingests documents from an input knowledge base, splits them into chunks, creates an embedding for each chunk to represent its semantics using a pre-trained embedding model, such as Amazon Titan embedding models, then uses these embeddings as input to create a semantic search index.

- When answering a new question in real time, the input question is converted to an embedding, which is used to search for and extract the most similar chunks of documents using a similarity metric, such as cosine similarity, and an approximate nearest neighbors algorithm. The search precision can also be improved with metadata filtering.

- A prompt is constructed by concatenating a system message, a context formed of the relevant document chunks extracted in the previous step, and the input question itself. This prompt is then presented to an LLM to generate the final answer to the question from the context.
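The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used in the solution: the `embed` function is a toy bag-of-words stand-in for a real embedding model such as Amazon Titan, the index is a plain list scanned exhaustively, and the sample chunks and prompt wording are made up for the example.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Step 1 (offline): embed each chunk and store it in an index.
chunks = [
    "Company X reported net revenue of 12 million USD in 2022.",
    "The main risks for Company X are supply chain disruptions.",
    "Our cafeteria opens at 8 am on weekdays.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]

def retrieve(question: str, k: int = 2) -> list[str]:
    # Step 2 (real time): embed the question and rank chunks by similarity.
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine_similarity(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

def build_prompt(question: str, context_chunks: list[str]) -> str:
    # Step 3: concatenate a system message, the retrieved context, and the question.
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

question = "What is the net revenue of Company X in 2022?"
prompt = build_prompt(question, retrieve(question))
```

In a real deployment, the resulting `prompt` would be sent to an LLM, and the list scan would be replaced by a vector index.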

With the right underlying embedding model, capable of producing accurate semantic representations of the input document chunks and the input questions, and an efficient semantic search module, this solution is able to answer questions that require retrieving existent information in a database of documents. For example, if you have a service or a product, you could start by indexing its FAQ section or documentation and have an initial conversational AI tailored to your specific offering.

Limitations of RAG based on semantic search

Although RAG is an essential component in modern domain-specific AI assistants and a sensible starting point for building a conversational AI around a specialized knowledge base, it can’t answer questions that require scanning, comparing, and reasoning across all documents in your knowledge base simultaneously, especially when the augmentation is based solely on semantic search.

To understand these limitations, let’s consider again the example of deciding where to invest based on financial reports. If we were to use RAG to converse with these reports, we could ask questions such as “What are the risks that faced company X in 2022,” or “What is the net revenue of company Y in 2022?” For each of these questions, the corresponding embedding vector, which encodes the semantic meaning of the question, is used to retrieve the top-K semantically similar chunks of documents available in the search index. This is typically accomplished by employing an approximate nearest neighbors solution such as FAISS, NMSLIB, pgvector, or others, which strive to strike a balance between retrieval speed and recall to achieve real-time performance while maintaining satisfactory accuracy.

However, the preceding approach can’t accurately answer analytical questions across all documents, such as “What are the top 5 companies with the highest net revenues in 2022?”

This is because semantic search retrieval attempts to find the K most similar chunks of documents to the input question. But because none of the documents contain comprehensive summaries of revenues, it will return chunks of documents that merely contain mentions of “net revenue” and possibly “2022,” without fulfilling the essential condition of focusing on companies with the highest revenue. If we present these retrieval results to an LLM as context to answer the input question, it may formulate a misleading answer or refuse to answer, because the required correct information is missing.

These limitations come by design because semantic search doesn’t conduct a thorough scan of all embedding vectors to find relevant documents. Instead, it uses approximate nearest neighbor methods to maintain reasonable retrieval speed. A key strategy for efficiency in these methods is segmenting the embedding space into groups during indexing. This allows for quickly identifying which groups may contain relevant embeddings during retrieval, without the need for pairwise comparisons. Additionally, even traditional nearest neighbors techniques like KNN, which scan all documents, only compute basic distance metrics and aren’t suitable for the complex comparisons needed for analytical reasoning. Therefore, RAG with semantic search is not tailored for answering questions that involve analytical reasoning across all documents.
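To make the idea of segmenting the embedding space more concrete, the following sketch assigns vectors to the group of their nearest centroid at indexing time and probes only one group at query time. It is a deliberately simplified illustration with hypothetical 2-D vectors and hand-picked centroids; libraries such as FAISS use more elaborate variants of this idea.

```python
import math

# Hypothetical 2-D embeddings and two centroids fixed at indexing time.
centroids = [(0.0, 0.0), (10.0, 0.0)]
vectors = {"a": (1.0, 0.0), "b": (4.9, 0.0), "c": (6.0, 0.0), "d": (9.0, 0.0)}

def nearest_centroid(v):
    return min(range(len(centroids)), key=lambda i: math.dist(v, centroids[i]))

# Indexing: assign each vector to the group of its nearest centroid.
groups = {i: [] for i in range(len(centroids))}
for name, v in vectors.items():
    groups[nearest_centroid(v)].append(name)

def approximate_search(query):
    # Probe only the group whose centroid is closest to the query.
    candidates = groups[nearest_centroid(query)]
    return min(candidates, key=lambda n: math.dist(query, vectors[n]))

def exact_search(query):
    # Exhaustive scan over all vectors, for comparison.
    return min(vectors, key=lambda n: math.dist(query, vectors[n]))

query = (5.05, 0.0)
approx_hit = approximate_search(query)  # searches only group 1
exact_hit = exact_search(query)         # true nearest neighbor
```

For this query, the approximate search returns `c` although `b` is closer, because `b` sits just across the group boundary. Either way, both searches only rank items by distance, which is not the same as sorting or aggregating over a field such as revenue.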

To overcome these limitations, we propose a solution that combines RAG with metadata and entity extraction, SQL querying, and LLM agents, as described in the following sections.

Overcoming RAG limitations with metadata, SQL, and LLM agents

Let’s examine more deeply a question on which RAG fails, so that we can trace back the reasoning required to answer it effectively. This analysis should point us towards the right approach that could complement RAG in the overall solution.

Consider the question: “What are the top 5 companies with the highest revenue in 2022?”

To be able to answer this question, we would need to:

- Identify the revenue for each company.

- Filter down to keep the revenues of 2022 for each of them.

- Sort the revenues in descending order.

- Slice out the top 5 revenues alongside the company names.

Typically, these analytical operations are done on structured data, using tools such as pandas or SQL engines. If we had access to a SQL table containing the columns company, revenue, and year, we could easily answer our question by running a SQL query, similar to the following example:

SELECT company, revenue FROM table_name WHERE year = 2022 ORDER BY revenue DESC LIMIT 5;

Storing structured metadata in a SQL table that contains information about relevant entities enables you to answer many types of analytical questions by writing the correct SQL query. This is why we complement RAG in our solution with a real-time SQL querying module against a SQL table, populated by metadata extracted in an offline process.
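As a quick way to try the query, the following sketch runs it against an in-memory SQLite table using Python's standard library. The table contents are invented sample values, and SQLite is used here only for convenience; the solution itself uses Amazon Aurora PostgreSQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE table_name (company TEXT, revenue REAL, year INTEGER)")

# Invented sample rows: six companies for 2022 and one row for 2021.
rows = [
    ("A", 120.0, 2022), ("B", 95.0, 2022), ("C", 300.0, 2022), ("D", 80.0, 2022),
    ("E", 210.0, 2022), ("F", 150.0, 2022), ("A", 500.0, 2021),
]
conn.executemany("INSERT INTO table_name VALUES (?, ?, ?)", rows)

# Filter on the year, sort by revenue, and keep the top 5.
top5 = conn.execute(
    "SELECT company, revenue FROM table_name "
    "WHERE year = 2022 ORDER BY revenue DESC LIMIT 5;"
).fetchall()
```

The 2021 row is filtered out even though it has the highest revenue, which is exactly the kind of condition semantic search alone can't enforce.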

But how can we implement and integrate this approach to an LLM-based conversational AI?

There are three steps to be able to add SQL analytical reasoning:

- Metadata extraction – Extract metadata from unstructured documents into a SQL table

- Text to SQL – Formulate SQL queries from input questions accurately using an LLM

- Tool selection – Identify if a question must be answered using RAG or a SQL query

To implement these steps, first we recognize that information extraction from unstructured documents is a traditional NLP task for which LLMs show promise in achieving high accuracy through zero-shot or few-shot learning. Second, the ability of these models to generate SQL queries from natural language has been proven for years, as seen in the 2020 release of Amazon QuickSight Q. Finally, automatically selecting the right tool for a specific question enhances the user experience and enables answering complex questions through multi-step reasoning. To implement this feature, we delve into LLM agents in a later section.

To summarize, the solution we propose is composed of the following core components:

- Semantic search retrieval to augment generation context

- Structured metadata extraction and querying with SQL

- An agent capable of using the right tools to answer a question

Solution overview

The following diagram depicts a simplified architecture of the solution. It helps you identify and understand the role of the core components and how they interact to implement the full LLM-assistant behavior. The numbering aligns with the order of operations when implementing this solution.

In practice, we implemented this solution as outlined in the following detailed architecture.

For this architecture, we propose an implementation on GitHub, with loosely coupled components where the backend (5), data pipelines (1, 2, 3) and front end (4) can evolve separately. This is to simplify the collaboration across competencies when customizing and improving the solution for production.

Deploy the solution

To install this solution in your AWS account, complete the following steps:

- Clone the repository on GitHub.

- Install the backend AWS Cloud Development Kit (AWS CDK) app:

  - Open the backend folder.

  - Run npm install to install the dependencies.

  - If you have never used the AWS CDK in the current account and Region, run bootstrapping with npx cdk bootstrap.

  - Run npx cdk deploy to deploy the stack.

- Optionally, run the streamlit-ui as follows:

  - We recommend cloning this repository into an Amazon SageMaker Studio environment. For more information, refer to Onboard to Amazon SageMaker Domain using Quick setup.

  - Inside the frontend/streamlit-ui folder, run bash run-streamlit-ui.sh.

  - Choose the link with the following format to open the demo: https://{domain_id}.studio.{region}.sagemaker.aws/jupyter/default/proxy/{port_number}/.

- Finally, you can run the Amazon SageMaker pipeline defined in the data-pipelines/04-sagemaker-pipeline-for-documents-processing.ipynb notebook to process the input PDF documents and prepare the SQL table and the semantic search index used by the LLM assistant.

In the rest of this post, we focus on explaining the most important components and design choices, to hopefully inspire you when designing your own AI assistant on an internal knowledge base. We assume that components 1 and 4 are straightforward to understand, and focus on the core components 2, 3, and 5.

Part 2: Entity extraction pipeline

In this section, we dive deeper into the entity extraction pipeline used to prepare structured data, which is a key ingredient for analytical question answering.

Text extraction

Documents are typically stored in PDF format or as scanned images. They may be formed of simple paragraph layouts or complex tables, and contain digital or handwritten text. To extract information correctly, we need to transform these raw documents into plain text, while preserving their original structure. To do this, you can use Amazon Textract, which is a machine learning (ML) service that provides mature APIs for text, tables, and forms extraction from digital and handwritten inputs.

In component 2, we extract text and tables as follows:

- For each document, we call Amazon Textract to extract the text and tables.

- We use the following Python script to recreate tables as pandas DataFrames.

- We consolidate the results into a single document and insert tables as markdown.
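The table reconstruction step can be sketched as follows. This is not the script from the repository: it is a minimal example that assumes the standard Amazon Textract response layout (a list of blocks with `BlockType`, `Relationships`, `RowIndex`, and `ColumnIndex` fields), works on a hand-written, heavily trimmed sample response, ignores merged cells, and produces plain lists of rows and a markdown table rather than a pandas DataFrame.

```python
def child_ids(block):
    """Return the IDs of all CHILD blocks referenced by a block."""
    return [
        block_id
        for rel in block.get("Relationships", [])
        if rel["Type"] == "CHILD"
        for block_id in rel["Ids"]
    ]

def table_to_rows(table_block, blocks_by_id):
    """Rebuild a TABLE block as a list of rows using the cell indices."""
    cells = {}
    for cell_id in child_ids(table_block):
        cell = blocks_by_id[cell_id]
        if cell["BlockType"] != "CELL":
            continue
        words = [
            blocks_by_id[word_id]["Text"]
            for word_id in child_ids(cell)
            if blocks_by_id[word_id]["BlockType"] == "WORD"
        ]
        cells[(cell["RowIndex"], cell["ColumnIndex"])] = " ".join(words)
    n_rows = max(r for r, _ in cells)
    n_cols = max(c for _, c in cells)
    # RowIndex and ColumnIndex are 1-based.
    return [[cells.get((r, c), "") for c in range(1, n_cols + 1)] for r in range(1, n_rows + 1)]

def rows_to_markdown(rows):
    """Render rows as a markdown table, treating the first row as the header."""
    header, *body = rows
    lines = ["| " + " | ".join(header) + " |", "| " + " | ".join("---" for _ in header) + " |"]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)

def cell(block_id, row, col, word_ids):
    return {"Id": block_id, "BlockType": "CELL", "RowIndex": row, "ColumnIndex": col,
            "Relationships": [{"Type": "CHILD", "Ids": word_ids}]}

# Hand-written sample in the shape of a Textract response (trimmed to the fields used here).
blocks = [
    {"Id": "t1", "BlockType": "TABLE",
     "Relationships": [{"Type": "CHILD", "Ids": ["c1", "c2", "c3", "c4"]}]},
    cell("c1", 1, 1, ["w1"]), cell("c2", 1, 2, ["w2"]),
    cell("c3", 2, 1, ["w3", "w4"]), cell("c4", 2, 2, ["w5"]),
    {"Id": "w1", "BlockType": "WORD", "Text": "Company"},
    {"Id": "w2", "BlockType": "WORD", "Text": "Revenue"},
    {"Id": "w3", "BlockType": "WORD", "Text": "Company"},
    {"Id": "w4", "BlockType": "WORD", "Text": "X"},
    {"Id": "w5", "BlockType": "WORD", "Text": "12"},
]
blocks_by_id = {b["Id"]: b for b in blocks}
tables = [b for b in blocks if b["BlockType"] == "TABLE"]
markdown_table = rows_to_markdown(table_to_rows(tables[0], blocks_by_id))
```

If you prefer DataFrames, the rows returned by `table_to_rows` can be passed to pandas with the first row used as column names.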

This process is outlined by the following flow diagram and concretely demonstrated in notebooks/03-pdf-document-processing.ipynb.

Entity extraction and querying using LLMs

To answer analytical questions effectively, you need to extract relevant metadata and entities from your document knowledge base into an accessible structured data format. We suggest using SQL to store this information and retrieve answers due to its popularity, ease of use, and scalability. This choice also benefits from the proven ability of language models to generate SQL queries from natural language.

In this section, we dive deeper into the following components that enable analytical questions:

- A batch process that extracts structured data out of unstructured data using LLMs

- A real-time module that converts natural language questions to SQL queries and retrieves results from a SQL database

You can extract the relevant metadata to support analytical questions as follows:

- Define a JSON schema for information you need to extract, which contains a description of each field and its data type, and includes examples of the expected values.

- For each document, prompt an LLM with the JSON schema and ask it to extract the relevant data accurately.

- When the document length is beyond the context length, and to reduce the extraction cost with LLMs, you can use semantic search to retrieve and present the relevant chunks of documents to the LLM during extraction.

- Parse the JSON output and validate the LLM extraction.

- Optionally, back up the results on Amazon S3 as CSV files.

- Load into the SQL database for later querying.
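The extraction steps above can be sketched as follows. The schema, field names, prompt wording, and the hard-coded `llm_output` string are all hypothetical; in practice the output would come from an LLM call, and the target database would be the one used by the solution rather than in-memory SQLite.

```python
import json
import sqlite3

# Hypothetical schema: field name -> (Python type, description, example value).
SCHEMA = {
    "company": (str, "Legal name of the company", "Company X"),
    "year": (int, "Fiscal year of the report", 2022),
    "revenue": (float, "Net revenue in millions of USD", 12.5),
}

def build_extraction_prompt(document_text: str) -> str:
    fields = "\n".join(
        f'- "{name}" ({typ.__name__}): {desc}. Example: {example!r}'
        for name, (typ, desc, example) in SCHEMA.items()
    )
    return (
        "Extract the following fields from the document and reply with a single JSON object only.\n"
        f"{fields}\n\nDocument:\n{document_text}\n"
    )

def parse_and_validate(llm_output: str) -> dict:
    """Parse the LLM reply and check field names and types against the schema."""
    record = json.loads(llm_output)  # raises ValueError on malformed JSON
    if not isinstance(record, dict) or set(record) != set(SCHEMA):
        raise ValueError(f"unexpected fields: {record!r}")
    for name, (typ, _, _) in SCHEMA.items():
        value = record[name]
        if isinstance(value, bool):
            raise ValueError(f"{name}: booleans are not accepted")
        if typ is float and isinstance(value, int):
            value = float(value)  # accept 12 where 12.0 is expected
        if not isinstance(value, typ):
            raise ValueError(f"{name}: expected {typ.__name__}, got {value!r}")
        record[name] = value
    return record

# Stand-in for the reply an LLM might return for one document.
llm_output = '{"company": "Company X", "year": 2022, "revenue": 12}'
record = parse_and_validate(llm_output)

# Load the validated record into a SQL table for later querying.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE metadata (company TEXT, year INTEGER, revenue REAL)")
conn.execute("INSERT INTO metadata VALUES (:company, :year, :revenue)", record)
loaded = conn.execute("SELECT company, year, revenue FROM metadata").fetchall()
```

Rejecting records that fail validation, rather than loading them, keeps extraction errors from silently propagating into later SQL answers.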

This process is managed by the following architecture, where the documents in text format are loaded with a Python script that runs in an Amazon SageMaker Processing job to perform the extraction.

For each group of entities, we dynamically construct a prompt that includes a clear description of the information extraction task, a JSON schema that defines the expected output, and the relevant document chunks as context. We also add a few examples of input and correct output to improve the extraction performance with few-shot learning. This is demonstrated in notebooks/05-entities-extraction-to-structured-metadata.ipynb.

Part 3: Build an agentic document assistant with Amazon Bedrock

In this section, we demonstrate how to use Amazon Bedrock LLMs to query data and build an LLM agent that enhances RAG with analytical capabilities, thereby enabling you to build intelligent document assistants that can answer complex domain-specific questions across multiple documents. You can refer to the Lambda function on GitHub for the concrete implementation of the agent and tools described in this part.

Formulate SQL queries and answer analytical questions

Now that we have a structured metadata store with the relevant entities extracted and loaded into a SQL database that we can query, the remaining question is how to generate the right SQL query from an input natural language question.

Modern LLMs are good at generating SQL. For instance, if you request from the Anthropic Claude LLM through Amazon Bedrock to generate a SQL query, you will see plausible answers. However, we need to abide by a few rules when writing the prompt to reach more accurate SQL queries. These rules are especially important for complex queries to reduce hallucination and syntax errors:

- Describe the task accurately within the prompt

- Include the schema of the SQL tables within the prompt, while describing each column of the table and specifying its data type

- Explicitly tell the LLM to only use existing column names and data types

- Add a few rows of the SQL tables
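A prompt that follows these rules could be assembled as in the sketch below. This is an illustration only: the solution uses the SQLDatabase class from LangChain with a custom prompt, whereas this example reads the schema from an in-memory SQLite table, and the table name, column descriptions, and prompt wording are assumptions made for the example.

```python
import sqlite3

def build_text_to_sql_prompt(conn, table, column_docs, question, n_rows=2):
    """Build a text-to-SQL prompt with task, schema, sample rows, and constraints.

    `table` must be a trusted identifier, because it is interpolated into SQL.
    """
    # PRAGMA table_info yields (cid, name, type, notnull, default, pk) per column.
    columns = conn.execute(f"PRAGMA table_info({table})").fetchall()
    schema = "\n".join(
        f"- {col[1]} ({col[2]}): {column_docs.get(col[1], 'no description')}"
        for col in columns
    )
    rows = conn.execute(f"SELECT * FROM {table} LIMIT ?", (n_rows,)).fetchall()
    samples = "\n".join(str(row) for row in rows)
    return (
        "Task: write one SQL query that answers the question.\n"
        f"Table: {table}\nColumns:\n{schema}\n"
        f"Sample rows:\n{samples}\n"
        "Use only the table and column names listed above.\n"
        f"Question: {question}\nSQL:"
    )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE financials (company TEXT, revenue REAL, year INTEGER)")
conn.executemany(
    "INSERT INTO financials VALUES (?, ?, ?)",
    [("Company X", 12.5, 2022), ("Company Y", 7.0, 2022)],
)
column_docs = {
    "company": "Legal name of the company",
    "revenue": "Net revenue in millions of USD",
    "year": "Fiscal year of the report",
}
prompt = build_text_to_sql_prompt(
    conn, "financials", column_docs, "Which company had the highest revenue in 2022?"
)
```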

You could also postprocess the generated SQL query using a linter such as sqlfluff to correct formatting, or a parser such as sqlglot to detect syntax errors and optimize the query. Moreover, when the performance doesn’t meet the requirement, you could provide a few examples within the prompt to steer the model with few-shot learning towards generating more accurate SQL queries.

From an implementation perspective, we use an AWS Lambda function to orchestrate the following process:

- Call an Anthropic Claude model in Amazon Bedrock with the input question to get the corresponding SQL query. Here, we use the SQLDatabase class from LangChain to add schema descriptions of relevant SQL tables, and use a custom prompt.

- Parse, validate, and run the SQL query against the Amazon Aurora PostgreSQL-Compatible Edition database.

The architecture for this part of the solution is highlighted in the following diagram.

Security considerations to prevent SQL injection attacks

As we enable the AI assistant to query a SQL database, we have to make sure this doesn’t introduce security vulnerabilities. To achieve this, we propose the following security measures to prevent SQL injection attacks:

- Apply least privilege IAM permissions – Limit the permission of the Lambda function that runs the SQL queries using an AWS Identity and Access Management (IAM) policy and role that follows the least privilege principle. In this case, we grant read-only access.

- Limit data access – Only provide access to the bare minimum of tables and columns to prevent information disclosure attacks.

- Add a moderation layer – Introduce a moderation layer that detects prompt injection attempts early on and prevents them from propagating to the rest of the system. It can take the form of rule-based filters, similarity matching against a database of known prompt injection examples, or an ML classifier.
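As one illustration of the "limit data access" idea, the sketch below uses SQLite's authorizer hook to allow only plain reads of an allow-listed set of tables and columns. This is a stand-in for demonstration: the solution relies on IAM permissions and database-level privileges in Amazon Aurora PostgreSQL, and the table and column names here are hypothetical. A guard like this would complement those controls, not replace them.

```python
import sqlite3

# Hypothetical allow-list: table name -> columns the assistant may read.
ALLOWED_COLUMNS = {"financials": {"company", "revenue", "year"}}

def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Allow SELECT statements that read allow-listed columns; deny everything else."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ:
        # For reads, arg1 is the table name and arg2 is the column name.
        if arg2 in ALLOWED_COLUMNS.get(arg1, set()):
            return sqlite3.SQLITE_OK
    # Writes, schema changes, and SQL functions are all denied in this strict sketch.
    return sqlite3.SQLITE_DENY

def try_query(conn, sql):
    """Run a generated query; return rows, or a rejection message if it is denied."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as err:
        return f"rejected: {err}"

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE financials (company TEXT, revenue REAL, year INTEGER)")
conn.execute("CREATE TABLE secrets (api_key TEXT)")
conn.executemany(
    "INSERT INTO financials VALUES (?, ?, ?)",
    [("A", 120.0, 2022), ("C", 300.0, 2022)],
)
conn.commit()

# From here on, every statement on this connection goes through the guard.
conn.set_authorizer(read_only_authorizer)

allowed = try_query(conn, "SELECT company, revenue FROM financials ORDER BY revenue DESC LIMIT 1")
dropped = try_query(conn, "DROP TABLE financials")
leaked = try_query(conn, "SELECT api_key FROM secrets")
```

Here the first query returns rows, while the `DROP TABLE` statement and the read from `secrets` are rejected before they run.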

Semantic search retrieval to augment generation context

The solution we propose uses RAG with semantic search in component 3. You can implement this module using knowledge bases for Amazon Bedrock. Additionally, there are a variety of other options to implement RAG, such as the Amazon Kendra Retrieval API, Amazon OpenSearch vector database, and Amazon Aurora PostgreSQL with pgvector, among others. The open source package aws-genai-llm-chatbot demonstrates how to use many of these vector search options to implement an LLM-powered chatbot.

In this solution, because we need both SQL querying and vector search, we decided to use Amazon Aurora PostgreSQL with the pgvector extension, which supports both features. Therefore, we implement the semantic-search RAG component with the following architecture.

The process of answering questions using the preceding architecture is done in two main stages.

First, an offline-batch process, run as a SageMaker Processing job, creates the semantic search index as follows:

- Either periodically, or upon receiving new documents, a SageMaker job is run.

- It loads the text documents from Amazon S3 and splits them into overlapping chunks.

- For each chunk, it uses an Amazon Titan embedding model to generate an embedding vector.

- It uses the PGVector class from LangChain to ingest the embeddings, with their document chunks and metadata, into Amazon Aurora PostgreSQL and create a semantic search index on all the embedding vectors.
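The splitting into overlapping chunks mentioned above can be sketched with a simple character-based splitter. The function name and the default sizes are assumptions for illustration; production pipelines often split on tokens, sentences, or paragraphs instead of raw characters.

```python
def split_into_chunks(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size chunks where consecutive chunks share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks

example_chunks = split_into_chunks("abcdefghij", chunk_size=4, overlap=2)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.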

Second, in real time and for each new question, we construct an answer as follows:

- The question is received by the orchestrator that runs on a Lambda function.

- The orchestrator embeds the question with the same embedding model.

- It retrieves the top-K most relevant document chunks from the PostgreSQL semantic search index. It optionally uses metadata filtering to improve precision.

- These chunks are inserted dynamically in an LLM prompt alongside the input question.

- The prompt is presented to Anthropic Claude on Amazon Bedrock, to instruct it to answer the input question based on the available context.

- Finally, the generated answer is sent back to the orchestrator.

An agent capable of using tools to reason and act

So far in this post, we have discussed treating questions that require either RAG or analytical reasoning separately. However, many real-world questions demand both capabilities, sometimes over multiple steps of reasoning, in order to reach a final answer. To support these more complex questions, we need to introduce the notion of an agent.

LLM agents, such as the agents for Amazon Bedrock, have emerged recently as a promising solution capable of using LLMs to reason and adapt using the current context and to choose appropriate actions from a list of options, which presents a general problem-solving framework. As discussed in LLM Powered Autonomous Agents, there are multiple prompting strategies and design patterns for LLM agents that support complex reasoning.

One such design pattern is Reason and Act (ReAct), introduced in ReAct: Synergizing Reasoning and Acting in Language Models. In ReAct, the agent takes as input a goal that can be a question, identifies the pieces of information missing to answer it, and proposes iteratively the right tool to gather information based on the available tools’ descriptions. After receiving the answer from a given tool, the LLM reassesses whether it has all the information it needs to fully answer the question. If not, it does another step of reasoning and uses the same or another tool to gather more information, until a final response is ready or a limit is reached.

The following sequence diagram explains how a ReAct agent works toward answering the question “Give me the top 5 companies with the highest revenue in the last 2 years and identify the risks associated with the top one.”

The details of implementing this approach in Python are described in Custom LLM Agent. In our solution, the agent and tools are implemented with the following highlighted partial architecture.

To answer an input question, we use AWS services as follows:

- A user inputs their question through a UI, which calls an API on Amazon API Gateway.

- API Gateway sends the question to a Lambda function implementing the agent executor.

- The agent calls the LLM with a prompt that contains a description of the tools available, the ReAct instruction format, and the input question, and then parses the next action to complete.

- The action contains which tool to call and what the action input is.

- If the tool to use is SQL, the agent executor calls SQLQA to convert the question to SQL and run it. Then it adds the result to the prompt and calls the LLM again to see if it can answer the original question or if more actions are needed.

- Similarly, if the tool to use is semantic search, then the action input is parsed out and used to retrieve from the PostgreSQL semantic search index. It adds the results to the prompt and checks if the LLM is able to answer or needs another action.

- After all the information to answer a question is available, the LLM agent formulates a final answer and sends it back to the user.
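The loop described in these steps can be sketched as follows. This is a simplified illustration rather than the Lambda implementation: the LLM is replaced by a scripted stand-in that replays canned replies, the two tools return fixed strings, and the `Action` / `Action Input` / `Final Answer` markers are one common ReAct text format assumed for the example.

```python
import re

# Hypothetical tools; real ones would run SQL or query the semantic search index.
def sql_tool(action_input: str) -> str:
    return "Top company by revenue: Company C (300.0)"

def semantic_search_tool(action_input: str) -> str:
    return "Company C risks: supply chain disruptions."

TOOLS = {"sql": sql_tool, "semantic_search": semantic_search_tool}
ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\nAction Input:\s*(.+)")

class ScriptedLLM:
    """Stand-in for a real LLM: replays canned ReAct-formatted replies."""
    def __init__(self, replies):
        self._replies = iter(replies)

    def __call__(self, prompt: str) -> str:
        return next(self._replies)

def run_agent(question: str, llm, max_steps: int = 5) -> str:
    prompt = f"Tools: {', '.join(TOOLS)}\nQuestion: {question}\n"
    for _ in range(max_steps):
        reply = llm(prompt)
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(reply)
        if match is None or match.group(1) not in TOOLS:
            observation = "Could not parse a valid action."
        else:
            # Call the selected tool with the parsed action input.
            observation = TOOLS[match.group(1)](match.group(2).strip())
        # Append the reply and the tool result so the next LLM call sees them.
        prompt += f"{reply}\nObservation: {observation}\n"
    return "Stopped: step limit reached."

llm = ScriptedLLM([
    "Thought: I need the revenue ranking.\nAction: sql\nAction Input: top company by revenue in 2022",
    "Thought: Now I need its risks.\nAction: semantic_search\nAction Input: risks of Company C",
    "Thought: I have everything.\nFinal Answer: Company C leads on revenue; its main risk is supply chain disruptions.",
])
final_answer = run_agent("Which company has the highest revenue and what are its risks?", llm)
```

The `max_steps` limit corresponds to the stopping condition described for ReAct: the loop ends either with a final answer or when the limit is reached.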

You can extend the agent with further tools. In the implementation available on GitHub, we demonstrate how you can add a search engine and a calculator as extra tools to the aforementioned SQL engine and semantic search tools. To store the ongoing conversation history, we use an Amazon DynamoDB table.

From our experience so far, we have seen that the following are keys to a successful agent:

- An underlying LLM capable of reasoning with the ReAct format

- A clear description of the available tools, when to use them, and a description of their input arguments with, potentially, an example of the input and expected output

- A clear outline of the ReAct format that the LLM must follow

- The right tools for solving the business question made available to the LLM agent to use

- Correctly parsing out the outputs from the LLM agent responses as it reasons

To optimize costs, we recommend caching the most common questions with their answers and updating this cache periodically to reduce calls to the underlying LLM. For instance, you can create a semantic search index with the most common questions as explained previously, and match the new user question against the index first before calling the LLM. To explore other caching options, refer to LLM Caching integrations.
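A semantic cache along these lines could look like the following sketch. The bag-of-words `embed` function is a toy stand-in for a real embedding model, the cache is an in-memory list rather than a semantic search index, and the class name and the 0.8 threshold are arbitrary choices for the example.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (question embedding, answer)

    def get(self, question: str):
        q = embed(question)
        best = max(self.entries, key=lambda e: cosine(q, e[0]), default=None)
        if best is not None and cosine(q, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, question: str, answer: str) -> None:
        self.entries.append((embed(question), answer))

def answer_with_cache(question: str, cache: SemanticCache, llm) -> str:
    cached = cache.get(question)
    if cached is not None:
        return cached  # cache hit: no LLM call needed
    result = llm(question)
    cache.put(question, result)
    return result

llm_calls = []

def fake_llm(question: str) -> str:
    # Records each call so the example can show when the LLM is actually used.
    llm_calls.append(question)
    return f"answer #{len(llm_calls)}"

cache = SemanticCache()
first = answer_with_cache("What is the net revenue of company Y in 2022?", cache, fake_llm)
second = answer_with_cache("what is the net revenue of Company Y in 2022", cache, fake_llm)
third = answer_with_cache("Who is the CEO of company Z?", cache, fake_llm)
```

The threshold controls the trade-off: a lower value saves more LLM calls but increases the risk of returning a cached answer to a question that only looks similar.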

Supporting other formats such as video, image, audio, and 3D files

You can apply the same solution to various types of information, such as images, videos, audio, and 3D design files like CAD or mesh files. This involves using established ML techniques to describe the file content in text, which can then be ingested into the solution that we explored earlier. This approach enables you to conduct QA conversations on these diverse data types. For instance, you can expand your document database by creating textual descriptions of images, videos, or audio content. You can also enhance the metadata table by identifying properties through classification or object detection on elements within these formats. After this extracted data is indexed in either the metadata store or the semantic search index for documents, the overall architecture of the proposed system remains largely consistent.

Conclusion

In this post, we showed how using LLMs with the RAG design pattern is necessary for building a domain-specific AI assistant, but is insufficient to reach the required level of reliability to generate business value. Because of this, we proposed extending the popular RAG design pattern with the concepts of agents and tools. The flexibility of tools allows us to use both traditional NLP techniques and modern LLM capabilities, giving an AI assistant more options to seek information and assist users in solving business problems efficiently.

The solution demonstrates the design process towards an LLM assistant able to answer various types of retrieval, analytical reasoning, and multi-step reasoning questions across all of your knowledge base. We also highlighted the importance of thinking backward from the types of questions and tasks that your LLM assistant is expected to help users with. In this case, the design journey led us to an architecture with the three components: semantic search, metadata extraction and SQL querying, and LLM agent and tools, which we think is generic and flexible enough for multiple use cases. We also believe that by getting inspiration from this solution and diving deep into your users’ needs, you will be able to extend this solution further toward what works best for you.

About the authors

Mohamed Ali Jamaoui is a Senior ML Prototyping Architect with 10 years of experience in production machine learning. He enjoys solving business problems with machine learning and software engineering, and helping customers extract business value with ML. As part of AWS EMEA Prototyping and Cloud Engineering, he helps customers build business solutions that leverage innovations in MLOPs, NLP, CV and LLMs.

Giuseppe Hannen is a ProServe Associate Consultant. Giuseppe applies his analytical skills in combination with AI&ML to develop clear and effective solutions for his customers. He loves to come up with simple solutions to complicated problems, especially those that involve the latest technological developments and research.

Laurens ten Cate is a Senior Data Scientist. Laurens works with enterprise customers in EMEA helping them accelerate their business outcomes using AWS AI/ML technologies. He specializes in NLP solutions and focusses on the Supply Chain & Logistics industry. In his free time he enjoys reading and art.

Irina Radu is a Prototyping Engagement Manager, part of AWS EMEA Prototyping and Cloud Engineering. She is helping customers get the best out of the latest tech, innovate faster and think bigger.

How Q4 Inc. used Amazon Bedrock, RAG, and SQLDatabaseChain to address numerical and structured dataset challenges building their Q&A chatbot

This post is co-written with Stanislav Yeshchenko from Q4 Inc.

Enterprises turn to Retrieval Augmented Generation (RAG) as a mainstream approach to building Q&A chatbots. We continue to see emerging challenges stemming from the nature of the assortment of datasets available. These datasets are often a mix of numerical and text data, at times structured, unstructured, or semi-structured.

Q4 Inc. needed to address some of these challenges in one of their many AI use cases built on AWS. In this post, we discuss a Q&A bot use case that Q4 has implemented, the challenges that numerical and structured datasets presented, and how Q4 concluded that using SQL may be a viable solution. Finally, we take a closer look at how the Q4 team used Amazon Bedrock and SQLDatabaseChain to implement a RAG-based solution with SQL generation.

Use case overview

Q4 Inc., headquartered in Toronto, with offices in New York and London, is a leading capital markets access platform that is transforming how issuers, investors, and sellers efficiently connect, communicate, and engage with each other. The Q4 Platform facilitates interactions across the capital markets through IR website products, virtual events solutions, engagement analytics, investor relations Customer Relationship Management (CRM), shareholder and market analysis, surveillance, and ESG tools.

In today’s fast-paced and data-driven financial landscape, Investor Relations Officers (IROs) play a critical role in fostering communication between a company and its shareholders, analysts, and investors. As part of their daily duties, IROs analyze diverse datasets, including CRM, ownership records, and stock market data. The aggregate of this data is used to generate financial reports, set investor relations goals, and manage communication with existing and potential investors.

To meet the growing demand for efficient and dynamic data retrieval, Q4 aimed to create a chatbot Q&A tool that would provide an intuitive and straightforward method for IROs to access the necessary information they need in a user-friendly format.

The end goal was to create a chatbot that would seamlessly integrate publicly available data, along with proprietary customer-specific Q4 data, while maintaining the highest level of security and data privacy. As for performance, the goal was to maintain a query response time of seconds to ensure a positive experience for end-users.

Financial markets is a regulated industry with high stakes involved. Providing incorrect or outdated information can impact investors’ and shareholders’ trust, in addition to other possible data privacy risks. Understanding the industry and the requirements, Q4 sets data privacy and response accuracy as its guiding principles in evaluating any solution before it can be taken to market.

For the proof of concept, Q4 decided to use a financial ownership dataset. The dataset consists of time series data points representing the number of assets owned; the transaction history between investment institutions, individuals, and public companies; and many more elements.

Beyond satisfying all the functional and non-functional requirements we’ve discussed, the project also needed to stay commercially feasible. This constraint was respected throughout the process of deciding on the approach, architecture, choice of technology, and solution-specific elements.

Experimentation and challenges

It was clear from the beginning that to understand a human language question and generate accurate answers, Q4 would need to use large language models (LLMs).

The following are some of the experiments that were conducted by the team, along with the challenges identified and lessons learned:

- Pre-training – Q4 understood the complexity and challenges that come with pre-training an LLM using its own dataset. It quickly became obvious that this approach is resource intensive with many non-trivial steps, such as data preprocessing, training, and evaluation. In addition to the effort involved, it would be cost prohibitive. Considering the nature of the time series dataset, Q4 also realized that it would have to continuously perform incremental pre-training as new data came in. This would have required a dedicated cross-disciplinary team with expertise in data science, machine learning, and domain knowledge.

- Fine-tuning – Fine-tuning a pre-trained foundation model (FM) involved using several labeled examples. This approach showed some initial success, but in many cases, model hallucination was a challenge. The model struggled to understand nuanced contextual cues and returned incorrect results.

- RAG with semantic search – Conventional RAG with semantic search was the last step before moving to SQL generation. The team experimented with using search, semantic search, and embeddings to extract context. During the embeddings experiment, the dataset was converted into embeddings, stored in a vector database, and then matched with the embeddings of the question to extract context. The retrieved context in any of the three experiments was then used to augment the original prompt as an input to the LLM. This approach worked well for text-based content, where the data consists of natural language with words, sentences, and paragraphs. Considering the nature of Q4’s dataset, which is mostly financial data consisting of numbers, financial transactions, stock quotes, and dates, the results in all three cases were suboptimal. Even when using embeddings, the embeddings generated from numbers struggled with similarity ranking, and in many cases led to retrieving incorrect information.

Q4’s conclusion: Generating SQL is the path forward

Considering the challenges faced using conventional RAG methodology, the team started to consider SQL generation. The idea was to use the LLM to first generate a SQL statement from the user question, presented to the LLM in natural language. The generated query is then run against the database to fetch the relevant context. The context is finally used to augment the input prompt for a summarization step.

Q4’s hypothesis was that in order to get higher recall for the retrieval step, specifically for the numerical dataset, they needed to first generate SQL from the user question. This was believed to not only increase accuracy, but also keep the context within the business domain for a given question. For the query generation, and to generate accurate SQL, Q4 needed to make the LLM fully context aware of their dataset structure. This meant the prompt needed to include the database schema, a few sample data rows, and human-readable field explanations for the fields that are not easy to comprehend.

Based on the initial tests, this method showed great results. The LLM equipped with all the necessary information was able to generate the correct SQL, which was then run against the database to retrieve the correct context. After experimenting with the idea, Q4 decided that SQL generation was the way forward to address context extraction challenges for their own specific dataset.

Let’s start with describing the overall solution approach, break it down to its components, and then put the pieces together.

Solution overview

LLMs are large models with billions of parameters that are pre-trained using very large amounts of data from a variety of sources. Due to the breadth of the training datasets, LLMs are expected to have general knowledge in a variety of domains. LLMs are also known for their reasoning abilities, which vary from one model to another. This general behavior can be optimized to a specific domain or industry by further optimizing a foundation model using additional domain-specific pre-training data or by fine-tuning using labeled data. Given the right context, metadata, and instructions, a well-selected general purpose LLM can produce good-quality SQL as long as it has access to the right domain-specific context.

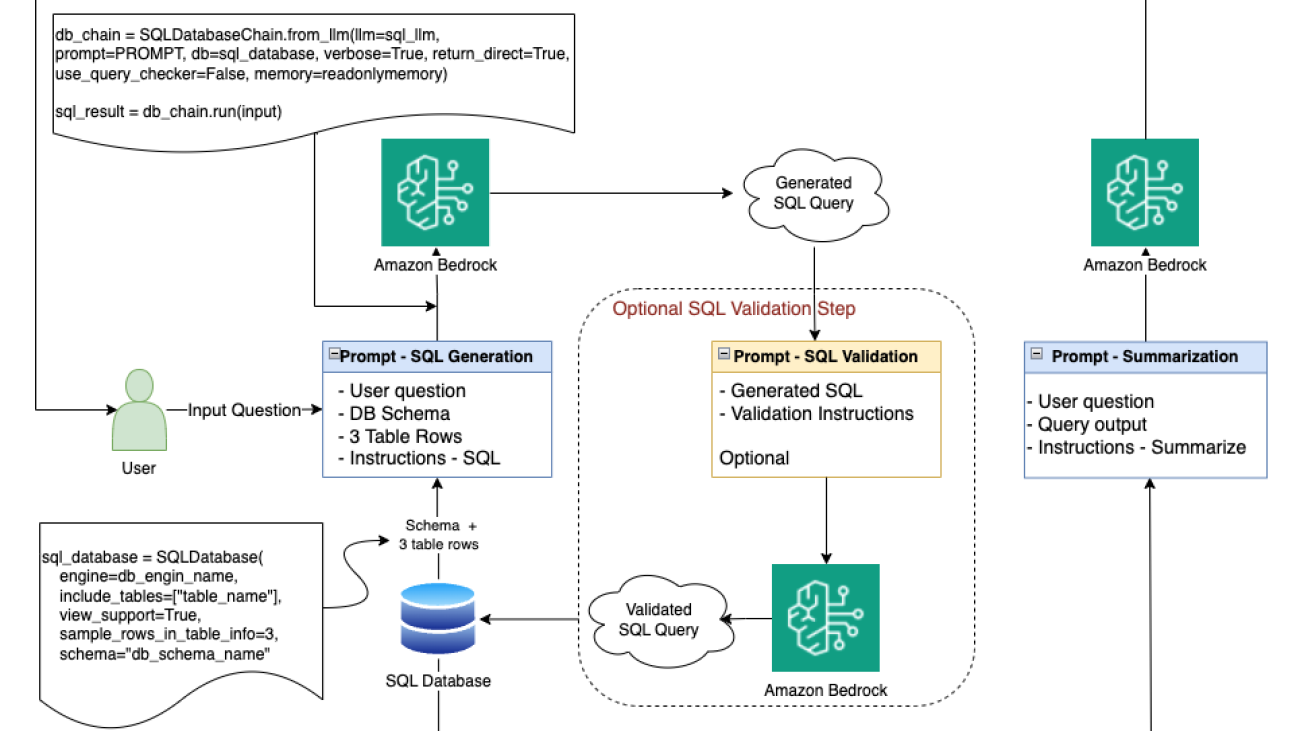

In Q4’s use case, we start with translating the customer question into SQL. We do this by combining the user question, database schema, some sample database rows, and detailed instructions as a prompt to the LLM to generate SQL. After we have the SQL, we can run a validation step if deemed necessary. When we’re happy with the quality of the SQL, we run the query against the database to retrieve the relevant context that we need for the following step. Now that we have the relevant context, we can send the user’s original question, the context retrieved, and a set of instructions back to the LLM to produce a final summarized response. The goal of the last step is to have the LLM summarize the results and provide a contextual and accurate answer that can be then passed along to the user.
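The flow described above can be sketched in a few lines. This is a minimal illustration rather than Q4's implementation: it assumes a SQLite database, and the `holdings` table, the `answer_question` helper, and the two stand-in LLM functions are hypothetical names introduced only for this example. In a real system, the two callables would wrap calls to the chosen models.

```python
import sqlite3
from typing import Callable


def answer_question(
    question: str,
    conn: sqlite3.Connection,
    schema: str,
    generate_sql: Callable[[str], str],
    summarize: Callable[[str], str],
) -> str:
    """Run the generate-SQL, query, summarize flow for one user question."""
    # Trip 1 to the LLM: turn the question plus schema into a SQL statement.
    sql_prompt = f"Schema:\n{schema}\n\nQuestion: {question}\nReturn only SQL."
    sql = generate_sql(sql_prompt).strip()

    # Database trip: run the generated SQL to fetch the relevant context.
    rows = conn.execute(sql).fetchall()

    # Trip 2 to the LLM: summarize the retrieved context for the user.
    summary_prompt = (
        f"Question: {question}\nQuery result: {rows}\nAnswer concisely."
    )
    return summarize(summary_prompt)


# Hypothetical demo data and stand-in "LLMs" so the sketch runs offline.
SCHEMA = "CREATE TABLE holdings (holder TEXT, ticker TEXT, shares INTEGER)"
demo_conn = sqlite3.connect(":memory:")
demo_conn.execute(SCHEMA)
demo_conn.executemany(
    "INSERT INTO holdings VALUES (?, ?, ?)",
    [("Fund A", "XYZ", 1200), ("Fund B", "XYZ", 800)],
)


def fake_generate_sql(prompt: str) -> str:
    # A real implementation would send the prompt to a model.
    return "SELECT SUM(shares) FROM holdings WHERE ticker = 'XYZ'"


def fake_summarize(prompt: str) -> str:
    # Echoes the prompt so the retrieved context is visible in the output.
    return f"[summary based on] {prompt}"


print(answer_question("How many XYZ shares are held?", demo_conn, SCHEMA,
                      fake_generate_sql, fake_summarize))
```

Keeping the two LLM calls as separate parameters mirrors the point made next: each trip can be served by a different model.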

The choice of LLM used at every stage of the process highly impacts the accuracy, cost, and performance. Choosing a platform or technology that can allow you the flexibility to switch between LLMs within the same use case (multiple LLM trips for different tasks), or across different use cases, can be beneficial in optimizing the quality of the output, latency, and cost. We address the choice of LLM later in this post.

Solution building blocks

Now that we have highlighted the approach at a high level, let’s dive into the details, starting with the solution building blocks.

Amazon Bedrock

Amazon Bedrock is a fully managed service that offers a choice of high-performing FMs from leading companies, including AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon. Amazon Bedrock also offers a broad set of tools that are needed to build generative AI applications, simplify the development process, and maintain privacy and security. In addition, with Amazon Bedrock you can choose from various FM options, and you can further fine-tune the models privately using your own data to align models’ responses with your use case requirements. Amazon Bedrock is fully serverless, with no underlying infrastructure to manage, and extends access to available models through a single API. Lastly, Amazon Bedrock supports several security and privacy requirements, including HIPAA eligibility and GDPR compliance.

In Q4’s solution, we use Amazon Bedrock as a serverless, API-based, multi-foundation model building block. Because we intend to make multiple trips to the LLM within the same use case, based on the task type, we can choose the model that is most optimal for a specific task, be it SQL generation, validation, or summarization.

LangChain

LangChain is an open source integration and orchestration framework with a set of pre-built modules (I/O, retrieval, chains, and agents) that you can use to integrate and orchestrate tasks between FMs, data sources, and tools. The framework facilitates building generative AI applications that require orchestrating multiple steps to produce the desired output, without having to write code from scratch. LangChain supports Amazon Bedrock as a multi-foundation model API.

Specific to Q4’s use case, we use LangChain for coordinating and orchestrating tasks in our workflow, including connecting to data sources and LLMs. This approach has simplified our code because we can use the existing LangChain modules.

SQLDatabaseChain

SQLDatabaseChain is a LangChain chain that can be imported from langchain_experimental. SQLDatabaseChain makes it straightforward to create, implement, and run SQL queries, using its effective text-to-SQL conversions and implementations.

In our use case, we use SQLDatabaseChain in the SQL generation, simplifying and orchestrating interactions between the database and the LLM.
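As a rough sketch of what this wiring can look like, the following function builds a SQLDatabaseChain on top of a Bedrock-hosted model. It is an assumption-laden illustration, not Q4's code: the import paths (`langchain_community`, `langchain_experimental`) vary between LangChain releases, the `build_sql_chain` helper is a hypothetical name, and running it requires LangChain, boto3, and AWS credentials with Amazon Bedrock access.

```python
def build_sql_chain(db_uri: str, model_id: str = "anthropic.claude-v2"):
    """Return a SQLDatabaseChain that generates SQL with a Bedrock model."""
    # Imported lazily so this module still loads where LangChain is absent.
    # Package layout is an assumption; older releases expose these classes
    # under `langchain.llms` and `langchain.utilities` instead.
    from langchain_community.llms import Bedrock
    from langchain_community.utilities import SQLDatabase
    from langchain_experimental.sql import SQLDatabaseChain

    # Include a few sample rows per table in the schema text sent to the LLM.
    db = SQLDatabase.from_uri(db_uri, sample_rows_in_table_info=3)

    # Temperature 0 keeps SQL generation as deterministic as possible.
    llm = Bedrock(model_id=model_id, model_kwargs={"temperature": 0})

    # return_sql=True makes the chain return the generated SQL
    # instead of running it and answering in natural language.
    return SQLDatabaseChain.from_llm(llm, db, return_sql=True)


# Hypothetical usage (needs AWS credentials and a reachable database):
# chain = build_sql_chain("sqlite:///ownership.db")
# sql = chain.invoke({"query": "Which institutions hold the most shares?"})
```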

The dataset

Our structured dataset can reside in a SQL database, data lake, or data warehouse as long as we have support for SQL. In our solution, we can use any dataset type with SQL support; this should be abstracted from the solution and shouldn’t change the solution in any way.

Implementation details

Now that we have explored the solution approach, solution components, the choice of technology, and tools, we can put the pieces together. The following diagram highlights the end-to-end solution.

Let’s walk through the implementation details and the process flow.

Generate the SQL query

To simplify coding, we use existing frameworks. We use LangChain as an orchestration framework. We start with the input stage, where we receive the user question in natural language.

In this first stage, we take this input and generate an equivalent SQL that we can run against the database for context extraction. To generate SQL, we use SQLDatabaseChain, which relies on Amazon Bedrock for access to our desired LLM. With Amazon Bedrock, using a single API, we get access to a number of underlying LLMs and can pick the right one for each LLM trip we make. We first establish a connection to the database and retrieve the required table schema along with some sample rows from the tables we intend to use.

In our testing, we found 2–5 rows of table data to be sufficient to give enough information to the model without adding too much unnecessary overhead. Three rows were just enough to provide context, without overwhelming the model with too much input. In our use case, we started with Anthropic Claude V2. The model is known for its advanced reasoning and articulate contextual responses when provided with the right context and instructions. As part of the instructions, we can include more clarifying details to the LLM. For example, we can describe that column Comp_NAME stands for the company name. We now can construct the prompt by combining the user question as is, the database schema, three sample rows from the table we intend to use, and a set of instructions to generate the required SQL in clean SQL format without comments or additions.
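To make the prompt ingredients concrete, here is a minimal sketch of collecting the schema, three sample rows, and field explanations from a database. It assumes SQLite for portability; the `ownership` table and the `describe_table` and `build_context` helpers are hypothetical names used only for illustration, while `Comp_NAME` is the column mentioned above.

```python
import sqlite3


def describe_table(conn: sqlite3.Connection, table: str, sample_rows: int = 3) -> str:
    """Return the table's CREATE statement followed by a few sample rows."""
    ddl = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?",
        (table,),
    ).fetchone()
    if ddl is None:
        raise ValueError(f"unknown table: {table}")

    # The table name was validated against sqlite_master above,
    # so interpolating it here does not open an injection path.
    cursor = conn.execute(f'SELECT * FROM "{table}" LIMIT ?', (sample_rows,))
    header = " | ".join(col[0] for col in cursor.description)
    lines = [" | ".join(str(value) for value in row) for row in cursor.fetchall()]
    return "\n".join([ddl[0], "", header, *lines])


def build_context(conn: sqlite3.Connection, table: str, field_notes: dict) -> str:
    """Combine schema, sample rows, and human-readable field explanations."""
    notes = "\n".join(f"{col}: {meaning}" for col, meaning in field_notes.items())
    return f"{describe_table(conn, table)}\n\nField notes:\n{notes}"


# Hypothetical data for illustration only.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE ownership (Comp_NAME TEXT, shares INTEGER)")
demo.executemany(
    "INSERT INTO ownership VALUES (?, ?)",
    [(f"Company {i}", i * 100) for i in range(5)],
)
print(build_context(demo, "ownership", {"Comp_NAME": "company name"}))
```

Capping the sample at three rows keeps the prompt small, which matters for both latency and cost.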

All the input elements combined are considered as the model input prompt. A well-engineered input prompt that is tailored to the model’s preferred syntax highly impacts both the quality and performance of the output. The choice of model to use for a specific task is also important, not only because it impacts the output quality, but also because it has cost and performance implications.

We discuss model selection and prompt engineering and optimization later in this post, but it’s worth noting that for the query generation stage, we noticed that Claude Instant was able to produce comparable results, especially when the user question is well phrased and not as sophisticated. However, Claude V2 produced better results even with more complex and indirect user input. We learned that although in some cases Claude Instant may provide sufficient accuracy at a better latency and price point, our case for query generation was better suited for Claude V2.

Verify the SQL query

Our next step is to verify that the LLM has successfully generated the right query syntax and that the query makes contextual sense considering the database schemas and the example rows provided. For this verification step, we can revert to native query validation within SQLDatabaseChain, or we can run a second trip to the LLM including the query generated along with validation instruction.
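A lightweight, non-LLM syntax check can also be done directly against the database engine. The sketch below is one possible approach under stated assumptions, not the check built into SQLDatabaseChain: it assumes SQLite, uses `EXPLAIN` so the statement is compiled but not run, and restricts queries to plain `SELECT` statements. The `is_runnable_select` helper and the `holdings` table are hypothetical.

```python
import sqlite3


def is_runnable_select(conn: sqlite3.Connection, sql: str) -> bool:
    """Return True if `sql` is a SELECT that SQLite can compile against the schema."""
    # Only allow read-only SELECT statements. This deliberately rejects
    # other statement types (including WITH ... queries) for simplicity.
    if not sql.lstrip().lower().startswith("select"):
        return False
    try:
        # EXPLAIN compiles the statement without executing it, so syntax
        # errors and unknown tables or columns surface as exceptions.
        conn.execute(f"EXPLAIN {sql}")
        return True
    except (sqlite3.Error, sqlite3.Warning):
        # sqlite3.Warning covers multi-statement input on older Python versions.
        return False


# Hypothetical schema for illustration only.
check_conn = sqlite3.connect(":memory:")
check_conn.execute("CREATE TABLE holdings (holder TEXT, shares INTEGER)")
print(is_runnable_select(check_conn, "SELECT holder FROM holdings WHERE shares > 10"))
print(is_runnable_select(check_conn, "SELECT owner FROM holdings"))
```

A check like this catches malformed SQL and schema mismatches, but it cannot tell whether the query answers the user's question; that is where a second LLM trip can help.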

If we use an LLM for the validation step, we can use the same LLM as before (Claude V2) or a smaller, more performant LLM for a simpler task, such as Claude Instant. Because we’re using Amazon Bedrock, this should be a very simple adjustment. Using the same API, we can change the model name in our API call, which takes care of the change. It’s important to note that in most cases, a smaller LLM can provide better efficiency in both cost and latency and should be considered—as long as you’re getting the accuracy desired. In our case, testing proved the query generated to be consistently accurate and with the right syntax. Knowing that, we were able to skip this validation step and save on latency and cost.
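The model switch can be illustrated with a small sketch of an Amazon Bedrock runtime call. This is an assumption-based example rather than Q4's code: the helper names are hypothetical, the request body follows the Claude text-completion format, and the actual call requires boto3 and AWS credentials, so it is kept in a separate function that is not invoked here.

```python
import json

CLAUDE_V2 = "anthropic.claude-v2"
CLAUDE_INSTANT = "anthropic.claude-instant-v1"


def build_claude_request(model_id: str, prompt: str, max_tokens: int = 300) -> dict:
    """Build keyword arguments for the bedrock-runtime invoke_model call."""
    body = {
        # Claude text completions expect the human/assistant framing.
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": 0,
    }
    return {
        "modelId": model_id,  # the only field that changes between models
        "body": json.dumps(body),
        "contentType": "application/json",
        "accept": "application/json",
    }


def invoke(model_id: str, prompt: str) -> str:
    """Send the prompt to Bedrock (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the sketch loads without boto3

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(**build_claude_request(model_id, prompt))
    return json.loads(response["body"].read())["completion"]


# Same request, different model: only modelId differs.
generation_request = build_claude_request(CLAUDE_V2, "Write SQL for ...")
validation_request = build_claude_request(CLAUDE_INSTANT, "Write SQL for ...")
print(generation_request["modelId"], validation_request["modelId"])
```

Models from other providers use different request body formats, so switching across model families also means adapting the body, not only the model ID.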

Run the SQL query

Now that we have the verified SQL query, we can run the SQL query against the database and retrieve the relevant context. This should be a straightforward step.

We take the generated context, provide it to the LLM of our choice with the initial user question and some instruction, and ask the model to generate a contextual and articulate summary. We then present the generated summary to the user as an answer to the initial question, all aligned with the context extracted from our dataset.

For the LLM involved in the summarization step, we can use either Titan Text Express or Claude Instant. They would both present good options for the summarization task.

Application integration

The Q&A chatbot capability is one of Q4’s AI services. To ensure modularity and scalability, Q4 builds AI services as microservices that are accessible to Q4 applications through APIs. This API-based approach enables seamless integration with the Q4 Platform ecosystem and facilitates exposing the AI services’ capabilities to the full suite of platform applications.

The main objective of the AI services is to provide straightforward capabilities for retrieving data from any public or proprietary data source using natural language as input. In addition, the AI services provide additional layers of abstraction to ensure that functional and non-functional requirements, such as data privacy and security are met. The following diagram demonstrates the integration concept.

Implementation challenges

In addition to the challenges presented by the nature of the structured, numerical dataset that we discussed earlier, Q4 was faced with a number of other implementation challenges that needed to be addressed.

LLM selection and performance

Selecting the right LLM for the task is crucial because it directly impacts the quality of output as well as the performance (round trip latency). Here are some factors that play into the LLM selection process:

- Type of LLM – The way the FMs are architected and the initial data the model has been pre-trained on determine the types of tasks the LLM would be good at and how good it will be. For example, a text LLM would be good at text generation and summarization, whereas a text-to-image or image-to-text model would be more geared towards image analytics and generation tasks.

- LLM size – FM sizes are measured by the number of model parameters a particular model has, typically in billions for modern LLMs. Typically, the larger the model, the more expensive to initially train or subsequently fine-tune. On the other hand, in general, for the same model architecture, the larger the model is, the smarter we expect it to be in performing the type of task it is geared towards.

- LLM performance – Typically, the larger the model, the more time it takes to generate output, assuming you’re using the same compute and I/O parameters (prompt and output size). In addition, for the same model size, performance is highly impacted by how optimized your prompt is, the size of the I/O tokens, and the clarity and syntax of the prompt. A well-engineered prompt, along with an optimized I/O token size, can improve the model response time.

Therefore, when optimizing your task, consider the following best practices:

- Choose a model that is suitable for the task at hand

- Select the smallest model size that can produce the accuracy you’re looking for

- Optimize your prompt structure and be as specific as possible with the instructions in a way that is easy for the model to understand

- Use the smallest input prompt that can provide enough instruction and context to produce the accuracy level you’re looking for

- Limit the output size to the smallest size that can be meaningful for you and satisfy your output requirements

Taking the model selection and performance optimization factors into account, we went to work to optimize our SQL generation use case. After some testing, we noticed that, provided we have the right context and instructions, Claude Instant, with the same prompt data, would produce comparable quality of SQL as Claude V2 at a much better performance and price point. This stands true when the user input is more direct and simpler in nature. For more sophisticated input, Claude V2 was necessary to produce the desired accuracy.

Applying the same logic to the summarization task led us to conclude that using Claude Instant or Titan Text Express would produce the accuracy required at a much better performance point than if we use a larger model such as Claude V2. Titan Text Express also offered better price-performance, as we discussed earlier.

The orchestration challenge

We realized that there is a lot to orchestrate before we can get a meaningful output response for the user question. As shown in the solution overview, the process involved multiple database trips and multiple LLM trips that are intertwined. If we were to build from scratch, we would have had to make a significant investment in the undifferentiated heavy lifting just to get the basic code ready. We quickly pivoted to using LangChain as an orchestration framework, taking advantage of the power of the open source community, and reusing existing modules without reinventing the wheel.

The SQL challenge

We also realized that generating SQL is not as simple as context extraction mechanisms like semantic search or using embeddings. We need to first get the database schema and a few sample rows to include in our prompt to the LLM. There is also the SQL validation stage, where we needed to interact with both the database and the LLM. SQLDatabaseChain was the obvious choice of tool. Because it’s part of LangChain, it was straightforward to adapt, and now we can manage the SQL generation and verification assisted with the chain, minimizing the amount of work we needed to do.

Performance challenges

With the use of Claude V2, and after proper prompt engineering (which we discuss in the next section), we were able to produce high-quality SQL. Considering the quality of the SQL generated, we started to look at how much value the validation stage is actually adding. After further analyzing the results, it became clear that the quality of the SQL generated was consistently accurate in a way that made the cost/benefit of adding an SQL validation stage unfavorable. We ended up eliminating the SQL validation stage without negatively impacting the quality of our output and shaved off the SQL validation round trip time.

For the summarization step, we also moved to a more cost- and performance-efficient LLM: using Titan Text Express gave us better performance and cost-efficiency.

Further performance optimization involved fine-tuning the query generation process using efficient prompt engineering techniques. Rather than providing an abundance of tokens, the focus was on providing the least amount of input tokens, in the right syntax that the model is trained to understand, and with the minimal yet optimal set of instructions. We discuss this more in the next section—it’s an important topic that is applicable not only here but also in other use cases.

Prompt engineering and optimization

You can adjust Claude on Amazon Bedrock for various business use cases if the right prompt engineering techniques are employed. Claude mainly acts as a conversational assistant that utilizes a human/assistant format. Claude is trained to fill in text for the assistant role. Given the instructions and prompt completions desired, we can optimize our prompts for Claude using several techniques.

We start with a properly formatted prompt template that gives a valid completion, and then further optimize the responses by experimenting with various sets of inputs that are representative of real-world data. It’s recommended to gather many inputs while developing a prompt template. You can also use separate sets of prompt development data and test data.

Another way to optimize the Claude response is to experiment and iterate by adding rules, instructions, and useful optimizations. From these optimizations, you can view different types of completions by, for example, telling Claude to mention “I don’t know” to prevent hallucinations, thinking step by step, using prompt chaining, giving room to “think” as it generates responses, and double-checking for comprehension and accuracy.

Let’s use our query generation task and discuss some of the techniques we used to optimize our prompt. There were a few core elements that benefited our query generation efforts:

- Using the proper human/assistant syntax

- Utilizing XML tags (Claude respects and understands XML tags)

- Adding clear instructions for the model to prevent hallucination

The following generic example shows how we used the human/assistant syntax, applied XML tags, and added instructions to restrict the output to SQL and instruct the model to say “sorry, I am unable to help” if it can’t produce relevant SQL. The XML tags were used to frame the instructions, additional hints, database schema, additional table explanations, and example rows.
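A generic template along these lines might look like the following sketch. It is an illustrative reconstruction, not Q4's actual prompt: the tag names, the wording of the instructions, the `build_sql_prompt` helper, and the sample table are all assumptions made for this example.

```python
SQL_PROMPT_TEMPLATE = (
    "\n\nHuman: You are a SQL expert. Use the information inside the XML tags "
    "to write one SQL query that answers the question.\n\n"
    "<instructions>\n"
    "Return only the SQL query, with no comments or explanation.\n"
    "If you cannot produce relevant SQL, reply exactly: "
    "sorry, I am unable to help\n"
    "</instructions>\n\n"
    "<hints>\n{hints}\n</hints>\n\n"
    "<schema>\n{schema}\n</schema>\n\n"
    "<table_explanations>\n{explanations}\n</table_explanations>\n\n"
    "<example_rows>\n{example_rows}\n</example_rows>\n\n"
    "<question>\n{question}\n</question>\n\n"
    "Assistant:"
)


def build_sql_prompt(question, schema, example_rows, explanations="", hints=""):
    """Fill the human/assistant template with the query-generation context."""
    return SQL_PROMPT_TEMPLATE.format(
        question=question,
        schema=schema,
        example_rows=example_rows,
        explanations=explanations,
        hints=hints,
    )


# Hypothetical inputs for illustration only.
prompt = build_sql_prompt(
    question="Which company has the most shares held?",
    schema="CREATE TABLE ownership (Comp_NAME TEXT, shares INTEGER)",
    example_rows="Acme Corp | 1200\nGlobex | 800\nInitech | 450",
    explanations="Comp_NAME: company name",
    hints="Use SUM(shares) when aggregating across holders.",
)
print(prompt)
```

Wrapping each kind of context in its own tag lets the instructions refer to a section by name and keeps the schema, hints, and sample rows from blending into the question text.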

The final working solution

After we had addressed all the challenges identified during the proof of concept, we had fulfilled all the solution requirements. Q4 was satisfied with the quality of the SQL generated by the LLM. This stands true for simple tasks that required only a WHERE clause to filter the data, and also with more complex tasks that required context-based aggregations with GROUP BY and mathematical functions. The end-to-end latency of the overall solution came within what was defined as acceptable for the use case—single-digit seconds. This was all thanks to the choice of an optimal LLM at every stage, proper prompt engineering, eliminating the SQL verification step, and using an efficient LLM for the summarization step (Titan Text Express or Claude Instant).

It’s worth noting that using Amazon Bedrock as a fully managed service and the ability to have access to a suite of LLMs through the same API allowed for experimentation and seamless switching between LLMs by changing the model name in the API call. With this level of flexibility, Q4 was able to choose the most performant LLM for each LLM call based on the nature of the task, be it query generation, verification, or summarization.

Conclusion

There is no one solution that fits all use cases. In a RAG approach, the quality of the output highly depends on providing the right context. Extracting the right context is key, and every dataset is different with its unique characteristics.

In this post, we demonstrated that for numerical and structured datasets, using SQL to extract the context used for augmentation can lead to more favorable results. We also demonstrated that frameworks like LangChain can minimize the coding effort. Additionally, we discussed the need to be able to switch between LLMs within the same use case in order to achieve the most optimal accuracy, performance, and cost. Finally, we highlighted how Amazon Bedrock, being serverless and with a variety of LLMs under the hood, provides the flexibility needed to build secure, performant, and cost-optimized applications with the least amount of heavy lifting.

Start your journey towards building generative AI-enabled applications by identifying a use case of value to your business. SQL generation, as the Q4 team learned, can be a game changer in building smart applications that integrate with your data stores, unlocking revenue potential.

About the authors

Tamer Soliman is a Senior Solutions Architect at AWS. He helps Independent Software Vendor (ISV) customers innovate, build, and scale on AWS. He has over two decades of industry experience in consulting, training, and professional services. He is a multi-patent inventor with three granted patents, and his experience spans multiple technology domains including telecom, networking, application integration, AI/ML, and cloud deployments. He specializes in AWS Networking and has a profound passion for machine learning, AI, and Generative AI.

Mani Khanuja is a Tech Lead – Generative AI Specialists, author of the book Applied Machine Learning and High Performance Computing on AWS, and a member of the Board of Directors for Women in Manufacturing Education Foundation. She leads machine learning (ML) projects in various domains such as computer vision, natural language processing, and generative AI. She helps customers build, train, and deploy large machine learning models at scale. She speaks at internal and external conferences such as re:Invent, Women in Manufacturing West, YouTube webinars, and GHC 23. In her free time, she likes to go for long runs along the beach.

Stanislav Yeshchenko is a Software Architect at Q4 Inc. He has over a decade of industry experience in software development and system architecture. His diverse background, spanning roles such as Technical Lead and Senior Full Stack Developer, powers his contributions to advancing innovation of the Q4 Platform. Stanislav is dedicated to driving technical innovation and shaping strategic solutions in the field.

Introducing Gemini: our largest and most capable AI model

Gemini is our most capable and general model, built to be multimodal and optimized for three different sizes: Ultra, Pro and Nano.

Pixel 8 Pro — the first smartphone with AI built in — is now running Gemini Nano, plus more AI updates coming to the Pixel portfolio

New Feature Drop brings updates to your Pixel hardware. Plus, Gemini Nano now powers on-device generative AI features for Pixel 8 Pro.

Bard gets its biggest upgrade yet with Gemini

We’re starting to bring Gemini’s advanced capabilities into Bard.

Research Focus: Week of December 4, 2023

Welcome to Research Focus, a series of blog posts that highlights notable publications, events, code/datasets, new hires and other milestones from across the research community at Microsoft.

NEW RESEARCH

Leveraging Large Language Models for Automated Proof Synthesis in Rust

Formal verification can provably guarantee the correctness of critical system software, but the high proof burden has long hindered its wide adoption. Recently, large language models (LLMs) have shown success in code analysis and synthesis. In a recent paper: Leveraging Large Language Models for Automated Proof Synthesis in Rust, researchers from Microsoft present a combination of LLMs and static analysis to synthesize invariants, assertions, and other proof structures for a Rust-based formal verification framework called Verus.

In a few-shot setting, GPT-4 demonstrates impressive logical ability in generating postconditions and loop invariants, especially when analyzing short code snippets. However, GPT-4 does not consistently retain and propagate the full context information needed by Verus, a task that can be straightforwardly accomplished through static analysis. Based on these observations, the researchers developed a prototype based on OpenAI’s GPT-4 model. This prototype decomposes the verification task into multiple smaller ones, iteratively queries GPT-4, and combines its output with lightweight static analysis. Evaluating the prototype with a developer in the automation loop on 20 vector-manipulating programs showed that it significantly reduces human effort in writing entry-level proof code.

MICROSOFT RESEARCH PODCAST

Intern Insights: Dr. Madeleine Daepp with Jennifer Scurrell and Alejandro Cuevas

In this episode, PhD students Jennifer Scurrell and Alejandro Cuevas talk to Senior Researcher Dr. Madeleine Daepp. They discuss the internship culture at Microsoft Research, from opportunities to connect with researchers to the teamwork they say helped make it possible for them to succeed, and the impact they hope to have with their work.

NEW RESEARCH

Don’t Forget the User: It’s Time to Rethink Network Measurements

The goal of network measurement is to characterize how and how well a network is performing. This has traditionally meant a focus on the bits and bytes — low-level network metrics such as latency and throughput, which have the advantage of being objective but are limited in representativeness and reach. In a recent paper: Don’t Forget the User: It’s Time to Rethink Network Measurements, researchers from Microsoft argue that users also provide a rich and largely untapped source of implicit and explicit signals that could complement and expand the coverage of traditional measurement methods. Implicit feedback leverages user actions to indirectly infer network performance and the resulting quality of user experience. Explicit feedback leverages user input, typically provided offline, to expand the reach of network measurement, especially for newer ones.

The researchers analyze example scenarios, including capturing implicit feedback through user actions such as the user (un)muting the mic or turning the camera on or off in a large-scale conferencing service. These techniques complement existing measurement methods and open a broad set of research directions, ranging from rethinking measurement tools to designing user-centric networked systems and applications.
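As a rough illustration of how implicit feedback might be set beside a traditional metric, the Python sketch below groups sessions by measured latency and compares how often users toggled mute in each group. Everything in it is an assumption made for illustration: the function name `toggle_rate_by_latency`, the 150 ms threshold, and the session tuples are hypothetical, and the input values are synthetic. It does not reproduce the paper's analysis or suggest what real data would show.

```python
from statistics import mean
from typing import List, Optional, Tuple

# Each session is (latency in ms, mute toggles per minute). Hypothetical schema.
Session = Tuple[float, float]


def toggle_rate_by_latency(
    sessions: List[Session],
    latency_threshold_ms: float = 150.0,  # assumed cut-off, not from the paper
) -> Tuple[Optional[float], Optional[float]]:
    """Return the mean toggle rate for low-latency and high-latency sessions."""
    low = [rate for latency, rate in sessions if latency < latency_threshold_ms]
    high = [rate for latency, rate in sessions if latency >= latency_threshold_ms]
    # An empty group has no mean, so report None rather than raising.
    return (mean(low) if low else None, mean(high) if high else None)


if __name__ == "__main__":
    # Synthetic values chosen only to exercise the function.
    synthetic = [(40.0, 1.0), (90.0, 3.0), (200.0, 6.0), (320.0, 8.0)]
    print(toggle_rate_by_latency(synthetic))
```

A gap between the two averages would only be a starting point: user actions have many causes besides network conditions, so a signal like this complements low-level metrics and does not replace them.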

TECHNOLOGY UPDATE

Ghana 3D international telemedicine proof of concept study

A real-time 3D telemedicine system, leveraging Holoportation communication technology, was used to facilitate consultations with complex reconstructive patients before, during, and after an overseas surgical collaboration. The system was used in a proof-of-concept clinic in November 2022 between Canniesburn Plastic Surgery Unit, UK, and the National Reconstructive Plastic Surgery and Burns Centre, Korle Bu Teaching Hospital, Ghana.

Four patients in Ghana were followed through their patient journey (mandibular ameloblastoma, sarcoma thigh, maxillary tumor, sarcoma back). A new report: Ghana 3D Telemedicine International MDT: A Proof-of-concept study details the responses of the 13 participants (4 patients, 4 Ghana clinicians, 5 UK clinicians) who completed feedback on the 3D multidisciplinary team (MDT). Outcome measures were rated highly, with satisfaction at 84.31/100, perceived benefit at 4.54/5, overall quality at 127.3/147, and usability at 83.2/100. These results align closely with those previously published for high-income countries.

This novel technology has the potential to enhance overseas surgical visits in low-to-middle income countries through improved planning, informed discussion with patients, expert consensus on complex cases, and engagement with professionals who may be thousands of miles away.

The post Research Focus: Week of December 4, 2023 appeared first on Microsoft Research.