Transforming research ideas into meaningful impact is no small feat. It often requires the knowledge and experience of individuals from across disciplines and institutions. Collaborators, a new Microsoft Research Podcast series, explores the relationships—both expected and unexpected—behind the projects, products, and services being pursued and delivered by researchers at Microsoft and the diverse range of people they’re teaming up with.

Amid the ongoing surge of AI research, healthcare is emerging as a leading area for real-world transformation. From driving efficiency gains for clinicians to improving patient outcomes, AI is beginning to make a tangible impact. Thousands of scientific papers have explored AI systems capable of analyzing medical documents and images with unprecedented accuracy. The latest work goes even further, showing how healthcare agents can collaborate—with each other and with human doctors—embedding AI directly into clinical workflows.

In this discussion, we explore how teams across Microsoft are working together to generate advanced AI capabilities and solutions for developers and clinicians around the globe. Leading the conversation are Dr. Matthew Lungren, chief scientific officer for Microsoft Health and Life Sciences, and Jonathan Carlson, vice president and managing director of Microsoft Health Futures—two key leaders behind this collaboration. They’re joined by Smitha Saligrama, principal group engineering manager within Microsoft Health and Life Sciences, Will Guyman, group product manager within Microsoft Health and Life Sciences, and Cameron Runde, a senior strategy manager for Microsoft Research Health Futures—all of whom play crucial roles in turning AI breakthroughs into practical, life-saving innovations.

Together, these experts examine how Microsoft is helping integrate cutting-edge AI into healthcare workflows—saving time today, and lives tomorrow.

Learn more

Developing next-generation cancer care management with multi-agent orchestration (Source Blog, May 2025)

Healthcare Agent Orchestrator (GitHub)

Azure AI Foundry Labs (Homepage)

Transcript

[MUSIC] MATTHEW LUNGREN: You’re listening to Collaborators, a Microsoft Research podcast, showcasing the range of expertise that goes into transforming mind-blowing ideas into world-changing technologies. Despite the advancements in AI over the decades, generative AI exploded into view in 2022, when ChatGPT became the, sort of, internet browser for AI and became the fastest-adopted consumer software application in history.

JONATHAN CARLSON: From the beginning, healthcare stood out to us as an important opportunity for general reasoners to improve the lives and experiences of patients and providers. Indeed, in the past two years, there’s been an explosion of scientific papers looking at the application first of text reasoners in medicine, then multi-modal reasoners that can interpret medical images, and now, most recently, healthcare agents that can reason with each other. But even more impressive than the pace of research has been the surprisingly rapid diffusion of this technology into real-world clinical workflows.

LUNGREN: So today, we’ll talk about how our cross-company collaboration has shortened that gap and delivered advanced AI capabilities and solutions into the hands of developers and clinicians around the world, empowering everyone in health and life sciences to achieve more. I’m Doctor Matt Lungren, chief scientific officer for Microsoft Health and Life Sciences.

CARLSON: And I’m Jonathan Carlson, vice president and managing director of Microsoft Health Futures.

LUNGREN: And together we brought some key players leading in the space of AI and health care from across Microsoft. Our guests today are Smitha Saligrama, principal group engineering manager within Microsoft Health and Life Sciences, Will Guyman, group product manager within Microsoft Health and Life Sciences, and Cameron Runde, a senior strategy manager for Microsoft Health Futures.

CARLSON: We’ve asked these brilliant folks to join us because each of them represents a mission-critical group of cutting-edge stakeholders, scaling breakthroughs into purpose-built solutions and capabilities for health care.

LUNGREN: We’ll hear today how generative AI capabilities can unlock reasoning across every data type in medicine: text, images, waveforms, genomics. And further, how multi-agent frameworks in healthcare can accelerate complex workflows, in some cases acting as a specialist team member, safely secured inside the Microsoft 365 tools used by hundreds of millions of healthcare enterprise users across the world. The opportunity to save time today and lives tomorrow with AI has never been larger.

[MUSIC FADES] MATTHEW LUNGREN: Jonathan, you know, it’s been really interesting kind of observing Microsoft Research over the decades. I’ve, you know, been watching you guys in my prior academic career. You are always on the front of innovation, particularly in health.

JONATHAN CARLSON: I mean, it’s some of what’s in our DNA. I mean, we’ve been publishing in health and life sciences for two decades here. But when we launched Health Futures as a mission-focused lab about 7 or 8 years ago, we really started with the premise that the way to have impact was to really close the loop between, not just good ideas that get published, but good ideas that can actually be grounded in real problems that clinicians and scientists care about, that then allow us to actually go from that first proof of concept into an incubation, into getting real-world feedback that allows us to close that loop. And now with, you know, the HLS organization here as a product group, we have the opportunity to work really closely with you all to not just prove what’s possible in the clinic or in the lab, but actually start scaling that into the broader community.

CAMERON RUNDE: And one thing I’ll add here is that the problems that we’re trying to tackle in health care are extremely complex. And so, as Jonathan said, it’s really important that we come together and collaborate across disciplines as well as across the company of Microsoft and with our external collaborators, as well as across the whole industry.

CARLSON: So, Matt, back to you. What are you guys doing in the product group? How do you guys see these models getting into the clinic?

LUNGREN: You know, I think a lot of people, you know, think about AI as just, you know, maybe just even a few years old because of GPT and how that really captured the public’s consciousness. Right?

And so, you think about the speech-to-text technology of being able to dictate something, for a clinic note or for a visit, that was typically based on Nuance technology. And so there’s a lot of product understanding of the market, how to deliver something that clinicians will use, understanding the pain points and workflows and really that Health IT space, which is sometimes the third rail, I feel like with a lot of innovation in healthcare.

But beyond that, I mean, I think now that we have this really powerful engine of Microsoft and the platform capabilities, we’re seeing innovations on the healthcare side for data storage, data interoperability, with different types of medical data. You have new applications coming online, the ability, of course, to see generative AI now infused into the speech-to-text and becoming Dragon Copilot, which is something that has been, you know, tremendously received by the community.

Physicians are able to now just have a conversation with a patient. They turn to their computer and the note is ready for them. There’s no more this, we call it keyboard liberation. I don’t know if you heard that before. And that’s just been tremendous. And there’s so much more coming from that side. And then there’s other parts of the workflow that we also get engaged in — the diagnostic workflow.

So medical imaging, sharing images across different hospital systems, the list goes on. And so now when you move into AI, we feel like there’s a huge opportunity to deliver capabilities into the clinical workflow via the products and solutions we already have. But, I mean, well, now that we’ve kind of expanded our team to involve Azure and platform, we’re really able to now focus on the developers.

WILL GUYMAN: Yeah. And you’re always telling me as a doctor how frustrating it is to be spending time at the computer instead of with your patients. I think you told me, you know, 4,000 clicks a day for the typical doctor, which is tremendous. And something like Dragon Copilot can save that five minutes per patient. But it can also now take actions after the patient encounter so it can draft the after-visit summary.

It can order labs and medications for the referral. And that’s incredible. And we want to keep building on that. There’s so many other use cases across the ecosystem. And so that’s why in Azure AI Foundry, we have translated a lot of the research from Microsoft Research and made that available to developers to build and customize for their own applications.

SMITHA SALIGRAMA: Yeah. And as you were saying, in our transformation of moving from solutions to platforms and scaling solutions to multiple other scenarios, as we put our models in AI Foundry, we provide these developer capabilities like bring your own data and fine-tune these models and then apply them to scenarios that we couldn’t even imagine. So that’s kind of the platform play we’re scaling now.

LUNGREN: Well, I want to do a reality check because, you know, I think to us that are now really focused on technology, it seems like I’ve heard this story before, right? I remember even in my academic clinical days where it felt like technology was always the quick answer, and it felt like there was maybe a disconnect between what my problems were or what I think needed to be done versus the solutions that were created or offered to us. And I guess at some level, Jonathan, how do you think about this? Because to do things well in the science space is one thing, but then to also have it be something that actually drives health care innovation and practice and translation, it’s tricky, right?

CARLSON: Yeah. I mean, as you said, I think one of the core pathologies of Big Tech is we assume every problem is a technology problem. And that’s all it will take to solve the problem. And I think, look, I was trained as a computational biologist, and that sits in the awkward middle between biology and computation. And the thing that we always have to remember, the thing that we were very acutely aware of when we set out, was that we are not the experts. We do have, you know, you as an M.D., we have everybody on the team, we have biologists on the team.

But this is a big space. And the only way we’re going to have real impact, the only way we’re even going to pick the right problems to work on is if we really partner deeply, with providers, with EHR (electronic health records) vendors, with scientists, and really understand what’s important and again, get that feedback loop.

RUNDE: Yeah, I think we really need to ground the work that we do in the science itself. You need to understand the broader ecosystem and the broader landscape, across health care and life sciences, so that we can tackle the most important problems, not just the problems that we think are important. Because, as Jonathan said, we’re not the experts in health care and life sciences. And that’s really the secret sauce. When you have the clinical expertise come together with the technical expertise. That’s how you really accelerate health care.

CARLSON: When we really launched this mission, 7 or 8 years ago, we really came in with the premise of, if we decide to stop, we want to be sure the world cares. And the only way that’s going to be true is if we’re really deeply embedded with the people that matter: the patients, the providers, and the scientists.

LUNGREN: And now it really feels like this collaborative effort, you know, really can help start to extend that mission. Right. I think, you know, Will and Smitha, that we definitely feel the passion and the innovation. And we certainly benefit from those collaborations, too. But then we have these other partners and even customers, right, that we can start to tap into and have that flywheel keep spinning.

GUYMAN: Yeah. And the whole industry is an ecosystem. So, we have our own data sets at Microsoft Research that you’ve trained amazing AI models with. And those are in the catalog. But then you’ve also partnered with institutions like Providence or Paige AI. And those models are in the catalog with their data. And then there are third parties like Nvidia that have their own specialized proprietary data sets, and their models are there too. So, we have this ecosystem of open-source models. And maybe Smitha, you want to talk about how developers can actually customize these.

SALIGRAMA: Yeah. So we use the Azure AI Foundry ecosystem. Developers can feel at home if they’re using the AI Foundry. So they can look at the model cards that we publish as part of the models we publish, understand the use cases of these models, how to quickly bring up these APIs, and look at different use cases of how to apply these and even fine-tune these models with their own data. Right. And then use them for specific tasks that we couldn’t have even imagined.

LUNGREN: Yeah, it has been interesting to see. We have these health care models in the catalog, again, some that came from research, some that came from third parties and other product developers, and Azure’s kind of becoming the home base, I think, for a lot of health and life science developers. They’re seeing all the different modalities, all the different capabilities. And then in combination with Azure OpenAI, which as we know, is incredibly competent in lots of different use cases. How are you looking at the use cases, and what are you seeing folks use these models for as they come to the catalog and start sharing their discoveries or products?

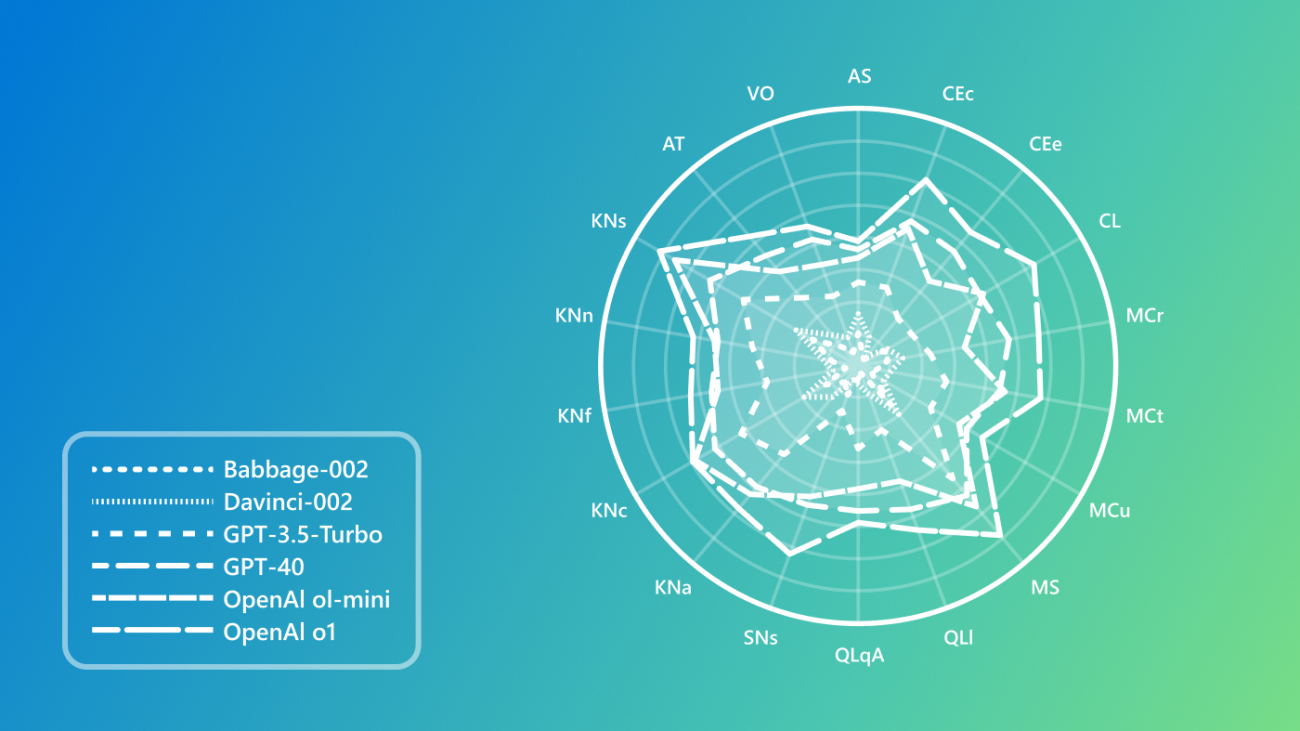

GUYMAN: Well, the general-purpose large language models are amazing for medical general reasoning. So Microsoft Research has shown that they can perform super well on, for example, the United States Medical Licensing Examination. They can exceed doctor performance if they’re just picking between different multiple-choice questions. But real medicine we know is messier. It doesn’t always start with the whole patient context provided as text in the prompt. You have to get the source data and that raw data is often non-text. The majority of it is non-text. It’s things like medical imaging, radiology, pathology, ophthalmology, dermatology. It goes on and on. And there’s endless signal data, lab data. And so all of these diverse data types need to be processed through specialized models because much of that data is not available on the public internet.

And that’s why we’re taking this partner approach, first party and third party models that can interpret all this kind of data and then connect them ultimately back to these general reasoners to reason over that.

LUNGREN: So, you know, I’ve been at this company for a while and, you know, familiar with kind of how long it takes, generally to get, you know, a really good research paper, do all the studies, do all the data analysis, and then go through the process of publishing, right, which takes, as, you know, a long time and it’s, you know, very rigorous.

And one of the things that struck me, last year, I think we, we started this big collaboration and, within a quarter, you had a Nature paper coming out from Microsoft Research, and that model that the Nature paper was describing was ready to be used by anyone on the Azure AI Foundry within that same quarter. It kind of blew my mind when I thought about it, you know, even though we were all, you know, working very hard to get that done. Any thoughts on that? I mean, has this ever happened in your career? And, you know, what’s the secret sauce to that?

CARLSON: Yeah, I mean, the time scale from research to product has been massively compressed. And I’d push that even further, which is to say, the reason why it took a quarter was because we were laying the railroad tracks as we were driving the train. We have examples right after that where we were launching on Foundry the same day we were publishing the paper.

And frankly, the review times are becoming longer than it takes to actually productize the models. I think there are two things going on that are really converging. One is that the overall ecosystem is converging on a relatively small number of patterns, and that gives us, as a tech company, a reason to go off and really make those patterns hardened in a way that allows not just us, but third parties as well, to really have a nice workflow to publish these models.

But the other is actually, I think, a change in how we work, you know, and for most of our history as an industrial research lab, we would do research and then we’d go pitch it to somebody and try and throw it over the fence. We’ve really built a much more integrated team. In fact, if you look at that Nature paper or any of the other papers, there’s folks from product teams. Many of you are on the papers along with our clinical collaborators.

RUNDE: Yeah. I think one thing that’s really important to note is that there’s a ton of different ways that you can have impact, right? So I like to think about phasing. In Health Futures at least, I like to think about phasing the work that we do. So first we have research, which is really early innovation. And the impact there is getting our technology and our tools out there and really sharing the learnings that we’ve had.

So that can be through publications like you mentioned. It can be through open-sourcing our models. And then you go to incubation. So, this is, I think, one of the newer spaces that we’re getting into, which is maybe that blurred line between research and product. Right. Which is, how do we take the tools and technologies that we’ve built and get them into the hands of users, typically through our partnerships?

Right. So, we partner very deeply and collaborate very deeply across the industry. And incubation is really important because we get that early feedback. We get an ability to pivot if we need to. And we also get the ability to see what types of impact our technology is having in the real world. And then lastly, when you think about scale, there’s tons of different ways that you can scale. We can scale third-party through our collaborators and really empower them to go to market to commercialize the things that we’ve built together.

You can also think about scaling internally, which is why I’m so thankful that we’ve created this flywheel between research and product, and a lot of the models that we’ve built that have gone through research, have gone through incubation, have been able to scale on the Azure AI Foundry. But that’s not really our expertise. Right? The scale piece in research, that’s research and incubation. Smitha, how do you think about scaling?

SALIGRAMA: So, there are several angles to scaling the state-of-the-art models we see from the research team. The first angle is the open sourcing, to get developer trust, with very generous commercial licenses so that they can use the models for their own use cases. The second is, we also allow them to customize these models, fine-tuning these models with their own data. So there are a lot of different angles to how we provide support and scale the state-of-the-art models we get from the research org.

GUYMAN: And as one example, you know, University of Wisconsin Health, you know, which Matt knows well. They took one of our models, which is highly versatile. They customized it in Foundry and they optimized it to reliably identify abnormal chest X-rays, the most common imaging procedure, so they could improve their turnaround time and triage quickly. And that’s just one example. But we have other partners like Sectra who are doing more operations use cases, automatically routing imaging to the radiologists, setting them up to be efficient. And then Paige AI is doing, you know, biomarker identification for diagnostics and new drug discovery. So, there are so many use cases that we have partners already building and customizing.

LUNGREN: The part that’s striking to me is just that, you know, we could all sit in a room and think about all the different ways someone might use these models on the catalog. And I’m still shocked at the stuff that people use them for and how effective they are. And I think part of that is, you know, again, we talk a lot about generative AI and healthcare and all the things you can do. Again, you know, in text, you referred to that earlier, and certainly off the shelf, there are really powerful applications. But there is, you know, kind of this tip of the iceberg effect where under the water, most of the data that we use to take care of our patients is not text. Right. It’s all the different other modalities. And I think that this has been an unlock, right, sort of taking these innovations from the community, putting them in this ecosystem, kind of a catalog, essentially. Right. And then allowing folks to kind of, you know, build and develop applications with all these different types of data. Again, I’ve been surprised at what I’m seeing.

CARLSON: This has been just one of the most profound shifts that’s happened in the last 12 months, really. I mean, two years ago we had general models in text that really shifted how we think about, I mean, natural language processing got totally upended by that. Turns out the same technology works for images as well. It doesn’t only allow you to automatically extract concepts from images, but allows you to align those image concepts with text concepts, which means that you can have a conversation with that image. And once you’re in that world, now you are in a place where you can start stitching together these multimodal models that really change how you can interact with the data, and how you can start getting more information out of the raw primary data that is part of the patient journey.

LUNGREN: Well, and we’re going to get to that because I think you just touched on something. And I want to re-emphasize stitching these things together. There are a lot of different ways to potentially do that. Right? There are ways that you can literally train the model end to end with adapters and all kinds of other early fusion approaches. All kinds of ways. But the word of, I guess, the year is going to be agents, and an agent is a very interesting term to think about how you might abstract away some of the components or the tasks that you want the model to accomplish in the midst of sort of a real human-to-model interaction. Can you talk a little bit more about how we’re thinking about agents in this platform approach?

GUYMAN: Well, this is our newest addition to the Azure AI Foundry. So there’s an agent catalog now where we have a set of pre-configured agents for health care. And then we also have a multi-agent orchestrator that can jump-start the process of developers building their own multi-agent workflows to tackle some complex real-world tasks that clinicians have to deal with. And these agents basically combine a general reasoner, like a large language model, like a GPT-4o or an o-series model, with a specialized model, like a model that understands radiology or pathology, with domain-specific knowledge and tools. So the knowledge might be, you know, public guidelines or, you know, medical journals or your own private data from your EHR or medical imaging system, and then tools like a code interpreter to deal with all of the numeric data, or tools that the clinicians are using today, like PowerPoint, Word, Teams, etc. And so we’re allowing developers to build and customize each of these agents in Foundry and then deploy them into their workflows.
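To make the orchestrator pattern described above a little more concrete, here is a minimal Python sketch. It is not the Healthcare Agent Orchestrator or any Azure AI Foundry API: the `Agent` and `Orchestrator` classes, the keyword lists, and the canned outputs are all hypothetical, and simple keyword matching stands in for the general reasoner that would interpret a clinician's request.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    """One specialist agent: trigger keywords plus a stand-in specialized model."""
    name: str
    keywords: List[str]
    specialist: Callable[[str], str]  # placeholder for a radiology/pathology model

    def handle(self, request: str) -> str:
        return f"[{self.name}] {self.specialist(request)}"


class Orchestrator:
    """Routes a request to every agent whose keywords appear in it.

    Keyword matching is a crude stand-in for a general reasoner that
    would interpret the clinician's intent from natural language.
    """

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents

    def route(self, request: str) -> List[str]:
        text = request.lower()
        replies = [
            agent.handle(request)
            for agent in self.agents
            if any(keyword in text for keyword in agent.keywords)
        ]
        return replies or ["[orchestrator] no specialist agent matched"]


# Hypothetical agents with canned outputs; real ones would call actual models.
radiology = Agent("radiology", ["x-ray", "mri"], lambda r: "imaging summary placeholder")
pathology = Agent("pathology", ["biopsy", "slide"], lambda r: "pathology summary placeholder")
orchestrator = Orchestrator([radiology, pathology])

for reply in orchestrator.route("Compare the chest x-ray with the biopsy slide"):
    print(reply)
```

The point of the sketch is the separation of concerns: each agent can be developed and swapped independently, while the orchestrator only decides who should respond.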

LUNGREN: And I really like that concept because, you know, from the user persona side, I think about myself as a user. How am I going to interact with these agents? Where does it naturally fit? And, you know, I’ve seen some of the demonstrations and some of the work that’s going on with Stanford in particular, showing that, literally in a Teams chat, I can have my clinician colleagues and I can have specialized health care agents that kind of interact, like I’m interacting with a human on a chat.

It is a completely mind-blowing thing for me. And it’s a light bulb moment for me, too. I wonder, what have we heard from folks that have, you know, tried out this health care agent orchestrator in this kind of deployment environment via Teams?

GUYMAN: Well, someone joked, you know, are you sure you’re not using Teams because you work at Microsoft? [LAUGHS] But then we actually were meeting with one of the radiologists at one of our partners, and they said that that morning they had just done a Teams meeting, where they had met with other specialists to talk about a patient’s cancer case and were coming up with a treatment plan.

And that was the light bulb moment for us. We realized, actually, Teams is already being used by physicians as an internal communication tool, as a tool to get work done. And especially since the pandemic, a lot of the meetings moved to virtual and telemedicine. And so it’s a great distribution channel for AI, and it has often been a struggle for AI to actually get in the hands of clinicians. And so now we’re allowing developers to build and then deploy very easily and extend it into their own workflows.

CARLSON: I think that’s such an important point. I mean, one of the really important concepts in computer science is an application programming interface, some set of rules that allow two applications to talk to each other. One of the big pushes, really important pushes, in medicine has been standards, data standards and APIs that allow these systems to talk to each other, and yet still we end up with these silos. There’s silos of data. There’s silos of applications.

And just like when you and I work on our phone, we have to go back and forth between applications. One of the things that I think agents do is that it takes the idea that now you can use language to understand intent and effectively program an interface, and it creates a whole new abstraction layer that allows us to simplify the interaction between not just humans and the endpoint, but also for developers.

It allows us to have this abstraction layer that lets different developers focus on different types of models, and yet stitch them all together in a very, very natural way, not just for the users, but for the ability to actually deploy those models.

SALIGRAMA: Just to add to what Jonathan was mentioning, the other cool thing about the Microsoft Teams user interface is it’s also enterprise ready.

RUNDE: And one important thing that we’re thinking about, is exactly this from the very early research through incubation and then to scale, obviously. Right. And so early on in research, we are actively working with our partners and our collaborators to make sure that we have the right data privacy and consent in place. We’re doing this in incubation as well. And then obviously in scale. Yep.

LUNGREN: So, I think AI has always been thought of as a savior kind of technology. We talked a little bit about how there’s been some ups and downs in terms of the ability for technology to be effective in health care. At the same time, we’re seeing a lot of new innovations that are really making a difference. But then we kind of get, you know, we talked about agents a little bit. It feels like we’re maybe abstracting too far. Maybe it’s things are going too fast, almost. What makes this different? I mean, in your mind is this truly a logical next step or is it going to take some time?

CARLSON: I think there’s a couple things that have happened. I think first, on just a pure technology. What led to ChatGPT? And I like to think of really three major breakthroughs.

The first was new mathematical concepts of attention, which really means that we now have a way that a machine can figure out which parts of the context it should actually focus on, just the way our brains do. Right? I mean, if you’re a clinician and somebody is talking to you, the majority of that conversation is not relevant for the diagnosis. But you know how to zoom in on the parts that matter. That’s a super powerful mathematical concept. The second one is this idea of self-supervision. So, I think one of the fundamental problems of machine learning has been that you have to train on labeled training data and labels are expensive, which means data sets are small, which means the final models are very narrow and brittle. And the idea of self-supervision is that you can just get a model to automatically learn concepts, and in language that is just: predict the next word. And what’s important about that is that it leads to models that can actually manipulate and understand really messy text and pull out what’s important about that, and then stitch that back together in interesting ways.

And the third concept, that came out of those first two, was just the observation of scale. And that’s that more is better: more data, more compute, bigger models. And that really leads to a reason to keep investing and for these models to keep getting better. So that, as a groundwork, that’s what led to ChatGPT. That’s what led to our ability now to not just have rule-based systems or simple machine learning based systems to take a messy EHR record, say, and pull out a couple concepts.
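As a rough illustration of the attention idea described above, here is a minimal Python sketch of scaled dot-product attention for a single query, the standard formulation used in transformer models. The toy vectors and the function names `softmax` and `attend` are illustrative assumptions, not anything referenced in the conversation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Each key is scored against the query; the scores become weights,
    and the output is the weighted average of the value vectors.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Toy context of three items; the query resembles the first and third keys.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

weights, output = attend(query, keys, values)
print(weights)  # the second item receives the smallest weight
print(output)
```

The weights are the "zooming in": items whose keys match the query dominate the output, and poorly matching items contribute very little.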

But to really feed the whole thing in and say, okay, I need you to figure out which concepts are in here. And is this particular attribute there, for example. That’s now led to the next breakthrough, which is all those core ideas apply to images as well. They apply to proteins, to DNA. And so we’re starting to see models that understand images and the concepts of images, and can actually map those back to text as well.

So, you can look at a pathology image and say, not just at the cell, but it appears that there’s some sort of cancer in this particular tissue there. And then you take those two things together and you layer on the fact that now you have a model, or a set of models, that can understand intent, can understand human concepts and biomedical concepts, and you can start stitching them together into specialized agents that can actually reason with each other, which at some level gives you an API as a developer to say, okay, I need to focus on a pathology model and get this really, really sound while somebody else is focusing on a radiology model, but now allows us to stitch these all together with a user interface that we can now talk to through natural language.

RUNDE: I’d like to double click a little bit on that medical abstraction piece that you mentioned. Just the amount of data, clinical data that there is for each individual patient. Let’s think about cancer patients for a second to make this real. Right. For every cancer patient, it could take a couple of hours to structure their information. And why is that important? Because, you have to get that information in a structured way and abstract relevant information to be able to unlock precision health applications right, for each patient. So, to be able to match them to a trial, right, someone has to sit there and go through all of the clinical notes from their entire patient care journey, from the beginning to the end. And that’s not scalable. And so one thing that we’ve been doing in an active project that we’ve been working on with a handful of our partners, but Providence specifically, I’ll call out, is using AI to actually abstract and curate that information. So that gives time back to the health care provider to spend with patients, instead of spending all their time curating this information.

And this is super important because it sets the scene and the backbone for all those precision health applications. Like I mentioned, clinical trial matching, tumor boards is another really important example here. Maybe Matt, you can talk to that a little bit.

LUNGREN: It’s a great example. And you know, it’s so funny. We’ve talked about this use case, and, you know, the initial lighthouse use case for the health care agent orchestrator was a tumor board setting. And I remember when we first started working with some of the partners on this, I think we were, you know, under a research kind of lens, thinking about what new diagnoses it could come up with or what new insights it might have. And what was a really key moment for us, I think, was noticing that we had developed an agent that can take all of the multimodal data about a patient’s chart, organize it in a timeline, in chronological fashion, and then allow folks to click on different parts of the timeline to ground it back to the note. And just that, which doesn’t sound like a really interesting research paper, was mind-blowing for clinicians who, again, as you said, spend a great deal of time, often outside of the typical work hours, trying to organize these patient records in order to go present to a tumor board.

And a tumor board is a critical meeting that happens at many cancer centers where specialists all get together, come with their perspective, and make a comment on what would be the best next step in treatment. But the background in preparing for that is, you know, again, organizing the data. But to your point, also, what are the clinical trials that are active? There are thousands of clinical trials. There are hundreds added every day. How can anyone keep up with that? And these are the kinds of use cases that start to bubble up. And you realize that a technology that understands concepts and context and can reason over vast amounts of data with a language interface, that is a powerful tool. Even before we get to some of the, you know, unlocking new insights and even precision medicine, this is that idea of saving time before lives to me. And there’s an enormous amount of undifferentiated heavy lifting that happens in health care that these agents and these kinds of workflows can start to unlock.

GUYMAN: And we’ve packaged these agents, the manual abstraction work that, you know, manually takes hours. Now we have an agent. It’s in Foundry along with the clinical trial matching agent, which I think at Providence you showed could double the match rate over the baseline that they were using by using the AI for multiple data sources. So, we have that and then we have this orchestration that is using this really neat technology from Microsoft Research: Semantic Kernel, Magentic-One, OmniParser. These are technologies that are good at figuring out which agent to use for a given task. So a clinician who’s used to working with other specialists, like a radiologist, a pathologist, a surgeon, they can now also consult these specialist agents who are experts in their domain and there’s shared memory across the agents.

There’s turn taking, there’s negotiation between the agents. So, there’s this really interesting system that’s emerging. And again, this is all possible to be used through Teams. And there’s some great extensibility as well. We’ve been talking about that and working on some cool tools.

SALIGRAMA: Yeah. Yeah. No, I think if I have to geek out a little bit on how all these agentic orchestrations are coming up, like, I’ve been in software engineering for decades, it’s kind of a next version of distributed systems where you have these services that talk to each other. It’s a more natural way because LLMs are giving these natural ways, instead of structured API ways, of conversing. We have these agents which can naturally understand how to talk to each other. Right. So this is like the next evolution of our systems now. And the way we’re packaging all of this is multiple ways based on all the standards and innovation that’s happening in this space. So, first of all, we are building these agents that are very good at specific tasks, like Will was saying, like a trial matching agent or patient timeline agents.

So, we take all of these, and then we package it in a workflow and an orchestration. We use the standards, some of these coming from research: Semantic Kernel, Magentic-One. And then, all of these also allow us to extend these agents with custom agents that can be plugged in. So, we are open sourcing the entire agent orchestration in AI Foundry templates, so that developers can extend their own agents, and make their own workflows out of it. So, a lot of cool innovation happening to apply this technology to specific scenarios and workflows.

LUNGREN: Well, I was going to ask you, like, so as part of that extension. So, like, you know, folks can say, hey, I have maybe a really specific part of my workflow that I want to use some agents for, maybe one of the agents that can do PubMed literature search, for example. But then there’s also agents that come in from the outside, you know, sort of like, I can imagine a software company or AI company that has a built-in agent that plugs in as well.

SALIGRAMA: Yeah. Yeah, absolutely. So, you can bring your own agent. And then we have these standard ways of communicating with agents and integrating with the orchestration language so you can bring your own agent and extend this health care agent orchestrator to your own needs.
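
[EDITOR’S NOTE: The "bring your own agent" pattern described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Agent` and `Orchestrator` classes, the agent names, and the keyword-based routing are hypothetical stand-ins, and none of this is the Semantic Kernel, Magentic-One, or healthcare agent orchestrator API. A real orchestrator would likely use a language model, not keyword overlap, to choose an agent.]

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Hypothetical specialist agent: a name, routing keywords, and a handler."""
    name: str
    keywords: List[str]
    handle: Callable[[str, List[str]], str]  # (question, shared memory) -> answer


@dataclass
class Orchestrator:
    """Toy orchestrator: picks one agent per question and keeps shared memory."""
    agents: Dict[str, Agent] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)  # visible to every agent

    def register(self, agent: Agent) -> None:
        # "Bring your own agent": anything with the Agent shape can plug in.
        self.agents[agent.name] = agent

    def route(self, question: str) -> Agent:
        # Stand-in for model-based selection: score agents by keyword overlap.
        words = set(re.findall(r"[a-z]+", question.lower()))
        scored = [(len(words & set(a.keywords)), a) for a in self.agents.values()]
        best_score, best_agent = max(scored, key=lambda pair: pair[0], default=(0, None))
        if best_agent is None or best_score == 0:
            raise LookupError("no registered agent matches this question")
        return best_agent

    def ask(self, question: str) -> str:
        agent = self.route(question)
        answer = agent.handle(question, self.memory)
        self.memory.append(f"{agent.name}: {answer}")  # record the turn for others
        return answer


def build_demo_orchestrator() -> Orchestrator:
    """Registers two made-up agents so the routing can be exercised."""
    orch = Orchestrator()
    orch.register(Agent("PatientTimeline", ["timeline", "history", "chronological"],
                        lambda q, mem: "timeline built"))
    orch.register(Agent("TrialMatching", ["trial", "trials", "eligible"],
                        lambda q, mem: f"matched using {len(mem)} prior notes"))
    return orch


if __name__ == "__main__":
    demo = build_demo_orchestrator()
    print(demo.ask("Build a timeline of this patient's history"))
    print(demo.ask("Which trials is the patient eligible for?"))
```

[The second question is answered by a different agent, which can see what the first agent wrote to shared memory. That is the only point the sketch tries to make.]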

LUNGREN: I can just think of, like, in a group chat, like a bunch of different specialist agents. And I really would want an orchestrator to help find the right tool, to your point earlier, because I’m guessing this ecosystem is going to expand quickly. Yeah. And I may not know which tool is best for which question. I just want to ask the question. Right.

SALIGRAMA: Yeah. Yeah.

CARLSON: Well, I think to that point, too, I mean, you said an important point here, which is tools, and these are not necessarily just AI tools. Right? I mean, we’ve known this for a while, right? LLMs are not very good at math, but you can have it use a calculator and then it works very well. And you know, you guys both brought up the universal medical abstraction a couple times.

And one of the things that I find so powerful about that is we’ve long had this vision within the precision health community that we should be able to have a learning hospital system. We should be able to actually learn from the actual real clinical experiences that are happening every day, so that we can stop practicing medicine based off averages.

There’s a lot of work that’s gone on for the last 20 years about how to actually do causal inference. That’s not an AI question. That’s a statistical question. The bottleneck, the reason why we haven’t been able to do that is because most of that information is locked up in unstructured text. And these other tools need essentially a table.

And so now you can decompose this problem, say, well, what if I can use AI not to get to the causal answer, but to just structure the information. So now I can put it into the causal inference tool. And these sorts of patterns I think again become very, not just powerful for a programmer, but they start pulling together different specialties. And I think we’ll really see an acceleration, really, of collaboration across disciplines because of this.
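
[EDITOR’S NOTE: The decomposition described above, where AI only structures the information and a separate statistical tool consumes the resulting table, can be sketched as follows. This is an illustration under stated assumptions: the notes are made-up toy text, not patient data; `abstract_note` is a hypothetical regex stand-in for an AI abstraction model; and the per-treatment response rate is a simple descriptive summary standing in for a real causal inference tool, which it is not.]

```python
import re
from collections import defaultdict
from typing import Dict, List, Optional

# Toy, made-up notes for illustration only; not real patient data.
NOTES = [
    "Pt started on drug A. Follow-up imaging shows response.",
    "Patient received drug B; no response at 3 months.",
    "Drug A initiated. No response noted on scan.",
    "Treated with drug A, partial response documented.",
]


def abstract_note(note: str) -> Optional[Dict[str, object]]:
    """Stand-in for the AI abstraction step: free text -> one structured row."""
    drug = re.search(r"drug ([A-Z])\b", note, flags=re.IGNORECASE)
    if drug is None:
        return None  # nothing to abstract from this note
    # Crude rule: treat the patient as a responder unless "no response" appears.
    responded = re.search(r"\bno response\b", note, flags=re.IGNORECASE) is None
    return {"treatment": drug.group(1).upper(), "responded": responded}


def build_table(notes: List[str]) -> List[Dict[str, object]]:
    """Turns unstructured notes into the table that downstream tools expect."""
    rows = (abstract_note(n) for n in notes)
    return [r for r in rows if r is not None]


def response_rate_by_treatment(rows: List[Dict[str, object]]) -> Dict[str, float]:
    """Downstream statistical step; it only ever sees the structured table."""
    counts = defaultdict(lambda: [0, 0])  # treatment -> [responders, total]
    for row in rows:
        counts[row["treatment"]][0] += int(bool(row["responded"]))
        counts[row["treatment"]][1] += 1
    return {t: responders / total for t, (responders, total) in counts.items()}


if __name__ == "__main__":
    table = build_table(NOTES)
    print(table)
    print(response_rate_by_treatment(table))
```

[The design point is the seam between the two functions: the abstraction step can be swapped for a stronger model without changing the statistical step, because the only contract between them is the table.]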

CARLSON: So, when I joined Microsoft Research 18 years ago, I was doing work in computational biology. And I would always have to answer the question: why is Microsoft in biomedicine? And I would always kind of joke saying, well, it is. We sell Office and Windows to every health care system in the world. We’re already in the space. And it really struck me to now see that we’ve actually come full circle. And now you can actually connect in Teams, Word, PowerPoint, which are these tools that everybody uses every day, but they’re actually now specialize-able through these agents. Can you guys talk a little bit about what that looks like from a developer perspective? How can provider groups actually start playing with this and see this come to life?

SALIGRAMA: A lot of healthcare organizations already use Microsoft productivity tools, as you mentioned. So, if they ask the developers to build these agents, and use our healthcare orchestrations to plug in these agents and expose these in these productivity tools, they will get access to all these healthcare workers. So the healthcare agent orchestrator we have today integrates with Microsoft Teams, and it showcases an example of how you can at (@) mention these agents and talk to them like you were talking to another person in a Teams chat. And then it also provides examples of these agents and how they can use these productivity tools. One of the examples we have there is how they can summarize the assessments of this whole chat into a Word Doc, or even convert that into a PowerPoint presentation, for later on.

CARLSON: One of the things that has struck me is how easy it is to do. I mean, Will, I don’t know if you’ve worked with folks that have gone from 0 to 60, like, how fast? What does that look like?

GUYMAN: Yeah, it’s funny for us, the technology to transfer all this context into a Word Document or PowerPoint presentation for a doctor to take to a meeting is relatively straightforward compared to the complicated clinical trial matching multimodal processing. The feedback has been tremendous in terms of, wow, that saves so much time to have this organized report that then I can show up to a meeting with and the agents can come with me to that meeting because they’re literally having a Teams meeting, often with other human specialists. And the agents can be there and ask and answer questions and fact check and source all the right information on the fly. So, there’s a nice integration into these existing tools.

LUNGREN: We worked with several different centers just to kind of understand, you know, where this might be useful. And, like, as I think we talked about before, the ideas that we’ve come up with, again, this is a great one because it’s complex. It’s kind of hairy. There’s a lot of things happening under the hood that don’t necessarily require a medical license to do, right, to prepare for a tumor board and to organize data. But, it’s fascinating, actually. So, you know, folks have come up with ideas of, could I have an agent that can operate an MRI machine, and I can ask the agent to change some parameters or redo a protocol. We thought that was a pretty powerful use case. We’ve had others that have just said, you know, I really want to have a specific agent that’s able to kind of act like deep research does for the consumer side, but based on the context of my patient, so that it can search all the literature and pull the data in the papers that are relevant to this case. And the list goes on and on from operations all the way to clinical, you know, sort of decision making at some level. And I think that the research community that’s going to sprout around this will help us, guide us, I guess, to see what are the most high-impact use cases. Where is this effective? And maybe where it’s not effective.

But to me, the part that makes me so, I guess excited about this is just that I don’t have to think about, okay, well, then we have to figure out Health IT. Because it’s always, you know, we always have great ideas and research, and it always feels like there’s such a huge chasm to get it in front of the health care workers that might want to test this out. And it feels like, again, this productivity tool use case again with the enterprise security, the possibility for bringing in third parties to contribute really does feel like it’s a new surface area for innovation.

CARLSON: Yeah, I love that. Look. Let me end by putting you all on the spot. So, in three years, multimodal agents will do what? Matt, I’ll start with you.

LUNGREN: I am convinced that it’s going to save a massive amount of time before it saves many lives.

RUNDE: I’ll focus on the patient care journey and diagnostic journey. I think it will kind of transform that process for the patient itself and shorten that process.

GUYMAN: Yeah, I think we’ve seen already papers recently showing that different modalities surfaced complementary information. And so we’ll see kind of this AI and these agents becoming an essential companion to the physician, surfacing insights that would have been overlooked otherwise.

SALIGRAMA: And similar to what you guys were saying, agents will become important assistants to healthcare workers, reducing a lot of documentation and workflow, excess work they have to do.

CARLSON: I love that. And I guess for my part, I think really what we’re going to see is a massive unleash of creativity. We’ve had a lot of folks that have been innovating in this space, but they haven’t had a way to actually get it into the hands of early adopters. And I think we’re going to see that really lead to an explosion of creativity across the ecosystem.

LUNGREN: So, where do we get started? Like where are the developers who are listening to this? The folks that are at, you know, labs, research labs and developing health care solutions. Where do they go to get started with the Foundry, the models we’ve talked about, the healthcare agent orchestrator. Where do they go?

GUYMAN: So AI.azure.com is the AI Foundry. It’s a website you can go to as a developer. You can sign in with your Azure subscription, get your Azure account, your own VM, all that stuff. And you have an agent catalog, the model catalog. You can start from there. There is documentation and templates that you can then deploy to Teams or other applications.

LUNGREN: And tutorials are coming. Right. We have recordings of tutorials. We’ll have Hackathons, some sessions and then more to come. Yeah, we’re really excited.

[MUSIC]

LUNGREN: Thank you so much, guys, for joining us.

CARLSON: Yes. Yeah. Thanks.

SALIGRAMA: Thanks for having us.

[MUSIC FADES]

The post Collaborators: Healthcare Innovation to Impact appeared first on Microsoft Research.