[MUSIC FADES]

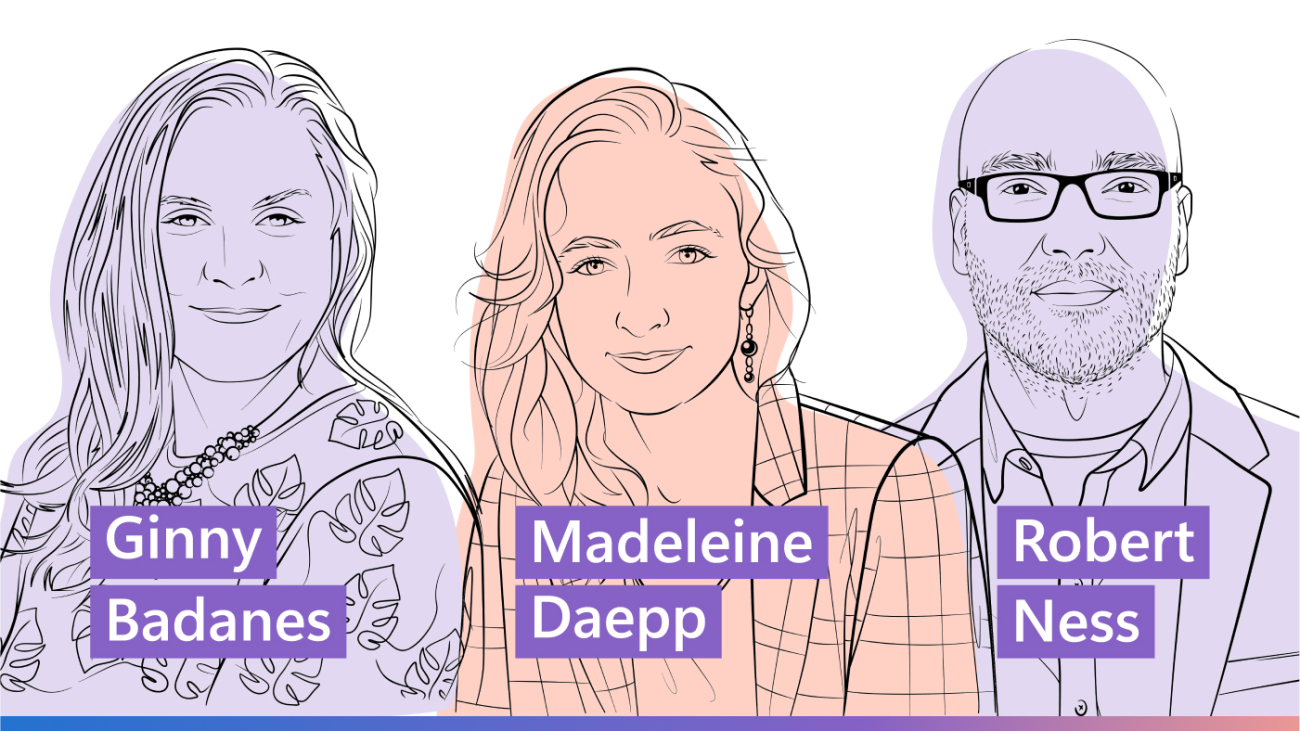

I’m your guest host, Ginny Badanes, and I lead Microsoft’s Democracy Forward program, where we’ve spent the past year deeply engaged in supporting democratic elections around the world, including the recent US elections. We have been working on everything from raising awareness of nation-state propaganda efforts to helping campaigns and election officials prepare for deepfakes to protecting political campaigns from cyberattacks. Today, I’m joined by two researchers who have also been diving deep into the impact of generative AI on democracy.

Microsoft senior researchers Madeleine Daepp and Robert Osazuwa Ness are studying generative AI’s influence in the political sphere with the goal of making AI systems more robust against misuse while supporting the development of AI tools that can strengthen democratic processes and systems. They spent time in Taiwan and India earlier this year, where both had big democratic elections. Madeleine and Robert, welcome to the podcast!

MADELEINE DAEPP: Thanks for having us.

ROBERT OSAZUWA NESS: Thanks for having us.

BADANES: So I have so many questions for you all—from how you conducted your research to what you’ve learned—and I’m really interested in what you think comes next. But first, let’s talk about how you got involved in this in the first place. Could you both start by telling me a little bit about your backgrounds and just what got you into AI research in the first place?

DAEPP: Sure. So I’m a senior researcher here at Microsoft Research in the Special Projects team. But I did my PhD at MIT in urban studies and planning. And I think a lot of folks hear that field and think, oh, you know, housing, like upzoning housing and figuring out transportation systems. But it really is a field that’s about little “d” democracy, right. About how people make choices about shared public spaces every single day. You know, I joined Microsoft first off to run this, sort of, technology deployment in the city of Chicago, running a low-cost air-quality-sensor network for the city. And when GPT-4 came out, you know, first ChatGPT, and then we, sort of, had this big recognition of, sort of, how well this technology could do in summarizing and in representing opinions and in making sense of big unstructured datasets, right. I got actually very excited. Like, I thought this could be used for town planning processes. [LAUGHS] Like, I thought we could … I had a whole project with a wonderful intern, Eva Maxfield Brown, looking at, can we summarize planning documents using AI? Can we build out policies from conversations that people have in shared public spaces? And so that was very much the impetus for thinking about how to apply and build things with this amazing new technology in these spaces.

BADANES: Robert, I think your background is a little bit different, yet you guys ended up in a similar place. So how did you get there?

NESS: Yeah, so I’m also on Special Projects, Microsoft Research. My work is focusing on large language models, LLMs. And, you know, so I focus on making these models more reliable and controllable in real-world applications. And my PhD is in statistics. And so I focus a lot on using just basic bread-and-butter statistical methods to try and control and understand LLM behavior. So currently, for example, I’m leading a team of engineers and running experiments designed to find ways to enhance a graphical approach to combining information retrieval in large language models. I work on statistical tests for testing significance of adversarial attacks on these models.

BADANES: Wow.

NESS: So, for example, if you find a way to trick one of these models into doing something it’s not supposed to do, I make sure that it’s not, like, a random fluke; that it’s something that’s reproducible. And I also work at this intersection between generative AI and, you know, Bayesian stuff, causal inference stuff. And so I came at looking at this democracy work through an alignment lens. So alignment is this task in AI of making sure these models align with human values and goals. And what I was seeing was a lot of research in the alignment space was viewing it as a technical problem. And, you know, as a statistician, we’re trained to consult, right. Like, to go to the actual stakeholders and say, hey, what are your goals? What are your values? And so this democracy work was an opportunity to do that in Microsoft Research and connected with Madeleine. So she was planning to go to Taiwan, and kind of from a past life, I wanted to become a trade economist and learned Mandarin. And so I speak fluent Mandarin and seemed like a good matchup of our skill sets …

BADANES: Yeah.

NESS: … and interests. And so that’s, kind of, how we got started.

BADANES: So, Madeleine, you brought the two of you together, but what started it for you? This podcast is all about big ideas. What sparked the big idea to bring this work that you’ve been doing on generative AI into the space of democracy and then to go out and find Robert and match up together?

DAEPP: Yeah, well, Ginny, it was you. [LAUGHS] It was actually your team.

BADANES: I didn’t plant that! [LAUGHS]

DAEPP: So, you know, I think last summer, I was working on all of these like pro-democracy applications, trying to build out, like, a social data collection tool with AI, all this kind of stuff. And I went to the elections workshop that the Democracy Forward team at Microsoft had put on, and Dave Leichtman, who, you know, was the MC of that work, was really talking about how big of a global elections year 2024 was going to be, that this—he was calling it “Votorama.” You know, that term didn’t take off. [LAUGHTER] The term that has taken off is biggest election year in history, right. Over 70 countries around the world. And, you know, we’re coming from Microsoft Research, where we were so excited about this technology. Like, when it started to pass theory of mind tests, right, which is like the ability to think about how other people are thinking, like, we were all like, oh, this is amazing; this opens up so many cool application spaces, right. When it was, like, passing benchmarks for multilingual communication, again, like, we were so excited about the prospect of building out multilingual systems. And then, all of a sudden, I was at the elections workshop, and I thought, oh no, [LAUGHS] this is not good timing.

BADANES: Yeah …

DAEPP: And because so much of my work focuses on, you know, building out computer science systems like, um, data science systems or AI systems but with communities in the loop, I really wanted to go to the folks most affected by this problem. And so I proposed a project to go to Taiwan and to study one of the … it was the second election of 2024. And Taiwan is known to be subject to more external disinformation than any other place in the world. So if you were going to see something anywhere, you would see it there. Also, it has amazing civil society response so really interesting people to talk to. But I do not speak Chinese, right. Like, I don’t have the context; I don’t speak the language. And so part of my process is to hire a half-local team. We had an amazing interpreter, Vickie Wang, and then a wonderful graduate student, Ti-Chung Cheng, who supported this work. But then also my team, Special Projects, happened to have this person who, like, not only is a leading AI researcher publishing in NeurIPS, like building out these systems, but who also spoke Chinese, had worked in technology security, and had a real understanding of international studies and economics as well as AI. And so for me, like, finding Robert as a collaborator was kind of a unicorn moment.

BADANES: So it sounds like it was a match made in heaven of skill sets and abilities. Before we get into what you all found there, which I do want to get into, I first think it’s helpful—I don’t know, when we’re dealing with these, like, complicated issues, particularly things that are moving and changing really quickly, sometimes I found it’s helpful to agree on definitions and sort of say, this is what we mean when we say this word. And that helps lead to understanding. So while I know that this research is about more than deepfakes—and we’ll talk about some of the things that are more than deepfakes—I am curious how you all define that term and how you think of it. Because this is something that I think is constantly moving and changing. So how have you all been thinking about the definition of that term?

NESS: So I’ve been thinking about it in terms of the intention behind it, right. We say deepfake, and I think colloquially that means kind of all of generative AI. That’s a bit unfortunate because there are things that are … you know, you can use generative AI to generate cartoons …

BADANES: Right.

NESS: … or illustrations for a children’s book. And so in thinking about what are we really talking about in the context of deepfakes in the political context, elections context, it’s deception, right. I’m trying to use this technology to, say, create some kind of false record of events, say, for example, something that a politician says, in order to convince people that something happened that actually did not happen.

BADANES: Right.

NESS: And so that goal of deceiving, of creating a false record, that’s kind of how I have been thinking about deepfakes in contrast to the broader category of generative AI and deepfakes in terms of being a malicious use case. There are other malicious use cases that don’t necessarily have to be deceptive, as well, as well as positive use cases.

BADANES: Well, that really, I mean, that resonates with me because what we found was when you use the term deception—or another term we hear a lot that I think works is fraud—that resonates with other people, too. Like, that helps them distinguish between neutral uses or even positive uses of AI in this space and the malicious use cases, though to your point, I suppose there’s probably even deeper definitions of what malicious use could look like. Are you finding that distinction showing up in your work between fraud and deception in these use cases? Is that something that has been coming through?

DAEPP: You know, we didn’t really think about the term fraud until we started prepping for this interview with you. As Robert said, so much of what we were thinking about in our definition was this representation of people or events, you know, done in order to deceive and with malicious intent. But in fact, in all of our conversations, no matter who we were talking to, no matter what political bent, no matter, you know, national security, fact-checking, et cetera, you know, they all agreed that using AI for the purposes of scamming somebody financially was not OK, right. That’s fraud. Using AI for the purposes of nudifying, like removing somebody’s clothes and then sextorting them, right, extorting them for money out of fear that this would be shared, like, that was not OK. And those are such clear lines. And it was clear that there’s a set of uses of generative AI also in the political space, you know, of saying this person said something that they didn’t, …

BADANES: Mm-hmm.

DAEPP: … of voter suppression, that in general, there’s a very clear line that when it gets into that fraudulent place, when it gets into that simultaneously deceptive and malicious space, that’s very clearly a no-go zone.

NESS: Oftentimes during this research, I found myself thinking about this dichotomy in cybersecurity of state actors, or broadly speaking, kind of, political actors, versus criminals.

BADANES: Right.

NESS: And it’s important to understand the distinction because criminals are typically trying to target targets of opportunity and make money, while state-sponsored agents are willing to spend a lot more money and have very specific targets and have a very specific definition of success. And so, like, this fraud versus deception kind of feels like that a little bit in the sense that fraud is typically associated with criminal behavior, while, say, I might put out deceptive political messaging, but it might fall within the bounds of free speech within my country.

BADANES: Right, yeah.

NESS: And so this is not to say I disagree with that, but it just, actually, that it could be a useful contrast in terms of thinking about the criminal versus the political uses, both legitimate and illegitimate.

BADANES: Well, I also think those of us who work in the AI space are dealing in very complicated issues that the majority of the world is still trying to understand. And so any time you can find a word that people understand immediately in order to do the, sort of, storytelling: the reason that we are worried about deepfakes in elections is because we do not want voters to be defrauded. And that, we find really breaks through because people understand that term already. That’s a thing that they already know that they don’t want to be; they do not want to be defrauded in their personal life or in how they vote. And so that really, I found, breaks through. But as much as I have talked about deepfakes, I know that you—and I know there’s a lot of interest in talking about deepfakes when we talk about this subject—but I know your research goes beyond that. So what other forms of generative AI did you include in your research or did you encounter in the effort that you were doing both in Taiwan and India?

DAEPP: Yeah. So let me tell you just, kind of, a big overview of, like, our taxonomy. Because as you said, like, so much of this is just about finding a word, right. Like, so much of it is about building a shared vocabulary so that we can start to have these conversations. And so when we looked at the political space, right, elections, so much of what it means to win an election is kind of two things. It’s building an image of a candidate, right, or changing the image of your opposition and telling a story, right.

BADANES: Mm-hmm.

DAEPP: And so if you think about image creation, of course, there are deepfakes. Like, of course, there are malicious representations of a person. But we also saw a lot of what we’re calling auth fakes, like authorized fakes, right. Candidates who would actually go to a consultancy and, like, get their bodies scanned so that videos could be made of them. They’d get their voices, a bunch of snippets of their voices, recorded so that then there could be personalized phone calls, right. So these are authorized uses of their image and likeness. Then we saw a term I’ve heard in, sort of, the ether is soft fakes. So again, likenesses of a candidate, this time not necessarily authorized but promotional. They weren’t … people on Twitter—I guess, X—on Instagram, they were sharing images of the candidate that they supported that were really flattering or silly or, you know, just really sort of in support of that person. So not with malicious intent, right, with promotional intent. And then the last one, and this, I think, was Robert’s term, but in this image creation category, you know, one thing we talked about was just the way that people were also making fun of candidates. And in this case, this is a bit malicious, right. Like, they’re making fun of people; they’re satirizing them. But it’s not deceptive because, …

BADANES: Right …

DAEPP: … you know, often it has that hyper-saturated meme aesthetic. It’s very clearly AI or just, you know, per like, sort of, US standards for satire, like, a reasonable person would know that it was silly. And so Robert said, you know, oh, these influencers, they’re not trying to deceive people; like, they’re not trying to lie about candidates. They’re trying to roast them. [LAUGHTER] And so we called it a deep roast. So that’s, kind of, the images of candidates. I will say we also looked at narrative building, and there, one really important set of things that we saw was what we call text to b-roll. So, you know, a lot of folks think that you can’t really make AI videos because, like, Sora isn’t out yet[1]. But in fact, what there is a lot of is tooling to, sort of, use AI to pull from stock imagery and b-roll footage and put together a 90-second video. You know, it doesn’t look like AI; it’s a real video. So text to b-roll. AI pasta? So if you know the threat intelligence space, there’s this thing called copy pasta, where people just …

BADANES: Sure.

DAEPP: … it’s just a fun word for copy-paste. People just copy-paste terms in order to get a hashtag trending. And we talked to an ex-influencer who said, you know, we’re using AI to do this. And I asked him why. And he said, well, you know, if you just do copy-paste, the fact-checkers catch it. But if you use AI, they don’t. And so AI pasta. And there’s also some research showing that this is potentially more persuasive than copy-paste …

BADANES: Interesting.

DAEPP: … because people think there’s a social consensus. And then the last one, this is my last of the big taxonomy, and, Robert, of course, jump in on anything you want to go deeper on, but Fake News 2.0. You know, I’m sure you’ve seen this, as well. Just this, like, creation of news websites, like entire new newspapers that nobody’s ever heard of. AI avatars that are newscasters. And this is something that was happening before. Like, there’s a long tradition of pretending to be a real news pamphlet or pretending to be a real outlet. But there’s some interesting work out of … Patrick Warren at Clemson has looked at some of these and shown the quality and quantity of articles on these things has gotten a lot better and, you know, improves as a step function of, sort of, when new models come out.

NESS: And then on the flip side, you have people using the same technologies but stated clearly that it’s AI generated, right. So we mentioned the AI avatars. In India, there’s this … there’s Bhoomi, which is an AI news anchor for agricultural news, and it states there in clear terms that she’s not real. But of course, somebody who wanted to be deceptive could use the same technology to portray something that looks like a real news broadcast that isn’t. You know, and, kind of, going back, Madeleine mentioned deep roasts, right, so, kind of, using this technology to create satirical depictions of, say, a political opponent. Somebody, a colleague, sent something across my desk. It was a Douyin account—so Douyin is the version of TikTok that’s used inside China; …

BADANES: OK.

NESS: … same company, but it’s the internal version of TikTok—that was posting AI-generated videos of politicians in Taiwan. And these were excellent, real good-quality AI-generated deepfakes of these politicians. But some of them were, first off, on the bottom of all of them, it said, this is AI-generated content.

BADANES: Oh.

NESS: And some of them were, kind of, obviously meant to be funny and were clearly fake, like still images that were animated to make somebody singing a funny song, for example. A very serious politician singing a very silly song. And it’s a still image. It’s not even, it’s not even …

BADANES: a video.

NESS: … like video.

BADANES: Right, right.

NESS: And so I messaged Puma Shen, who is one of the legislators in Taiwan who was targeted by these attacks, and I said, what do you think about this? And, you know, he said, yeah, they got me. [LAUGHTER] And I said, you know, do you think people believe this? I mean, there are people who are trying to debunk it. And he said, no, our supporters don’t believe it, but, you know, people who support the other side or people who are apolitical, they might believe it, or even if it says it’s fake—they know it’s fake—but they might still say that, yeah, but this is something they would do, right. This is …

BADANES: Yeah, it fits the narrative. Yeah.

NESS: … it fits the narrative, right. And that, kind of, that really, you know, I had thought of this myself, but just hearing somebody, you know, who’s, you know, a politician who’s targeted by these attacks just saying that it’s, like, even if they believe it’s … even if they know it’s fake, they still believe it because it’s something that they would do.

BADANES: Sure.

NESS: That’s, you know, as a form of propaganda, even relative to the canonical idea of deepfake that we have, this could be more effective, right. Like, just say it’s AI and then use it to, kind of, paint the picture of the opponent in any way you like.

BADANES: Sure, and this gets into that, sort of, challenging space I think we find ourselves in right now, which is people don’t know necessarily how to tell what’s real or not. And the case you’re describing, it has labeling, so that should tell you. But a lot of the content we come across online does not have labeling. And you cannot tell just based on your eyes whether images were generated by AI or whether they’re real. One of the things that I get asked a lot is, why can’t we just build good AI to detect bad AI, right? Why don’t we have a solution where I just take a picture and I throw it into a machine and it tells me thumbs-up or thumbs-down if this is AI generated or not? And the question around detection is a really tricky one. I’m curious what you all think about, sort of, the question of, can detection solve this problem or not?

NESS: So I’ll mention one thing. So Madeleine mentioned an application of this technology called text to b-roll. And so what this is, technically speaking, what this is doing is you’re taking real footage, you stick it in a database, it’s quote, unquote “vectorized” into these representations that the AI can understand, and then you say, hey, generate a video that illustrates this narrative for me. And you provide it the text narrative, and then it goes and pulls out a whole bunch of real video from a database and curates them into a short video that you could put on TikTok, for example. So this was a fully AI-generated product, but none of the actual content is synthetic.

BADANES: Ah, right.

NESS: So in that case, your quote, unquote “AI detection tool” is not going to work.

DAEPP: Yeah, I mean, something that I find really fascinating any time that you’re dealing with a sociotechnical system, right—a technical system embedded in social context—is folks, you know, think that things are easy that are hard and things are hard that are easy, right. And so with a lot of the detections work, right, like if you put a deepfake detector out, you make that available to anyone, then what they can do is they can run a bunch of stuff by it, …

BADANES: Yeah.

DAEPP: … add a little bit of random noise, and then the deepfake detector doesn’t work anymore. And so that detection, actually, technically becomes an arms race, you know. And we’re seeing now some detectors that, like, you know, work when you’re not looking at a specific image or a specific piece of text but you’re looking at a lot all at once. That seems more promising. But, just, this is a very, very technically difficult problem, and that puts us as researchers in a really tricky place because, you know, you’re talking to folks who say, why can’t you just solve this? If you put this out, then you have to put the detector out. And we’re like, that’s actually not, that’s not a technically feasible long-term solution in this space. And the solutions are going to be social and regulatory and, you know, changes in norms as well as technical solutions that maybe are about everything outside of AI, right.

BADANES: Yeah.

DAEPP: Not about fixing the AI system but fixing the context within which it’s used.

BADANES: It’s not just a technological solution. There’s more to it. Robert?

NESS: So if somebody were to push back there, they could say, well, great; in the long term, maybe it’s an arms race, but in the short term, right, we can have solutions out there that, you know, at least in the next election cycle, we could maybe prevent some of these things from happening. And, again, kind of harkening back to cybersecurity, maybe if you make it hard enough, only the really dedicated, really high-funded people are going to be doing it rather than, you know, everybody who wants to throw a bunch of deepfakes on the internet. But the problem still there is that it focuses really on video and images, right.

BADANES: Yeah. What about audio?

NESS: What about audio? And what about text? So …

BADANES: Yeah. Those are hard. I feel like we’ve talked a lot about definitions and theoretical, but I want to make sure we talk more about what you guys saw and researched and understood on the ground, in particular, your trips to India and Taiwan and even if you want to reflect on how those compare to the US environment. What did you actually uncover? What surprised you? What was different between those countries?

DAEPP: Yeah, I mean, right, so Taiwan … both of these places are young democracies. And that’s really interesting, right. So like in Taiwan, for example, when people vote, they vote on paper. And anybody can go watch. That’s part of their, like, security strategies. Like, anyone around the world can just come and watch. People come from far. They fly in from Canada and Japan and elsewhere just to watch Taiwanese people vote. And then similarly in India, there’s this rule where you have to be walking distance from your polling place, and so the election takes two months. And, like, your polling places move from place to place, and sometimes, it arrives on an elephant. And so these were really interesting places to, like, I as an American, just, like, found it very, very fascinating to and important to be outside of the American context. You know, we just take for granted that how we do democracy is how other people do it. But Taiwan was very much a joint, like, civil society–government everyday response to this challenge of having a lot of efforts to manipulate public opinion happening with, you know, real-world speeches, with AI, with anything that you can imagine. You know, and I think the Microsoft Threat Analysis Center released a report documenting some of the, sort of, video stuff[2]. There’s a use of AI to create videos the night before the election, things like this. But then India is really thinking of … so India, right, it’s the world’s biggest democracy, right. Like, nearly a billion people were eligible to vote.

BADANES: Yeah.

NESS: And arguably the most diverse, right?

DAEPP: Yeah, arguably the most diverse in terms of languages, contexts. And it’s also positioning itself as the AI laboratory for the Global South. And so folks, including folks at the MSR (Microsoft Research) Bangalore lab, are leaders in thinking about representing low-resource languages, right, thinking about cultural representation in AI models. And so there you have all of these technologists who are really trying to innovate and really trying to think about what’s the next clever application, what’s the next clever use. And so that, sort of, that taxonomy that we talked about, like, I think just every week, every interview, we, sort of, had new things to add because folks there were just constantly trying all different kinds of ways of engaging with the public.

NESS: Yeah, I think for me, in India in particular, you know, India is an engineering culture, right. In terms of, like, the professional culture there, they’re very, kind of, engineering skewed. And so I think one of the bigger surprises for me was seeing people who were very experienced and effective campaign operatives, right, people who would go and, you know, hit the pavement; do door knocking; kind of, segment neighborhoods by demographics and voter bloc, these people were also, you know, graduated in engineering from an IIT (Indian Institute of Technology), …

BADANES: Sure.

NESS: … right, and so … [LAUGHS] so they were happy to pick up these tools and leverage them to support their expertise in this work, and so some of the, you know, I think a lot of the narrative that we tell ourselves in AI is how it’s going to be, kind of, replacing people in doing their work. But what I saw in India was that people who were very effective had a lot of domain expertise that you couldn’t really automate away and they were the ones who are the early adopters of these tools and were applying it in ways that I think we’re behind on in terms of, you know, ideas in the US.

BADANES: Yeah, I mean, there’s, sort of, this sentiment that AI only augments existing problems and can enhance existing solutions, right. So we’re not great at translation tools, but AI will make us much better at that. But that also can then be weaponized and used as a tool to deceive people, which propaganda is not new, right? We’re only scaling or making existing problems harder, or adversaries are trying to weaponize AI to build on things they’ve already been doing, whether that’s cyberattacks or influence operations. And while the three of us are in different roles, we do work for the same company. And it’s a large technology company that is helping bring AI to the world. At the same time, I think there are some responsibilities when we look at, you know, bad actors who are looking to manipulate our products to create and spread this kind of deceptive media, whether it’s in elections or in other cases like financial fraud or other ways that we see this being leveraged. I’m curious what you all heard from others when you’ve been doing your research and also what you think our responsibilities are as a big tech company when it comes to keeping actors from using our products in those ways.

DAEPP: You know, when I started using GPT-4, one of the things I did was I called my parents, and I said, if you hear me on a phone call, …

BADANES: Yeah.

DAEPP: … like, please double check. Ask me things that only I would know. And when I walk around Building 99, which is, kind of, a storied building in which a lot of Microsoft researchers work, everybody did that call. We all called our parents.

BADANES: Interesting.

DAEPP: Or, you know, we all checked in. So just as, like, we have a responsibility to the folks that we care about, I think as a company, that same, sort of, like, raising literacy around the types of fraud to expect and how to protect yourself from them—I think that gets back to that fraud space that we talked about—and, you know, supporting law enforcement, sharing what needs to be shared, I think that without question is a space that we need to work in. I will say a lot of the folks we talked with, they were using Llama on a local GPU, right.

BADANES: OK.

DAEPP: They were using open-source models. They were sometimes … they were testing out Phi. They would use Phi, Grok, Llama, like anything like that. And so that raises an interesting question about our guardrails and our safety practices. And I think there, we have an, like, our obligation and our opportunity actually is to set the standard, right. To say, OK, like, you know, if you use local Llama and it spouts a bunch of stuff about voter suppression, like, you can get in trouble for that. And so what does it mean to have a safe AI that wins in the marketplace, right? That’s an AI that people can feel confident and comfortable about using and one that’s societally safe but also personally safe. And I think that’s both a challenge and a real opportunity for us.

BADANES: Yeah … oh, go ahead, Robert, yeah …

NESS: Going back to the point about fraud. It was this year, in January, when that British engineering firm Arup, when somebody used a deepfake to defraud that company of about $25 million, …

BADANES: Yeah.

NESS: … their Hong Kong office. And after that happened, some business managers in Microsoft reached out to me regarding a major client who wanted to start red teaming. And by red teaming, I mean intentionally targeting your executives and employees with these types of attacks in order to figure out where your vulnerabilities as an organization are. And I think, yeah, it got me thinking like, wow, I would, you know, can we do this for my dad? [LAUGHS] Because I think that was actually a theme that came out from a lot of this work, which was, like, how can we empower the people who are really on the frontlines of defending democracy in some of these places in terms of the tooling there? So we talked about, say, AI detection tools, but the people who are actually doing fact-checking, they’re looking more than at just the video or the images; they’re actually looking at a, kind of, holistic … taking a holistic view of the news story and doing some proper investigative journalism to see if something is fake or not.

BADANES: Yeah.

NESS: And so I think as a company who creates products, can we take a more of a product mindset to building tools that support that entire workflow in terms of fact-checking or investigative journalism in the context of democratic outcomes …

BADANES: Yeah.

NESS: … where maybe looking at individual deepfake content is just a piece of that.

BADANES: Yeah, you know, I think there’s a lot of parallels here to cybersecurity. That’s also what we’ve found, is this idea that, first of all, the “no silver bullet,” as we were talking about earlier with the detection piece. Like, you can’t expect your system to be secure just because you have a firewall, right. You have to have this, like, defense-in-depth approach where you have lots of different layers. And one of those layers has been on the literacy side, right. Training and teaching people not to click on a phishing link, understanding that they should scroll over the URL. Like, these are efforts that have been taken up, sort of, in a broad societal sense. Employers do it. Big tech companies do it. Governments do it through PSAs and other things. So there’s been a concerted effort to get a population who might not have been aware of the fact that they were about to be scammed to now know not to click on that link. I think, you know, you raised the point about literacy. And I think there’s something to be said about media literacy in this space. It’s both AI literacy—understanding what it is—but also understanding that people may try to defraud you. And whether that is in the political sense or in the financial sense, once you have that, sort of, skill set in place, you’re going to be protected. One thing that I’ve heard, though, as I have conversations about this challenge … I’ve heard a couple things back from people specifically in civil society. One is not to put the impetus too much on the end consumer, which I think I’m hearing that we also recognize there’s things that we as technology companies should be focusing on. But the other thing is the concern that in, sort of, the long run, we’re going to all lose trust in everything we see anyway. And I’ve heard some people refer to that as the trust deficit.

Have you all seen anything promising in the space to give you a sense around, can we ever trust what we’re looking at again, or are we actually just training everyone to not believe anything they see? Which I hope is not the case. I am an optimist. But I’d love to hear what you all came across. Are there signs of hope here where we might actually have a place where we can trust what we see again?

DAEPP: Yeah. So two things. There is this phenomenon called the liar’s dividend, right, …

BADANES: Sure, yeah.

DAEPP: … which is where that if you educate folks about how AI can be used to create fake clips, fake audio clips, fake videos, then if somebody has a real audio clip, a real video, they can claim that it’s AI. And I think we talk, you know, again, this is, like, in a US-centric space, we talk about this with politicians, but the space in which this is really concerning, I think, is war crimes, right …

BADANES: Oh, yeah.

DAEPP: … I think are these real human rights infractions where you can prevent evidence from getting out or being taken seriously. And we do see that right after invasions, for example, these days. But this is actually a space … like, I just told you, like, oh, like, detection is so hard and not technically, like, that’ll be an arms race! But actually, there is this wonderful project, Project Providence, that is a Microsoft collaboration with a company called Truepic that … it’s, like, an app, right. And what happens is when you take a photo using this app, it encrypts the, you know, hashes the GPS coordinates where the photo was taken, the time, the day, and uploads that with the pixels, with the image, to Azure. And then later, when a journalist goes to use that image, they can see that the pixels are exactly the same, and then they can check the location and they can confirm the GPS. And this actually meets evidentiary standards for the UN human rights tribunal, right.

BADANES: Right.

DAEPP: So this is being used in Ukraine to document war crimes. And so, you know, what if everybody had that app on their phone? That means you don’t … you know, most photos you take, you can use an AI tool and immediately play with. But in that particular situation where you need to confirm provenance and you need to confirm that this was a real event that happened, that is a technology that exists, and I think folks like the C2PA coalition (Coalition for Content Provenance and Authenticity) can make that happen across hardware providers.
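
[EDITOR'S ILLUSTRATION: The capture-and-verify flow Daepp describes (hash the location and time at capture, store that with the pixels, then later check that nothing changed) can be sketched in a few lines. This is a minimal sketch under stated assumptions, not how Project Providence, Truepic, or C2PA is actually implemented. The function names `make_record` and `verify`, the record layout, and the choice of SHA-256 are all hypothetical, and a real provenance system would also cryptographically sign the record so it cannot simply be regenerated by someone who alters the image.]

```python
import hashlib
import json


def make_record(pixels: bytes, lat: float, lon: float, captured_at: str) -> dict:
    """Build a hypothetical provenance record at capture time."""
    metadata = {"lat": lat, "lon": lon, "captured_at": captured_at}
    # Serialize with sorted keys so the same metadata always hashes the same way.
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    return {
        "metadata": metadata,
        "pixel_hash": hashlib.sha256(pixels).hexdigest(),
        "metadata_hash": hashlib.sha256(payload).hexdigest(),
    }


def verify(pixels: bytes, record: dict) -> bool:
    """Check that the pixels and metadata still match the stored hashes."""
    payload = json.dumps(record["metadata"], sort_keys=True).encode("utf-8")
    pixels_ok = hashlib.sha256(pixels).hexdigest() == record["pixel_hash"]
    metadata_ok = hashlib.sha256(payload).hexdigest() == record["metadata_hash"]
    return pixels_ok and metadata_ok


# Illustrative values only: placeholder bytes stand in for real image data.
photo = b"raw image bytes"
record = make_record(photo, 50.45, 30.52, "2024-01-01T00:00:00Z")
unchanged = verify(photo, record)
edited = verify(photo + b"!", record)
```

[In this sketch, `unchanged` is true because the bytes match what was recorded, while `edited` is false because even a one-byte change to the image produces a different hash.]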

NESS: And I think the challenge for me is, we can’t separate this problem from some of the other, kind of, fundamental problems that we have in our media environment now, right. So, for example, if I go on to my favorite social media app and I see videos from some conflicts around the world, and these videos could be not AI generated and I still could be, you know, the target of some PR campaign to promote certain content and suppress other ones. The videos could be authentic videos, but not actually be accurate depictions of what they claim to be. And so I think that this is a … the AI presents a complicating factor in an already difficult problem space. And I think, you know, trying to isolate these different variables and targeting them individually is pretty tricky. I do think that despite the liar’s dividend that media literacy is a very positive area to, kind of, focus energy …

BADANES: Yeah.

NESS: … in the sense that, you know, you mentioned earlier, like, using this term fraud, again, going back to this analogy with cybersecurity and cybercrime, that it tends to resonate with people. We saw that, as well, especially in Taiwan, didn’t we, Madeleine? Well, in India, too, with the sextortion fears. But in Taiwan, a lot of just cybercrime in terms of defrauding people of money. And one of the things that we had observed there was that talking about generative AI in the context of elections was difficult to talk to people about it because people, kind of, immediately went into their political camps, right.

BADANES: Yeah.

NESS: And so you had to, kind of, penetrate … you know, people were trying to, kind of, suss out which side you were on when you’re trying to educate them about this topic.

BADANES: Sure.

NESS: But if you talk to—but everybody’s, like, fraud itself is a lot less partisan.

BADANES: Yeah, it’s a neutral term.

NESS: Exactly. And so it becomes a very useful way to, kind of, get these ideas out there.

BADANES: That’s really interesting. And I love the provenance example because it really gets to the question about authenticity. Like, where did something come from? What is the origin of that media? Where has it traveled over time? And if AI is a component of it, then that’s a noted fact. But it doesn’t put us into the space of AI or not AI, which I think is where a lot of the, sort of, labeling has gone so far. And I understand the instinct to do that. But I like the idea of moving more towards how do you know more about an image of which whether there was AI involved or not is a component but does not have judgment. That does not make the picture good or bad. It doesn’t make it true or false. It’s just more information for you to consume. And then, of course, the media literacy piece, people need to know to look for those indicators and want them and ask for them from the technology company. So I think that’s a good, that’s a good silver lining. You gave me the light at the end of the tunnel I think I was looking for on the post-truth world. So, look, here’s the big question. You guys have been spending this time focusing on AI and democracy in this big, massive global election year. There was a lot of hype. [LAUGHS] There was a lot of hype. Lots of articles written about how this was going to be the AI election apocalypse. What say you? Was it? Was it not?

NESS: I think it was, well, we definitely have documented cases where this happened. And I’m wary of this question, particularly again from the cybersecurity standpoint, which is if you were not the victim of a terrible hack that brought down your entire company, would you say, like, well, it didn’t happen, so it’s not going to happen, right. You would never …

BADANES: Yeah.

NESS: That would be a silly attitude to have, right. And also, you don’t know what you don’t know, right. So, like, a lot of the, you know, we mentioned sextortion; we mentioned these cybercrimes. A lot of these are small-dollar crimes, which means they don’t get reported or they don’t get reported for reasons of shame. And so we don’t even have numbers on a lot of that. And we know that the political techniques are going to mirror the criminal techniques.

BADANES: Yeah.

NESS: And also, I worry about, say, down-ballot elections. Like, so much of, kind of, our election this year, a lot of the focus was on the national candidates, but, you know, if local poll workers are being targeted, if disinformation campaigns are being put out about local candidates, it’s not going to get the kind of play in the national media such that you and I might hear about it. And so I’m, you know, so I’ll hand it off to Madeleine, but yeah.

DAEPP: So absolutely agree with Robert’s point, right. If your child was affected by sextortion, if you are a country that had an audio clip go viral, this was the deepfake deluge for you, right. That said, something that happened, you know, in India as in the United States, there were major prosecutions very early on, right.

BADANES: Yeah.

DAEPP: So in India, there was a video. It turned out not to be a deepfake. It turned out to be a “cheap fake,” to your point about, you know, the question isn’t whether there’s AI involved; the question is whether this is an attempt to defraud. And five people were charged for this video.

BADANES: Yeah.

DAEPP: And in the United States, right, those Biden robocalls using Biden’s voice to tell folks not to vote, like, that led to a million-dollar fine, I think, for the telecoms and $6 million for the consultant who created that. And when we talk to people in India, you know, people who work in this space, they said, well, I’m not going to do that; like, I’m going to focus on other things. So internal actors pay attention to these things. That really changes what people do and how they do it. And so that, I do think the work that your team did, right, to educate candidates about looking out for the stuff, the work that the MTAC (Microsoft Threat Analysis Center) did to track usage and report it, all of that, I think, was, actually, those interventions, I think, worked. I think they were really important, and I do think that what we are … this absence of a deluge is actually a huge number of people making a very concerted effort to prevent it from happening.

BADANES: That’s encouraging.

NESS: Madeleine, you made a really important point that this deterrence from prosecution, it’s effective for internal actors, …

BADANES: Yeah.

DAEPP: Yeah, that’s right.

NESS: … right. So for foreign states who are trying to interfere with other people’s elections, the fear of prosecution is not going to be as much of a deterrent.

BADANES: That is true. I will say what we saw in this election cycle, in particular in the US, was a concerted effort by the intelligence community to call out and name nation-state actors who were either doing cyberattacks or influence operations, specific videos that they identified, whether there was AI involved or not. I think that level of communication with the public while maybe doesn’t lead to those actors going to jail—maybe someday—but does in fact lead to a more aware public and therefore hopefully a less effective campaign. If people on the other end … and it’s a little bit into the literacy space, and it’s something that we’ve seen government again in this last cycle do very effectively, to name and shame essentially when they see these things in part, though, to make sure voters are aware of what’s happening. We’re not quite through this big global election year; we have a couple more elections before we really hit the end of the year, but it’s winding down. What is next for you all? Are you all going to continue this work? Are you going to build on it? What comes next?

DAEPP: So our research in India actually wasn’t focused specifically on elections. It was about AI and digital communications.

BADANES: Ahh.

DAEPP: Because, you know, again, like India is this laboratory.

BADANES: Sure.

DAEPP: And I think what we learned from that work is that, you know, this is going to be a part of our digital communications and our information system going forward without question. And the question is just, like, what are the viable business models, right? What are the applications that work? And again, that comes back to making sure that whatever AI … you know, people when they build AI into their entire, you know, newsletter-writing system, when they build it into their content production, that they can feel confident that it’s safe and that it meets their needs and that they’re protected when they use it. And similarly, like, what are those applications that really work, and how do you empower those lead users while mitigating those harms and supporting civil society and mitigating those harms? I think that’s an incredible, like, that’s—as a researcher—that’s, you know, that’s a career, right.

BADANES: Yeah.

DAEPP: That’s a wonderful research space. And so I think understanding how to support AI that is safe, that enables people globally to have self-determination in how models represent them, and that is usable and powerful, I think that’s broadly …

BADANES: Where this goes.

DAEPP: … what I want to drive.

BADANES: Robert, how about you?

NESS: You know, so I mentioned earlier on these AI alignment issues.

BADANES: Yeah.

NESS: And I was really fascinated by how local and contextual those issues really are. So to give an example from Taiwan, we train these models on training data that we find from the internet. Well, when it comes to, say, Mandarin Chinese, you can imagine the proportion of content, of just the quantity of content, on the internet that comes from China is a lot more than the quantity that comes from Taiwan. And of course, what’s politically correct in China is different from what’s politically correct in Taiwan. And so when we were talking to Taiwanese, a lot of people had these concerns about, you know, having these large language models that reflected Taiwanese values. We heard the same thing in India about just people on different sides of the political spectrum and, kind of, looking at … a YouTuber in India had walked us through this … how, for example, a founding father of India, there was a disparate literature in favor of this person and some more critical of this person, and he had spent time trying to suss out whether GPT-4 was on one side or the other.

BADANES: Oh. Whose side are you on? [LAUGHS]

NESS: Right, and so I think for our alignment research at Microsoft Research, this becomes the beginning of, kind of, a very fruitful way of engaging with local stakeholders and making sure that we can reflect these concerns in the models that we develop and deploy.

BADANES: Yeah. Well, first, I just want to thank you guys for all the work you’ve done. This is amazing. We’ve really enjoyed partnering with you. I’ve loved learning about the research and the efforts, and I’m excited to see what you do next. I always want to end these kinds of conversations on a more positive note, because we’ve talked a lot about the weaponization of AI and, you know, how … ethical areas that are confusing and … but I am sure at some point in your work, you came across really positive use cases of AI when it comes to democracy, or at least I hope you have. [LAUGHS] Do you have any examples or can you leave us with something about where you see either it going or actively being used in a way to really strengthen democratic processes or systems?

DAEPP: Yeah, I mean, there is just a big paper in Science, right, which, as researchers, when something comes out in Science, you know your field is about to change, right, …

BADANES: Yeah.

DAEPP: … showing that an AI model in, like, political deliberations, small groups of UK residents talking about difficult topics like Brexit, you know, climate crisis, difficult topics, that in these conversations, an AI moderator created, like, consensus statements that represented the majority opinion, still showed the minority opinion, but that participants preferred to a human-written statement and in fact preferred to their original opinion.

BADANES: Wow.

DAEPP: And that this, you know, not only works in these randomized controlled trials but actually works in a real citizens deliberation. And so that potential of, like, carefully fine-tuned, like, carefully aligned AI to actually help people find points of agreement, that’s a really exciting space.

BADANES: So next time my kids are in a fight, I’m going to point them to Copilot and say, work with Copilot to mediate. [LAUGHS] No, that’s really, really interesting. Robert, how about you?

NESS: She, kind of, stole my example. [LAUGHTER] But I’ll take it from a different perspective. So, yes, like how these technologies can enable people to collaborate and ideally, I think, from a democratic standpoint, at a local level, right. So, I mean, I think so much of our politics were, kind of, focused at the national-level campaign, but our opportunity to collaborate is much more … we’re much more easily … we can collaborate much more easily with people who are in our local constituencies. And I think to myself about, kind of, like, the decline particularly of local newspapers, local media.

BADANES: Right.

NESS: And so I wonder, you know, can these technologies help address that problem in terms of just, kind of, information about, say, your local community, as well as local politicians. And, yeah, and to Madeleine’s point, so Madeleine started the conversation talking about her background in urban planning and some of the work she did, you know, working on a local level with local officials to bring technology to the level of cities. And I think, like, well, you know, politics are local, right. So, you know, I think that that’s where there’s a lot of opportunity for improvement.

BADANES: Well, Robert, you just cued up a topic for a whole other podcast because our team also does a lot of work around journalism, and I will say we have seen that AI at the local level with local news is really a powerful tool that we’re starting to see a lot of appetite and interest for in order to overcome some of the hurdles they face right now in that industry when it comes to capacity, financing, you know, not able to be in all of the places they want to be at once to make sure that they’re reporting equally across the community. This is, like, a perfect use case for AI, and we’re starting to see folks who are really using it. So maybe we’ll come back and do this again another time on that topic. But I just want to thank you both, Madeleine and Robert, for joining us today and sharing your insights. This was really a fascinating conversation. I know I learned a lot. I hope that our listeners learned a lot, as well.

[MUSIC]

And, listeners, I hope that you tune in for more episodes of Ideas, where we continue to explore the technologies shaping our future and the big ideas behind them. Thank you, guys, so much.

DAEPP: Thank you.

NESS: Thank you.

[MUSIC FADES]

[1] The video generation model Sora was released publicly earlier this month.

[2] For a summary of and link to the report, see the Microsoft On the Issues blog post China tests US voter fault lines and ramps AI content to boost its geopolitical interests.