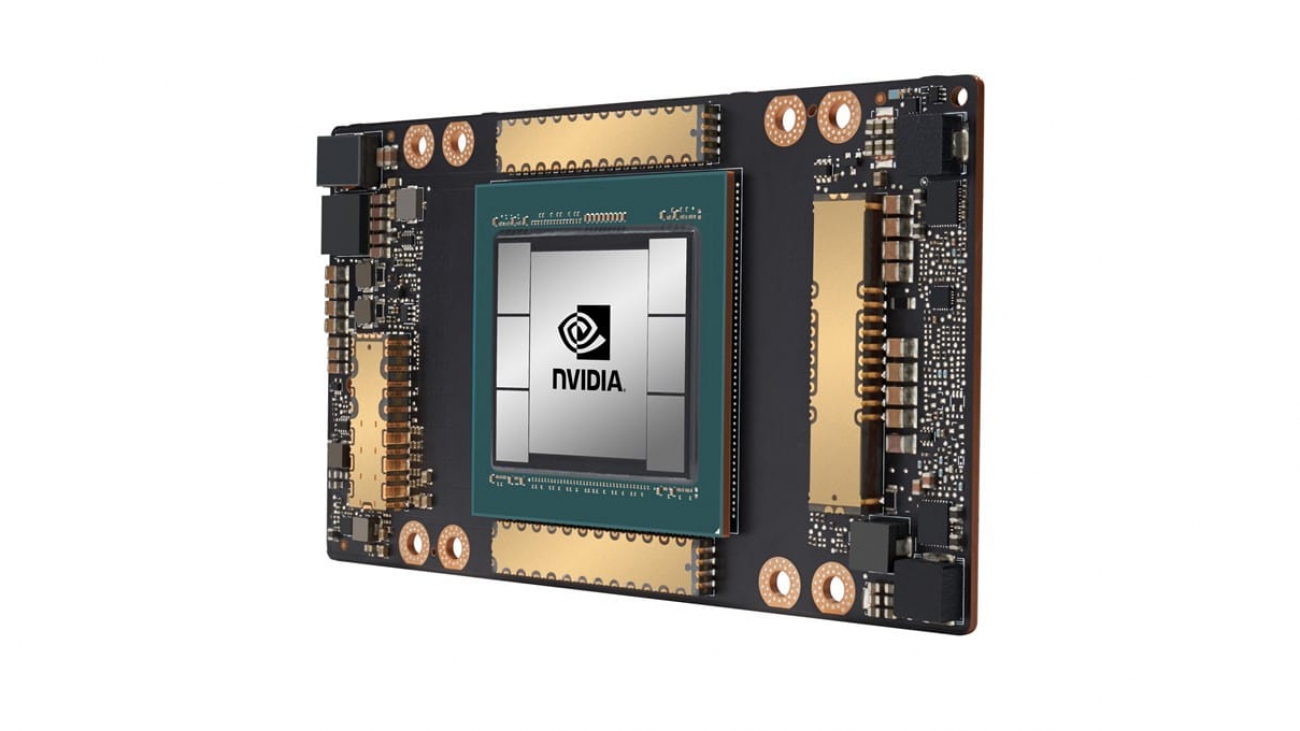

The NVIDIA A100 Tensor Core GPU has landed on Google Cloud.

Available in alpha on Google Compute Engine just over a month after its introduction, A100 has come to the cloud faster than any NVIDIA GPU in history.

Today’s introduction of the Accelerator-Optimized VM (A2) instance family featuring A100 makes Google the first cloud service provider to offer the new NVIDIA GPU.

A100, which is built on the newly introduced NVIDIA Ampere architecture, delivers NVIDIA’s greatest generational leap ever. It boosts training and inference performance by up to 20x over its predecessors, providing tremendous speedups for the workloads powering the AI revolution.

“Google Cloud customers often look to us to provide the latest hardware and software services to help them drive innovation on AI and scientific computing workloads,” said Manish Sainani, director of Product Management at Google Cloud. “With our new A2 VM family, we are proud to be the first major cloud provider to market NVIDIA A100 GPUs, just as we were with NVIDIA T4 GPUs. We are excited to see what our customers will do with these new capabilities.”

In cloud data centers, A100 can power a broad range of compute-intensive applications, including AI training and inference, data analytics, scientific computing, genomics, edge video analytics, 5G services, and more.

Fast-growing, critical industries will be able to accelerate their discoveries with the breakthrough performance of A100 on Google Compute Engine. From scaling up AI training and scientific computing, to scaling out inference applications, to enabling real-time conversational AI, A100 accelerates complex and unpredictable workloads of all sizes running in the cloud.

NVIDIA CUDA 11, coming to general availability soon, gives developers access to the new capabilities of NVIDIA A100 GPUs, including Tensor Cores, mixed-precision modes, Multi-Instance GPU, advanced memory management, and standard C++/Fortran parallel language constructs.

Breakthrough A100 Performance in the Cloud for Every Size Workload

The new A2 VM instances deliver different levels of performance to efficiently accelerate workloads spanning CUDA-enabled machine learning training and inference, data analytics, and high-performance computing.

For large, demanding workloads, Google Compute Engine offers customers the a2-megagpu-16g instance, which comes with 16 A100 GPUs providing a total of 640GB of GPU memory, along with 1.3TB of system memory. All 16 GPUs are connected through NVSwitch with up to 9.6TB/s of aggregate bandwidth.
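The instance-wide totals above can be broken down per GPU with simple division. The short sketch below does that arithmetic, assuming the memory and NVSwitch bandwidth are shared evenly across all 16 identical GPUs and using decimal units (1TB/s = 1,000GB/s). The variable and function names are illustrative only. Under those assumptions each A100 has 40GB of memory and a 600GB/s share of the fabric, which lines up with the 600GB/s of NVLink bandwidth NVIDIA quotes per A100.

```python
# Instance-wide totals quoted for the a2-megagpu-16g instance.
NUM_GPUS = 16
TOTAL_GPU_MEMORY_GB = 640
# 9.6 TB/s expressed in GB/s (decimal units assumed) to keep the math exact.
AGGREGATE_NVSWITCH_BANDWIDTH_GBPS = 9_600


def per_gpu_share(total: float, num_gpus: int) -> float:
    """Evenly divide an instance-wide total across its GPUs (assumes identical GPUs)."""
    return total / num_gpus


memory_per_gpu_gb = per_gpu_share(TOTAL_GPU_MEMORY_GB, NUM_GPUS)
bandwidth_per_gpu_gbps = per_gpu_share(AGGREGATE_NVSWITCH_BANDWIDTH_GBPS, NUM_GPUS)

print(f"GPU memory per A100: {memory_per_gpu_gb:.0f} GB")
print(f"NVSwitch bandwidth per A100: {bandwidth_per_gpu_gbps:.0f} GB/s")
```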

For those with smaller workloads, Google Compute Engine is also offering A2 VMs in smaller configurations to match specific applications’ needs.

Google Cloud announced that additional NVIDIA A100 support is coming soon to Google Kubernetes Engine, Cloud AI Platform and other Google Cloud services. For more information, including technical details on the new A2 VM family and how to sign up for access, visit the Google Cloud blog.

The post NVIDIA Ampere GPUs Come to Google Cloud at Speed of Light appeared first on The Official NVIDIA Blog.