In under a month amid the global pandemic, a small team assembled the world’s seventh-fastest computer.

Today that mega-system, called Selene, communicates with its operators on Slack, has its own robot attendant and is driving AI forward in automotive, healthcare and natural-language processing.

While many supercomputers tap exotic, proprietary designs that take months to commission, Selene is based on an open architecture NVIDIA shares with its customers.

The Argonne National Laboratory, outside Chicago, is using a system based on Selene’s DGX SuperPOD design to research ways to stop the coronavirus. The University of Florida will use the design to build the fastest AI computer in academia.

DGX SuperPODs are driving business results for companies like Continental in automotive, Lockheed Martin in aerospace and Microsoft in cloud-computing services.

Birth of an AI System

The story of how and why NVIDIA built Selene starts in 2015.

NVIDIA engineers started their first system-level design with two motivations. They wanted to build something both powerful enough to train the AI models their colleagues were building for autonomous vehicles and general-purpose enough to serve the needs of any deep-learning researcher.

The result was the SATURNV cluster, born in 2016 and based on the NVIDIA Pascal GPU. When the more powerful NVIDIA Volta GPU debuted a year later, the budding systems group’s motivation and its designs expanded rapidly.

AI Jobs Grow Beyond the Accelerator

“We’re trying to anticipate what’s coming based on what we hear from researchers, building machines that serve multiple uses and have long lifetimes, packing as much processing, memory and storage as possible,” said Michael Houston, a chief architect who leads the systems team.

As early as 2017, “we were starting to see new apps drive the need for multi-node training, demanding very high-speed communications between systems and access to high-speed storage,” he said.

AI models were growing rapidly, requiring multiple GPUs to handle them. Workloads were demanding new computing styles, like model parallelism, to keep pace.

So, in fast succession, the team crafted ever larger clusters of V100-based NVIDIA DGX-2 systems, called DGX PODs. They used 32, then 64 DGX-2 nodes, culminating in a 96-node architecture dubbed the DGX SuperPOD.

They christened it Circe for the irresistible Greek goddess. It debuted in June 2019 at No. 22 on the TOP500 list of the world’s fastest supercomputers and currently holds No. 23.

Cutting Cables in a Computing Jungle

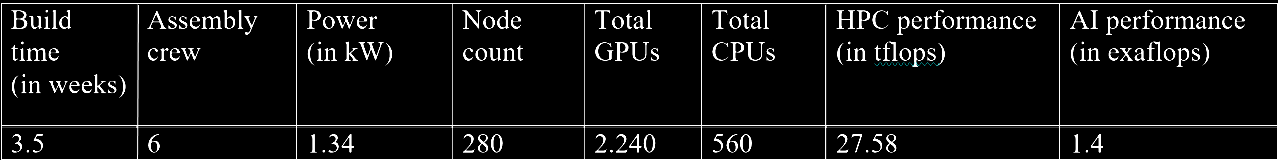

Along the way, the team learned lessons about networking, storage, power and thermals. Those lessons were baked into the latest NVIDIA DGX systems, reference architectures and today’s 280-node Selene.

In the race through ever larger clusters to get to Circe, some lessons were hard won.

“We tore everything out twice, we literally cut the cables out. It was the fastest way forward, but it still had a lot of downtime and cost. So we vowed to never do that again and set ease of expansion and incremental deployment as a fundamental design principle,” said Houston.

The team redesigned the overall network to simplify assembling the system.

They defined modules of 20 nodes connected by relatively simple “thin switches.” Each of these so-called scalable units could be laid down, cookie-cutter style, turned on and tested before the next one was added.

The design let engineers specify set lengths of cables that could be bundled together with Velcro at the factory. Racks could be labeled and mapped, radically simplifying the process of filling them with dozens of systems.
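The unit-by-unit rollout described above can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA's deployment tooling: the names `plan_scalable_units` and `deploy_incrementally` and the `validate` callback are hypothetical. Only the 20-node module size and Selene's 280-node total come from the article.

```python
from typing import Callable, List


def plan_scalable_units(total_nodes: int, unit_size: int = 20) -> List[range]:
    """Split node indices 0..total_nodes-1 into consecutive scalable units."""
    if total_nodes < 0 or unit_size <= 0:
        raise ValueError("total_nodes must be >= 0 and unit_size must be > 0")
    return [
        range(start, min(start + unit_size, total_nodes))
        for start in range(0, total_nodes, unit_size)
    ]


def deploy_incrementally(units: List[range], validate: Callable[[range], bool]) -> int:
    """Bring units online one at a time; stop at the first unit that fails.

    Returns the number of units that passed validation.
    """
    online = 0
    for unit in units:
        if not validate(unit):  # hypothetical check, e.g. cabling and burn-in tests
            break
        online += 1
    return online


units = plan_scalable_units(280)  # Selene's node count, from the article
passed = deploy_incrementally(units, validate=lambda unit: len(unit) == 20)
print(len(units), passed)
```

Because each unit is validated before the next is added, a failure stops the rollout at a known boundary instead of forcing a teardown of the whole cluster.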

Doubling Up on InfiniBand

Early on, the team learned to split up compute, storage and management fabrics into independent planes, spreading them across more, faster network-interface cards.

The number of NICs per GPU doubled to two. So did their speeds, going from 100 Gbit per second InfiniBand in Circe to 200G HDR InfiniBand in Selene. The result was a 4x increase in the effective node bandwidth.
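The 4x figure is simply the product of the two doublings. A short worked example, assuming "doubled to two" means one NIC per GPU in Circe and two in Selene (the helper `per_gpu_bandwidth_gbps` is a hypothetical name used only for illustration):

```python
def per_gpu_bandwidth_gbps(nics_per_gpu: float, nic_speed_gbps: float) -> float:
    """Aggregate InfiniBand bandwidth available to one GPU, in Gbit/s."""
    return nics_per_gpu * nic_speed_gbps


# Assumption: one NIC per GPU before the change, two after, per the article's wording.
circe = per_gpu_bandwidth_gbps(nics_per_gpu=1, nic_speed_gbps=100)
selene = per_gpu_bandwidth_gbps(nics_per_gpu=2, nic_speed_gbps=200)
speedup = selene / circe
print(f"{circe:.0f} -> {selene:.0f} Gbit/s per GPU, {speedup:.0f}x")
```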

Likewise, memory and storage links grew in capacity and throughput to handle jobs with hot, warm and cold storage needs. Four storage tiers spanned 100 TB/s memory links to 100 GB/s storage pools.

Power and thermals stayed within air-cooled limits. The default designs used the 35 kW racks typical in leased data centers, but they can stretch beyond 50 kW for the most aggressive supercomputer centers and shrink to the 7 kW racks some telcos use.
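To see how rack budgets like these translate into density, here is a rough sketch. The per-node power draw is not given in the article, so `NODE_POWER_KW` below is an assumed value chosen purely for illustration, and `nodes_per_rack` is a hypothetical helper. Real planning would also reserve power for switches and other rack equipment.

```python
import math


def nodes_per_rack(rack_budget_kw: float, node_power_kw: float) -> int:
    """Largest whole number of nodes whose combined draw fits the rack budget."""
    if node_power_kw <= 0:
        raise ValueError("node_power_kw must be positive")
    return math.floor(rack_budget_kw / node_power_kw)


NODE_POWER_KW = 6.5  # assumed per-node draw, for illustration only

for budget_kw in (7, 35, 50):  # rack budgets mentioned in the article
    print(budget_kw, "kW rack ->", nodes_per_rack(budget_kw, NODE_POWER_KW), "nodes")
```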

Seeking the Big, Balanced System

The net result is a more balanced design that can handle today’s many different workloads. That flexibility also gives researchers the freedom to explore new directions in AI and high-performance computing.

“To some extent HPC and AI both require max performance, but you have to look carefully at how you deliver that performance in terms of power, storage and networking as well as raw processing,” said Julie Bernauer, who leads an advanced development team that’s worked on all of NVIDIA’s large-scale systems.

Skeleton Crews on Strict Protocols

The gains paid off in early 2020.

Within days of the pandemic hitting, the first NVIDIA Ampere architecture GPUs arrived, and engineers faced the job of assembling the 280-node Selene.

In the best of times, it can take dozens of engineers a few months to assemble, test and commission a supercomputer-class system. NVIDIA had to get Selene running in a few weeks to participate in industry benchmarks and fulfill obligations to customers like Argonne.

And engineers had to stay well within public-health guidelines of the pandemic.

“We had skeleton crews with strict protocols to keep staff healthy,” said Bernauer.

“To unbox and rack systems, we used two-person teams that didn’t mix with the others — they even took vacation at the same time. And we did cabling with six-foot distances between people. That really changes how you build systems,” she said.

Even with the COVID restrictions, engineers racked up to 60 systems in a day, the maximum their loading dock could handle. Virtual log-ins let administrators validate cabling remotely, testing the 20-node modules as they were deployed.
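Those numbers put a floor on the racking schedule. The back-of-the-envelope calculation below assumes the peak rate of 60 systems was sustained every day, which the article does not claim, so treat the result as a lower bound rather than the actual timeline.

```python
import math

TOTAL_NODES = 280  # Selene's size, from the article
MAX_PER_DAY = 60   # loading-dock limit, from the article
UNIT_SIZE = 20     # nodes per scalable unit, from the article

# Assumption: the dock runs at its maximum every day.
min_racking_days = math.ceil(TOTAL_NODES / MAX_PER_DAY)
units_per_day = MAX_PER_DAY // UNIT_SIZE
print(min_racking_days, units_per_day)
```

At that pace, up to three 20-node modules a day would become available for remote validation.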

Bernauer’s team put several layers of automation in place. That cut the need for people at the co-location facility where Selene was built, a block from NVIDIA’s Silicon Valley headquarters.

Slacking with a Supercomputer

Selene talks to staff over a Slack channel as if it were a co-worker, reporting loose cables and isolating malfunctioning hardware so the system can keep running.

“We don’t want to wake up in the night because the cluster has a problem,” Bernauer said.

It’s part of the automation customers can access if they follow the guidance in the DGX POD and SuperPOD architectures.
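The article does not describe how Selene's Slack integration is built. As a minimal sketch of the general pattern (isolate the faulty node, then post a message), the example below uses Slack's incoming-webhook convention of a JSON body with a `text` field. The functions `build_alert`, `send_to_slack` and `handle_fault`, the node name and the in-memory `drained` set are all hypothetical stand-ins.

```python
import json
import urllib.request
from typing import Callable, Set


def build_alert(node: str, issue: str) -> dict:
    """Format a health event as a Slack message payload."""
    return {"text": f":warning: {node}: {issue}. Node drained; cluster still running."}


def send_to_slack(webhook_url: str, payload: dict) -> None:
    """POST the payload as JSON to a Slack incoming webhook."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        response.read()


def handle_fault(node: str, issue: str, drained: Set[str],
                 notify: Callable[[dict], None]) -> None:
    """Isolate the faulty node first, then tell the operators about it."""
    drained.add(node)  # hypothetical stand-in for removing the node from the scheduler
    notify(build_alert(node, issue))


# Dry run: collect messages in a list instead of calling Slack.
drained_nodes: Set[str] = set()
outbox: list = []
handle_fault("node-042", "InfiniBand link down", drained_nodes, outbox.append)
print(outbox[0]["text"])
```

To send real messages, pass `lambda payload: send_to_slack(url, payload)` as `notify`, where `url` is an incoming-webhook URL from your own workspace.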

Thanks to this approach, the University of Florida, for example, is expected to rack and power up a 140-node extension to its HiPerGator system, switching on the most powerful AI supercomputer in academia within as little as 10 days of receiving it.

As an added touch, the NVIDIA team bought a telepresence robot from Double Robotics so non-essential designers sheltering at home could maintain daily contact with Selene. Tongue-in-cheek, they dubbed it Trip, given early concerns that essential technicians on site might bump into it.

The fact that Trip is powered by an NVIDIA Jetson TX2 module was an added attraction for team members who imagined some day they might tinker with its programming.

Since late July, Trip’s been used regularly to let them virtually drive through Selene’s aisles, observing the system through the robot’s camera and microphone.

“Trip doesn’t replace a human operator, but if you are worried about something at 2 a.m., you can check it without driving to the data center,” Bernauer said.

Delivering HPC, AI Results at Scale

In the end, it’s all about the results, and they came fast.

In June, Selene hit No. 7 on the TOP500 list and No. 2 on the Green500 list of the most power-efficient systems. In July, it broke records in all eight systems tests for AI training performance in the latest MLPerf benchmarks.

“The big surprise for me was how smoothly everything came up given we were using new processors and boards, and I credit all the testing along the way,” said Houston. “To get this machine up and do a bunch of hard back-to-back benchmarks gave the team a huge lift,” he added.

The work pre-testing NGC containers and HPC software for Argonne was even more gratifying. The lab is already hammering on hard problems in protein docking and quantum chemistry to shine a light on the coronavirus.

Separately, Circe donates many of its free cycles to the Folding@Home initiative that fights COVID.

At the same time, NVIDIA’s own researchers are using Selene to train autonomous vehicles and refine conversational AI, nearing advances they’re expected to report soon. Their work accounts for some of the more than a thousand jobs run, often simultaneously, on the system so far.

Meanwhile, the team already has ideas on the whiteboard for what’s next. “Give performance-obsessed engineers enough horsepower and cables and they will figure out amazing things,” said Bernauer.

At top: An artist’s rendering of a portion of Selene.

The post AI of the Storm: How We Built the Most Powerful Industrial Computer in the U.S. in Three Weeks During a Pandemic appeared first on The Official NVIDIA Blog.