NVIDIA’s first small language model for digital human technology is being demonstrated in Mecha BREAK, a new multiplayer mech game developed by Amazing Seasun Games, where it brings characters to life and provides a more dynamic and immersive gameplay experience on GeForce RTX AI PCs.

The new on-device model, called Nemotron-4 4B Instruct, improves the conversation abilities of game characters, allowing them to more intuitively comprehend players and respond naturally.

NVIDIA has optimized ACE technology to run directly on GeForce RTX AI PCs and laptops. This greatly improves developers’ ability to deploy state-of-the-art digital human technology in next-generation games such as Mecha BREAK.

A Small Language Model Purpose-Built for Role-Playing

NVIDIA Nemotron-4 4B Instruct provides better role-play, retrieval-augmented generation and function-calling capabilities, allowing game characters to more intuitively comprehend player instructions, respond to gamers and perform more accurate and relevant actions.

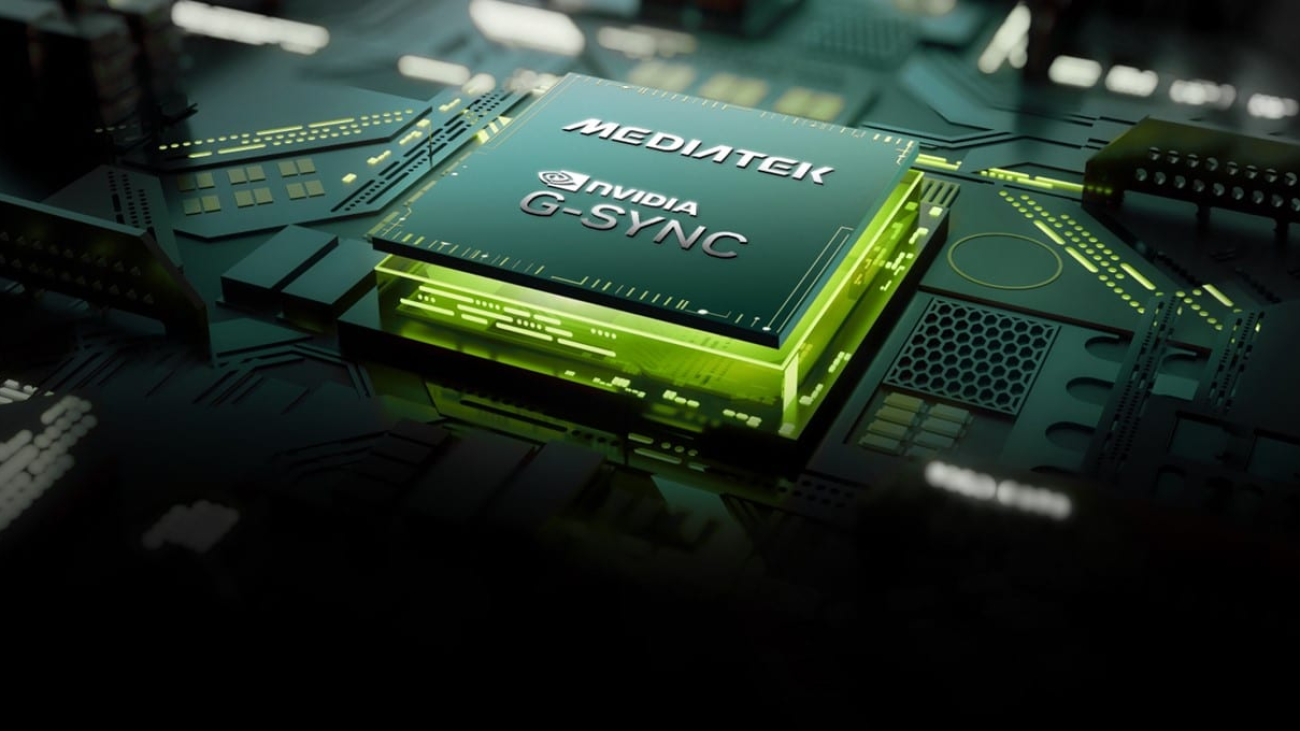
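
To make the function-calling idea concrete, here is a minimal Python sketch of how a game might route a model's structured output to an in-game action. Everything in it is an assumption for illustration: the JSON shape, the `recommend_mech` and `set_paint_color` handlers and the `dispatch` helper are hypothetical and are not part of NVIDIA ACE, the NIM or Mecha BREAK.

```python
import json

# Hypothetical in-game actions an NPC mechanic might trigger.
def recommend_mech(playstyle: str) -> str:
    catalog = {"sniper": "long-range mech", "brawler": "heavy melee mech"}
    return catalog.get(playstyle, "balanced all-rounder mech")

def set_paint_color(color: str) -> str:
    return f"paint set to {color}"

# Registry mapping function names the model may emit to game handlers.
HANDLERS = {"recommend_mech": recommend_mech, "set_paint_color": set_paint_color}

def dispatch(model_output: str) -> str:
    """Parse an assumed JSON function call and run the matching handler."""
    try:
        call = json.loads(model_output)
        name = call["name"]
        args = call.get("arguments", {})
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError):
        return "no action: output was not a valid function call"
    # Only run functions the game has explicitly registered.
    if not isinstance(name, str) or name not in HANDLERS:
        return f"no action: unknown function {name!r}"
    try:
        return HANDLERS[name](**args)
    except TypeError:
        return f"no action: bad arguments for {name!r}"

if __name__ == "__main__":
    # Assumed model output for a request like "I like to fight from far away."
    print(dispatch('{"name": "recommend_mech", "arguments": {"playstyle": "sniper"}}'))
```

Keeping an explicit registry means the game only ever executes actions it has whitelisted, and malformed or unexpected model output falls back to a harmless message instead of raising an error mid-conversation.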

The model is available as an NVIDIA NIM microservice, which provides a streamlined path for developing and deploying generative AI-powered applications. The NIM is optimized for low memory usage, offering faster response times and providing developers a way to take advantage of over 100 million GeForce RTX-powered PCs and laptops.

Nemotron-4 4B Instruct is part of NVIDIA ACE, a suite of digital human technologies that provide speech, intelligence and animation powered by generative AI. It’s available as a NIM for cloud and on-device deployment by game developers.

First Game Showcases ACE NIM Microservices

Mecha BREAK, developed by Amazing Seasun Games, a Kingsoft Corporation game subsidiary, is showcasing the NVIDIA Nemotron-4 4B Instruct NIM running on device in the first display of ACE-powered game interactions. The NVIDIA Audio2Face-3D NIM and Whisper, OpenAI’s automatic speech recognition model, provide facial animation and speech recognition running on-device. ElevenLabs powers the character’s voice through the cloud.

In this demo, first shown at Gamescom, one of the world’s biggest gaming expos, NVIDIA ACE and digital human technologies let players interact with a mechanic non-playable character (NPC). The NPC can help them choose from a diverse range of mechanized robots, or mechs, to complement their playstyle or team needs, assist with appearance customization and advise on how best to prepare their colossal war machine for battle.

“We’re excited to showcase the power and potential of ACE NIM microservices in Mecha BREAK, using Audio2Face and Nemotron-4 4B Instruct to dramatically enhance in-game immersion,” said Kris Kwok, CEO of Amazing Seasun Games.

Perfect World Games Explores Latest Digital Human Technologies

NVIDIA ACE and digital human technologies continue to expand their footprint in the gaming industry.

Global game publisher and developer Perfect World Games is advancing its NVIDIA ACE and digital human technology demo, Legends, with new AI-powered vision capabilities. Within the demo, the character Yun Ni can see gamers through the computer’s camera and identify people and objects in the real world. This vision capability is powered by GPT-4o and adds an augmented reality layer to the gameplay experience. These capabilities unlock a new level of immersion and accessibility for PC games.

Learn more about NVIDIA ACE and download the NIM to begin building game characters powered by generative AI.
