Some dream of code. Others dream of beer. NVIDIA’s Eric Boucher does both at once, and the result couldn’t be more aptly named.

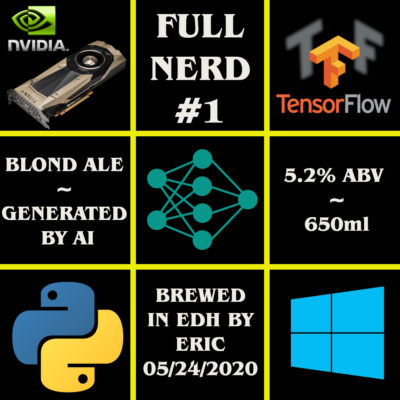

Full Nerd #1 is a crisp, light-bodied blonde ale perfect for summertime quaffing.

Eric, an engineer in the GPU systems software kernel driver team, went to sleep one night in May wrestling with two problems.

One, he had to wring key information from the often cryptic logs for the systems he oversees to help his team respond to issues faster.

The other: the veteran home brewer wanted a way to brew new kinds of beer.

“I woke up in the morning and I knew just what to do,” Boucher said. “Basically I got both done on one night’s broken sleep.”

Both solutions involved putting deep learning to work on an NVIDIA TITAN V GPU. Such powerful gear tends to encourage this sort of parallel processing, it seems.

Eric, a native of France now based near Sacramento, Calif., began homebrewing two decades ago, inspired by a friend and mentor at Sun Microsystems. He took a break from it when his children were first born.

Now that they’re older, he’s begun brewing again in earnest, using gear in both his garage and backyard, turning to AI for new recipes this spring.

Of course, AI has been used in the past to help humans analyze beer flavors, and even create wild new craft beer names. Eric’s project, however, is more ambitious, because it’s relying on AI to create new beer recipes.

You’ve Got Ale — GPU Speeds New Brew Ideas

For training data, Eric started with the all-grain ale recipes from MoreBeer, a hub for brewing enthusiasts, where he usually shops for recipe kits and ingredients.

Eric focused on ales because they’re relatively easy and quick to brew, and encompass a broad range of different styles, from hearty Irish stout to tangy and refreshing Kölsch.

He used wget — an open source program that retrieves content from the web — to save four index pages of ale recipes.

Then, using a Python script, he filtered the downloaded HTML pages and downloaded the linked recipe PDFs. He then converted the PDFs to plain text and used another Python script to interpret the text and generate recipes in a standardized format.
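Eric's scripts aren't published in this post, so the following is only a minimal sketch of how the link-filtering step might look using Python's standard library. The class name, function name, sample HTML and `example.com` base URL are all hypothetical, chosen for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PdfLinkParser(HTMLParser):
    """Collect the targets of <a> tags that point at PDF files."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.pdf_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Keep only links whose path ends in .pdf (case-insensitive),
        # ignoring any query string.
        if href and href.lower().split("?")[0].endswith(".pdf"):
            url = urljoin(self.base_url, href)
            if url not in self.pdf_links:  # skip duplicates, keep order
                self.pdf_links.append(url)


def extract_pdf_links(html_text, base_url):
    """Return absolute URLs for every PDF linked from one saved page."""
    parser = PdfLinkParser(base_url)
    parser.feed(html_text)
    return parser.pdf_links


# Hypothetical index page content, for illustration only.
sample_html = """
<ul>
  <li><a href="/recipes/blonde-ale.pdf">Blonde Ale</a></li>
  <li><a href="/recipes/stout.PDF?v=2">Stout</a></li>
  <li><a href="/about.html">About</a></li>
  <li><a href="/recipes/blonde-ale.pdf">Blonde Ale (again)</a></li>
</ul>
"""
links = extract_pdf_links(sample_html, "https://example.com/")
```

Each URL in `links` could then be downloaded and handed to whatever PDF-to-text converter is on hand; the post doesn't say which one Eric used.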

He fed these 108 recipes (including one for Russian River Brewing’s legendary Pliny the Elder IPA) to textgenrnn, a text-generation tool built on a recurrent neural network, a type of neural network that can be applied to a sequence of data to help guess what should come next.
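To give a feel for what "guessing what should come next" means, here is a deliberately tiny stand-in: a character-level Markov model that counts which character tends to follow each short context. It is not a recurrent neural network and not what Eric ran; it only illustrates next-character prediction. The function names and the toy text are made up for this sketch.

```python
import random
from collections import Counter, defaultdict


def train_char_model(text, order=3):
    """Count which character follows each `order`-character context."""
    counts = defaultdict(Counter)
    for i in range(len(text) - order):
        context = text[i:i + order]
        counts[context][text[i + order]] += 1
    return counts


def generate(counts, seed, length=40, rng=None):
    """Extend `seed` one character at a time by sampling from the counts.

    The seed must be exactly as long as the order used in training.
    """
    rng = rng or random.Random(0)  # fixed seed for repeatable output
    order = len(seed)
    out = seed
    for _ in range(length):
        followers = counts.get(out[-order:])
        if not followers:
            break  # context never seen in training, so stop early
        chars, weights = zip(*followers.items())
        out += rng.choices(chars, weights=weights)[0]
    return out


# Toy text in a made-up format; not real recipe data.
toy_text = "hops: cascade\nhops: amarillo\nhops: warrior\n" * 3
model = train_char_model(toy_text, order=3)
sample = generate(model, seed="hop", length=30)
```

A recurrent network plays the same game with a learned, much longer memory of what came before, which is what lets it produce whole recipes rather than short echoes of its training text.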

And, because no one likes to wait for good beer, he ran it on an NVIDIA TITAN V GPU. Eric estimates the GPU cut the time needed to train on the recipe database to seven minutes, down from one hour and 45 minutes on a CPU alone.
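For a sense of scale, those two estimates work out to a fifteenfold speedup. The quick calculation below uses only the figures quoted above; the variable names are just for illustration.

```python
# Training-time estimates quoted in the article, in minutes.
cpu_minutes = 1 * 60 + 45   # one hour and 45 minutes on a CPU alone
gpu_minutes = 7             # on the TITAN V GPU

speedup = cpu_minutes / gpu_minutes
print(f"GPU speedup: {speedup:.0f}x")
```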

After a little tuning, Eric generated 10 beer recipes. They ranged from dark stouts to yellowish ales, and in flavor from bitter to light.

To Eric’s surprise, most looked reasonable (though a few were “plain weird and impossible to brew” like a recipe that instructed him to wait 45 days with hops in the wort, or unfermented beer, before adding the yeast).

Speed of Light (Beer)

With the approaching hot California summer in mind, Eric selected a blonde ale.

He was particularly intrigued because the recipe called for adding Warrior, Cascade, and Amarillo hops (the flowers of the herbaceous perennial Humulus lupulus, which give good beer a range of flavors, from bitter to citrusy) on an “intriguing schedule.”


The result, Eric reports, was refreshing, “not too sweet, not too bitter,” with “a nice, fresh hops smell and a long, complex finish.”

He dubbed the result Full Nerd #1.

The AI-generated brew became the latest in a long line of brews with witty names Eric has produced, including a bourbon oak-infused beer named, appropriately enough, “The Groot Beer,” in honor of the tree-like creature from Marvel’s “Guardians of the Galaxy.”

Eric’s next AI brewing project: perhaps a dark stout, for winter, or a lager, a light, crisp beer that requires months of cold storage to mature.

For now, however, there’s plenty of good brew to drink. Perhaps too much. Eric usually shares his creations with his martial arts buddies. But with social distancing in place amidst the global COVID-19 pandemic, the five gallons, or forty pints, is more than the light drinker knows what to do with.

Eric, it seems, has found a problem deep learning can’t help him with. Bottoms up.

The post Deep Learning on Tap: NVIDIA Engineer Turns to AI, GPU to Invent New Brew appeared first on The Official NVIDIA Blog.