NVIDIA contributed the largest ever indoor synthetic dataset to the Computer Vision and Pattern Recognition (CVPR) conference’s annual AI City Challenge — helping researchers and developers advance the development of solutions for smart cities and industrial automation.

The challenge, which drew over 700 teams from nearly 50 countries, tasks participants with developing AI models that enhance operational efficiency in physical settings, such as retail and warehouse environments, and intelligent traffic systems.

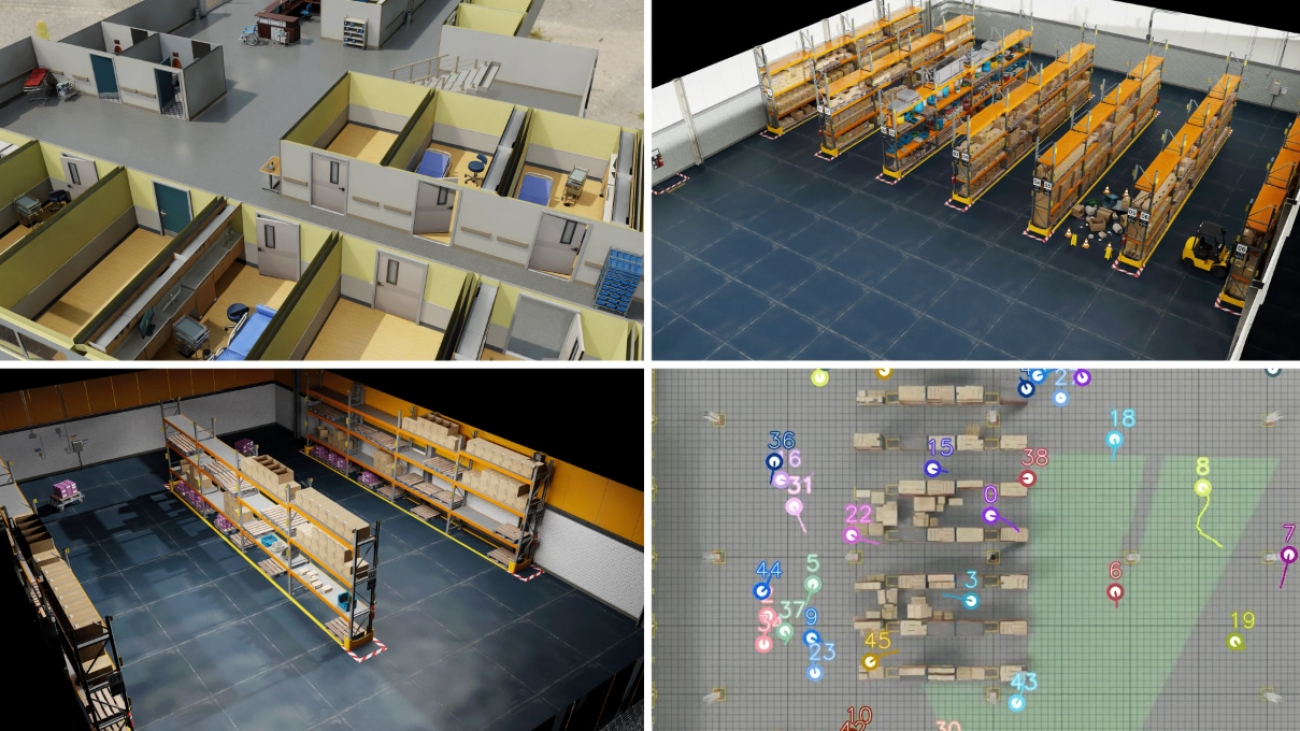

Teams tested their models on the datasets that were generated using NVIDIA Omniverse, a platform of application programming interfaces (APIs), software development kits (SDKs) and services that enable developers to build Universal Scene Description (OpenUSD)-based applications and workflows.

Creating and Simulating Digital Twins for Large Spaces

In large indoor spaces like factories and warehouses, daily activities involve a steady stream of people, small vehicles and, in the future, autonomous robots. Developers need solutions that can observe and measure activities, optimize operational efficiency, and prioritize human safety in complex, large-scale settings.

Researchers are addressing that need with computer vision models that can perceive and understand the physical world. These models can be used in applications like multi-camera tracking, in which a model tracks multiple entities within a given environment.
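To give a rough sense of what multi-camera tracking involves, the sketch below links tracks seen by different cameras into shared identities by comparing appearance feature vectors. It is a minimal illustration, not the method used by any challenge team: the `assign_global_ids` helper, the 0.8 similarity threshold and the toy three-number feature vectors are all assumptions made for this example.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def assign_global_ids(tracks, threshold=0.8):
    """Greedily map (camera, local_id) tracks to shared global identities.

    tracks: dict mapping (camera, local_id) -> appearance feature vector.
    Returns a dict mapping (camera, local_id) -> global identity number.
    The threshold is a hypothetical value chosen for this toy data.
    """
    gallery = []      # one representative feature vector per global identity
    global_ids = {}
    for key in sorted(tracks):
        feature = tracks[key]
        best_id, best_score = None, threshold
        for gid, representative in enumerate(gallery):
            score = cosine_similarity(feature, representative)
            if score >= best_score:
                best_id, best_score = gid, score
        if best_id is None:
            # No existing identity is similar enough, so start a new one.
            gallery.append(feature)
            best_id = len(gallery) - 1
        global_ids[key] = best_id
    return global_ids

# Toy data: two people, each seen by two cameras under different local IDs.
tracks = {
    ("cam_a", 1): [0.9, 0.1, 0.0],
    ("cam_a", 2): [0.0, 0.2, 0.9],
    ("cam_b", 1): [0.1, 0.1, 0.95],
    ("cam_b", 2): [0.85, 0.2, 0.05],
}
global_ids = assign_global_ids(tracks)
for key, gid in sorted(global_ids.items()):
    print(key, "-> person", gid)
```

In this toy data, track 1 from `cam_a` and track 2 from `cam_b` end up with the same global identity, as do the remaining two tracks. A real system would also need to handle timing, camera geometry and the constraint that one person cannot appear twice in the same camera view at once, none of which this sketch attempts.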

To ensure their accuracy, the models must be trained on large, ground-truth datasets for a variety of real-world scenarios. But collecting that data can be a challenging, time-consuming and costly process.

AI researchers are turning to physically based simulations — such as digital twins of the physical world — to enhance AI simulation and training. These virtual environments can help generate synthetic data used to train AI models. Simulation also provides a way to run a multitude of “what-if” scenarios in a safe environment while addressing privacy and AI bias issues.

Synthetic data is important for AI training because it can be generated in large volumes and scaled up as needed. Teams can generate a diverse set of training data by varying many parameters, including lighting, object locations, textures and colors.
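The idea of varying scene parameters to diversify training data can be sketched in a few lines of plain Python. This is only an illustration of the concept, not Omniverse code: the `sample_scene_parameters` function, the parameter names and every value range below are hypothetical.

```python
import random

TEXTURES = ["concrete", "wood", "metal", "tile"]  # illustrative options only

def sample_scene_parameters(rng):
    """Draw one randomized scene configuration (all ranges are made up)."""
    return {
        "light_intensity": rng.uniform(200.0, 1500.0),   # arbitrary units
        "object_position": (rng.uniform(-10.0, 10.0), rng.uniform(-10.0, 10.0)),
        "texture": rng.choice(TEXTURES),
        "color_rgb": tuple(rng.randint(0, 255) for _ in range(3)),
    }

rng = random.Random(42)  # fixed seed so the same variations can be regenerated
scene_variations = [sample_scene_parameters(rng) for _ in range(5)]
for variation in scene_variations:
    print(variation)
```

Each dictionary stands for one variation of a scene that a renderer could turn into labeled images. Seeding the random generator keeps the generated set reproducible, which is useful when a dataset has to be rebuilt or extended later.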

Building Synthetic Datasets for the AI City Challenge

This year’s AI City Challenge consists of five computer vision challenge tracks that span traffic management to worker safety.

NVIDIA contributed datasets for the first track, Multi-Camera Person Tracking, which saw the highest participation, with over 400 teams. The challenge used a benchmark and the largest synthetic dataset of its kind — comprising 212 hours of 1080p videos at 30 frames per second spanning 90 scenes across six virtual environments, including a warehouse, retail store and hospital.
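For a sense of scale, the figures above can be turned into a frame count with some quick arithmetic. The calculation assumes that the 212 hours is the total footage across all cameras, that every video runs at exactly 30 frames per second, and that 1080p means 1920 x 1080 pixels; none of these details are spelled out beyond the numbers quoted above.

```python
HOURS_OF_VIDEO = 212
FRAMES_PER_SECOND = 30
SCENES = 90
WIDTH, HEIGHT = 1920, 1080  # assumed frame size for 1080p

total_seconds = HOURS_OF_VIDEO * 3600
total_frames = total_seconds * FRAMES_PER_SECOND
hours_per_scene = HOURS_OF_VIDEO / SCENES
pixels_per_frame = WIDTH * HEIGHT

print(f"Total frames: {total_frames:,}")
print(f"Average footage per scene: {hours_per_scene:.2f} hours")
print(f"Pixels per frame: {pixels_per_frame:,}")
```

Under those assumptions the dataset works out to roughly 22.9 million frames, or a little under two and a half hours of footage per scene on average.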

Created in Omniverse, these scenes simulated nearly 1,000 cameras and featured around 2,500 digital human characters. Omniverse also provided a way for the researchers to generate data of the right size and fidelity to achieve the desired outcomes.

The benchmarks were created using Omniverse Replicator in NVIDIA Isaac Sim, a reference application that enables developers to design, simulate and train AI for robots, smart spaces or autonomous machines in physically based virtual environments built on NVIDIA Omniverse.

Omniverse Replicator, an SDK for building synthetic data generation pipelines, automated many manual tasks involved in generating quality synthetic data, including domain randomization, camera placement and calibration, character movement, and semantic labeling of data and ground-truth for benchmarking.

Ten institutions and organizations are collaborating with NVIDIA for the AI City Challenge:

- Australian National University, Australia

- Emirates Center for Mobility Research, UAE

- Indian Institute of Technology Kanpur, India

- Iowa State University, U.S.

- Johns Hopkins University, U.S.

- National Yang Ming Chiao Tung University, Taiwan

- Santa Clara University, U.S.

- The United Arab Emirates University, UAE

- University at Albany – SUNY, U.S.

- Woven by Toyota, Japan

Driving the Future of Generative Physical AI

Researchers and companies around the world are developing infrastructure automation and robots powered by physical AI: models that can understand instructions and autonomously perform complex tasks in the real world.

Generative physical AI uses reinforcement learning in simulated environments, where it perceives the world using accurately simulated sensors, performs actions grounded by laws of physics, and receives feedback to reason about the next set of actions.
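The perceive, act and receive-feedback loop described above can be illustrated with a deliberately tiny reinforcement learning example. The sketch below is an assumption-laden toy: a five-cell corridor stands in for a physically simulated environment, and tabular Q-learning is used only because it is one of the simplest reinforcement learning algorithms, not because it is what any NVIDIA product uses. All names and hyperparameters are hypothetical.

```python
import random

N_STATES = 5          # positions 0..4 in a toy corridor; position 4 is the goal
ACTIONS = (0, 1)      # 0 = step left, 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # illustrative hyperparameters

def step(state, action):
    """Toy 'physics': move one cell, stop at the left wall, reward at the goal."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def choose_action(q_table, state, rng):
    """Epsilon-greedy choice with random tie-breaking."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q_table[state])
    return rng.choice([a for a in ACTIONS if q_table[state][a] == best])

def train(episodes=500, max_steps=50, seed=0):
    rng = random.Random(seed)
    q_table = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = choose_action(q_table, state, rng)      # act on the observed state
            next_state, reward, done = step(state, action)   # environment gives feedback
            target = reward if done else reward + GAMMA * max(q_table[next_state])
            q_table[state][action] += ALPHA * (target - q_table[state][action])
            state = next_state
            if done:
                break
    return q_table

q_table = train()
# Best action in each non-goal position after training.
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)
```

After training, the learned policy should prefer stepping right toward the goal from every position. In a realistic setting the `step` function would be replaced by a physics simulator with simulated sensors, and the lookup table by a neural network.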

Developers can tap into SDKs and APIs, such as the NVIDIA Metropolis developer stack, which includes a multi-camera tracking reference workflow, to add enhanced perception capabilities for factories, warehouses and retail operations. And with the latest release of NVIDIA Isaac Sim, developers can supercharge robotics workflows by simulating and training AI-based robots in physically based virtual spaces before real-world deployment.

Researchers and developers are also combining high-fidelity, physics-based simulation with advanced AI to bridge the gap between simulated training and real-world application. This helps ensure that synthetic training environments closely mimic real-world conditions for more seamless robot deployment.

NVIDIA is taking the accuracy and scale of simulations further with the recently announced NVIDIA Omniverse Cloud Sensor RTX, a set of microservices that enable physically accurate sensor simulation to accelerate the development of fully autonomous machines.

This technology will allow autonomous systems, whether a factory, vehicle or robot, to gather essential data to effectively perceive, navigate and interact with the real world. Using these microservices, developers can run large-scale tests on sensor perception within realistic, virtual environments, significantly reducing the time and cost associated with real-world testing.

Omniverse Cloud Sensor RTX microservices will be available later this year. Sign up for early access.

Showcasing Advanced AI With Research

Participants submitted research papers for the AI City Challenge and a few achieved top rankings, including:

- Overlap Suppression Clustering for Offline Multi-Camera People Tracking: This paper introduces a tracking method that includes identifying individuals within a single camera’s view, selecting clear images for easier recognition, grouping similar appearances, and helping to clarify identities in challenging situations.

- A Robust Online Multi-Camera People Tracking System With Geometric Consistency and State-aware Re-ID Correction: This research presents a new system that uses geometric and appearance data to improve tracking accuracy, and includes a mechanism that adjusts identification features to fix tracking errors.

- Cluster Self-Refinement for Enhanced Online Multi-Camera People Tracking: This research paper addresses specific challenges faced in online tracking, such as the storage of poor-quality data and errors in identity assignment.

All accepted papers will be presented at the AI City Challenge 2024 Workshop, taking place on June 17.

At CVPR 2024, NVIDIA Research will present over 50 papers, introducing generative physical AI breakthroughs with potential applications in areas like autonomous vehicle development and robotics.

Papers that used NVIDIA Omniverse to generate synthetic data or digital twins of environments for model simulation, testing and validation include:

- FoundationPose: Unified 6D Pose Estimation and Tracking of Novel Objects: FoundationPose is a versatile model for estimating and tracking the 3D position and orientation of objects. The model operates by using a few reference images or a 3D representation to accurately understand an object’s shape.

- Neural Implicit Representation for Building Digital Twins of Unknown Articulated Objects: This research paper presents a method for creating digital models of objects from two 3D scans, improving accuracy by analyzing how movable parts connect and move between positions.

- BEHAVIOR Vision Suite: Customizable Dataset Generation via Simulation: The BEHAVIOR Vision Suite generates customizable synthetic data for computer vision research, allowing researchers to adjust settings like lighting and object placement.

Read more about NVIDIA Research at CVPR, and learn more about the AI City Challenge.

Get started with NVIDIA Omniverse by downloading the standard license for free, accessing OpenUSD resources and learning how Omniverse Enterprise can connect teams. Follow Omniverse on Instagram, Medium, LinkedIn and X. For more, join the Omniverse community on the forums, Discord server, Twitch and YouTube channels.
