For younger generations, paper bills, loan forms and even cash might as well be in a museum. Smartphones in hand, their financial services largely take place online.

The financial-technology companies that serve them are in a race to develop AI that can make sense of the vast amount of data the companies collect — both to provide better customer service and to improve their own backend operations.

Vietnam-based fintech company MoMo has developed a super-app that includes payment and financial transaction processing in one self-contained online commerce platform. The convenience of this all-in-one mobile platform has already attracted over 30 million users in Vietnam.

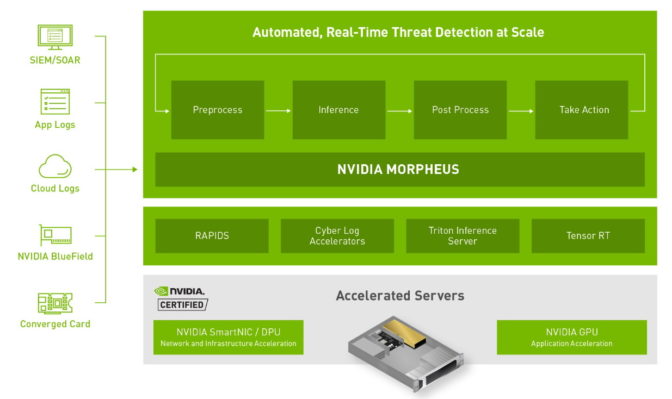

To improve the efficiency of the platform’s chatbots, electronic know-your-customer (eKYC) systems and recommendation engines, MoMo uses NVIDIA GPUs running in Google Cloud. It uses NVIDIA DGX systems for training and batch processing.

In just a few months, MoMo has sped up development of solutions that are more robust and easier to scale. Using NVIDIA GPUs for eKYC inference tasks has resulted in a 10x speedup compared with CPUs, the company says. For the MoMo Face Payment service, using TensorRT has reduced training and inference time by 10x.

AI Offers a Different Perspective

Tuan Trinh, director of data science at MoMo, describes his company’s use of AI as a way to get a different perspective on its business. One such project processes vast amounts of data and turns it into computerized visuals or graphs that can then be analyzed to improve connectivity between users in the app.

MoMo developed its own AI algorithm that uses over a billion data points to direct recommendations of additional services and products to its customers. These offerings help maintain a line of communication with the company’s user base that helps boost engagement and conversion.

The company also deploys a recommendation box on the home screen of its super-app. This caused its click-through rate to improve dramatically as the AI prompts customers with useful recommendations and keeps them engaged.

With AI, MoMo says it can process the habits of 10 million active users over the previous 30 to 60 days to train its predictive models. NVIDIA Triton Inference Server helps unify the serving flows for recommendation engines, which significantly reduces the effort needed to deploy AI applications in production environments. In addition, TensorRT has contributed to a 3x performance improvement in AI model inference for MoMo’s payment services, boosting the customer experience.
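As a rough illustration of what training on a recent activity window can look like, the sketch below keeps only events from the last 60 days and suggests services a user has not tried yet, ranked by popularity among other users. This is not MoMo’s algorithm: the function names, the event tuple layout and the popularity-based ranking are all assumptions made for the example.

```python
from collections import Counter, defaultdict
from datetime import date, timedelta


def recent_service_counts(events, today, window_days=60):
    """Count how often each user used each service in the last `window_days`.

    `events` is an iterable of (user_id, service, event_date) tuples
    (a hypothetical layout chosen for this sketch).
    """
    cutoff = today - timedelta(days=window_days)
    counts = defaultdict(Counter)
    for user_id, service, event_date in events:
        # Ignore anything older than the window or dated in the future.
        if cutoff <= event_date <= today:
            counts[user_id][service] += 1
    return counts


def recommend(counts, user_id, catalog, top_n=2):
    """Suggest unseen services, most popular among other users first."""
    popularity = Counter()
    for other_id, services in counts.items():
        if other_id != user_id:
            popularity.update(services)
    seen = set(counts.get(user_id, {}))
    candidates = [s for s in catalog if s not in seen]
    # Sort by descending popularity, then by name so ties are deterministic.
    return sorted(candidates, key=lambda s: (-popularity[s], s))[:top_n]


events = [
    ("u1", "bill_pay", date(2024, 5, 20)),
    ("u1", "top_up", date(2024, 1, 1)),  # outside the 60-day window
    ("u2", "movie_tickets", date(2024, 5, 25)),
    ("u2", "bill_pay", date(2024, 5, 26)),
    ("u3", "movie_tickets", date(2024, 5, 27)),
]
counts = recent_service_counts(events, today=date(2024, 6, 1))
suggestions = recommend(counts, "u1", ["bill_pay", "movie_tickets", "top_up"])
```

A production recommender would learn from far richer signals than raw counts. The point here is only the windowing step, which keeps the model focused on recent behavior.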

Chatbots Advance the Conversation

MoMo will use AI-powered chatbots to scale up faster as it accommodates and engages with more users. Chatbot services are especially effective in mobile apps, which tend to be popular with younger users, who often prefer them to phoning customer service.

Chatbot users can inquire about a product and get the support they need to evaluate it before purchasing — all from one interface — which is essential for a super-app like MoMo’s that functions as a one-stop-shop.

The chatbots are also an effective vehicle for upselling or suggesting additional services, MoMo says. When combined with machine learning, it’s possible to categorize target audiences for different products or services to customize their experience with the app.

AI chatbots have the additional benefit of freeing up MoMo’s customer service team to handle other important tasks.

Better Credit Scoring

Using AI algorithms, MoMo can apply credit history data from all of its 30 million-plus users to the models it uses for risk control in financial services. The company has applied credit scoring to the lending services within its super-app. Because it doesn’t depend solely on traditional deep learning for less complex tasks, MoMo’s development team has been able to obtain higher accuracy with shorter processing times.

The MoMo app takes less than 2 seconds to make a lending decision, yet more accurate AI predictions still help it avoid risky loans. This helps keep customers from taking on too much debt and keeps MoMo from missing out on potential revenue.

Since AI is capable of processing both structured and unstructured data, it’s able to incorporate information beyond traditional credit scores, like whether customers spend their money on necessities or luxuries, to assess a borrower’s risk more accurately.
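To make the idea concrete, here is a minimal sketch of a risk score that mixes a conventional signal (late-payment rate) with a behavioral one (the share of spending that goes to luxuries). It is a plain logistic model with hand-picked weights, not MoMo’s scoring system: every feature name, weight and threshold below is hypothetical, and a real model would learn its parameters from data.

```python
import math

# Hypothetical weights for illustration only; not learned from real data.
WEIGHTS = {
    "bias": -1.0,
    "late_payment_rate": 3.0,
    "luxury_spend_share": 1.5,
    "debt_to_income": 2.0,
}


def luxury_spend_share(transactions):
    """Fraction of total spend that falls in the 'luxury' category.

    `transactions` is a list of (category, amount) pairs.
    """
    total = sum(amount for _, amount in transactions)
    if total == 0:
        return 0.0
    luxury = sum(amount for category, amount in transactions if category == "luxury")
    return luxury / total


def default_probability(late_payment_rate, transactions, debt_to_income):
    """Logistic estimate of default risk from the three features above."""
    z = (
        WEIGHTS["bias"]
        + WEIGHTS["late_payment_rate"] * late_payment_rate
        + WEIGHTS["luxury_spend_share"] * luxury_spend_share(transactions)
        + WEIGHTS["debt_to_income"] * debt_to_income
    )
    return 1.0 / (1.0 + math.exp(-z))


def approve(probability, threshold=0.5):
    """Approve the loan when estimated default risk is below the threshold."""
    return probability < threshold


careful = [("necessity", 80.0), ("luxury", 20.0)]
risky = [("luxury", 90.0), ("necessity", 10.0)]
p_careful = default_probability(0.0, careful, 0.1)
p_risky = default_probability(0.5, risky, 0.6)
```

With these made-up weights, the borrower who spends mostly on necessities and pays on time scores well below the threshold, while the luxury-heavy borrower with late payments scores above it.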

Future of AI in Fintech

With fintechs increasingly applying AI to their massive data stores, MoMo’s team predicts the industry will need to evaluate how to do so in a way that keeps user data safe — or risk losing customer loyalty. MoMo already plans to expand its use of graph neural networks and models based on its proven ability to dramatically improve its operations.

The MoMo team also believes that AI could one day make credit scores obsolete. Since AI is able to make decisions based on broader unstructured data, it’s possible to determine loan approval by considering other risks besides a credit score. This would help open up the pool of potential users on fintech apps like MoMo’s to people in underserved and underbanked communities, who may not have credit scores, let alone “good” ones.

Around one in four American adults is “underbanked,” which makes it more difficult for them to get a loan or credit card. More than half of Africa’s population is completely “credit invisible,” a term for people without a bank account or a credit score. MoMo believes AI could bring banking access to communities like these and open up a new user base for fintech apps at the same time.

Explore NVIDIA’s AI solutions and enterprise-level AI platforms driving innovation in financial services.

The post All-In-One Financial Services? Vietnam’s MoMo Has a Super-App for That appeared first on NVIDIA Blog.
