Attention, recruits! It’s time to test combat skills and strategic prowess. Drop into the heart of the action this GFN Thursday with the launch of the highly anticipated first-person blockbuster Call of Duty: Black Ops 6, streaming in the cloud Oct. 24, 9pm PT.

Plus, embark on an adventure to defend an empire in the remake of the classic role-playing game (RPG), Romancing SaGa 2: Revenge of the Seven.

That’s just the tip of the iceberg. These are part of the nine titles being added to GeForce NOW’s library of over 2,000 games. Members can also look forward to a new reward — a free in-game Stag-Heart Skull Sallet Hat — for the award-winning RPG The Elder Scrolls Online, starting on Thursday, Oct. 31. Get ready by opting into GeForce NOW’s Rewards program today.

Mission Critical

Get ready, soldiers: the next assignment awaits in the cloud. Call of Duty: Black Ops 6 is set against the backdrop of the Gulf War in the early 1990s and brings a thrilling Campaign, action-packed Multiplayer and a Zombies experience sure to delight fans. The new game introduces the Omnimovement system to the franchise, allowing players to sprint, slide and dive in any direction, enhancing tactical gameplay across all modes.

Call of Duty: Black Ops 6 launches with 16 new Multiplayer maps and two Zombies maps, Terminus and Liberty Falls. In Multiplayer, the classic Prestige system makes a comeback, while the single-player Campaign promises a gripping espionage narrative. With its blend of historical fiction, gameplay innovations and fan-favorite features, Black Ops 6 aims to deliver an intense Call of Duty experience that pushes the boundaries of the franchise.

Prepare for adrenaline-fueled missions with an Ultimate GeForce NOW membership. These members get an advantage on the field with ultra-low-latency gaming, streaming from a GeForce RTX 4080 gaming rig in the cloud.

Lucky Number Seven

Save the Empire with a little help from the cloud. Romancing SaGa 2: Revenge of the Seven is coming to GeForce NOW.

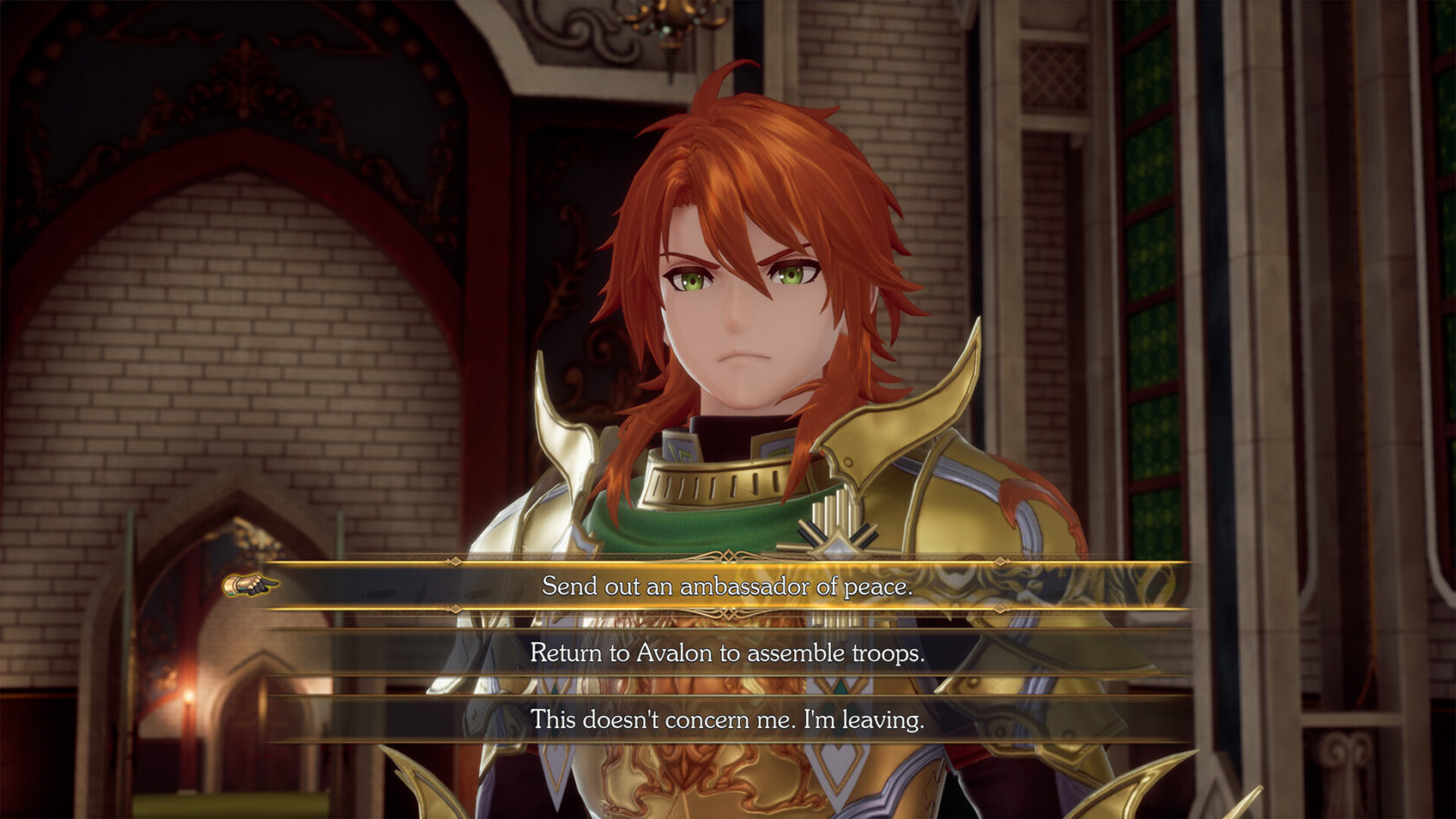

Romancing SaGa 2: Revenge of the Seven is a full remake of Square Enix’s groundbreaking nonlinear RPG, which was first released in Japan in 1993. The adventure includes both new and classic SaGa franchise features, complete with Japanese and English voiceovers, original and rearranged compositions, and much more. It’s an ideal entry point for new players and provides an amped-up experience for longtime fans.

The game features the Seven Heroes, once hailed as saviors before the ancients feared their power and banished them to another dimension. Thousands of years have passed, and the heroes have become legends. Furious that humankind has forgotten their many sacrifices, they’ve now returned as villains bent on revenge.

Members can join forces with characters from over 30 different classes featuring a wide variety of professions and races, each with their own favored weapons, unique abilities and effective tactics. Ultimate members can stream at up to 4K resolution with extended session lengths and more.

Hircine’s Hunt … From the Cloud

A new game reward has arrived for GeForce NOW members. New and existing The Elder Scrolls Online players can don the rare Stag-Heart Skull Sallet Hat, a fierce antlered helm with untamed strength. It’s the perfect way for gamers to forge their legends in Tamriel and stand out during the Witches Festival.

Members who’ve opted into GeForce NOW’s Rewards program can check their email for instructions on how to redeem it. Ultimate and Priority members can start redeeming the reward now, while free members will be able to claim it starting tomorrow, Oct. 25. It’s available through Sunday, Nov. 24, first come, first served.

Play Today

Members can look for the following games available to stream in the cloud this week:

- Worshippers of Cthulhu (New release on Steam, Oct. 21)

- No More Room in Hell 2 (New release on Steam, Oct. 22)

- Romancing SaGa 2: Revenge of the Seven (New release on Steam, Oct. 24)

- Windblown (New release on Steam, Oct. 24)

- Call of Duty: Black Ops 6 (New release on Steam, Battle.net and Xbox, available on PC Game Pass, Oct. 25)

- Call of Duty HQ, including Call of Duty: Modern Warfare III and Call of Duty: Warzone (Xbox, available on PC Game Pass)

- DUCKSIDE (Steam)

- Off the Grid (Epic Games Store)

- Selaco (Steam)

What are you planning to play this weekend? Let us know on X or in the comments below.

“the cloud is calling, will you answer?” NVIDIA GeForce NOW (@NVIDIAGFN), October 23, 2024