Editor’s note: This post is a part of our Meet the Omnivore series, which features individual creators and developers who use NVIDIA Omniverse to accelerate their 3D workflows and create virtual worlds.

Creative studio Elara Systems doesn’t shy away from sensitive subjects in its work.

Part of its mission for a recent client was to use fun, captivating visuals to help normalize what could be considered a touchy health subject — and boost medical outcomes as a result.

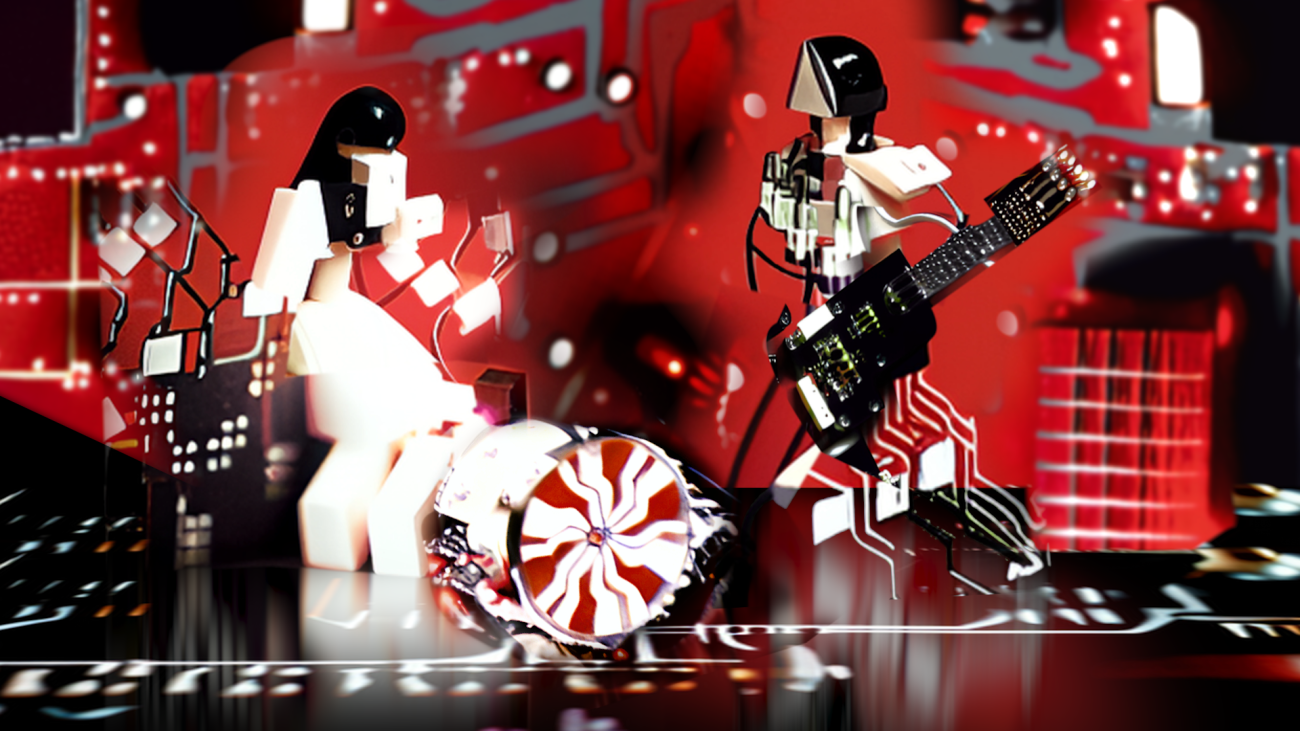

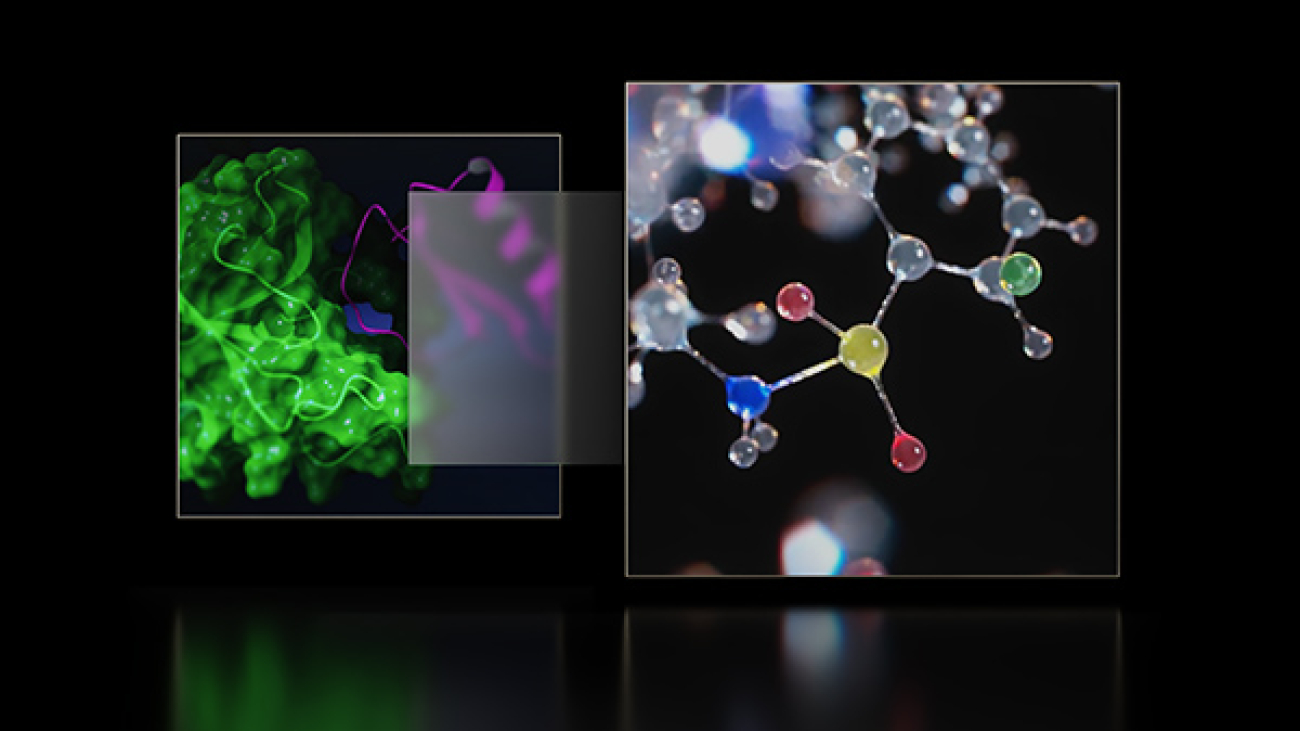

In collaboration with Boston Scientific and the Sickle Cell Society, the Elara Systems team created a character-driven 3D medical animation using the NVIDIA Omniverse development platform for connecting 3D pipelines and building metaverse applications.

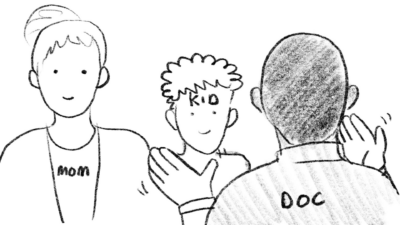

The video aims to help adolescents living with sickle cell disease understand the importance of quickly telling an adult or a medical professional if they’re experiencing symptoms like priapism (a prolonged, painful erection that could lead to permanent bodily damage).

“Needless to say, this is something that could be quite frightening for a young person to deal with,” said Benjamin Samar, technical director at Elara Systems. “We wanted to make it crystal clear that living with and managing this condition is achievable and, most importantly, that there’s nothing to be ashamed of.”

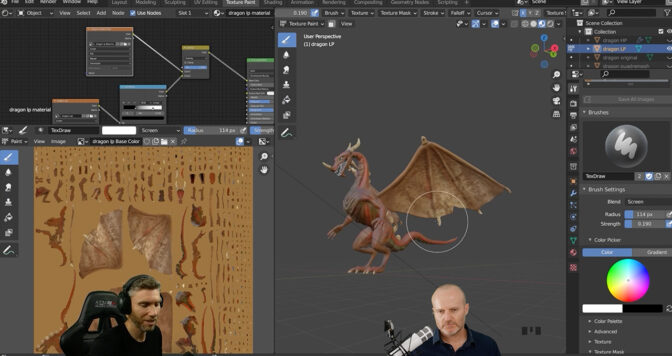

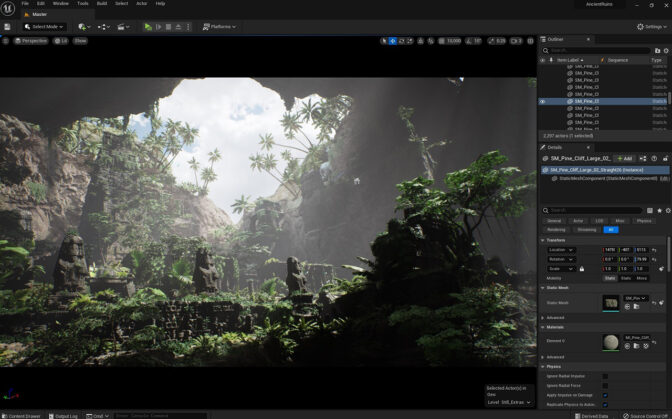

To bring their projects to life, the Elara Systems team turns to the USD Composer app, generative AI-powered Audio2Face and Audio2Gesture, as well as Omniverse Connectors to Adobe Substance 3D Painter, Autodesk 3ds Max, Autodesk Maya and other popular 3D content-creation tools like Blender, Epic Games Unreal Engine, Reallusion iClone and Unity.

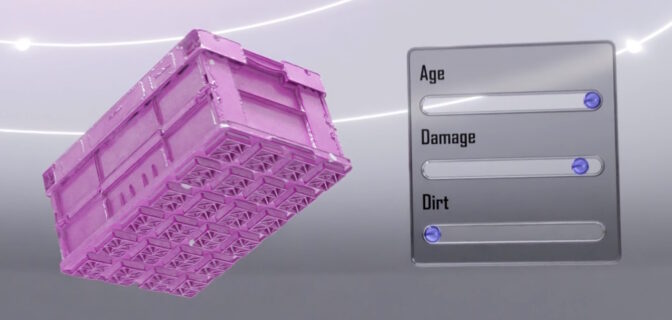

For the sickle cell project, the team relied on Adobe Substance 3D Painter to organize various 3D environments and apply custom textures to all five characters. Adobe After Effects was used to composite the rendered content into a single, cohesive short film.

It’s all made possible thanks to the open and extensible Universal Scene Description (USD) framework on which Omniverse is built.

“USD is extremely powerful and solves a ton of problems that many people may not realize even exist when it comes to effectively collaborating on a project,” Samar said. “For example, I can build a scene in Substance 3D Painter, export it to USD format and bring it into USD Composer with a single click. Shaders are automatically generated and linked, and we can customize things further if desired.”

An Animation to Boost Awareness

Grounding the sickle cell awareness campaign in a relatable, personal narrative was a “uniquely human approach to an otherwise clinical discussion,” said Samar, who has nearly two decades of industry experience spanning video production, motion graphics, 3D animation and extended reality.

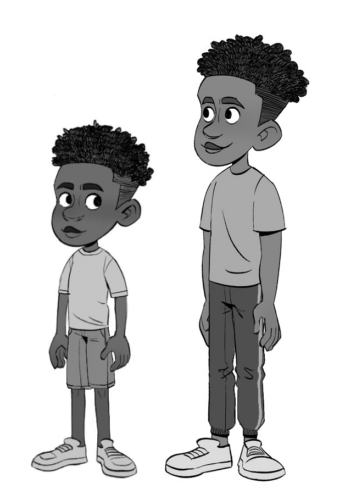

The team carried out this approach through a 3D character named Leon, a 13-year-old soccer lover who recounts a tough day when he first learned how to manage his sickle cell disease.

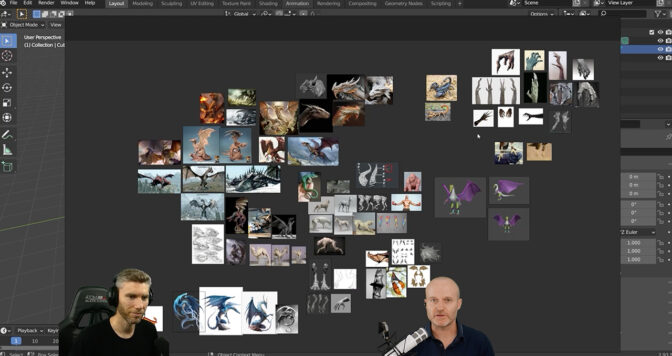

The project began with detailed discussions about the Sickle Cell Society’s goals for the short, followed by scripting, storyboarding and creating various sketches. “Once an early concept begins to crystallize in the artists’ minds, the creative process is born and begins to build momentum,” Samar said.

Then, the team created rough 2D mockups using the illustration app Procreate on a tablet. This stage of the artistic process centered on establishing character outfits, proportions and other details. The final concept art was used as a clear reference to drive the rest of the team’s design decisions.

Moving to 3D, the Elara Systems team tapped Autodesk Maya to build, rig and fully animate the characters, as well as Adobe Substance 3D Painter and Autodesk 3ds Max to create the short’s various environments.

“I’ve found the animated point cache export option in the Omniverse Connector for Maya to be invaluable,” Samar said. “It helps ensure that what we’re seeing in Maya will persist when brought into USD Composer, which is where we take advantage of real-time rendering to create high-quality visuals.”

The real-time rendering enabled by Omniverse was “critically important, because without it, we would have had zero chance of completing and delivering this content anywhere near our targeted deadline,” Samar said.

“I’m also a big fan of the Reallusion to Omniverse workflow,” he added.

The Connector allows users to easily bring characters created using Reallusion iClone into Omniverse, which helps to deliver visually realistic skin shaders. And USD Composer can enable real-time performance sessions for iClone characters when live-linked with a motion-capture system.

“Omniverse offers so much potential to help streamline workflows for traditional 3D animation teams, and this is just scratching the surface — there’s an ever-expanding feature set for those interested in robotics, digital twins, extended reality and game design,” Samar said. “What I find most assuring is the sheer speed of the platform’s development — constant updates and new features are being added at a rapid pace.”

Join In on the Creation

Creators and developers across the world can download NVIDIA Omniverse for free, and enterprise teams can use the platform for their 3D projects.

Check out artwork from other “Omnivores” and submit projects in the gallery. Connect your workflows to Omniverse with software from Adobe, Autodesk, Epic Games, Maxon, Reallusion and more.

Follow NVIDIA Omniverse on Instagram, Medium, Twitter and YouTube for additional resources and inspiration. Check out the Omniverse forums, and join our Discord server and Twitch channel to chat with the community.