Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep diving on new GeForce RTX 40 Series GPU features, technologies and resources, and how they dramatically accelerate content creation.

An earlier version of the blog incorrectly noted that the December Studio Driver was available today. Stay tuned for updates on this month’s driver release.

Time to tackle one of the most challenging tasks for aspiring movie makers — creating aesthetically pleasing visual effects — courtesy of visual effects artist and filmmaker Jay Lippman this week In the NVIDIA Studio.

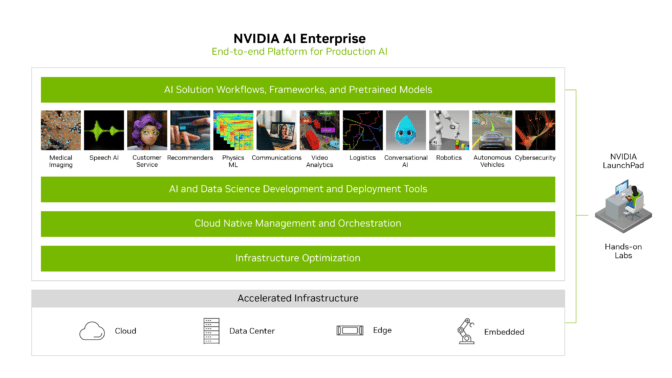

In addition, the new NVIDIA Omniverse Unreal Engine Connector 200.2 allows Unreal users to send and live-sync assets in the Omniverse Nucleus server — unlocking the ability to open, edit and sync Unreal with other creative apps — to build more expansive virtual worlds in complete ray-traced fidelity.

Plus, the NVIDIA Studio #WinterArtChallenge is delivering un-brr-lievable entries. Check out some of the featured artwork and artists at the end of this post.

(Inter)Stellar Video-Editing Tips and Tricks

A self-taught filmmaker, Lippman grew up a big fan of science fiction and horror. Most of the short sketches on his YouTube channel are inspired by movies and shows in those genres, he said, but his main inspiration for content comes from his favorite punk bands.

“I always admired that they didn’t rely on big record deals to get their music out there,” he said. “That whole scene was centered around this culture of DIY, and I’ve tried to bring that same mentality into the world of filmmaking, figuring out how to create what you want with the tools that you have.”

That independent spirit drives Make Your VFX Shots Look REAL — a sci-fi cinematic and tutorial that displays the wonder behind top-notch graphics and the know-how for making them your own.

Lippman uses Blackmagic Design’s DaVinci Resolve software for video editing, color correction, visual effects, motion graphics and audio post-production. His new GeForce RTX 4080 GPU enables him to edit footage while applying effects freely and easily.

Lippman took advantage of the new AV1 encoder, which is available in DaVinci Resolve, in OBS Studio and, via the Voukoder plug-in, in Adobe Premiere Pro. It encodes 40% faster and unlocks higher resolutions and crisper image quality.

“The GeForce RTX 4080 GPU is a no-brainer for anyone who does graphics-intensive work, video production or high-end streaming,” Lippman said.

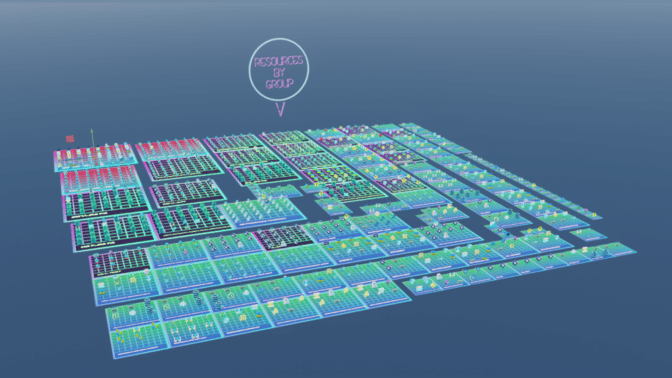

The majority of Make Your VFX Shots Look REAL was built in DaVinci Resolve’s Fusion page, which features a node-based workflow with hundreds of 2D and 3D tools. Lippman uploaded footage from his Blackmagic Pocket Cinema Camera in 6K resolution, then proceeded to composite the VFX.

The artist started by refining motion blur, a key element of any movement in camera footage shot at 24 frames per second or higher. Animated elements like the blue fireball must include motion blur, or they’ll look out of place. Applying a transform node with motion blur, which runs faster on a GeForce RTX GPU, created the necessary realism, Lippman said.
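To see why an animated element without motion blur looks out of place, it helps to picture what the camera does: it records everything that happens while the shutter is open. The sketch below is not Fusion's transform node. It's a minimal, hypothetical illustration in plain Python that averages several sub-frame renders of a moving dot on a single scanline. The function names, the `shutter` fraction and the sample count are all assumptions made for the example.

```python
def render_dot(width, position):
    """Render a one-pixel bright dot on a dark 1D scanline."""
    row = [0.0] * width
    index = int(round(position))
    if 0 <= index < width:
        row[index] = 1.0
    return row


def motion_blurred_frame(width, start, velocity, shutter=0.5, samples=8):
    """Average several sub-frame renders taken while the shutter is open.

    start    -- dot position (in pixels) at the beginning of the frame
    velocity -- movement in pixels per frame
    shutter  -- fraction of the frame the shutter stays open (assumed value)
    samples  -- number of sub-frame renders to average
    """
    accum = [0.0] * width
    for s in range(samples):
        # Time offset inside the frame for this sub-sample.
        t = shutter * s / samples
        sub_frame = render_dot(width, start + velocity * t)
        accum = [a + b for a, b in zip(accum, sub_frame)]
    # Dividing by the sample count keeps total brightness unchanged.
    return [a / samples for a in accum]


if __name__ == "__main__":
    sharp = motion_blurred_frame(16, start=2, velocity=0)
    blurred = motion_blurred_frame(16, start=2, velocity=8, samples=4)
    print(sharp)
    print(blurred)
```

A static dot stays a single bright pixel, while a fast-moving one is smeared into a dimmer streak along its path. That streak is what the eye expects to see on anything moving through live-action footage.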

Lippman then lit the scene and enhanced elements in the composition by adding light that was absent from the original footage. He created lighting and added hues by using a probe modifier on the popular DaVinci Resolve color corrector, a GPU-accelerated task.

The artist then matched movement, which is critical for adding 2D or 3D effects to footage. In this case, Lippman replaced the straightforward blue sky with a haunting, cloudy, gloomy gray. Within Fusion, Lippman selected the merge mode, connecting the sky with the composition. He then right-clicked the center of the video and used the Merge:1 Center, Modify With and Tracker position features, with minor adjustments, to complete the motion tracking.

Lippman rounded out his creative workflow with color matching. He said it’s critical to have the proper mental approach alongside realistic expectations when compositing VFX.

“Our goal is not to make our VFX shots look real, it’s to make them look like they were shot on the same camera, on the same lens, at the same time of the original footage,” said Lippman. “A big part of it is matching colors, contrast and overall brightness with all of the scene elements.”

Lippman color matched the sky, clouds and UFO by adding a color-corrector node to a single cloud node, tweaking the hue and likeness to match the rest of the sky. Edits were then applied to the remaining clouds. Lippman also applied a color-correction node to the UFO, tying up the scene with matching colors.
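For readers curious what matching "colors, contrast and overall brightness" can look like numerically, here is a minimal sketch. It is not how Fusion's color-corrector node works internally. It's a generic statistics-transfer approach, swapped in for illustration, that shifts each color channel of an added element so its average (brightness) and spread (contrast) line up with the background plate. The function names and the sample pixel values are hypothetical.

```python
from statistics import mean, pstdev


def match_channel(element, plate):
    """Match one channel's mean and spread to the plate's."""
    e_mean, p_mean = mean(element), mean(plate)
    e_std, p_std = pstdev(element), pstdev(plate)
    # Gain adjusts contrast; fall back to 1.0 for a flat element.
    gain = p_std / e_std if e_std > 0 else 1.0
    matched = [(v - e_mean) * gain + p_mean for v in element]
    # Clamp to the displayable 0..1 range.
    return [min(1.0, max(0.0, v)) for v in matched]


def match_colors(element_pixels, plate_pixels):
    """Match every RGB channel of the element to the plate.

    Both arguments are lists of (r, g, b) tuples with values in 0..1.
    """
    channels = []
    for c in range(3):
        element_channel = [p[c] for p in element_pixels]
        plate_channel = [p[c] for p in plate_pixels]
        channels.append(match_channel(element_channel, plate_channel))
    return list(zip(*channels))


if __name__ == "__main__":
    # Hypothetical values: a bright stock cloud and a gloomier sky plate.
    cloud = [(0.8, 0.8, 0.9), (0.6, 0.6, 0.7)]
    sky = [(0.3, 0.3, 0.35), (0.5, 0.5, 0.55)]
    print(match_colors(cloud, sky))
```

In practice an artist makes these adjustments by eye, as Lippman does, but the idea is the same: the new element should share the plate's overall brightness, contrast and hue before anything else about it will read as real.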

When it came time for final exports, the exclusive NVIDIA dual encoders found in GeForce RTX 40 Series GPUs cut Lippman’s export time in half. This can help freelancers like him meet tight deadlines. The dual encoders are supported in Adobe Premiere Pro (via the popular Voukoder plug-in), Jianying Pro (China’s top video-editing app) and DaVinci Resolve.

“The GeForce RTX 4080 is a powerhouse and definitely gives you the ability to do more with less,” he said. “It’s definitely faster than the dual RTX 2080 GPU setup I’d been using and twice as fast as the RTX 3080 Ti, while using less power and costing around the same. Plus, it unlocks the AV1 Codec in DaVinci Resolve and streaming in AV1.”

Check out his review.

As AI plays a greater role in creative workflows, video editors can explore DaVinci Resolve’s vast suite of RTX-accelerated, AI-powered features that are an incredible boon for efficiency.

These include Face Refinement, which detects facial features for fast touch-ups such as sharpening eyes and subtle relighting; Speed Warp, which quickly creates super-slow-motion videos; and Detect Scene Cuts, which uses DaVinci Resolve’s neural engine to predict video cuts without manual edits.

The Unreal Engine Connector Arrives

NVIDIA Omniverse Unreal Engine Connector 200.2 supports enhancements to non-destructive live sessions with Omni Live 2.0, allowing for more robust real-time collaboration on Universal Scene Description (USD) assets within Unreal Engine. Thumbnails from Nucleus are now supported in its content browser for Omniverse USD and open-source Material Definition Language (MDL), which creates a more intuitive user experience.

The Omniverse Unreal Engine Connector also supports updates to Unreal Engine 5.1, including:

- Lumen — Unreal Engine 5’s fully dynamic global illumination and reflections system that renders diffuse interreflection with infinite bounces and indirect specular reflections in large, detailed environments at massive scale.

- Nanite — the virtualized geometry system using internal mesh formats and rendering technology to render pixel-scale detail at high object counts.

- Virtual Shadow Maps — to deliver consistent, high-resolution shadowing that works with film-quality assets and large, dynamically lit open worlds.

Omniverse Unreal Engine Connector supports versions 4.27, 5.0 and 5.1 of Unreal Editor. View the complete release notes.

Weather It’s Cold or Not, the #WinterArtChallenge Carries On

Enter NVIDIA Studio’s #WinterArtChallenge, running through the end of the year, by sharing winter-themed art on Instagram, Twitter or Facebook for a chance to be featured on our social media channels.

@lowpolycurls’ 3D winter scene — with its unique, 2D painted-on style textures — gives us all the warm feels during a cold winter night.

Snow much fun!

𝑊𝑒𝑎𝑡ℎ𝑒𝑟 it’s cold or not, we’re enjoying this beautiful #WinterArtChallenge share from @lowpolycurls.

Share your winter-themed art with us for a chance to be featured on our social channels and in an upcoming Studio Standouts YouTube video!

pic.twitter.com/aWAPoczok8

— NVIDIA Studio (@NVIDIAStudio) November 28, 2022

Be sure to tag #WinterArtChallenge to join.

Get creativity-inspiring updates directly to your inbox by subscribing to the NVIDIA Studio newsletter.

The post Visual Effects Artist Jay Lippman Takes Viewers Behind the Camera This Week ‘In the NVIDIA Studio’ appeared first on NVIDIA Blog.
