Digital artists and creative professionals have plenty to be excited about at NVIDIA GTC.

Impressive NVIDIA Studio laptop offerings from ASUS and MSI launch with upgraded RTX GPUs, providing more options for professional content creators to elevate and expand creative possibilities.

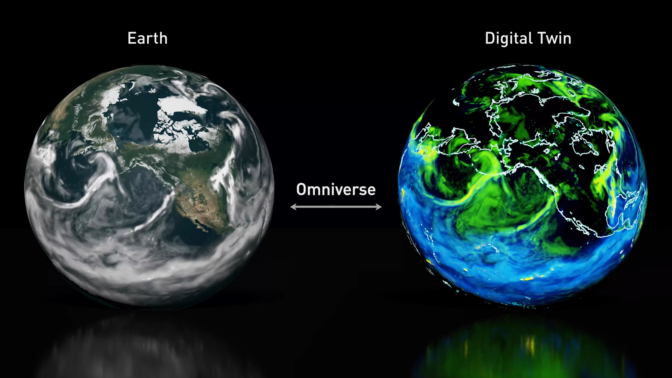

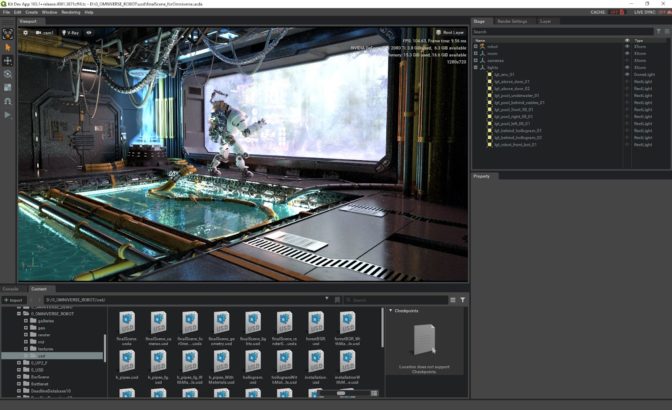

NVIDIA Omniverse gets a significant upgrade, including updates to the Omniverse Create, Machinima and Showroom apps, with a View release coming soon. A new Unreal Engine Omniverse Connector beta is out now, with our Adobe Substance 3D Painter Connector close behind.

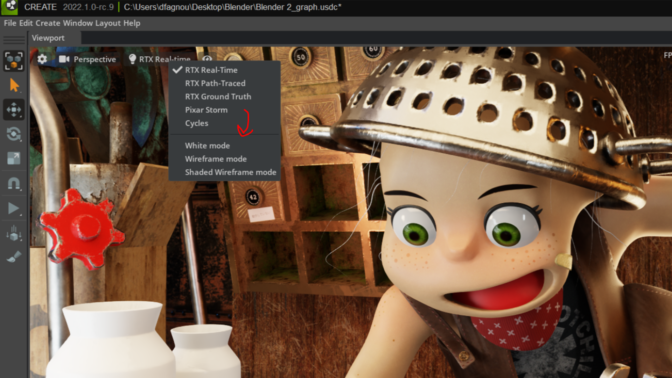

Omniverse artists can now use Pixar HDStorm, Chaos V-Ray, Maxon Redshift and OTOY Octane Hydra render delegates within the viewport of all Omniverse apps, bringing more freedom and choice to 3D creative workflows, with Blender Cycles coming soon. Read our Omniverse blog for more details.

NVIDIA Canvas, the beta app sensation using advanced AI to quickly turn simple brushstrokes into realistic landscape images, has received a stylish update.

The March Studio Driver, available for download today, optimizes the latest creative app updates, including Blender Cycles 3.1, all with the stability and reliability NVIDIA Studio delivers.

To celebrate, NVIDIA is kicking off the #MadeInMachinima contest. Artists can remix iconic characters from Squad, Mount & Blade II: Bannerlord and MechWarrior 5 into a cinematic short in Omniverse Machinima to win NVIDIA Studio laptops. The submission window opens on March 29 and runs through June 27. Visit the contest landing page for details.

New NVIDIA RTX Laptop GPUs Unlock Endless Creative Possibilities

Professionals on the go have powerful new laptop GPUs to choose from, with faster speeds and larger memory options: RTX A5500, RTX A4500, RTX A3000 12GB, RTX A2000 8GB and NVIDIA RTX A1000. These GPUs incorporate the latest RTX and Max-Q technology, are available in thin and light laptops, and deliver extraordinary performance.

Our new flagship laptop GPU, the NVIDIA RTX A5500 with 16GB of memory, is capable of handling the most challenging 3D and video workloads, with up to double the rendering performance of the previous-generation RTX 5000.

The most complex, advanced, creative workflows have met their match.

NVIDIA Studio Laptop Drop

Three extraordinary Studio laptops are available for purchase today.

The ASUS ProArt Studiobook 16 is capable of incredible performance and is configurable with a wide range of professional and consumer GPUs. It’s rich with creative features: a certified color-accurate 16-inch 120 Hz 3.2K OLED wide-view 16:10 display, a three-button touchpad for 3D designers, the ASUS Dial for video editing and an enlarged touchpad for stylus support.

MSI’s Creator Z16P and Z17 sport an elegant and minimalist design, featuring up to an NVIDIA RTX 3080 Ti or RTX A5500 GPU, and boast a factory-calibrated True Pixel display with QHD+ resolution and 100 percent DCI-P3 color.

NVIDIA Studio laptops are tested and validated for maximum performance and reliability. They feature the latest NVIDIA technologies that deliver real-time ray tracing, AI-enhanced features and time-saving rendering capabilities. These laptops have access to the exclusive Studio suite of software — including best-in-class Studio Drivers, NVIDIA Omniverse, Canvas, Broadcast and more.

In the weeks ahead, ASUS and GIGABYTE will make it even easier for new laptop owners to enjoy one of the Studio benefits. Upgraded livestreams, voice chats and video calls, all powered by AI, will be available immediately with the NVIDIA Broadcast app preinstalled in their ProArt and AERO product lines.

To Omniverse and Beyond

New Omniverse Connections are expanding the ecosystem and are now available in beta: Unreal Engine 5 Omniverse Connector and the Adobe Substance 3D Material Extension, with the Adobe Substance 3D Painter Omniverse Connector very close behind, allowing users to enjoy seamless, live-edit texture and material workflows.

Maxon’s Cinema 4D now supports USD and is compatible with OmniDrive, unlocking Omniverse workflows for visualization specialists.

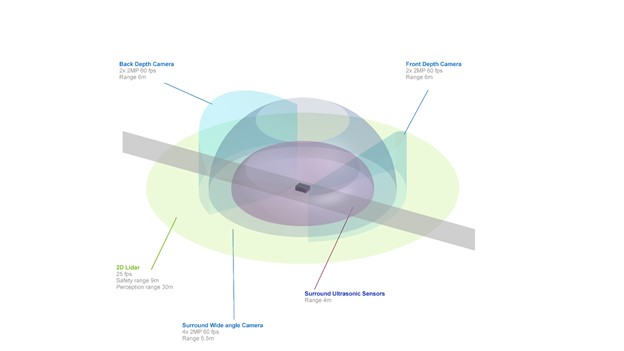

Artists can now use Pixar HDStorm, Chaos V-Ray, Maxon Redshift and OTOY Octane renderers within the viewport of all Omniverse apps, with Blender Cycles coming soon. Whether refining 3D scenes or exporting final projects, artists can switch between the lightning-fast Omniverse RTX Renderer and their preferred renderer for its particular features.

CAD designers can now directly import 26 popular CAD formats into Omniverse USD scenes.

The integration of NVIDIA Maxine’s body pose estimation feature in the Omniverse Machinima app gives users the ability to track and capture motion in real time using a single camera — without requiring a MoCap suit — with live conversion from a 2D camera capture to a 3D model.

Read more about Omniverse for content creators here.

And if you haven’t downloaded Omniverse, now’s the time.

Your Canvas, Never Out of Style

Styles in Canvas, the preset filters that modify the look and feel of the painting, can now each be adjusted across up to 10 different variations.

More style variations enhance artist creativity while providing additional options within the theme of their selected style.

Check out style variations and, if you haven’t already, download Canvas, which is free for RTX owners.

3D Creative App Updates Backed by March NVIDIA Studio Driver

In addition to supporting the latest updates for NVIDIA Omniverse and NVIDIA Canvas, the March Studio Driver also supports a host of other recent creative app and renderer updates.

The highly anticipated Blender 3.1 update adds USD preview surface material export support, making it easier to move assets between USD-supported apps, including Omniverse.

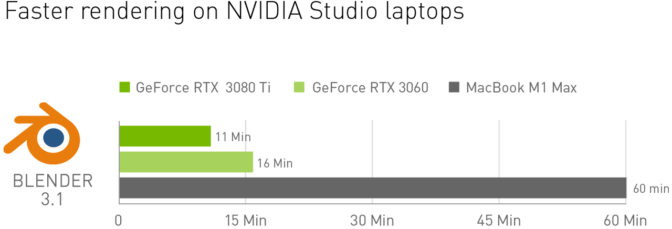

Blender artists equipped with NVIDIA RTX GPUs maintain performance advantages over Macs. Midrange GeForce RTX 3060 Studio laptops deliver 3.5x faster rendering than the fastest M1 Max MacBooks, per Blender’s benchmark testing.

Luxion KeyShot 11 brings several updates: GPU-accelerated 3D paint features, physical simulation using NVIDIA PhysX, and NVIDIA OptiX shader enhancements, speeding up animation workflows by up to 3x.

GPU Audio Inc., with an eye on the future, taps into parallel processing power for audio solutions, introducing an NVIDIA GPU-based VST filter to remove extreme frequencies and improve sound quality — an audio production game changer.

Download the March Studio Driver today.

On-Demand Sessions for Creators

Join the first GTC breakout session dedicated to the creative community.

“NVIDIA Studio and Omniverse for the Next Era of Creativity” will include artists and directors from NVIDIA’s creative team. Network with fellow 3D artists and get Omniverse feature support to enhance 3D workflows. Join this free session on Wednesday, March 23, from 7-8 a.m. Pacific.

It’s just one of many Omniverse sessions available to watch live or on demand, including the featured sessions below:

- Creating a Real-Time, Photoreal Ramen Shop in Omniverse with Gabriele Leone, senior art director at NVIDIA, and Andrew Averkin, senior environmental artist at NVIDIA.

- New Approach to Fashion Design With Adobe Substance 3D & Omniverse with Rob Bryant, creative 3D artist at NVIDIA.

- A Primer on Materials for NVIDIA Omniverse with Jan Jordan, senior software product manager for MDL at NVIDIA.

Themed GTC sessions and demos covering visual effects, virtual production and rendering, AI art galleries, and building and infrastructure design are also available to help realize your creative ambition.

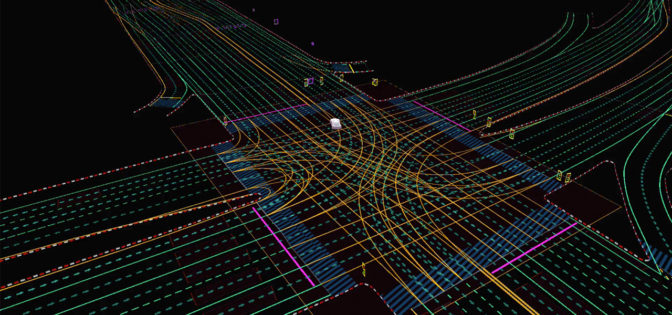

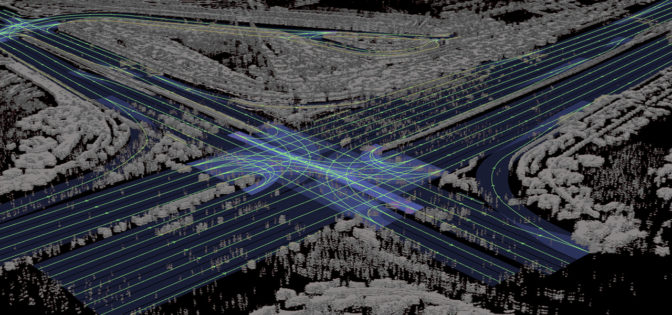

Also this week, game artists, producers, developers and designers are coming together for the annual Game Developers Conference, where NVIDIA launched Omniverse for Developers, providing a more collaborative environment for the creation of virtual worlds.

At GDC, NVIDIA sessions to assist content creators in the gaming industry will feature virtual worlds and AI, real-time ray tracing, and developer tools. Check out the complete list.

To boost your creativity throughout the year, follow NVIDIA Studio on Facebook, Twitter and Instagram. There you’ll find the latest information on creative app updates, new Studio apps, creator contests and more. Get updates directly to your inbox by subscribing to the Studio newsletter.

The post At GTC: NVIDIA RTX Professional Laptop GPUs Debut, New NVIDIA Studio Laptops, a Massive Omniverse Upgrade and NVIDIA Canvas Update appeared first on NVIDIA Blog.