Pulse oximetry is a noninvasive test that measures the oxygen saturation level in a patient’s blood, and it has become an important tool for monitoring many patients, including those with Covid-19. But new research links faulty readings from pulse oximeters with racial disparities in health outcomes, potentially leading to higher rates of death and complications, such as organ dysfunction, in patients with darker skin.

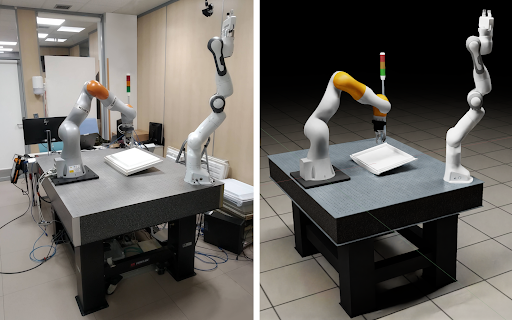

It is well known that pulse oximeters, the common devices clamped onto patients’ fingers, give less accurate readings of oxygen levels for non-white intensive care unit (ICU) patients. Now, a paper co-authored by MIT scientists reveals that inaccurate pulse oximeter readings can lead to critically ill patients of color receiving less supplemental oxygen during ICU stays.

The paper, “Assessment of Racial and Ethnic Differences in Oxygen Supplementation Among Patients in the Intensive Care Unit,” published in JAMA Internal Medicine, examined whether differences in supplemental oxygen administration among patients of different races and ethnicities were associated with discrepancies in pulse oximeter performance.

The findings showed that inaccurate readings in Asian, Black, and Hispanic patients resulted in their receiving less supplemental oxygen than white patients received. These results provide insight into how health technologies such as the pulse oximeter contribute to racial and ethnic disparities in care, according to the researchers.

The study’s senior author, Leo Anthony Celi, clinical research director and principal research scientist at the MIT Laboratory for Computational Physiology, and a principal research scientist at the MIT Institute for Medical Engineering and Science (IMES), says the challenge is that health care technology is routinely designed around the majority population.

“Medical devices are typically developed in rich countries with white, fit individuals as test subjects,” he explains. “Drugs are evaluated through clinical trials that disproportionately enroll white individuals. Genomics data overwhelmingly come from individuals of European descent.”

“It is therefore not surprising that we observe disparities in outcomes across demographics, with poorer outcomes among those who were not included in the design of health care,” Celi adds.

Pulse oximeters are widely used because they are convenient, but the most accurate way to measure arterial oxygen saturation (SaO2) is to analyze a sample of the patient’s arterial blood. Pulse oximetry (SpO2) readings that falsely appear normal can mask hidden hypoxemia, that is, low blood oxygen that the device fails to detect. Elevated bilirubin in the bloodstream and the use of vasopressors, a class of medications given in the ICU, can also throw off pulse oximetry readings.

The study included more than 3,000 patients (2,667 white, 207 Black, 112 Hispanic, and 83 Asian), drawn from the Medical Information Mart for Intensive Care version 4 (MIMIC-IV) dataset. This dataset comprises more than 50,000 patients admitted to the ICU at Beth Israel Deaconess Medical Center and includes both pulse oximeter readings and oxygen saturation levels measured in blood samples. MIMIC-IV also records rates of supplemental oxygen administration.

When the researchers compared SpO2 levels taken by pulse oximeter to oxygen saturation measured in blood samples, they found that Black, Hispanic, and Asian patients had higher SpO2 readings than white patients at the same blood oxygen saturation level. In other words, the devices overestimated these patients’ oxygen levels. Because arterial blood gas analysis can take anywhere from several minutes to an hour to return results, clinicians typically make decisions based on pulse oximetry readings, unaware that the devices perform suboptimally in certain patient demographics.

Eric Gottlieb, the study’s lead author, a nephrologist, a lecturer at MIT, and a Harvard Medical School fellow at Brigham and Women’s Hospital, called for more research to better understand “how pulse oximeter performance disparities lead to worse outcomes; possible differences in ventilation management, fluid resuscitation, triaging decisions, and other aspects of care should be explored. We then need to redesign these devices and properly evaluate them to ensure that they perform equally well for all patients.”

Celi emphasizes that understanding the biases within real-world data is crucial to developing better algorithms and artificial intelligence tools that assist clinicians with decision-making. “Before we invest more money on developing artificial intelligence for health care using electronic health records, we have to identify all the drivers of outcome disparities, including those that arise from the use of suboptimally designed technology,” he argues. “Otherwise, we risk perpetuating and magnifying health inequities with AI.”

Celi described the project and the research as a testament to the value of the data sharing at the core of the MIMIC project. “No one team has the expertise and perspective to understand all the biases that exist in real-world data to prevent AI from perpetuating health inequities,” he says. “The database we analyzed for this project has more than 30,000 credentialed users consisting of teams that include data scientists, clinicians, and social scientists.”

The many researchers working on this topic together form a community that shares code and queries and checks their quality, promotes reproducibility of results, and crowdsources the curation of the data, Celi says. “There is harm when health data is not shared,” he says. “Limiting data access means limiting the perspectives with which data is analyzed and interpreted. We’ve seen numerous examples of model mis-specifications and flawed assumptions leading to models that ultimately harm patients.”

Chaitra Mathur is a Principal Solutions Architect at AWS. She guides customers and partners in building highly scalable, reliable, secure, and cost-effective solutions on AWS. She is passionate about Machine Learning and helps customers translate their ML needs into solutions using AWS AI/ML services. She holds 5 certifications including the ML Specialty certification. In her spare time, she enjoys reading, yoga, and spending time with her daughters.
