Skycatch, a San Francisco-based startup, has been helping companies mine both data and minerals for nearly a decade.

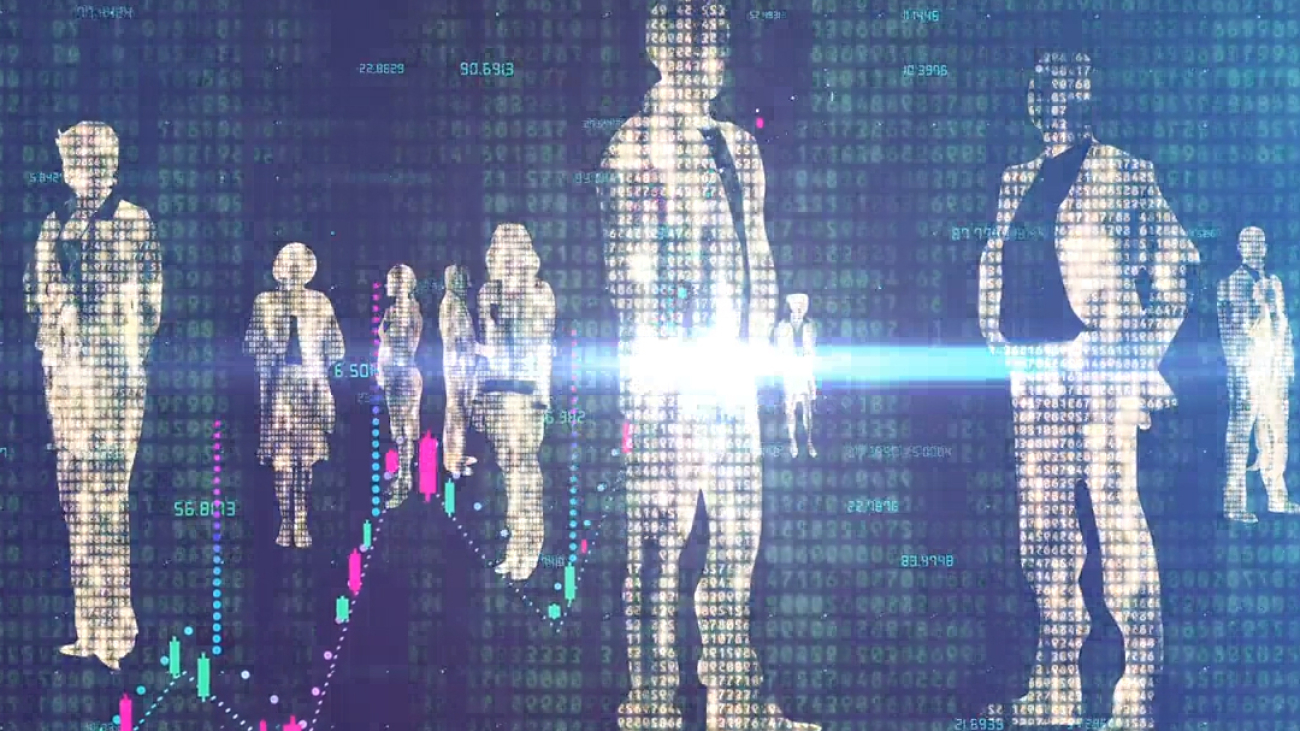

The software-maker is now digging into the creation of digital twins, with an initial focus on the mining and construction industries, using the NVIDIA Omniverse platform for connecting and building custom 3D pipelines.

SkyVerse, which is a part of Skycatch’s vision AI platform, is a combination of computer vision software and custom Omniverse extensions that enables users to enrich and animate virtual worlds of mines and other sites with near-real-time geospatial data.

“With Omniverse, we can turn massive amounts of non-visual data into dynamic visual information that’s easy to contextualize and consume,” said Christian Sanz, founder and CEO of Skycatch. “We can truly recreate the physical world.”

SkyVerse can help industrial sites simulate variables such as weather and broken machines up to five years into the future, while learning from events up to five years in the past, Sanz said.

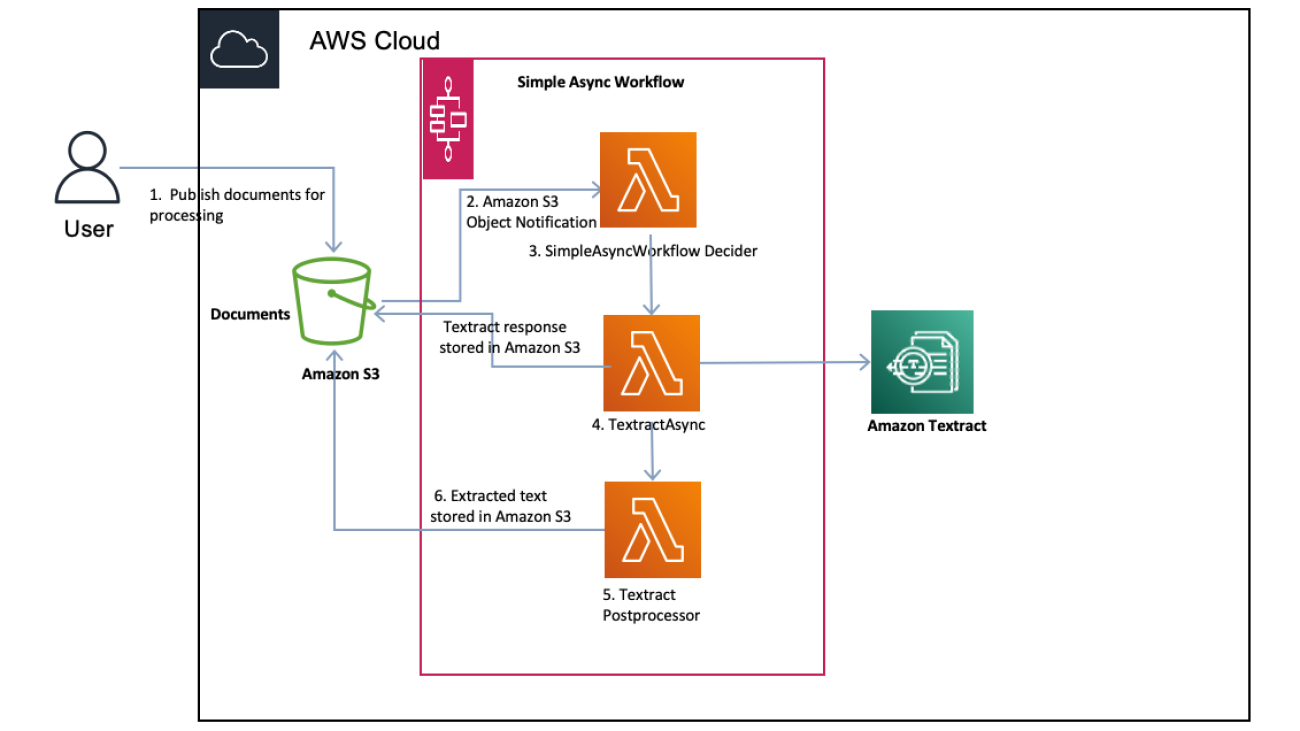

The platform automates the entire visualization pipeline for mining and construction environments.

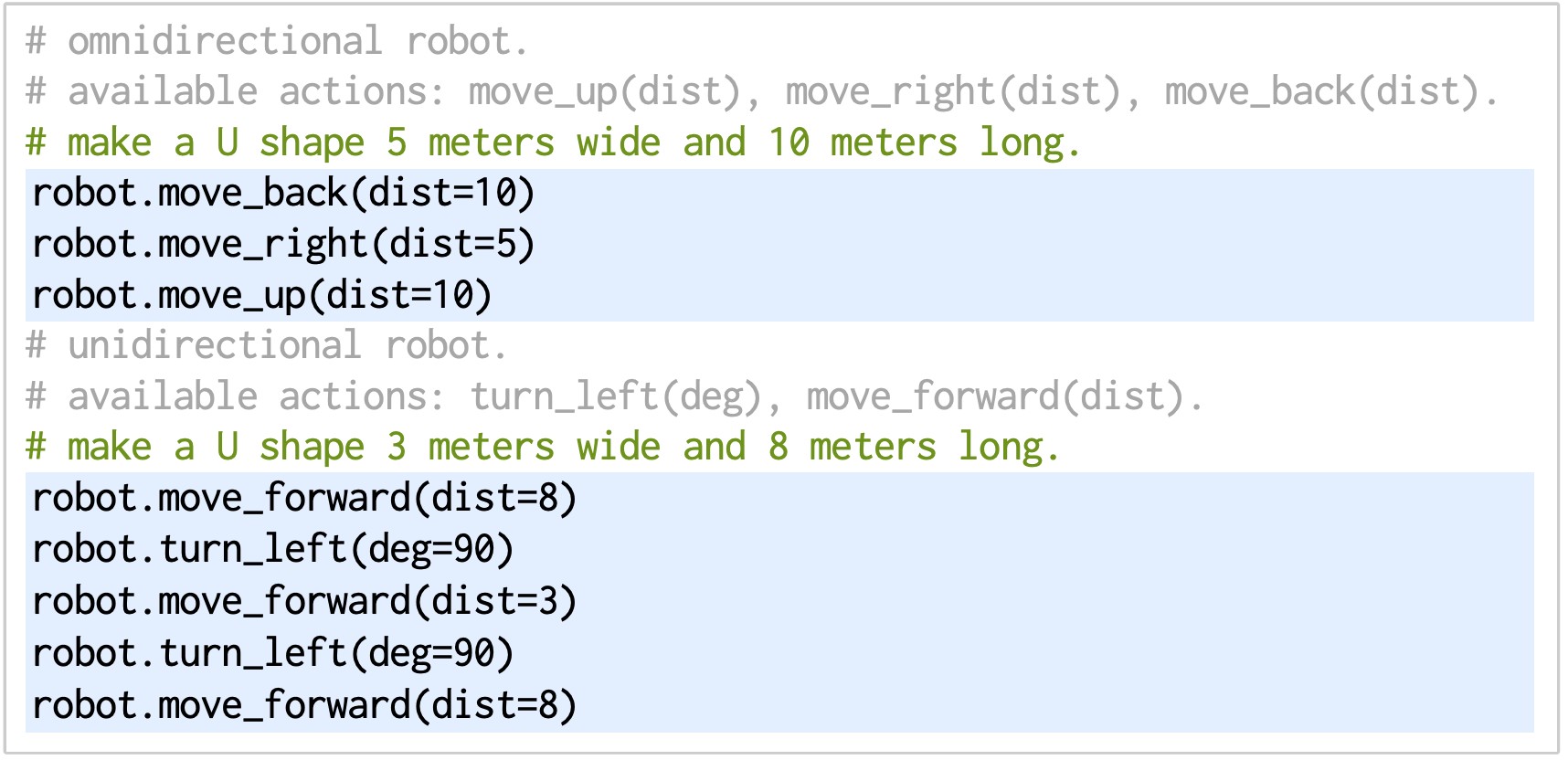

First, it processes data from drones, lidar and other sensors across the environment, whether at the edge using the NVIDIA Jetson platform or in the cloud.

It then creates 3D meshes from 2D images, using neural networks built from NVIDIA’s pretrained models to remove unneeded objects like dump trucks and other equipment from the visualizations.

Next, SkyVerse stitches these meshes into a single 3D model that’s converted to the Universal Scene Description (USD) framework. The master model is then brought into Omniverse Enterprise for the creation of a digital twin that’s live-synced with real-world telemetry data.
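As a rough illustration of the stitching and USD conversion step, the sketch below merges triangle-mesh tiles into one mesh and writes it out as a minimal text-format USD (`.usda`) layer. It is not Skycatch's implementation: `MeshTile`, `stitch` and `to_usda` are hypothetical names, the tiles are assumed to already share one coordinate system, and vertices duplicated along tile seams are not welded together.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]
Triangle = tuple[int, int, int]


@dataclass
class MeshTile:
    """Hypothetical container for one reconstructed mesh tile."""
    points: list[Point]
    triangles: list[Triangle]  # indices into this tile's own points list


def stitch(tiles: list[MeshTile]) -> MeshTile:
    """Concatenate tiles, shifting each tile's indices past the points already added."""
    points: list[Point] = []
    triangles: list[Triangle] = []
    for tile in tiles:
        offset = len(points)
        points.extend(tile.points)
        triangles.extend(
            (a + offset, b + offset, c + offset) for a, b, c in tile.triangles
        )
    return MeshTile(points, triangles)


def to_usda(mesh: MeshTile, name: str = "Terrain") -> str:
    """Serialize the mesh as a minimal USD text layer containing a single Mesh prim."""
    counts = ", ".join("3" for _ in mesh.triangles)
    indices = ", ".join(str(i) for tri in mesh.triangles for i in tri)
    pts = ", ".join(f"({x}, {y}, {z})" for x, y, z in mesh.points)
    return (
        "#usda 1.0\n"
        f'def Mesh "{name}"\n'
        "{\n"
        f"    int[] faceVertexCounts = [{counts}]\n"
        f"    int[] faceVertexIndices = [{indices}]\n"
        f"    point3f[] points = [{pts}]\n"
        "}\n"
    )
```

A production pipeline would more likely write the layer through the USD Python bindings and attach materials and geospatial metadata. The plain-text form is used here only to keep the example dependency-free.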

“The simulation of machines in the environment, different weather conditions, traffic jams — no other platform has enabled this, but all of it is possible in Omniverse with hyperreal physics and object mass, which is really groundbreaking,” Sanz said.

Skycatch is a Premier partner in NVIDIA Inception, a free, global program that nurtures startups revolutionizing industries with cutting-edge technologies. Premier partners receive additional go-to-market support, exposure to venture capital firms and technical expertise to help them scale faster.

Processing and Visualizing Data

Several top mining companies have deployed Skycatch’s fully automated technologies to gather insights from aerial data across tens of thousands of sites.

The Skycatch team first determines optimal positioning of the data-collection sensors across mine vehicles using the NVIDIA Isaac Sim platform, a robotics simulation and synthetic data generation (SDG) tool for developing, testing and training AI-based robots.

“Isaac Sim has saved us a year’s worth of testing time — going into the field, placing a sensor, testing how it functions and repeating the process,” Sanz said.

The team also plans to integrate the Omniverse Replicator software development kit into SkyVerse to generate physically accurate 3D synthetic data and build SDG tools to accelerate the training of perception networks beyond the robotics domain.

Once data from a site is collected, SkyVerse uses edge devices powered by the NVIDIA Jetson Nano and Jetson AGX Xavier modules to automatically process up to terabytes of it per day and turn it into kilobyte-size analytics that can be easily transferred to frontline users.

This data processing was sped up 3x by the NVIDIA CUDA parallel computing platform, according to Sanz. The team is also looking to deploy the new Jetson Orin modules for next-level performance.

“It’s not humanly possible to go through tens of thousands of images a day and extract critical analytics from them,” Sanz said. “So we’re helping to expand human eyesight with neural networks.”

Using pretrained models from the NVIDIA TAO Toolkit, Skycatch also built neural networks that can remove extraneous objects and vehicles from the visualizations, and texturize over these spots in the 3D mesh.

The digital terrain model, which has sub-five-centimeter precision, can then be brought into Omniverse for the creation of a digital twin using the easily extensible USD framework, custom SkyVerse Omniverse extensions and NVIDIA RTX GPUs.

“It took just around three months to build the Omniverse extensions, despite the complexity of our extensions’ capabilities, thanks to access to technical experts through NVIDIA Inception,” Sanz said.

Skycatch is working with one of Canada’s leading mining companies, Teck Resources, to implement the use of Omniverse-based digital twins for its project sites.

“Teck Resources has been using Skycatch’s compute engine across all of our mine sites globally and is now expanding visualization and simulation capabilities with SkyVerse and our own digital twin strategy,” said Preston Miller, lead of technology and innovation at Teck Resources. “Delivering near-real-time visual data will allow Teck teams to quickly contextualize mine sites and make faster operational decisions on mission-critical, time-sensitive projects.”

The Omniverse extensions built by Skycatch will be available soon — learn more.

Safety and Sustainability

AI-powered data analysis and digital twins can make operational processes for mining and construction companies safer, more sustainable and more efficient.

For example, according to Sanz, mining companies need the ability to quickly locate the toe and crest (or bottom and top) of “benches,” narrow strips of land beside an open-pit mine. When a machine is automated to go in and out of a mine, it must be programmed to stay 10 meters away from the crest at all times to avoid the risk of sliding, Sanz said.
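The 10-meter rule can be pictured as a distance check between a machine's position and the crest line. The sketch below is only an illustration under simplifying assumptions: the crest is a 2D polyline of at least two points in a local metric coordinate system, and the function names and the `standoff_m` parameter are hypothetical rather than part of SkyVerse.

```python
import math

Point2D = tuple[float, float]


def distance_to_segment(p: Point2D, a: Point2D, b: Point2D) -> float:
    """Shortest distance in meters from point p to the segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: a and b are the same point.
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment's line and clamp the projection to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_crest(p: Point2D, crest: list[Point2D]) -> float:
    """Shortest distance from p to a crest polyline with at least two points."""
    return min(distance_to_segment(p, a, b) for a, b in zip(crest, crest[1:]))


def violates_standoff(p: Point2D, crest: list[Point2D], standoff_m: float = 10.0) -> bool:
    """True if p is closer to the crest than the required stand-off distance."""
    return distance_to_crest(p, crest) < standoff_m
```

For a straight crest running from `(0, 0)` to `(100, 0)`, a machine at `(50, 12)` is 12 meters away and passes the check, while one at `(50, 8)` is flagged. A real deployment would also need to account for elevation, positioning error and the machine's footprint.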

Previously, surveying and analyzing landforms to determine precise toes and crests typically took up to five days. With the help of NVIDIA AI, SkyVerse can now generate this information within minutes, Sanz said.

In addition, SkyVerse eliminates 10,000 open-pit interactions for customers per year, per site, Sanz said. These are situations in which humans and vehicles can intersect within a mine, posing a safety threat.

“At its core, Skycatch’s goal is to provide context and full awareness for what’s going on at a mining or construction site in near-real time — and better environmental context leads to enhanced safety for workers,” Sanz said.

Skycatch aims to boost sustainability efforts for the mining industry, too.

“In addition to mining companies, governmental organizations want visibility into how mines are operating — whether their surrounding environments are properly taken care of — and our platform offers these insights,” Sanz said.

Plus, minerals like cobalt, nickel and lithium are required for electrification and the energy transition. These all come from mine sites, Sanz said, which can become safer and more efficient with the help of SkyVerse’s digital twins and vision AI.

Dive deeper into technology for a sustainable future with Skycatch and other Inception partners in the on-demand webinar, Powering Energy Startup Success With NVIDIA Inception.

Creators and developers across the world can download NVIDIA Omniverse for free, and enterprise teams can use the platform for their 3D projects.

Learn more about and apply to join NVIDIA Inception.

The post Unearthing Data: Vision AI Startup Digs Into Digital Twins for Mining and Construction appeared first on NVIDIA Blog.
