AWS re:Invent season is upon us again! Just a few days to go until re:Invent takes place for the 11th year in Las Vegas, Nevada. The Artificial Intelligence and Machine Learning team at AWS has been working hard to offer amazing content, an outstanding AWS DeepRacer experience, and much more. In this post, we give you a sense of how the AI/ML track is organized and highlight a few sessions we think you’ll like.

The technical sessions in the AI/ML track are divided into four areas. First, there are many common use cases that you can address with a combination of AI/ML and other AWS services, such as Intelligent Document Processing, Contact Center Intelligence, and Personalization, among others. Second, ML practitioners of all levels will find compelling content on the entire ML lifecycle, such as data preparation, training, inference, MLOps, AutoML, and no-code ML. Third, we have a renewed emphasis on responsible AI this year. Customers have been looking for more guidance and new tools in this space. And last but never least, we have exciting workshops and activities with AWS DeepRacer, which have become a signature event!

Visit the AWS Village at the Venetian Expo Hall to meet our AI/ML specialists at the AI/ML booth and learn more about AI/ML services and solutions. You can also chat with our AWS Manufacturing experts at the AWS Industries Networking Lounge, in the Caesars Forum Main Hall.

If you’re new to re:Invent, you can attend sessions of the following types:

- Keynotes – Join in person or virtually, and learn about all the exciting announcements.

- Leadership sessions – Learn from AWS leaders about key topics in cloud computing.

- Breakout sessions – These 60-minute sessions are expected to have broad appeal, are delivered to larger audiences, and will be recorded. If you miss them, you can watch them on demand after re:Invent.

- Chalk talks – 60 minutes of content delivered to smaller audiences with an interactive whiteboarding session. Chalk talks are where discussions happen, and these offer you the greatest opportunity to ask questions or share your opinion.

- Workshops – Hands-on learning opportunities where, in the course of 2 hours, you’ll be able to build a solution to a problem and understand the inner workings of the resulting infrastructure and cross-service interaction. Bring your laptop and be ready to learn!

- Builders’ sessions – These highly interactive 60-minute mini-workshops are conducted in small groups of fewer than 10 attendees. Some of these appeal to beginners, and others are on specialized topics.

If you have reserved your seat at any of the sessions, great! If not, we always set aside some spots for walk-ins, so make a plan and come to the room early.

To help you plan your agenda for this year’s re:Invent, here are some highlights of the AI/ML track. So buckle up, and start registering for your favorite sessions.

Visit the session catalog to learn about all AI/ML sessions.

AWS Data and Machine Learning Keynote

Swami Sivasubramanian, Vice President of AWS Data and Machine Learning – Keynote

Wednesday November 30 | 8:30 AM – 10:30 AM PST | The Venetian

Join Swami Sivasubramanian, Vice President of AWS Data and Machine Learning, on Wednesday, in person or via livestream, as he reveals the latest AWS innovations that can help you transform your company’s data into meaningful insights and actions for your business.

AI/ML Leadership session

AIM217-L (LVL 200) Innovate with AI/ML to transform your business

Wednesday November 30 | 1:00 PM – 2:00 PM PST

Join Dr. Bratin Saha, VP of AI/ML at AWS, for the AI/ML thought-leadership session. Bratin will share how to use AI/ML to innovate in your business in order to disrupt the status quo. You’ll learn how customers Baxter, BMW, and Alexa have used AWS AI/ML services to fuel business profitability and growth, the latest AI/ML trends, and the details of newly launched AWS capabilities.

Reserve your seat now!

Breakout sessions

AIM314 (LVL 300) Accelerate your ML journey with Amazon SageMaker low-code tools

Monday November 28 | 10:00 AM – 11:00 AM PST

In this session, learn how low-code tools, including Amazon SageMaker Data Wrangler, Amazon SageMaker Autopilot, and Amazon SageMaker JumpStart, make it easier to experiment faster and bring highly accurate models to production more quickly and efficiently.

Reserve your seat now!

AIM204 (LVL 200) Automate insurance document processing with AI

Monday November 28 | 4:00 PM – 5:00 PM PST

The rapid rate of data generation means that organizations that aren’t investing in document automation risk getting stuck with legacy processes that are slow, error-prone, and difficult to scale. In this session, learn how organizations can take advantage of the latest innovations in AI and ML from AWS to improve the efficiency of their document-intensive claims processing use case.

Reserve your seat now!

AIM207 (LVL 200) Make better decisions with no-code ML using SageMaker Canvas, feat. Samsung

Wednesday November 30 | 2:30 PM – 3:30 PM PST

Organizations everywhere use ML to accurately predict outcomes and make faster business decisions. In this session, learn how you can use Amazon SageMaker Canvas to access and combine data from a variety of sources, clean data, build ML models to generate predictions with a single click, and share models across your organization to improve productivity.

Reserve your seat now!

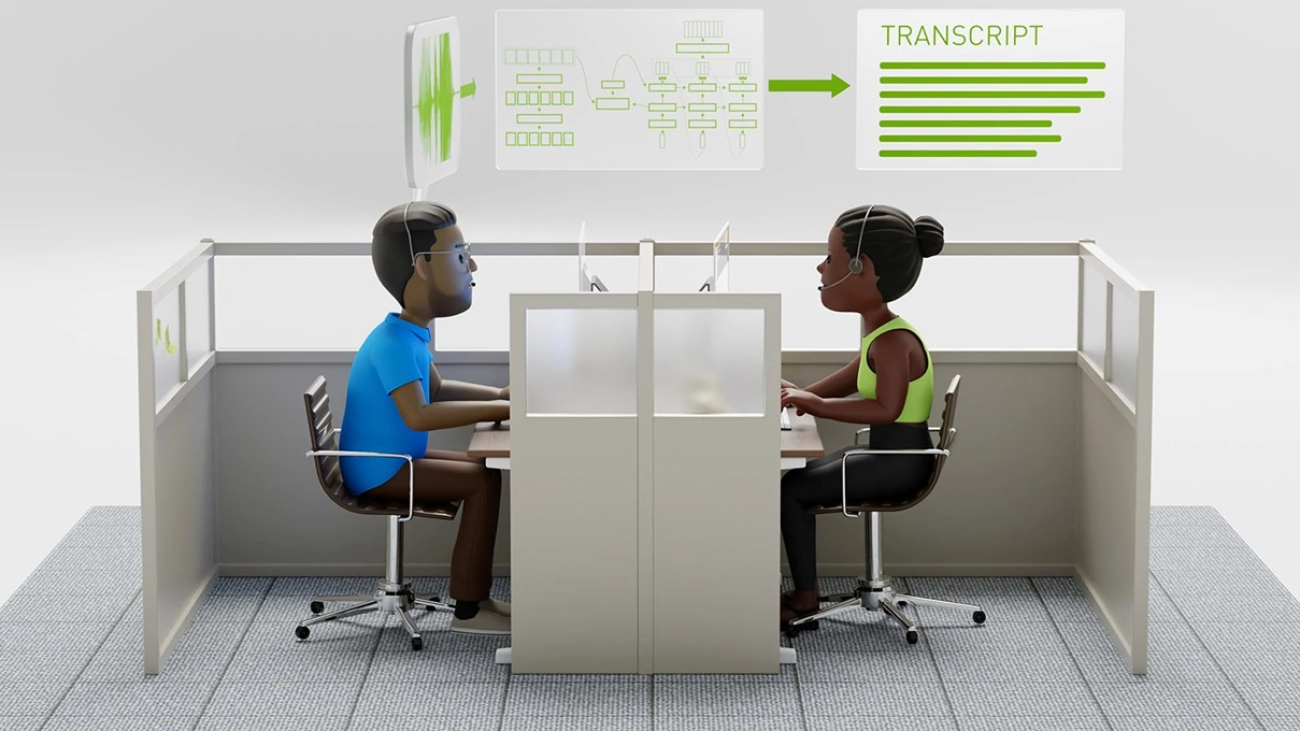

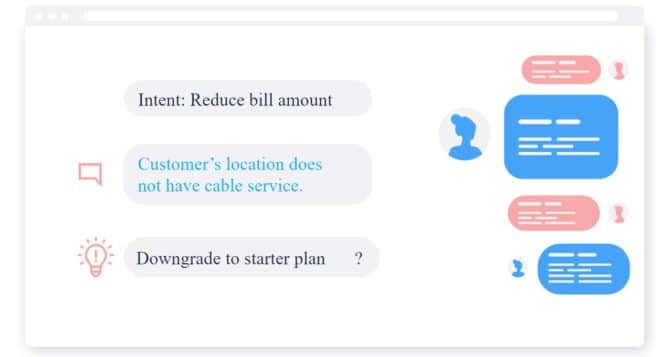

AIM307 (LVL 300) JPMorganChase real-time agent assist for contact center productivity

Wednesday November 30 | 11:30 AM – 12:30 PM PST

Resolving complex customer issues is often time-consuming and requires agents to quickly gather relevant information from knowledge bases to resolve queries accurately. Join this session to learn how JPMorgan Chase built an AWS Contact Center Intelligence (CCI) real-time agent assist solution to help 75 million customers and help 8,500 servicing agents generate next best actions in the shortest time—reducing agent frustration and churn.

Reserve your seat now!

AIM321 (LVL 300) Productionize ML workloads using Amazon SageMaker MLOps, feat. NatWest

Wednesday November 30 | 4:45 PM – 5:45 PM PST

Amazon SageMaker provides a breadth of MLOps tools to train, test, troubleshoot, deploy, and govern ML models at scale. In this session, explore SageMaker MLOps features, including SageMaker Pipelines, SageMaker Projects, SageMaker Experiments, SageMaker Model Registry, and SageMaker Model Monitor, and learn how to increase automation and improve the quality of your ML workflows.

Reserve your seat now!

AIM319 (LVL 300) Build, manage, and scale ML development with a web-based visual interface

Wednesday November 30 | 3:15 PM – 4:15 PM PST

Amazon SageMaker Studio is an integrated development environment (IDE) for data science and ML. In this session, explore how to use SageMaker Studio to prepare data and build, train, deploy, and manage ML models from a single, web-based visual interface.

Reserve your seat now!

Chalk talks

AIM341-R (LVL 300) Transforming responsible AI from theory into practice

Thursday December 1 | 4:15 PM – 5:15 PM PST

The practices of responsible AI can help reduce biased outcomes from models and improve their fairness, explainability, robustness, privacy, and transparency. Walk away from this chalk talk with best practices and hands-on support to guide you in applying responsible AI in your project.

Reserve your seat now!

*This chalk talk will be repeated Wednesday November 30 | 7:00 PM – 8:00 PM PST

AIM306-R (LVL 300) Automate content moderation and compliance with AI

Monday November 28 | 12:15 PM – 1:15 PM PST

In this chalk talk, learn how to efficiently moderate high volumes of user-generated content across media types with AI. Discover how to add humans in the moderation loop to verify low-confidence decisions and continuously improve ML models to keep online communities safe and inclusive and lower content moderation costs.

Reserve your seat now!

*This chalk talk will be repeated Wednesday November 30 | 9:15 AM – 10:15 AM PST

AIM407-R (LVL 400) Choosing the right ML instance for training and inference on AWS

Wednesday November 30 | 11:30 AM – 12:30 PM PST

This chalk talk guides you through how to choose the right compute instance type on AWS for your deep learning projects. Explore the available options, such as the most performant instance for training, the best instance for prototyping, and the most cost-effective instance for inference deployments.

Reserve your seat now!

*This chalk talk will be repeated Wednesday November 30 | 8:30 AM – 9:30 AM PST

AIM328-R (LVL 300) Explain your ML models with Amazon SageMaker Clarify

Tuesday November 29 | 2:00 PM – 3:00 PM PST

Amazon SageMaker Clarify helps organizations understand their model predictions by providing real-time explanations for models deployed on SageMaker endpoints. In this chalk talk, learn how to identify the importance of various features in overall model predictions and for individual inferences using Shapley values and detect any shifts in feature importance over time after a model is deployed to production.

Reserve your seat now!

*This chalk talk will be repeated Monday November 28 | 2:30 PM – 3:30 PM PST

Workshops

AIM342 (LVL 300) Advancing responsible AI: Bias assessment and transparency

Wednesday November 30 | 2:30 PM – 4:30 PM PST

Building and operating ML applications responsibly requires an active, consistent approach to prevent, assess, and mitigate bias. This workshop takes you through a computer vision case study in assessing unwanted bias—follow along during the workshop with a Jupyter notebook.

Reserve your seat now!

AIM402-R (LVL 400) Extract AI-driven customer insights using Post-Call Analytics

Monday November 28 | 4:00 PM – 6:00 PM PST

Companies are transforming existing contact centers by adding AI/ML to deliver actionable insights and improve automation with existing telephony systems. Join this workshop to learn how to use the AWS Contact Center Intelligence (CCI) Post-Call Analytics solution to derive AI-driven insights from virtually all customer conversations.

Reserve your seat now!

*This workshop will be repeated Wednesday November 30 | 9:15 AM – 11:15 AM PST

AIM212-R (LVL 200) Deep learning with Amazon SageMaker, AWS Trainium, and AWS Inferentia

Monday November 28 | 1:00 PM – 3:00 PM PST

Amazon EC2 Trn1 instances, powered by AWS Trainium, and Amazon EC2 Inf1 instances, powered by AWS Inferentia, deliver the best price performance for deep learning training and inference. In this workshop, walk through training a BERT model for natural language processing on Trn1 instances to save up to 50% in training costs over equivalent GPU-based EC2 instances.

Reserve your seat now!

*This workshop will be repeated Monday November 28 | 8:30 AM – 10:30 AM PST

AIM312-R (LVL 300) Build a custom recommendation engine in 2 hours with Amazon Personalize

Monday November 28 | 1:00 PM – 3:00 PM PST

In this workshop, learn how to build a customer-specific solution using your own data to deliver personalized experiences that can be integrated into your existing websites, applications, SMS, and email marketing systems using simple APIs.

Reserve your seat now!

*This workshop will be repeated Wednesday November 30 | 11:30 AM – 1:30 PM PST

Builders’ sessions

AIM325-R (LVL 300) Build applications faster with an ML-powered coding companion

Tuesday November 29 | 3:30 PM – 4:30 PM PST

Join this builders’ session to get hands-on experience with ML-powered developer tools from AWS. Learn how to accelerate application development with automatic code recommendations from Amazon CodeWhisperer and automate code reviews with Amazon CodeGuru.

Reserve your seat now!

*This session will be repeated Thursday December 1 | 12:30 PM – 1:30 PM PST

Make sure to check out the re:Invent content catalog or the AI/ML attendee guide for more AI/ML content at re:Invent.

AWS DeepRacer: Get hands-on with machine learning

Developers of all skill levels can get hands-on with ML at re:Invent by participating in AWS DeepRacer. Learn from AWS ML experts how to build your own ML model in one of 11 workshop sessions, featuring guest speakers from JPMorgan Chase and Intel. Compete by racing your own ML model on real championship tracks in both the MGM and the Sands Expo, or hop in the driver’s seat to experience ML fundamentals through the fun of gamified learning with AWS DeepRacer Arcades. Whether in the classroom, on the track, or behind the wheel, AWS DeepRacer is the fastest way to get rolling with ML.

Developers: start your engines! Starting Monday November 28, the top 50 racers from around the world compete in the AWS DeepRacer League Championships presented by Intel, hosted at the AWS DeepRacer Championship Stadium in the Sands Expo. Watch trackside or tune in live on twitch.tv/aws at 3:00 PM PST on Tuesday, November 29, to see the top eight racers battle it out in the semifinals. Cheer on the finalists as they go for their shot at $20,000 in cash prizes and the right to hoist the Championship Cup.

Race on any AWS DeepRacer track on Thursday, December 1, to compete in the 2023 re:Invent Open, where the fastest competitor of the day will claim an all-expenses-paid trip back to Vegas to compete in the 2023 AWS DeepRacer Championship Cup.

Attendees who participate in AWS DeepRacer Arcades or open track (non-competitive) racing will also have the chance to win one of six spots in the AWS DeepRacer Winner’s Circle Driving Experience Sweepstakes, where they will race real, full-size exotic cars on a closed track alongside the AWS DeepRacer 2022 Champions in Las Vegas.

Don’t forget to check out the AWS DeepRacer workshops and reserve your spot before they fill up. We can’t wait to see you in Las Vegas!

About the authors

Denis V. Batalov is a 17-year Amazon veteran with a PhD in Machine Learning. Denis worked on such exciting projects as Search Inside the Book, Amazon Mobile apps, and Kindle Direct Publishing. Since 2013, he has helped AWS customers adopt AI/ML technology as a Solutions Architect. Currently, Denis is a Worldwide Tech Leader for AI/ML, responsible for the functioning of AWS ML Specialist Solutions Architects globally. Denis is a frequent public speaker; you can follow him on Twitter @dbatalov.

Amelie Perkuhn is a Product Marketing Manager on the AI Services team at AWS. She has held various roles within AWS over the past 6 years, and in her current role, she is focused on driving adoption of AI Services including Amazon Kendra. In her spare time, Amelie enjoys the Pacific Northwest with her dog Moxie.
