In 2021, we launched AWS Support Proactive Services as part of the AWS Enterprise Support plan. Since its introduction, we’ve helped hundreds of customers optimize their workloads, set guardrails, and improve the visibility of their machine learning (ML) workloads’ cost and usage.

In this series of posts, we share lessons learned about optimizing costs in Amazon SageMaker. In this post, we focus on data preprocessing using Amazon SageMaker Processing and Amazon SageMaker Data Wrangler jobs.

Data preprocessing holds a pivotal role in a data-centric AI approach. However, preparing raw data for ML training and evaluation is often a tedious and demanding task in terms of compute resources, time, and human effort. Data commonly needs to be integrated from different sources, and preparation has to deal with missing or noisy values, outliers, and so on.

Furthermore, in addition to common extract, transform, and load (ETL) tasks, ML teams occasionally require more advanced capabilities like creating quick models to evaluate data and produce feature importance scores or post-training model evaluation as part of an MLOps pipeline.

SageMaker offers two features specifically designed to help with those issues: SageMaker Processing and Data Wrangler. SageMaker Processing enables you to easily run preprocessing, postprocessing, and model evaluation on a fully managed infrastructure. Data Wrangler reduces the time it takes to aggregate and prepare data by simplifying the process of data source integration and feature engineering using a single visual interface and a fully distributed data processing environment.

Both SageMaker features provide great flexibility with several options for I/O, storage, and computation. However, setting those options incorrectly may lead to unnecessary cost, especially when dealing with large datasets.

In this post, we analyze the pricing factors and provide cost optimization guidance for SageMaker Processing and Data Wrangler jobs.

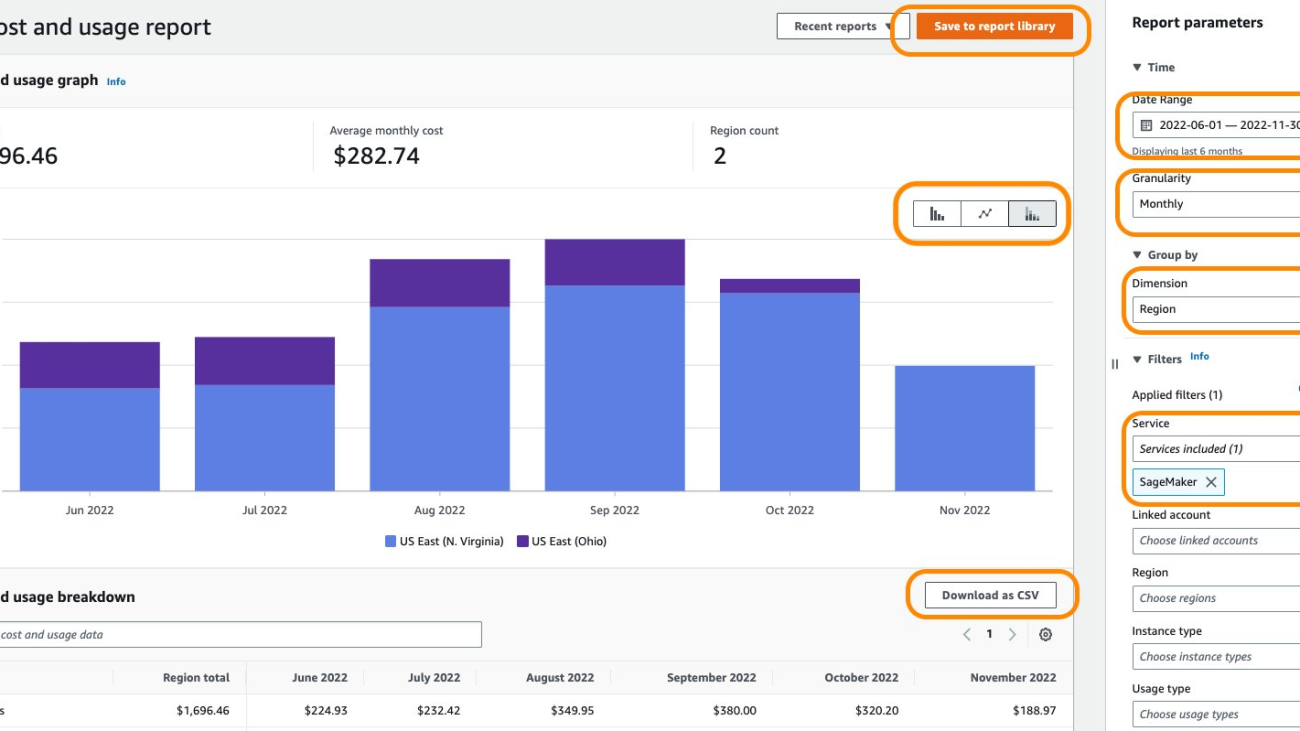

Analyze Amazon SageMaker spend and determine cost optimization opportunities based on usage:


SageMaker Processing

SageMaker Processing is a managed solution to run data processing and model evaluation workloads. You can use it in data processing steps such as feature engineering, data validation, model evaluation, and model interpretation in ML workflows. With SageMaker Processing, you can bring your own custom processing scripts and choose to build a custom container or use a SageMaker managed container with common frameworks like scikit-learn, Lime, Spark and more.

SageMaker Processing charges you for the instance type you choose, based on the duration of use and provisioned storage that is attached to that instance. In Part 1, we showed how to get started using AWS Cost Explorer to identify cost optimization opportunities in SageMaker.

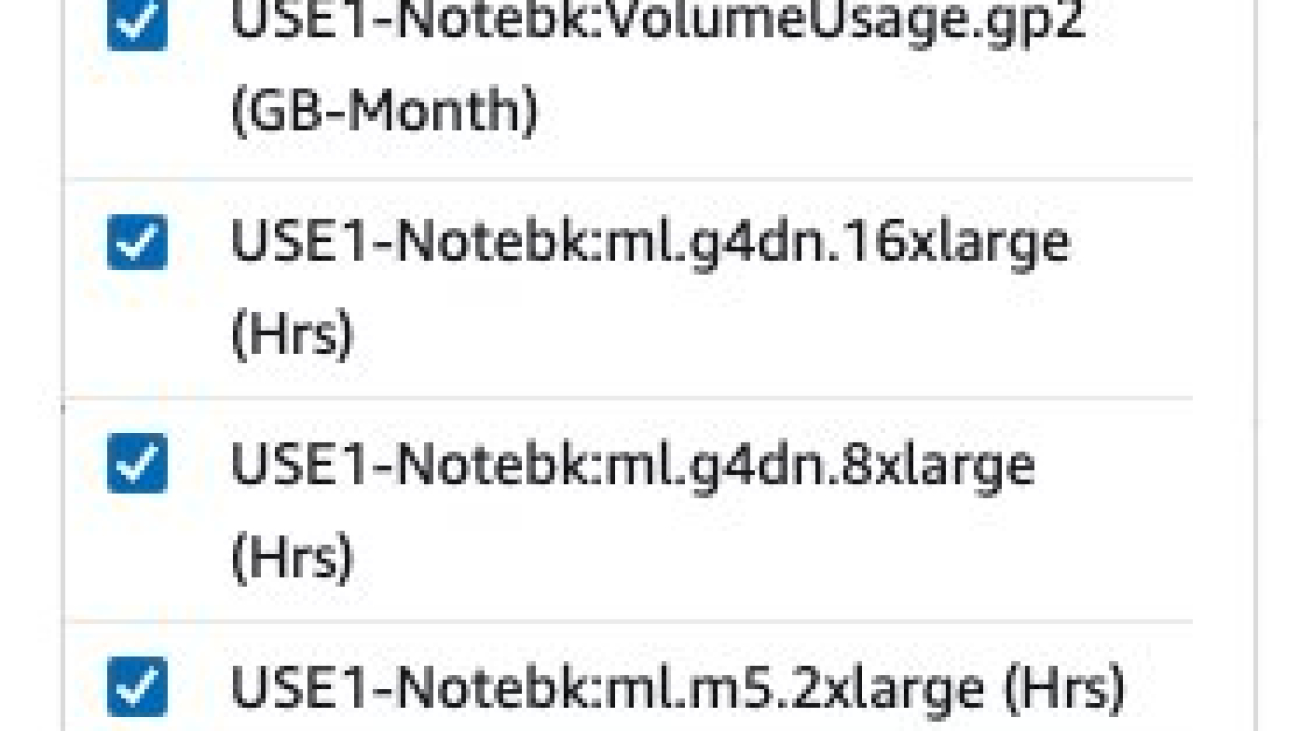

You can filter processing costs by applying a filter on the usage type. The names of these usage types are as follows:

- REGION-Processing:instanceType (for example, USE1-Processing:ml.m5.large)
- REGION-Processing:VolumeUsage.gp2 (for example, USE1-Processing:VolumeUsage.gp2)

To review your SageMaker Processing cost in Cost Explorer, start by filtering with SageMaker for Service, and for Usage type, you can select all processing instances running hours by entering the processing:ml prefix and selecting the list on the menu.
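The same breakdown can be approximated programmatically. The following is a minimal sketch, assuming the Cost Explorer `get_cost_and_usage` API is called with the request built here and that the service dimension value is `Amazon SageMaker`; the helper names `build_cost_request` and `processing_cost_by_usage_type` are hypothetical. Rather than relying on a server-side prefix filter, it groups by usage type and filters the names on the client side.

```python
from collections import defaultdict
from datetime import date


def build_cost_request(start: date, end: date) -> dict:
    """Keyword arguments for Cost Explorer's get_cost_and_usage call."""
    return {
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        # Assumption: this is the service name shown in your Cost Explorer.
        "Filter": {"Dimensions": {"Key": "SERVICE", "Values": ["Amazon SageMaker"]}},
        "GroupBy": [{"Type": "DIMENSION", "Key": "USAGE_TYPE"}],
    }


def processing_cost_by_usage_type(response: dict, marker: str = "-Processing:") -> dict:
    """Sum UnblendedCost per usage type whose name contains `marker`."""
    totals = defaultdict(float)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            usage_type = group["Keys"][0]
            if marker in usage_type:
                amount = group["Metrics"]["UnblendedCost"]["Amount"]
                totals[usage_type] += float(amount)
    return dict(totals)


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   ce = boto3.client("ce")
#   response = ce.get_cost_and_usage(**build_cost_request(date(2023, 1, 1), date(2023, 2, 1)))
#   print(processing_cost_by_usage_type(response))
```

The match is case-sensitive, so the default marker picks up the `Processing` usage types listed above and nothing else.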

Avoid cost in processing and pipeline development

Before right-sizing and optimizing a SageMaker Processing job’s run duration, we check for high-level metrics about historic job runs. You can choose from two methods to do this.

First, you can access the Processing page on the SageMaker console.

Alternatively, you can use the list_processing_jobs API.

A Processing job status can be InProgress, Completed, Failed, Stopping, or Stopped.
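To get a quick picture of how many historic runs ended in each status, the API results can be tallied. This is a minimal sketch: `count_jobs_by_status` is a hypothetical helper, and `sm_client` is assumed to be a boto3 SageMaker client (or any object exposing `list_processing_jobs` with the same response shape).

```python
from collections import Counter


def count_jobs_by_status(sm_client, **filters) -> Counter:
    """Tally processing jobs by ProcessingJobStatus, following NextToken pages."""
    counts = Counter()
    kwargs = dict(filters)
    while True:
        page = sm_client.list_processing_jobs(**kwargs)
        for summary in page.get("ProcessingJobSummaries", []):
            counts[summary["ProcessingJobStatus"]] += 1
        token = page.get("NextToken")
        if not token:
            return counts
        kwargs["NextToken"] = token


# Usage (requires boto3 and AWS credentials):
#   import boto3
#   print(count_jobs_by_status(boto3.client("sagemaker"), MaxResults=100))
```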

A high number of failed jobs is common when developing new MLOps pipelines. However, you should always test and make every effort to validate jobs before launching them on SageMaker because there are charges for resources used. For that purpose, you can use SageMaker Processing in local mode. Local mode is a SageMaker SDK feature that allows you to create estimators, processors, and pipelines, and deploy them to your local development environment. This is a great way to test your scripts before running them in a SageMaker managed environment. Local mode is supported by SageMaker managed containers and the ones you supply yourself. To learn more about how to use local mode with Amazon SageMaker Pipelines, refer to Local Mode.
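As an illustration, switching a processor between local mode and a managed instance can come down to a couple of constructor arguments. The sketch below assumes the SageMaker Python SDK and Docker are installed on the development machine; the helper names, the default instance type, and the scikit-learn framework version are placeholders, not recommendations.

```python
def processor_settings(local: bool, instance_type: str = "ml.m5.xlarge") -> dict:
    """Return the constructor arguments that differ between local and managed runs."""
    return {
        "instance_type": "local" if local else instance_type,
        "instance_count": 1,
    }


def build_sklearn_processor(role_arn: str, local: bool = True):
    """Create an SKLearnProcessor that runs locally or on SageMaker."""
    # Imported lazily so processor_settings stays usable without the SDK installed.
    from sagemaker.local import LocalSession
    from sagemaker.sklearn.processing import SKLearnProcessor

    session = None
    if local:
        session = LocalSession()
        session.config = {"local": {"local_code": True}}
    return SKLearnProcessor(
        framework_version="1.2-1",  # placeholder version
        role=role_arn,
        sagemaker_session=session,
        **processor_settings(local),
    )


# Usage (hypothetical role ARN and script name):
#   processor = build_sklearn_processor("arn:aws:iam::111122223333:role/MyRole", local=True)
#   processor.run(code="preprocess.py")
```

Once the script runs cleanly in local mode, calling the same helper with `local=False` targets a managed instance without changing the processing code.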

Optimize I/O-related cost

SageMaker Processing jobs offer access to three data sources as part of the managed processing input: Amazon Simple Storage Service (Amazon S3), Amazon Athena, and Amazon Redshift. For more information, refer to ProcessingS3Input, AthenaDatasetDefinition, and RedshiftDatasetDefinition, respectively.

Before looking into optimization, it’s important to note that although SageMaker Processing jobs support these data sources, they are not mandatory. In your processing code, you can implement any method for downloading and accessing data from any source (provided that the processing instance can access it).

To gain better insights into processing performance and detecting optimization opportunities, we recommend following logging best practices in your processing script. SageMaker publishes your processing logs to Amazon CloudWatch.
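One way to follow this practice is to bracket each stage of the script with start and end markers, so that the time spent in your own code can be separated from the time spent by the managed parts of the job. The following sketch is only an illustration; `timed_stage` and `configure_logging` are hypothetical helpers, and the stage name is arbitrary.

```python
import logging
import sys
import time
from contextlib import contextmanager

logger = logging.getLogger("processing")


def configure_logging() -> None:
    """Send log records to stdout so they end up in the job's log stream."""
    logging.basicConfig(
        stream=sys.stdout,
        level=logging.INFO,
        format="%(asctime)s %(levelname)s %(message)s",
    )


@contextmanager
def timed_stage(name: str):
    """Log start and end markers, plus elapsed seconds, around a stage."""
    start = time.monotonic()
    logger.info("Start %s", name)
    try:
        yield
    finally:
        logger.info("End %s (%.1f s)", name, time.monotonic() - start)


# Usage inside a processing script:
#   configure_logging()
#   with timed_stage("custom script"):
#       run_feature_engineering()  # hypothetical function
```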

In the following example job log, we see that the script processing took 15 minutes (between Start custom script and End custom script).

However, on the SageMaker console, we see that the job took 4 additional minutes (roughly a fifth of the job’s total runtime).

This is because, in addition to the time our processing script took, SageMaker-managed data downloading and uploading also took time (4 minutes). If this proves to be a big part of the cost, consider alternate ways to speed up downloading time, such as using the Boto3 API with multiprocessing to download files concurrently, or using third-party libraries such as WebDataset or s5cmd for faster download from Amazon S3. For more information, refer to Parallelizing S3 Workloads with s5cmd. Note that such methods might introduce charges in Amazon S3 due to data transfer.
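A concurrent download can be sketched as follows. Note that this version uses a thread pool rather than multiprocessing, because the work is I/O bound; `download_keys` is a hypothetical helper, and `s3_client` is assumed to be a boto3 S3 client (its `download_file` method is the only call used).

```python
import os
from concurrent.futures import ThreadPoolExecutor


def download_keys(s3_client, bucket: str, keys: list, dest_dir: str,
                  max_workers: int = 16) -> list:
    """Download the given S3 keys concurrently and return the local paths."""

    def _fetch(key: str) -> str:
        # Mirror the key's folder structure under dest_dir.
        target = os.path.join(dest_dir, *key.split("/"))
        os.makedirs(os.path.dirname(target), exist_ok=True)
        s3_client.download_file(bucket, key, target)
        return target

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() keeps the results in the same order as `keys`.
        return list(pool.map(_fetch, keys))


# Usage (requires boto3, AWS credentials, and a hypothetical bucket name):
#   import boto3
#   paths = download_keys(boto3.client("s3"), "my-bucket",
#                         ["raw/part-0.csv", "raw/part-1.csv"],
#                         "/opt/ml/processing/input")
```

The right number of workers depends on object sizes and the instance's network bandwidth, so it is worth measuring a few values instead of assuming the default is best.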

Processing jobs also support Pipe mode. With this method, SageMaker streams input data from the source directly to your processing container into named pipes without using the ML storage volume, thereby eliminating the data download time and requiring a smaller disk volume. However, this requires a more complicated programming model than simply reading from files on a disk.

As mentioned earlier, SageMaker Processing also supports Athena and Amazon Redshift as data sources. When setting up a Processing job with these sources, SageMaker automatically copies the data to Amazon S3, and the processing instance fetches the data from the Amazon S3 location. However, when the job is finished, there is no managed cleanup process and the data copied will still remain in Amazon S3 and might incur unwanted storage charges. Therefore, when using Athena and Amazon Redshift data sources, make sure to implement a cleanup procedure, such as a Lambda function that runs on a schedule or in a Lambda Step as part of a SageMaker pipeline.
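A cleanup procedure of this kind can be quite small. The sketch below assumes the copied data lives under a known S3 prefix; `delete_prefix` is a hypothetical helper, and the event fields `bucket` and `prefix` read by the Lambda handler are an assumed event shape, not something SageMaker provides.

```python
def delete_prefix(s3_client, bucket: str, prefix: str) -> int:
    """Delete every object under `prefix` and return how many were removed."""
    if not prefix:
        # Guard against wiping the whole bucket by accident.
        raise ValueError("prefix must not be empty")
    deleted = 0
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        objects = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if objects:
            # A listing page holds at most 1,000 keys, the delete_objects limit.
            s3_client.delete_objects(
                Bucket=bucket, Delete={"Objects": objects, "Quiet": True}
            )
            deleted += len(objects)
        if not page.get("IsTruncated"):
            return deleted
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


def lambda_handler(event, context):
    """Lambda entry point; expects 'bucket' and 'prefix' in the event (assumed)."""
    import boto3  # available in the Lambda Python runtime

    removed = delete_prefix(boto3.client("s3"), event["bucket"], event["prefix"])
    return {"deleted": removed}
```

For data that can simply expire after a fixed period, an S3 Lifecycle rule on the same prefix is an alternative that needs no code.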

Like downloading, uploading processing artifacts can also be an opportunity for optimization. When a Processing job’s output is configured using the ProcessingS3Output parameter, you can specify which S3UploadMode to use. The S3UploadMode parameter default value is EndOfJob, which will get SageMaker to upload the results after the job completes. However, if your Processing job produces multiple files, you can set S3UploadMode to Continuous, thereby enabling the upload of artifacts simultaneously as processing continues, and decreasing the job runtime.
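As a sketch, the output entry for the low-level `create_processing_job` request could be built as follows. The helper `processing_output`, the output name, and the bucket are hypothetical; the field names follow the `ProcessingS3Output` structure mentioned above.

```python
def processing_output(name: str, s3_uri: str,
                      local_path: str = "/opt/ml/processing/output",
                      continuous: bool = False) -> dict:
    """Build one entry for ProcessingOutputConfig['Outputs']."""
    return {
        "OutputName": name,
        "S3Output": {
            "S3Uri": s3_uri,
            "LocalPath": local_path,
            # EndOfJob is the default; Continuous uploads while the job runs.
            "S3UploadMode": "Continuous" if continuous else "EndOfJob",
        },
    }


# Usage (hypothetical bucket): pass the result inside
#   ProcessingOutputConfig={"Outputs": [processing_output(
#       "features", "s3://my-bucket/features/", continuous=True)]}
# when calling create_processing_job on a boto3 SageMaker client.
```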

Right-size processing job instances

Choosing the right instance type and size is a major factor in optimizing the cost of SageMaker Processing jobs. You can right-size an instance by migrating to a different version within the same instance family or by migrating to another instance family. When migrating within the same instance family, you only need to consider CPU/GPU and memory. For more information and general guidance on choosing the right processing resources, refer to Ensure efficient compute resources on Amazon SageMaker.

To fine-tune instance selection, we start by analyzing Processing job metrics in CloudWatch. For more information, refer to Monitor Amazon SageMaker with Amazon CloudWatch.

CloudWatch collects raw data from SageMaker and processes it into readable, near-real-time metrics. Although these statistics are kept for 15 months, the CloudWatch console limits the search to metrics that were updated in the last 2 weeks (this ensures that only current jobs are shown). Processing jobs metrics can be found in the /aws/sagemaker/ProcessingJobs namespace and the metrics collected are CPUUtilization, MemoryUtilization, GPUUtilization, GPUMemoryUtilization, and DiskUtilization.

The following screenshot shows an example in CloudWatch of the Processing job we saw earlier.

In this example, we see the averaged CPU and memory values (which is the default in CloudWatch): the average CPU usage is 0.04%, memory 1.84%, and disk usage 13.7%. In order to right-size, always consider the maximum CPU and memory usage (in this example, the maximum CPU utilization was 98% in the first 3 minutes). As a general rule, if your maximum CPU and memory usage is consistently less than 40%, you can safely cut the machine in half. For example, if you were using an ml.c5.4xlarge instance, you could move to an ml.c5.2xlarge, which could reduce your cost by 50%.
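The rule of thumb above can be expressed as a small check over the peak values of several job runs. This is a sketch under stated assumptions: the helper names are hypothetical, the inputs are assumed to be percentages of the instance's total capacity, and the `Host` dimension value of the form `job-name/algo-1` is an assumption about how the first instance of a job is identified in CloudWatch.

```python
def metric_request(job_name: str, metric_name: str, start, end,
                   period_seconds: int = 60) -> dict:
    """Keyword arguments for CloudWatch get_metric_statistics (Maximum only)."""
    return {
        "Namespace": "/aws/sagemaker/ProcessingJobs",
        "MetricName": metric_name,
        # Assumption: first instance of the job is reported as "<job>/algo-1".
        "Dimensions": [{"Name": "Host", "Value": f"{job_name}/algo-1"}],
        "StartTime": start,
        "EndTime": end,
        "Period": period_seconds,
        "Statistics": ["Maximum"],
    }


def peak(datapoints: list, statistic: str = "Maximum") -> float:
    """Highest value of `statistic` across CloudWatch datapoints."""
    return max((point[statistic] for point in datapoints), default=0.0)


def can_halve_instance(cpu_peaks: list, memory_peaks: list,
                       threshold: float = 40.0) -> bool:
    """True if every run's peak CPU and memory stayed below the threshold."""
    if not cpu_peaks or not memory_peaks:
        return False  # no evidence, so do not recommend a change
    return max(cpu_peaks) < threshold and max(memory_peaks) < threshold


# Usage (requires boto3 and AWS credentials):
#   cw = boto3.client("cloudwatch")
#   points = cw.get_metric_statistics(
#       **metric_request("my-job", "CPUUtilization", start, end))["Datapoints"]
#   cpu_peak = peak(points)
```

Applied to the example job, a peak CPU utilization of 98% means the check returns `False`, even though the averages look very low.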

Data Wrangler jobs

Data Wrangler is a feature of Amazon SageMaker Studio that provides a repeatable and scalable solution for data exploration and processing. You use the Data Wrangler interface to interactively import, analyze, transform, and featurize your data. Those steps are captured in a recipe (a .flow file) that you can then use in a Data Wrangler job. This helps you reapply the same data transformations on your data and also scale to a distributed batch data processing job, either as part of an ML pipeline or independently.

For guidance on optimizing your Data Wrangler app in Studio, refer to Part 2 in this series.

In this section, we focus on optimizing Data Wrangler jobs.

Data Wrangler uses SageMaker Spark processing jobs with a Data Wrangler-managed container. This container runs the directions from the .flow file in the job. Like any processing job, a Data Wrangler job is charged for the instances you choose, based on the duration of use and the provisioned storage that is attached to those instances.

In Cost Explorer, you can filter Data Wrangler jobs costs by applying a filter on the usage type. The names of these usage types are:

- REGION-processing_DW:instanceType (for example, USE1-processing_DW:ml.m5.large)
- REGION-processing_DW:VolumeUsage.gp2 (for example, USE1-processing_DW:VolumeUsage.gp2)

To view your Data Wrangler cost in Cost Explorer, filter the service to use SageMaker, and for Usage type, choose the processing_DW prefix and select the list on the menu. This will show you both instance usage (hours) and storage volume (GB) related costs. (If you want to see Studio Data Wrangler costs you can filter the usage type by the Studio_DW prefix.)

Right-size and schedule Data Wrangler job instances

At the moment, Data Wrangler supports only m5 instances with the following instance sizes: ml.m5.4xlarge, ml.m5.12xlarge, and ml.m5.24xlarge. You can use the distributed job feature to fine-tune your job cost. For example, suppose you need to process a dataset that requires 350 GiB in RAM. The 4xlarge (128 GiB) and 12xlarge (256 GiB) might not be able to process it, which would lead you to use the m5.24xlarge instance (768 GiB). However, you could use two m5.12xlarge instances (2 * 256 GiB = 512 GiB) and reduce the cost by 40%, or three m5.4xlarge instances (3 * 128 GiB = 384 GiB) and save 50% of the m5.24xlarge instance cost. Note that these are estimates and that distributed processing might introduce some overhead that will affect the overall runtime.

When changing the instance type, make sure you update the Spark config accordingly. For example, if you have an initial ml.m5.4xlarge instance job configured with properties spark.driver.memory set to 2048 and spark.executor.memory set to 55742, and later scale up to ml.m5.12xlarge, those configuration values need to be increased, otherwise they will be the bottleneck in the processing job. You can update these variables in the Data Wrangler GUI or in a configuration file appended to the config path (see the following examples).
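A configuration file of this kind might look like the output of the sketch below. It assumes the classification-style JSON layout (`spark-defaults` with a `Properties` map) used by Spark containers; the helper name `spark_defaults` is hypothetical, and the values shown are the ml.m5.4xlarge settings quoted above, not tuned recommendations for larger instances.

```python
import json


def spark_defaults(driver_memory: int, executor_memory: int) -> list:
    """Build a spark-defaults configuration with the two memory settings."""
    return [
        {
            "Classification": "spark-defaults",
            "Properties": {
                # Values are passed through as strings, as in the text above.
                "spark.driver.memory": str(driver_memory),
                "spark.executor.memory": str(executor_memory),
            },
        }
    ]


# Settings quoted for the initial ml.m5.4xlarge job.
config_json = json.dumps(spark_defaults(2048, 55742), indent=2)
print(config_json)
```

When moving to a larger instance, regenerate the file with values sized for that instance instead of reusing the old ones.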

Another compelling feature in Data Wrangler is the ability to set a scheduled job. If you’re processing data periodically, you can create a schedule to run the processing job automatically. For example, you can create a schedule that runs a processing job automatically when you get new data (for examples, see Export to Amazon S3 or Export to Amazon SageMaker Feature Store). However, you should note that when you create a schedule, Data Wrangler creates an event rule in EventBridge. This means you will also be charged for the event rules that you create (as well as the instances used to run the processing job). For more information, see Amazon EventBridge pricing.

Conclusion

In this post, we provided guidance on cost analysis and best practices when preprocessing data using SageMaker Processing and Data Wrangler jobs. Similar to preprocessing, there are many options and configuration settings in building, training, and running ML models that may lead to unnecessary costs. Therefore, as machine learning establishes itself as a powerful tool across industries, ML workloads need to remain cost-effective.

SageMaker offers a wide and deep feature set for facilitating each step in the ML pipeline. This robustness also provides continuous cost optimization opportunities without compromising performance or agility.

Refer to the following posts in this series for more information about optimizing cost for SageMaker:


About the Authors

Deepali Rajale is a Senior AI/ML Specialist at AWS. She works with enterprise customers providing technical guidance with best practices for deploying and maintaining AI/ML solutions in the AWS ecosystem. She has worked with a wide range of organizations on various deep learning use cases involving NLP and computer vision. She is passionate about empowering organizations to leverage generative AI to enhance their use experience. In her spare time, she enjoys movies, music, and literature.

Uri Rosenberg is the AI & ML Specialist Technical Manager for Europe, Middle East, and Africa. Based out of Israel, Uri works to empower enterprise customers on all things ML to design, build, and operate at scale. In his spare time, he enjoys cycling, hiking, and watching sunsets (at minimum once a day).

Jesse Manders is a Senior Product Manager in the AWS AI/ML human in the loop services team. He works at the intersection of AI and human interaction with the goal of creating and improving AI/ML products and services to meet our needs. Previously, Jesse held leadership roles in engineering at Apple and Lumileds, and was a senior scientist in a Silicon Valley startup. He has an M.S. and Ph.D. from the University of Florida, and an MBA from the University of California, Berkeley, Haas School of Business.

Romi Datta is a Senior Manager of Product Management in the Amazon SageMaker team responsible for Human in the Loop services. He has been in AWS for over 4 years, holding several product management leadership roles in SageMaker, S3 and IoT. Prior to AWS he worked in various product management, engineering and operational leadership roles at IBM, Texas Instruments and Nvidia. He has an M.S. and Ph.D. in Electrical and Computer Engineering from the University of Texas at Austin, and an MBA from the University of Chicago Booth School of Business.

Jonathan Buck is a Software Engineer at Amazon Web Services working at the intersection of machine learning and distributed systems. His work involves productionizing machine learning models and developing novel software applications powered by machine learning to put the latest capabilities in the hands of customers.

Alex Williams is an applied scientist in the human-in-the-loop science team at AWS AI where he conducts interactive systems research at the intersection of human-computer interaction (HCI) and machine learning. Before joining Amazon, he was a professor in the Department of Electrical Engineering and Computer Science at the University of Tennessee where he co-directed the People, Agents, Interactions, and Systems (PAIRS) research laboratory. He has also held research positions at Microsoft Research, Mozilla Research, and the University of Oxford. He regularly publishes his work at premier publication venues for HCI, such as CHI, CSCW, and UIST. He holds a PhD from the University of Waterloo.

Sarah Gao is a Software Development Manager in Amazon SageMaker Human In the Loop (HIL) responsible for building the ML based labeling platform. Sarah has been in AWS for over 4 years, holding several software management leadership roles in EC2 security and SageMaker. Prior to AWS she worked in various engineering management roles at Oracle and Sun Microsystem.

Erran Li is the applied science manager at human-in-the-loop services, AWS AI, Amazon. His research interests are 3D deep learning, and vision and language representation learning. Previously he was a senior scientist at Alexa AI, the head of machine learning at Scale AI and the chief scientist at Pony.ai. Before that, he was with the perception team at Uber ATG and the machine learning platform team at Uber working on machine learning for autonomous driving, machine learning systems and strategic initiatives of AI. He started his career at Bell Labs and was adjunct professor at Columbia University. He co-taught tutorials at ICML’17 and ICCV’19, and co-organized several workshops at NeurIPS, ICML, CVPR, ICCV on machine learning for autonomous driving, 3D vision and robotics, machine learning systems and adversarial machine learning. He has a PhD in computer science at Cornell University. He is an ACM Fellow and IEEE Fellow.
