Gemini is our most capable and general model, built to be multimodal and optimized for three different sizes: Ultra, Pro and Nano.

Google at EMNLP 2023

Google is proud to be a Diamond Sponsor of Empirical Methods in Natural Language Processing (EMNLP 2023), a premier annual conference, which is being held this week in Sentosa, Singapore. Google has a strong presence at this year’s conference with over 65 accepted papers and active involvement in 11 workshops and tutorials. Google is also happy to be a Major Sponsor for the Widening NLP workshop (WiNLP), which aims to highlight global representations of people, perspectives, and cultures in AI and ML. We look forward to sharing some of our extensive NLP research and expanding our partnership with the broader research community.

We hope you’ll visit the Google booth to chat with researchers who are actively pursuing the latest innovations in NLP, and check out some of the scheduled booth activities (e.g., demos and Q&A sessions listed below). Visit the @GoogleAI X (Twitter) and LinkedIn accounts to find out more about the Google booth activities at EMNLP 2023.

Take a look below to learn more about the Google research being presented at EMNLP 2023 (Google affiliations in bold).

Board & Organizing Committee

Sponsorship Chair: Shyam Upadhyay

Industry Track Chair: Imed Zitouni

Senior Program Committee: Roee Aharoni, Annie Louis, Vinodkumar Prabhakaran, Shruti Rijhwani, Brian Roark, Partha Talukdar

Google Research booth activities

This schedule is subject to change. Please visit the Google booth for more information.

Developing and Utilizing Evaluation Metrics for Machine Translation & Improving Multilingual NLP

Presenter: Isaac Caswell, Dan Deutsch, Jan-Thorsten Peter, David Vilar Torres

Fri, Dec 8 | 10:30AM - 11:00AM SST

Differentiable Search Indexes & Generative Retrieval

Presenter: Sanket Vaibhav Mehta, Vinh Tran

Fri, Dec 8 | 3:30PM - 4:00PM SST

Retrieval and Generation in a single pass

Presenter: Palak Jain, Livio Baldini Soares

Sat, Dec 9 | 10:30AM - 11:00AM SST

Amplifying Adversarial Attacks

Presenter: Anu Sinha

Sat, Dec 9 | 12:30PM - 1:45PM SST

Automate prompt design: Universal Self-Adaptive Prompting (see blog post)

Presenter: Xingchen Wan*, Ruoxi Sun

Sat, Dec 9 | 3:30PM - 4:00PM SST

Papers

SynJax: Structured Probability Distributions for JAX

Miloš Stanojević, Laurent Sartran

Adapters: A Unified Library for Parameter-Efficient and Modular Transfer Learning

Clifton Poth, Hannah Sterz, Indraneil Paul, Sukannya Purkayastha, Leon Engländer, Timo Imhof, Ivan Vulić, Sebastian Ruder, Iryna Gurevych, Jonas Pfeiffer

DocumentNet: Bridging the Data Gap in Document Pre-training

Lijun Yu, Jin Miao, Xiaoyu Sun, Jiayi Chen, Alexander Hauptmann, Hanjun Dai, Wei Wei

AART: AI-Assisted Red-Teaming with Diverse Data Generation for New LLM-Powered Applications

Bhaktipriya Radharapu, Kevin Robinson, Lora Aroyo, Preethi Lahoti

CRoW: Benchmarking Commonsense Reasoning in Real-World Tasks

Mete Ismayilzada, Debjit Paul, Syrielle Montariol, Mor Geva, Antoine Bosselut

Large Language Models Can Self-Improve

Jiaxin Huang*, Shixiang Shane Gu, Le Hou, Yuexin Wu, Xuezhi Wang, Hongkun Yu, Jiawei Han

Dissecting Recall of Factual Associations in Auto-Regressive Language Models

Mor Geva, Jasmijn Bastings, Katja Filippova, Amir Globerson

Stop Uploading Test Data in Plain Text: Practical Strategies for Mitigating Data Contamination by Evaluation Benchmarks

Alon Jacovi, Avi Caciularu, Omer Goldman, Yoav Goldberg

Selective Labeling: How to Radically Lower Data-Labeling Costs for Document Extraction Models

Yichao Zhou, James Bradley Wendt, Navneet Potti, Jing Xie, Sandeep Tata

Measuring Attribution in Natural Language Generation Models

Hannah Rashkin, Vitaly Nikolaev, Matthew Lamm, Lora Aroyo, Michael Collins, Dipanjan Das, Slav Petrov, Gaurav Singh Tomar, Iulia Turc, David Reitter

Inverse Scaling Can Become U-Shaped

Jason Wei*, Najoung Kim, Yi Tay*, Quoc Le

INSTRUCTSCORE: Towards Explainable Text Generation Evaluation with Automatic Feedback

Wenda Xu, Danqing Wang, Liangming Pan, Zhenqiao Song, Markus Freitag, William Yang Wang, Lei Li

On the Robustness of Dialogue History Representation in Conversational Question Answering: A Comprehensive Study and a New Prompt-Based Method

Zorik Gekhman, Nadav Oved, Orgad Keller, Idan Szpektor, Roi Reichart

Investigating Efficiently Extending Transformers for Long-Input Summarization

Jason Phang*, Yao Zhao, Peter J Liu

DSI++: Updating Transformer Memory with New Documents

Sanket Vaibhav Mehta*, Jai Gupta, Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Jinfeng Rao, Marc Najork, Emma Strubell, Donald Metzler

MultiTurnCleanup: A Benchmark for Multi-Turn Spoken Conversational Transcript Cleanup

Hua Shen*, Vicky Zayats, Johann C Rocholl, Daniel David Walker, Dirk Padfield

Findings of EMNLP

Adaptation with Self-Evaluation to Improve Selective Prediction in LLMs

Jiefeng Chen*, Jinsung Yoon, Sayna Ebrahimi, Sercan O Arik, Tomas Pfister, Somesh Jha

A Comprehensive Evaluation of Tool-Assisted Generation Strategies

Alon Jacovi*, Avi Caciularu, Jonathan Herzig, Roee Aharoni, Bernd Bohnet, Mor Geva

1-PAGER: One Pass Answer Generation and Evidence Retrieval

Palak Jain, Livio Baldini Soares, Tom Kwiatkowski

MaXM: Towards Multilingual Visual Question Answering

Soravit Changpinyo, Linting Xue, Michal Yarom, Ashish V. Thapliyal, Idan Szpektor, Julien Amelot, Xi Chen, Radu Soricut

SDOH-NLI: A Dataset for Inferring Social Determinants of Health from Clinical Notes

Adam D. Lelkes, Eric Loreaux*, Tal Schuster, Ming-Jun Chen, Alvin Rajkomar

Machine Reading Comprehension Using Case-based Reasoning

Dung Ngoc Thai, Dhruv Agarwal, Mudit Chaudhary, Wenlong Zhao, Rajarshi Das, Jay-Yoon Lee, Hannaneh Hajishirzi, Manzil Zaheer, Andrew McCallum

Cross-lingual Open-Retrieval Question Answering for African Languages

Odunayo Ogundepo, Tajuddeen Gwadabe, Clara E. Rivera, Jonathan H. Clark, Sebastian Ruder, David Ifeoluwa Adelani, Bonaventure F. P. Dossou, Abdou Aziz DIOP, Claytone Sikasote, Gilles HACHEME, Happy Buzaaba, Ignatius Ezeani, Rooweither Mabuya, Salomey Osei, Chris Chinenye Emezue, Albert Kahira, Shamsuddeen Hassan Muhammad, Akintunde Oladipo, Abraham Toluwase Owodunni, Atnafu Lambebo Tonja, Iyanuoluwa Shode, Akari Asai, Anuoluwapo Aremu, Ayodele Awokoya, Bernard Opoku, Chiamaka Ijeoma Chukwuneke, Christine Mwase, Clemencia Siro, Stephen Arthur, Tunde Oluwaseyi Ajayi, Verrah Akinyi Otiende, Andre Niyongabo Rubungo, Boyd Sinkala, Daniel Ajisafe, Emeka Felix Onwuegbuzia, Falalu Ibrahim Lawan, Ibrahim Said Ahmad, Jesujoba Oluwadara Alabi, CHINEDU EMMANUEL MBONU, Mofetoluwa Adeyemi, Mofya Phiri, Orevaoghene Ahia, Ruqayya Nasir Iro, Sonia Adhiambo

On Uncertainty Calibration and Selective Generation in Probabilistic Neural Summarization: A Benchmark Study

Polina Zablotskaia, Du Phan, Joshua Maynez, Shashi Narayan, Jie Ren, Jeremiah Zhe Liu

Epsilon Sampling Rocks: Investigating Sampling Strategies for Minimum Bayes Risk Decoding for Machine Translation

Markus Freitag, Behrooz Ghorbani*, Patrick Fernandes*

Sources of Hallucination by Large Language Models on Inference Tasks

Nick McKenna, Tianyi Li, Liang Cheng, Mohammad Javad Hosseini, Mark Johnson, Mark Steedman

Don’t Add, Don’t Miss: Effective Content Preserving Generation from Pre-selected Text Spans

Aviv Slobodkin, Avi Caciularu, Eran Hirsch, Ido Dagan

What Makes Chain-of-Thought Prompting Effective? A Counterfactual Study

Aman Madaan*, Katherine Hermann, Amir Yazdanbakhsh

Understanding HTML with Large Language Models

Izzeddin Gur, Ofir Nachum, Yingjie Miao, Mustafa Safdari, Austin Huang, Aakanksha Chowdhery, Sharan Narang, Noah Fiedel, Aleksandra Faust

Improving the Robustness of Summarization Models by Detecting and Removing Input Noise

Kundan Krishna*, Yao Zhao, Jie Ren, Balaji Lakshminarayanan, Jiaming Luo, Mohammad Saleh, Peter J. Liu

In-Context Learning Creates Task Vectors

Roee Hendel, Mor Geva, Amir Globerson

Pre-training Without Attention

Junxiong Wang, Jing Nathan Yan, Albert Gu, Alexander M Rush

MUX-PLMs: Data Multiplexing for High-Throughput Language Models

Vishvak Murahari, Ameet Deshpande, Carlos E Jimenez, Izhak Shafran, Mingqiu Wang, Yuan Cao, Karthik R Narasimhan

PaRaDe: Passage Ranking Using Demonstrations with LLMs

Andrew Drozdov*, Honglei Zhuang, Zhuyun Dai, Zhen Qin, Razieh Rahimi, Xuanhui Wang, Dana Alon, Mohit Iyyer, Andrew McCallum, Donald Metzler*, Kai Hui

Long-Form Speech Translation Through Segmentation with Finite-State Decoding Constraints on Large Language Models

Arya D. McCarthy, Hao Zhang, Shankar Kumar, Felix Stahlberg, Ke Wu

Unsupervised Opinion Summarization Using Approximate Geodesics

Somnath Basu Roy Chowdhury*, Nicholas Monath, Kumar Avinava Dubey, Amr Ahmed, Snigdha Chaturvedi

SQLPrompt: In-Context Text-to-SQL with Minimal Labeled Data

Ruoxi Sun, Sercan O. Arik, Rajarishi Sinha, Hootan Nakhost, Hanjun Dai, Pengcheng Yin, Tomas Pfister

Retrieval-Augmented Parsing for Complex Graphs by Exploiting Structure and Uncertainty

Zi Lin, Quan Yuan, Panupong Pasupat, Jeremiah Zhe Liu, Jingbo Shang

A Zero-Shot Language Agent for Computer Control with Structured Reflection

Tao Li, Gang Li, Zhiwei Deng, Bryan Wang*, Yang Li

Pragmatics in Language Grounding: Phenomena, Tasks, and Modeling Approaches

Daniel Fried, Nicholas Tomlin, Jennifer Hu, Roma Patel, Aida Nematzadeh

Improving Classifier Robustness Through Active Generation of Pairwise Counterfactuals

Ananth Balashankar, Xuezhi Wang, Yao Qin, Ben Packer, Nithum Thain, Jilin Chen, Ed H. Chi, Alex Beutel

mmT5: Modular Multilingual Pre-training Solves Source Language Hallucinations

Jonas Pfeiffer, Francesco Piccinno, Massimo Nicosia, Xinyi Wang, Machel Reid, Sebastian Ruder

Scaling Laws vs Model Architectures: How Does Inductive Bias Influence Scaling?

Yi Tay, Mostafa Dehghani, Samira Abnar, Hyung Won Chung, William Fedus, Jinfeng Rao, Sharan Narang, Vinh Q. Tran, Dani Yogatama, Donald Metzler

TaTA: A Multilingual Table-to-Text Dataset for African Languages

Sebastian Gehrmann, Sebastian Ruder, Vitaly Nikolaev, Jan A. Botha, Michael Chavinda, Ankur P Parikh, Clara E. Rivera

XTREME-UP: A User-Centric Scarce-Data Benchmark for Under-Represented Languages

Sebastian Ruder, Jonathan H. Clark, Alexander Gutkin, Mihir Kale, Min Ma, Massimo Nicosia, Shruti Rijhwani, Parker Riley, Jean Michel Amath Sarr, Xinyi Wang, John Frederick Wieting, Nitish Gupta, Anna Katanova, Christo Kirov, Dana L Dickinson, Brian Roark, Bidisha Samanta, Connie Tao, David Ifeoluwa Adelani, Vera Axelrod, Isaac Rayburn Caswell, Colin Cherry, Dan Garrette, Reeve Ingle, Melvin Johnson, Dmitry Panteleev, Partha Talukdar

q2d: Turning Questions into Dialogs to Teach Models How to Search

Yonatan Bitton, Shlomi Cohen-Ganor, Ido Hakimi, Yoad Lewenberg, Roee Aharoni, Enav Weinreb

Emergence of Abstract State Representations in Embodied Sequence Modeling

Tian Yun*, Zilai Zeng, Kunal Handa, Ashish V Thapliyal, Bo Pang, Ellie Pavlick, Chen Sun

Evaluating and Modeling Attribution for Cross-Lingual Question Answering

Benjamin Muller*, John Wieting, Jonathan H. Clark, Tom Kwiatkowski, Sebastian Ruder, Livio Baldini Soares, Roee Aharoni, Jonathan Herzig, Xinyi Wang

Weakly-Supervised Learning of Visual Relations in Multimodal Pre-training

Emanuele Bugliarello, Aida Nematzadeh, Lisa Anne Hendricks

How Do Languages Influence Each Other? Studying Cross-Lingual Data Sharing During LM Fine-Tuning

Rochelle Choenni, Dan Garrette, Ekaterina Shutova

CompoundPiece: Evaluating and Improving Decompounding Performance of Language Models

Benjamin Minixhofer, Jonas Pfeiffer, Ivan Vulić

IC3: Image Captioning by Committee Consensus

David Chan, Austin Myers, Sudheendra Vijayanarasimhan, David A Ross, John Canny

The Curious Case of Hallucinatory (Un)answerability: Finding Truths in the Hidden States of Over-Confident Large Language Models

Aviv Slobodkin, Omer Goldman, Avi Caciularu, Ido Dagan, Shauli Ravfogel

Evaluating Large Language Models on Controlled Generation Tasks

Jiao Sun, Yufei Tian, Wangchunshu Zhou, Nan Xu, Qian Hu, Rahul Gupta, John Wieting, Nanyun Peng, Xuezhe Ma

Ties Matter: Meta-Evaluating Modern Metrics with Pairwise Accuracy and Tie Calibration

Daniel Deutsch, George Foster, Markus Freitag

Transcending Scaling Laws with 0.1% Extra Compute

Yi Tay*, Jason Wei*, Hyung Won Chung*, Vinh Q. Tran, David R. So*, Siamak Shakeri, Xavier Garcia, Huaixiu Steven Zheng, Jinfeng Rao, Aakanksha Chowdhery, Denny Zhou, Donald Metzler, Slav Petrov, Neil Houlsby, Quoc V. Le, Mostafa Dehghani

Data Similarity is Not Enough to Explain Language Model Performance

Gregory Yauney*, Emily Reif, David Mimno

Self-Influence Guided Data Reweighting for Language Model Pre-training

Megh Thakkar*, Tolga Bolukbasi, Sriram Ganapathy, Shikhar Vashishth, Sarath Chandar, Partha Talukdar

ReTAG: Reasoning Aware Table to Analytic Text Generation

Deepanway Ghosal, Preksha Nema, Aravindan Raghuveer

GATITOS: Using a New Multilingual Lexicon for Low-Resource Machine Translation

Alex Jones*, Isaac Caswell, Ishank Saxena

Video-Helpful Multimodal Machine Translation

Yihang Li, Shuichiro Shimizu, Chenhui Chu, Sadao Kurohashi, Wei Li

Symbol Tuning Improves In-Context Learning in Language Models

Jerry Wei*, Le Hou, Andrew Kyle Lampinen, Xiangning Chen*, Da Huang, Yi Tay*, Xinyun Chen, Yifeng Lu, Denny Zhou, Tengyu Ma*, Quoc V Le

“Don’t Take This Out of Context!” On the Need for Contextual Models and Evaluations for Stylistic Rewriting

Akhila Yerukola, Xuhui Zhou, Elizabeth Clark, Maarten Sap

QAmeleon: Multilingual QA with Only 5 Examples

Priyanka Agrawal, Chris Alberti, Fantine Huot, Joshua Maynez, Ji Ma, Sebastian Ruder, Kuzman Ganchev, Dipanjan Das, Mirella Lapata

Speak, Read and Prompt: High-Fidelity Text-to-Speech with Minimal Supervision

Eugene Kharitonov, Damien Vincent, Zalán Borsos, Raphaël Marinier, Sertan Girgin, Olivier Pietquin, Matt Sharifi, Marco Tagliasacchi, Neil Zeghidour

AnyTOD: A Programmable Task-Oriented Dialog System

Jeffrey Zhao, Yuan Cao, Raghav Gupta, Harrison Lee, Abhinav Rastogi, Mingqiu Wang, Hagen Soltau, Izhak Shafran, Yonghui Wu

Selectively Answering Ambiguous Questions

Jeremy R. Cole, Michael JQ Zhang, Daniel Gillick, Julian Martin Eisenschlos, Bhuwan Dhingra, Jacob Eisenstein

PRESTO: A Multilingual Dataset for Parsing Realistic Task-Oriented Dialogs (see blog post)

Rahul Goel, Waleed Ammar, Aditya Gupta, Siddharth Vashishtha, Motoki Sano, Faiz Surani*, Max Chang, HyunJeong Choe, David Greene, Chuan He, Rattima Nitisaroj, Anna Trukhina, Shachi Paul, Pararth Shah, Rushin Shah, Zhou Yu

LM vs LM: Detecting Factual Errors via Cross Examination

Roi Cohen, May Hamri, Mor Geva, Amir Globerson

A Suite of Generative Tasks for Multi-Level Multimodal Webpage Understanding

Andrea Burns*, Krishna Srinivasan, Joshua Ainslie, Geoff Brown, Bryan A. Plummer, Kate Saenko, Jianmo Ni, Mandy Guo

AfriSenti: A Twitter Sentiment Analysis Benchmark for African Languages

Shamsuddeen Hassan Muhammad, Idris Abdulmumin, Abinew Ali Ayele, Nedjma Ousidhoum, David Ifeoluwa Adelani, Seid Muhie Yimam, Ibrahim Said Ahmad, Meriem Beloucif, Saif M. Mohammad, Sebastian Ruder, Oumaima Hourrane, Alipio Jorge, Pavel Brazdil, Felermino D. M. A. Ali, Davis David, Salomey Osei, Bello Shehu-Bello, Falalu Ibrahim Lawan, Tajuddeen Gwadabe, Samuel Rutunda, Tadesse Destaw Belay, Wendimu Baye Messelle, Hailu Beshada Balcha, Sisay Adugna Chala, Hagos Tesfahun Gebremichael, Bernard Opoku, Stephen Arthur

Optimizing Retrieval-Augmented Reader Models via Token Elimination

Moshe Berchansky, Peter Izsak, Avi Caciularu, Ido Dagan, Moshe Wasserblat

SEAHORSE: A Multilingual, Multifaceted Dataset for Summarization Evaluation

Elizabeth Clark, Shruti Rijhwani, Sebastian Gehrmann, Joshua Maynez, Roee Aharoni, Vitaly Nikolaev, Thibault Sellam, Aditya Siddhant, Dipanjan Das, Ankur P Parikh

GQA: Training Generalized Multi-Query Transformer Models from Multi-Head Checkpoints

Joshua Ainslie, James Lee-Thorp, Michiel de Jong*, Yury Zemlyanskiy, Federico Lebron, Sumit Sanghai

CoLT5: Faster Long-Range Transformers with Conditional Computation

Joshua Ainslie, Tao Lei, Michiel de Jong, Santiago Ontanon, Siddhartha Brahma, Yury Zemlyanskiy, David Uthus, Mandy Guo, James Lee-Thorp, Yi Tay, Yun-Hsuan Sung, Sumit Sanghai

Improving Diversity of Demographic Representation in Large Language Models via Collective-Critiques and Self-Voting

Preethi Lahoti, Nicholas Blumm, Xiao Ma, Raghavendra Kotikalapudi, Sahitya Potluri, Qijun Tan, Hansa Srinivasan, Ben Packer, Ahmad Beirami, Alex Beutel, Jilin Chen

Universal Self-Adaptive Prompting (see blog post)

Xingchen Wan*, Ruoxi Sun, Hootan Nakhost, Hanjun Dai, Julian Martin Eisenschlos, Sercan O. Arik, Tomas Pfister

TrueTeacher: Learning Factual Consistency Evaluation with Large Language Models

Zorik Gekhman, Jonathan Herzig, Roee Aharoni, Chen Elkind, Idan Szpektor

Hierarchical Pre-training on Multimodal Electronic Health Records

Xiaochen Wang, Junyu Luo, Jiaqi Wang, Ziyi Yin, Suhan Cui, Yuan Zhong, Yaqing Wang, Fenglong Ma

NAIL: Lexical Retrieval Indices with Efficient Non-Autoregressive Decoders

Livio Baldini Soares, Daniel Gillick, Jeremy R. Cole, Tom Kwiatkowski

How Does Generative Retrieval Scale to Millions of Passages?

Ronak Pradeep*, Kai Hui, Jai Gupta, Adam D. Lelkes, Honglei Zhuang, Jimmy Lin, Donald Metzler, Vinh Q. Tran

Make Every Example Count: On the Stability and Utility of Self-Influence for Learning from Noisy NLP Datasets

Irina Bejan*, Artem Sokolov, Katja Filippova

Workshops

The Seventh Widening NLP Workshop (WiNLP)

Major Sponsor

Organizers: Sunipa Dev

Panelist: Preethi Lahoti

The Sixth Workshop on Computational Models of Reference, Anaphora and Coreference (CRAC)

Invited Speaker: Bernd Bohnet

The 3rd Workshop for Natural Language Processing Open Source Software (NLP-OSS)

Organizer: Geeticka Chauhan

Combined Workshop on Spatial Language Understanding and Grounded Communication for Robotics (SpLU-RoboNLP)

Invited Speaker: Andy Zeng

Natural Language Generation, Evaluation, and Metrics (GEM)

Organizer: Elizabeth Clark

The First Arabic Natural Language Processing Conference (ArabicNLP)

Organizer: Imed Zitouni

The Big Picture: Crafting a Research Narrative (BigPicture)

Organizer: Nora Kassner, Sebastian Ruder

BlackboxNLP 2023: The 6th Workshop on Analysing and Interpreting Neural Networks for NLP

Organizer: Najoung Kim

Panelist: Neel Nanda

The SIGNLL Conference on Computational Natural Language Learning (CoNLL)

Co-Chair: David Reitter

Areas and ACs: Kyle Gorman (Speech and Phonology), Fei Liu (Natural Language Generation)

The Third Workshop on Multi-lingual Representation Learning (MRL)

Organizer: Omer Goldman, Sebastian Ruder

Invited Speaker: Orhan Firat

Tutorials

Creative Natural Language Generation

Organizer: Tuhin Chakrabarty*

* Work done while at Google

A new quantum algorithm for classical mechanics with an exponential speedup

Quantum computers promise to solve some problems exponentially faster than classical computers, but there are only a handful of examples with such a dramatic speedup, such as Shor’s factoring algorithm and quantum simulation. Of those few examples, the majority of them involve simulating physical systems that are inherently quantum mechanical — a natural application for quantum computers. But what about simulating systems that are not inherently quantum? Can quantum computers offer an exponential advantage for this?

In “Exponential quantum speedup in simulating coupled classical oscillators”, published in Physical Review X (PRX) and presented at the Symposium on Foundations of Computer Science (FOCS 2023), we report on the discovery of a new quantum algorithm that offers an exponential advantage for simulating coupled classical harmonic oscillators. These are some of the most fundamental, ubiquitous systems in nature and can describe the physics of countless natural systems, from electrical circuits to molecular vibrations to the mechanics of bridges. In collaboration with Dominic Berry of Macquarie University and Nathan Wiebe of the University of Toronto, we found a mapping that can transform any system involving coupled oscillators into a problem describing the time evolution of a quantum system. Given certain constraints, this problem can be solved with a quantum computer exponentially faster than it can with a classical computer. Further, we use this mapping to prove that any problem efficiently solvable by a quantum algorithm can be recast as a problem involving a network of coupled oscillators, albeit exponentially many of them. In addition to unlocking previously unknown applications of quantum computers, this result provides a method for designing new quantum algorithms by reasoning purely about classical systems.

Simulating coupled oscillators

The systems we consider consist of classical harmonic oscillators. An example of a single harmonic oscillator is a mass (such as a ball) attached to a spring. If you displace the mass from its rest position, then the spring will induce a restoring force, pushing or pulling the mass in the opposite direction. This restoring force causes the mass to oscillate back and forth.

A simple example of a harmonic oscillator is a mass connected to a wall by a spring. [Image Source: Wikimedia]

Now consider coupled harmonic oscillators, where multiple masses are attached to one another through springs. Displace one mass, and it will induce a wave of oscillations to pulse through the system. As one might expect, simulating the oscillations of a large number of masses on a classical computer gets increasingly difficult.

An example system of masses connected by springs that can be simulated with the quantum algorithm.
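
For intuition about the classical side of this problem, the following is a minimal sketch of a direct simulation of a chain of masses joined by springs. It is not taken from the paper: the function names `simulate_chain` and `energy`, the choice of a one-dimensional chain tied to walls at both ends, and the velocity-Verlet integrator are all assumptions made for illustration. The work per time step grows with the number of masses, which is what makes very large systems expensive.

```python
def simulate_chain(num_masses, steps, dt=0.01, mass=1.0, k=1.0, kick=1.0):
    """Integrate a 1-D chain of equal masses and springs (hypothetical setup).

    Both ends of the chain are tied to fixed walls. The first mass is
    "plucked" by giving it an initial velocity `kick`.
    """
    x = [0.0] * num_masses          # displacements from rest
    v = [0.0] * num_masses          # velocities
    v[0] = kick

    def forces(pos):
        out = []
        for i in range(num_masses):
            left = pos[i - 1] if i > 0 else 0.0
            right = pos[i + 1] if i < num_masses - 1 else 0.0
            # Hooke's law for the two springs attached to mass i.
            out.append(k * (left - 2.0 * pos[i] + right))
        return out

    f = forces(x)
    for _ in range(steps):
        # Velocity-Verlet update: positions, then forces, then velocities.
        x = [xi + vi * dt + 0.5 * fi / mass * dt * dt
             for xi, vi, fi in zip(x, v, f)]
        f_new = forces(x)
        v = [vi + 0.5 * (fi + fn) / mass * dt
             for vi, fi, fn in zip(v, f, f_new)]
        f = f_new
    return x, v


def energy(x, v, mass=1.0, k=1.0):
    """Total kinetic plus spring energy of the chain."""
    kinetic = 0.5 * mass * sum(vi * vi for vi in v)
    padded = [0.0] + list(x) + [0.0]   # the walls sit at displacement 0
    potential = 0.5 * k * sum((b - a) ** 2 for a, b in zip(padded, padded[1:]))
    return kinetic + potential


positions, velocities = simulate_chain(num_masses=5, steps=1000)
print(positions)
print(energy(positions, velocities))
```

Checking that the total energy stays close to its initial value is a simple sanity test for an integrator like this one.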

To enable the simulation of a large number of coupled harmonic oscillators, we came up with a mapping that encodes the positions and velocities of all masses and springs into the quantum wavefunction of a system of qubits. Since the number of parameters describing the wavefunction of a system of qubits grows exponentially with the number of qubits, we can encode the information of N balls into a quantum mechanical system of only about log(N) qubits. As long as there is a compact description of the system (i.e., the properties of the masses and the springs), we can evolve the wavefunction to learn coordinates of the balls and springs at a later time with far fewer resources than if we had used a naïve classical approach to simulate the balls and springs.
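
To get a feel for the logarithmic scaling, here is a small sketch that counts how many qubits are needed to hold a given number of amplitudes. It assumes, purely for illustration, one amplitude per mass and one per spring; the helper name `qubits_needed` and this bookkeeping are hypothetical, and the exact encoding used in the paper may differ.

```python
def qubits_needed(num_masses: int, num_springs: int) -> int:
    """Smallest n with 2**n >= num_masses + num_springs (assumed encoding)."""
    amplitudes = num_masses + num_springs
    if amplitudes < 1:
        raise ValueError("need at least one amplitude")
    # (a - 1).bit_length() is ceil(log2(a)) for integers a >= 1.
    return (amplitudes - 1).bit_length()


for n in (10, 1_000, 1_000_000):
    # A chain of n masses tied to walls at both ends has n + 1 springs.
    print(n, qubits_needed(n, n + 1))
```

Doubling the number of masses and springs adds only one qubit under this counting.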

We showed that a certain class of coupled classical oscillator systems can be efficiently simulated on a quantum computer. But this alone does not rule out the possibility that there exists some as-yet-unknown clever classical algorithm that is similarly efficient in its use of resources. To show that our quantum algorithm achieves an exponential speedup over any possible classical algorithm, we provide two additional pieces of evidence.

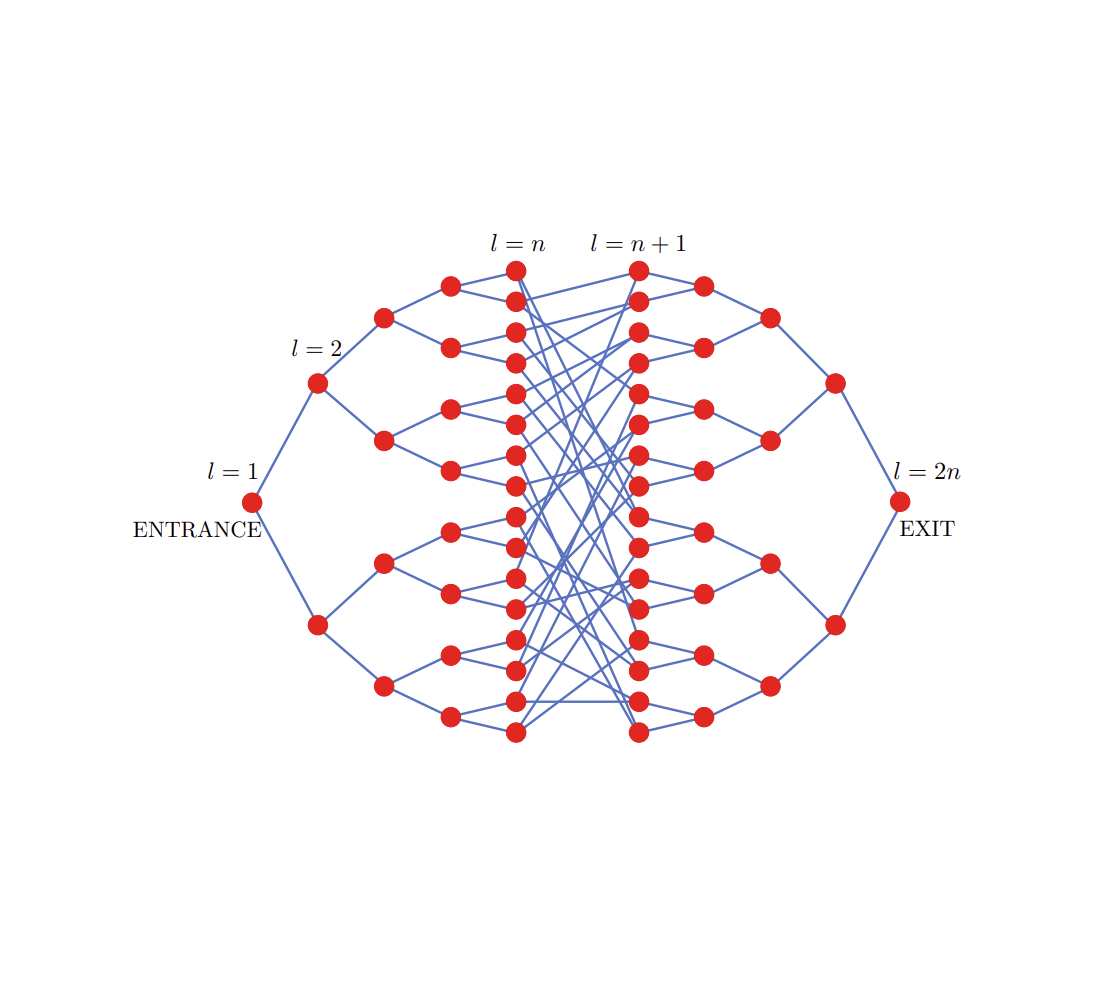

The glued-trees problem and the quantum oracle

For the first piece of evidence, we use our mapping to show that the quantum algorithm can efficiently solve a famous problem about graphs known to be difficult to solve classically, called the glued-trees problem. The problem takes two branching trees — a graph whose nodes each branch to two more nodes, resembling the branching paths of a tree — and glues their branches together through a random set of edges, as shown in the figure below.

The goal of the glued-trees problem is to find the exit node (the “root” of the second tree) as efficiently as possible. But the exact configuration of the nodes and edges of the glued trees is initially hidden from us. To learn about the system, we must query an oracle, which can answer specific questions about the setup. This oracle allows us to explore the trees, but only locally. Decades ago, it was shown that the number of queries required to find the exit node on a classical computer grows polynomially with N, the total number of nodes, and therefore exponentially with the depth of the trees.

But recasting this as a problem with balls and springs, we can imagine each node as a ball and each connection between two nodes as a spring. Pluck the entrance node (the root of the first tree), and the oscillations will pulse through the trees. It only takes a time that scales with the depth of the tree — which is exponentially smaller than N — to reach the exit node. So, by mapping the glued-trees ball-and-spring system to a quantum system and evolving it for that time, we can detect the vibrations of the exit node and determine it exponentially faster than we could using a classical computer.
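
The gap between the depth of the trees and the total number of nodes can be made concrete with a short calculation. This sketch assumes two complete binary trees with the root at depth 0 and the leaves at the given depth; the function name `glued_trees_size` is hypothetical.

```python
def glued_trees_size(depth: int) -> int:
    """Total number of nodes N in two complete binary trees of this depth."""
    nodes_per_tree = 2 ** (depth + 1) - 1
    return 2 * nodes_per_tree


for depth in (5, 10, 20, 40):
    print(f"depth={depth:>2}  N={glued_trees_size(depth):,}")
```

The travel time of the wave scales with the first column, while a classical query count that is polynomial in N scales with the second.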

BQP-completeness

The second and strongest piece of evidence that our algorithm is exponentially more efficient than any possible classical algorithm is revealed by examination of the set of problems a quantum computer can solve efficiently (i.e., solvable in polynomial time), referred to as bounded-error quantum polynomial time or BQP. The hardest problems in BQP are called “BQP-complete”.

While it is generally accepted that there exist some problems that a quantum algorithm can solve efficiently and a classical algorithm cannot, this has not yet been proven. So, the best evidence we can provide is that our problem is BQP-complete, that is, it is among the hardest problems in BQP. If someone were to find an efficient classical algorithm for solving our problem, then every problem solved by a quantum computer efficiently would be classically solvable! Not even the factoring problem (finding the prime factors of a given large number), which forms the basis of modern encryption and was famously solved by Shor’s algorithm, is expected to be BQP-complete.

To show that our problem of simulating balls and springs is indeed BQP-complete, we start with a standard BQP-complete problem of simulating universal quantum circuits, and show that every quantum circuit can be expressed as a system of many balls coupled with springs. Therefore, our problem is also BQP-complete.

Implications and future work

This effort also sheds light on work from 2002, when theoretical computer scientist Lov K. Grover and his colleague, Anirvan M. Sengupta, used an analogy to coupled pendulums to illustrate how Grover’s famous quantum search algorithm could find the correct element in an unsorted database quadratically faster than could be done classically. With the proper setup and initial conditions, it would be possible to tell whether one of N pendulums was different from the others — the analogue of finding the correct element in a database — after the system had evolved for time that was only ~√(N). While this hints at a connection between certain classical oscillating systems and quantum algorithms, it falls short of explaining why Grover’s quantum algorithm achieves a quantum advantage.

Our results make that connection precise. We showed that the dynamics of any classical system of harmonic oscillators can indeed be equivalently understood as the dynamics of a corresponding quantum system of exponentially smaller size. In this way we can simulate Grover and Sengupta’s system of pendulums on a quantum computer of log(N) qubits, and find a different quantum algorithm that can find the correct element in time ~√(N). The analogy we discovered between classical and quantum systems can be used to construct other quantum algorithms offering exponential speedups, where the reason for the speedups is now more evident from the way that classical waves propagate.
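
The ~√(N) scaling mentioned above can be compared numerically with a classical search. The sketch below uses the standard textbook estimate of about (π/4)·√N Grover iterations for a single marked item and the average number of lookups for a classical scan in random order; the function names are hypothetical and this is not code from the paper.

```python
import math


def grover_iterations(n_items: int) -> int:
    """Approximate optimal number of Grover iterations for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def classical_expected_queries(n_items: int) -> float:
    """Average lookups when checking items one at a time in random order."""
    return (n_items + 1) / 2


for n in (100, 10_000, 1_000_000):
    print(n, grover_iterations(n), classical_expected_queries(n))
```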

Our work also reveals that every quantum algorithm can be equivalently understood as the propagation of a classical wave in a system of coupled oscillators. This would imply that, for example, we can in principle build a classical system that solves the factoring problem after it has evolved for time that is exponentially smaller than the runtime of any known classical algorithm that solves factoring. This may look like an efficient classical algorithm for factoring, but the catch is that the number of oscillators is exponentially large, making it an impractical way to solve factoring.

Coupled harmonic oscillators are ubiquitous in nature, describing a broad range of systems from electrical circuits to chains of molecules to structures such as bridges. While our work here focuses on the fundamental complexity of this broad class of problems, we expect that it will guide us in searching for real-world examples of harmonic oscillator problems in which a quantum computer could offer an exponential advantage.

Acknowledgements

We would like to thank our Quantum Computing Science Communicator, Katie McCormick, for helping to write this blog post.

Summary report optimization in the Privacy Sandbox Attribution Reporting API

In recent years, the Privacy Sandbox initiative was launched to explore responsible ways for advertisers to measure the effectiveness of their campaigns while working toward deprecating third-party cookies (subject to resolving any competition concerns with the UK’s Competition and Markets Authority). Cookies are small pieces of data containing user preferences that websites store on a user’s device; they can be used to provide a better browsing experience (e.g., allowing users to automatically sign in) and to serve relevant content or ads. The Privacy Sandbox attempts to address concerns around the use of cookies for tracking browsing data across the web by providing a privacy-preserving alternative.

Many browsers use differential privacy (DP) to provide privacy-preserving APIs, such as the Attribution Reporting API (ARA), that don’t rely on cookies for ad conversion measurement. ARA encrypts individual user actions and collects them in an aggregated summary report, which estimates measurement goals like the number and value of conversions (useful actions on a website, such as making a purchase or signing up for a mailing list) attributed to ad campaigns.

The task of configuring API parameters, e.g., allocating a contribution budget across different conversions, is important for maximizing the utility of the summary reports. In “Summary Report Optimization in the Privacy Sandbox Attribution Reporting API”, we introduce a formal mathematical framework for modeling summary reports. Then, we formulate the problem of maximizing the utility of summary reports as an optimization problem to obtain the optimal ARA parameters. Finally, we evaluate the method using real and synthetic datasets, and demonstrate significantly improved utility compared to baseline non-optimized summary reports.

ARA summary reports

We use the following example to illustrate our notation. Imagine a fictional gift shop called Du & Penc that uses digital advertising to reach its customers. The table below captures their holiday sales, where each record contains impression features with (i) an impression ID, (ii) the campaign, and (iii) the city in which the ad was shown, as well as conversion features with (i) the number of items purchased and (ii) the total dollar value of those items.

Impression and conversion feature logs for Du & Penc.

Mathematical model

ARA summary reports can be modeled by four algorithms: (1) Contribution Vector, (2) Contribution Bounding, (3) Summary Reports, and (4) Reconstruct Values. Contribution Bounding and Summary Reports are performed by the ARA, while Contribution Vector and Reconstruct Values are performed by an AdTech provider (AdTech refers to the tools and systems that enable businesses to buy and sell digital advertising). The objective of this work is to assist AdTechs in optimizing summary report algorithms.

The Contribution Vector algorithm converts measurements into an ARA format that is discretized and scaled. Scaling needs to account for the overall contribution limit per impression. Here we propose a method that clips and performs randomized rounding. The outcome of the algorithm is a histogram of aggregatable keys and values.
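
As a rough illustration of clipping followed by randomized rounding, the sketch below encodes one measurement as an integer contribution. The function name `contribution_value`, its parameters, and the default `total_budget` of 65536 are assumptions made for this example and are not taken from the paper's pseudocode.

```python
import math
import random


def contribution_value(x, cap, budget_share, total_budget=65536, rng=random):
    """Clip x to [0, cap], scale it to the allotted budget, round randomly.

    `budget_share` is the fraction of `total_budget` given to this
    measurement (hypothetical parameterization).
    """
    clipped = min(max(x, 0.0), cap)
    scaled = clipped * budget_share * total_budget / cap
    lower = math.floor(scaled)
    # Round up with probability equal to the fractional part, so the
    # rounded value equals `scaled` in expectation.
    return lower + (1 if rng.random() < scaled - lower else 0)


print(contribution_value(3.0, cap=10.0, budget_share=0.5))
print(contribution_value(100.0, cap=10.0, budget_share=0.5))
```

Values above the cap all map to the same maximum contribution, which is the source of the clipping bias discussed later.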

Next, the Contribution Bounding algorithm runs on client devices and enforces the contribution bound on attributed reports where any further contributions exceeding the limit are dropped. The output is a histogram of attributed conversions.

The Summary Reports algorithm runs on the server side inside a trusted execution environment and returns noisy aggregate results that satisfy DP. Noise is sampled from the discrete Laplace distribution, and to enforce privacy budgeting, a report may be queried only once.
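The noise step can be sketched as follows. This is a minimal illustration that samples discrete Laplace noise as the difference of two geometric variables; the `sensitivity / epsilon` scale is the textbook Laplace-mechanism choice and is an assumption here, not the ARA's documented configuration.

```python
import math
import random


def sample_geometric(q, rng):
    """Number of failures before the first success, with failure probability q."""
    u = 1.0 - rng.random()  # uniform in (0, 1], avoids log(0)
    return int(math.floor(math.log(u) / math.log(q)))


def sample_discrete_laplace(scale, rng=random):
    """Sample an integer k with probability proportional to exp(-|k| / scale)."""
    q = math.exp(-1.0 / scale)
    # The difference of two i.i.d. geometric variables is discrete Laplace.
    return sample_geometric(q, rng) - sample_geometric(q, rng)


def noisy_histogram(histogram, sensitivity, epsilon, rng=random):
    """Add independent discrete Laplace noise to every bucket (assumed scale)."""
    scale = sensitivity / epsilon
    return {key: value + sample_discrete_laplace(scale, rng)
            for key, value in histogram.items()}
```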

Finally, the Reconstruct Values algorithm converts measurements back to the original scale. The Reconstruct Values and Contribution Vector algorithms are designed by the AdTech, and both impact the utility received from the summary report.

Error metrics

There are several factors to consider when selecting an error metric for evaluating the quality of an approximation. We looked for a metric with desirable properties that can also serve as an objective function, and on that basis chose the 𝜏-truncated root mean square relative error (RMSRE𝜏). See the paper for a detailed discussion and comparison to other possible metrics.
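For concreteness, the metric can be sketched as below. The formula used here (each error divided by the larger of 𝜏 and the absolute true value) is the form we assume for RMSRE𝜏; the paper's definition is authoritative.

```python
import math


def rmsre_tau(true_values, estimates, tau):
    """tau-truncated root mean square relative error (assumed definition).

    Each error is divided by max(tau, |true value|), so slices with tiny
    true values do not blow up the relative error.
    """
    if len(true_values) != len(estimates):
        raise ValueError("inputs must have the same length")
    total = 0.0
    for t, e in zip(true_values, estimates):
        total += ((t - e) / max(tau, abs(t))) ** 2
    return math.sqrt(total / len(true_values))
```

With true values `[10, 0]`, estimates `[8, 1]`, and `tau=5`, both terms contribute a relative error of 0.2, so the metric is 0.2. Without truncation the second term would divide by zero.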

Optimization

To optimize utility as measured by RMSRE𝜏, we choose a capping parameter, C, and privacy budget, 𝛼, for each slice. The combination of both determines how an actual measurement (such as two conversions with a total value of $3) is encoded on the AdTech side and then passed to the ARA for Contribution Bounding algorithm processing. RMSRE𝜏 can be computed exactly, since it can be expressed in terms of the bias from clipping and the variance of the noise distribution. From this expression, we find that RMSRE𝜏 is convex in one parameter when the other is held fixed, whether that is the privacy budget, 𝛼, or the capping parameter, C (so the error-minimizing value for the free parameter can be obtained efficiently), while it is non-convex in the joint variables (C, 𝛼) (so we may not always be able to select the best possible parameters). In any case, any off-the-shelf optimizer can be used to select privacy budgets and capping parameters. In our experiments, we use the SLSQP minimizer from the scipy.optimize library.
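The bias-versus-variance trade-off behind the choice of C can be illustrated with a toy objective. This sketch is a simplification under stated assumptions: it minimizes a squared error (squared clipping bias plus an assumed Laplace-style noise variance proportional to (C/𝛼)²) rather than RMSRE𝜏 itself, and it swaps in a plain ternary search instead of SLSQP to stay dependency-free.

```python
def squared_error(cap, values, alpha):
    """Toy objective: squared clipping bias plus assumed noise variance."""
    bias = sum(max(v - cap, 0.0) for v in values)  # mass lost to clipping
    noise_scale = cap / alpha  # noise grows with the cap after rescaling
    return bias ** 2 + 2.0 * noise_scale ** 2


def best_cap(values, alpha, iterations=200):
    """Ternary search for the cap minimizing the convex toy objective."""
    lo, hi = 0.0, max(values)
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if squared_error(m1, values, alpha) < squared_error(m2, values, alpha):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2.0
```

A small cap clips away large values (high bias), while a large cap inflates the noise after rescaling (high variance); for a fixed 𝛼 the toy objective is convex in the cap, which is what makes a one-dimensional search sufficient.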

Synthetic data

Different ARA configurations can be evaluated empirically by testing them on a conversion dataset. However, access to such data can be restricted or slow due to privacy concerns, or simply unavailable. One way to address these limitations is to use synthetic data that replicates the characteristics of real data.

We present a method for generating synthetic data responsibly through statistical modeling of real-world conversion datasets. We first perform an empirical analysis of real conversion datasets to uncover relevant characteristics for ARA. We then design a pipeline that uses this distribution knowledge to create a realistic synthetic dataset that can be customized via input parameters.

The pipeline first generates impressions drawn from a power-law distribution (step 1), then for each impression it generates conversions drawn from a Poisson distribution (step 2) and finally, for each conversion, it generates conversion values drawn from a log-normal distribution (step 3). With dataset-dependent parameters, we find that these distributions closely match ad-dataset characteristics. Thus, one can learn parameters from historical or public datasets and generate synthetic datasets for experimentation.
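The three steps can be sketched with the standard library alone. All parameter values below are placeholders rather than fitted values, and drawing the number of impressions per hypothetical user from the power law is an assumption about what step 1 samples.

```python
import math
import random


def sample_poisson(rate, rng):
    """Knuth's multiplication method; adequate for small rates."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def generate_dataset(num_users, rng, pareto_shape=2.0, conversion_rate=0.5,
                     value_mu=1.0, value_sigma=0.5):
    """Return a list of (impression_id, [conversion values]) records."""
    records = []
    impression_id = 0
    for _ in range(num_users):
        # Step 1: number of impressions from a power-law (Pareto) distribution.
        num_impressions = int(rng.paretovariate(pareto_shape))
        for _ in range(num_impressions):
            # Step 2: number of conversions for this impression (Poisson).
            num_conversions = sample_poisson(conversion_rate, rng)
            # Step 3: a log-normal value for each conversion.
            values = [rng.lognormvariate(value_mu, value_sigma)
                      for _ in range(num_conversions)]
            records.append((impression_id, values))
            impression_id += 1
    return records
```

Calling `generate_dataset(1000, random.Random(0))` yields a reproducible dataset; in practice the shape, rate, and log-normal parameters would be learned from historical or public data.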

|

| Overall dataset generation steps with features for illustration. |

Experimental evaluation

We evaluate our algorithms on three real-world datasets (Criteo, AdTech Real Estate, and AdTech Travel) and three synthetic datasets. Criteo consists of 15M clicks, Real Estate consists of 100K conversions, and Travel consists of 30K conversions. Each dataset is partitioned into a training set and a test set. The training set is used to choose contribution budgets, clipping threshold parameters, and the conversion count limit (the real-world datasets have only one conversion per click), and the error is evaluated on the test set. Each dataset is partitioned into slices using impression features. For real-world datasets, we consider three queries for each slice; for synthetic datasets, we consider two queries for each slice.

For each query, we set the RMSRE𝜏 parameter 𝜏 to five times the median value of the query on the training dataset. This ensures invariance of the error metric to data rescaling, and allows us to combine the errors from features of different scales by using a separate 𝜏 for each feature.

Results

We compare our optimization-based algorithm to a simple baseline approach. For each query, the baseline uses an equal contribution budget and a fixed quantile of the training data to choose the clipping threshold. Our algorithms produce substantially lower error than baselines on both real-world and synthetic datasets. Our optimization-based approach adapts to the privacy budget and data.

Conclusion

We study the optimization of summary reports in the ARA, which is currently deployed on hundreds of millions of Chrome browsers. We present a rigorous formulation of the contribution budgeting optimization problem for ARA with the goal of equipping researchers with a robust abstraction that facilitates practical improvements.

Our recipe, which leverages historical data to bound and scale the contributions of future data under differential privacy, is quite general and applicable to settings beyond advertising. One approach based on this work is to use past data to learn the parameters of the data distribution, and then to apply synthetic data derived from this distribution for privacy budgeting for queries on future data. Please see the paper and accompanying code for detailed algorithms and proofs.

Acknowledgements

This work was done in collaboration with Badih Ghazi, Pritish Kamath, Ravi Kumar, Pasin Manurangsi, and Avinash Varadarajan. We thank Akash Nadan for his help.

Unsupervised speech-to-speech translation from monolingual data

Speech-to-speech translation (S2ST) is a type of machine translation that converts spoken language from one language to another. This technology has the potential to break down language barriers and facilitate communication between people from different cultures and backgrounds.

Previously, we introduced Translatotron 1 and Translatotron 2, the first ever models that were able to directly translate speech between two languages. However, they were trained in supervised settings with parallel speech data. The scarcity of parallel speech data is a major challenge in this field, so much so that most public datasets are semi- or fully-synthesized from text. This adds additional hurdles to learning translation and reconstruction of speech attributes that are not represented in the text and are thus not reflected in the synthesized training data.

Here we present Translatotron 3, a novel unsupervised speech-to-speech translation architecture. In Translatotron 3, we show that it is possible to learn a speech-to-speech translation task from monolingual data alone. This method opens the door not only to translation between more language pairs but also towards translation of the non-textual speech attributes such as pauses, speaking rates, and speaker identity. Our method does not include any direct supervision to target languages and therefore we believe it is the right direction for paralinguistic characteristics (e.g., tone, emotion) of the source speech to be preserved across translation. To enable speech-to-speech translation, we use back-translation, a technique from unsupervised machine translation (UMT) in which a synthetic translation of the source language is used to train a translation model without bilingual text datasets. Experimental results in speech-to-speech translation tasks between Spanish and English show that Translatotron 3 outperforms a baseline cascade system.

Translatotron 3

Translatotron 3 addresses the problem of unsupervised S2ST, which can eliminate the requirement for bilingual speech datasets. To do this, Translatotron 3’s design incorporates three key aspects:

- Pre-training the entire model as a masked autoencoder with SpecAugment, a simple data augmentation method for speech recognition that operates on the logarithmic mel spectrogram of the input audio (instead of the raw audio itself) and is shown to effectively improve the generalization capabilities of the encoder.

- Unsupervised embedding mapping based on multilingual unsupervised embeddings (MUSE), which is trained on unpaired languages but allows the model to learn an embedding space that is shared between the source and target languages.

- A reconstruction loss based on back-translation, to train an encoder-decoder direct S2ST model in a fully unsupervised manner.
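To make the first item concrete, here is a minimal sketch of SpecAugment-style masking on a spectrogram stored as a list of frames. It applies one time mask and one frequency mask and omits time warping; the mask widths are placeholder values, not the settings used for Translatotron 3.

```python
import random


def spec_augment(spectrogram, max_time_mask=10, max_freq_mask=8, rng=random):
    """Return a masked copy of a [time][frequency] log-mel spectrogram.

    One run of frames and one band of mel bins are set to zero.
    """
    num_frames = len(spectrogram)
    num_bins = len(spectrogram[0])
    masked = [list(frame) for frame in spectrogram]

    # Time mask: zero out a run of consecutive frames.
    t_width = rng.randint(0, min(max_time_mask, num_frames))
    t_start = rng.randint(0, num_frames - t_width)
    for t in range(t_start, t_start + t_width):
        masked[t] = [0.0] * num_bins

    # Frequency mask: zero out a band of consecutive mel bins in every frame.
    f_width = rng.randint(0, min(max_freq_mask, num_bins))
    f_start = rng.randint(0, num_bins - f_width)
    for frame in masked:
        for f in range(f_start, f_start + f_width):
            frame[f] = 0.0
    return masked
```

Because parts of the input are hidden, an autoencoder trained on the masked spectrogram cannot simply copy its input and has to infer the missing regions from context.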

The model is trained using a combination of the unsupervised MUSE embedding loss, reconstruction loss, and S2S back-translation loss. During inference, the shared encoder is utilized to encode the input into a multilingual embedding space, which is subsequently decoded by the target language decoder.

Architecture

Translatotron 3 employs a shared encoder to encode both the source and target languages. The decoder is composed of a linguistic decoder, an acoustic synthesizer (responsible for acoustic generation of the translation speech), and a single attention module, like Translatotron 2. However, for Translatotron 3 there are two decoders, one for the source language and another for the target language. During training, we use monolingual speech-text datasets (i.e., these data are made up of speech-text pairs; they are not translations).

Encoder

The encoder has the same architecture as the speech encoder in Translatotron 2. The output of the encoder is split into two parts: the first part incorporates semantic information whereas the second part incorporates acoustic information. By using the MUSE loss, the first half of the output is trained to be the MUSE embeddings of the text of the input speech spectrogram. The latter half is updated without the MUSE loss. It is important to note that the same encoder is shared between source and target languages. Furthermore, the MUSE embedding is multilingual in nature. As a result, the encoder is able to learn a multilingual embedding space across source and target languages. This allows a more efficient and effective encoding of the input, as the encoder is able to encode speech from both languages into a common embedding space, rather than maintaining a separate embedding space for each language.

Decoder

Like Translatotron 2, the decoder is composed of three distinct components, namely the linguistic decoder, the acoustic synthesizer, and the attention module. To effectively handle the different properties of the source and target languages, however, Translatotron 3 has two separate decoders, for the source and target languages.

Two-part training

The training methodology consists of two parts: (1) auto-encoding with reconstruction and (2) a back-translation term. In the first part, the network is trained to auto-encode the input to a multilingual embedding space using the MUSE loss and the reconstruction loss. This phase aims to ensure that the network generates meaningful multilingual representations. In the second part, the network is further trained to translate the input spectrogram by utilizing the back-translation loss. To mitigate catastrophic forgetting and to enforce a multilingual latent space, the MUSE loss and the reconstruction loss are also applied in this second part of training. To ensure that the encoder learns meaningful properties of the input, rather than simply reconstructing the input, we apply SpecAugment to the encoder input in both phases. SpecAugment has been shown to effectively improve the generalization capabilities of the encoder by augmenting the input data.

Training objective

During the back-translation training phase (illustrated in the section below), the network is trained to translate the input spectrogram to the target language and then back to the source language. The goal of back-translation is to enforce the latent space to be multilingual. To achieve this, the following losses are applied:

- MUSE loss: The MUSE loss measures the similarity between the multilingual embedding of the input spectrogram and the multilingual embedding of the back-translated spectrogram.

- Reconstruction loss: The reconstruction loss measures the similarity between the input spectrogram and the back-translated spectrogram.

In addition to these losses, SpecAugment is applied to the encoder input at both phases. Before the back-translation training phase, the network is trained to auto-encode the input to a multilingual embedding space using the MUSE loss and reconstruction loss.

MUSE loss

To ensure that the encoder generates multilingual representations that are meaningful for both decoders, we employ a MUSE loss during training. The MUSE loss forces the encoder to generate such a representation by using pre-trained MUSE embeddings. During the training process, given an input text transcript, we extract the corresponding MUSE embeddings from the embeddings of the input language. The error between MUSE embeddings and the output vectors of the encoder is then minimized. Note that the encoder is indifferent to the language of the input during inference due to the multilingual nature of the embeddings.
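A minimal sketch of this loss, combined with the split of the encoder output into a semantic half and an acoustic half described earlier, might look as follows. Using mean squared error as the "error" and representing vectors as plain lists are assumptions made for illustration.

```python
def split_encoder_output(vector):
    """Split one encoder output vector into (semantic, acoustic) halves."""
    half = len(vector) // 2
    return vector[:half], vector[half:]


def muse_loss(encoder_outputs, muse_embeddings):
    """Mean squared error between the semantic half of each encoder output
    and the matching pre-trained embedding (MSE is an assumed choice)."""
    total, count = 0.0, 0
    for output, target in zip(encoder_outputs, muse_embeddings):
        semantic, _acoustic = split_encoder_output(output)
        if len(semantic) != len(target):
            raise ValueError("embedding size must equal half the encoder width")
        for s, t in zip(semantic, target):
            total += (s - t) ** 2
            count += 1
    return total / count
```

Only the first half of each vector enters the loss, so the second half stays free to carry acoustic information.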

|

| Training and inference in Translatotron 3. Training applies the reconstruction loss both via the auto-encoding path and via back-translation. |

Audio samples

Following are examples of direct speech-to-speech translation from Translatotron 3:

Spanish-to-English (on Conversational dataset)

| Input (Spanish) | |

| TTS-synthesized reference (English) | |

| Translatotron 3 (English) |

Spanish-to-English (on CommonVoice11 Synthesized dataset)

| Input (Spanish) | |

| TTS-synthesized reference (English) | |

| Translatotron 3 (English) |

Spanish-to-English (on CommonVoice11 dataset)

| Input (Spanish) | |

| TTS reference (English) | |

| Translatotron 3 (English) |

Performance

To empirically evaluate the performance of the proposed approach, we conducted experiments on English and Spanish using various datasets, including the Common Voice 11 dataset, as well as two synthesized datasets derived from the Conversational and Common Voice 11 datasets.

The translation quality was measured by BLEU (higher is better) on ASR (automatic speech recognition) transcriptions from the translated speech, compared to the corresponding reference translation text. The speech quality is measured by the mean opinion score (MOS; higher is better), and the speaker similarity is measured by the average cosine similarity (higher is better).

Because Translatotron 3 is an unsupervised method, as a baseline we used a cascaded S2ST system that is combined from ASR, unsupervised machine translation (UMT), and TTS (text-to-speech). Specifically, we employ UMT that uses the nearest neighbor in the embedding space in order to create the translation.
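The nearest-neighbor step of such a baseline can be sketched as a word-level lookup in a shared embedding space. The toy two-dimensional embeddings in the usage note are made up for illustration; a real system would use pre-trained multilingual embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def translate_word(word, source_embeddings, target_embeddings):
    """Return the target word whose embedding is closest to the source word's."""
    query = source_embeddings[word]
    return max(target_embeddings,
               key=lambda w: cosine_similarity(query, target_embeddings[w]))
```

For example, with source embeddings `{"gato": [0.9, 0.1], "perro": [0.1, 0.9]}` and target embeddings `{"cat": [1.0, 0.0], "dog": [0.0, 1.0]}`, `translate_word("gato", ...)` selects `"cat"`. The same cosine similarity underlies the speaker-similarity metric described above.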

Translatotron 3 outperforms the baseline by large margins in every aspect we measured: translation quality, speaker similarity, and speech quality. It particularly excelled on the conversational corpus. Moreover, Translatotron 3 achieves speech naturalness similar to that of the ground truth audio samples (measured by MOS, higher is better).

|

| Translation quality (measured by BLEU, where higher is better) evaluated on three Spanish-English corpora. |

|

| Speech similarity (measured by average cosine similarity between input speaker and output speaker, where higher is better) evaluated on three Spanish-English corpora. |

|

| Mean-opinion-score (measured by average MOS metric, where higher is better) evaluated on three Spanish-English corpora. |

Future work

As future work, we would like to extend the work to more languages and investigate whether zero-shot S2ST can be applied with the back-translation technique. We would also like to examine the use of back-translation with different types of speech data, such as noisy speech and low-resource languages.

Acknowledgments

The direct contributors to this work include Eliya Nachmani, Alon Levkovitch, Yifan Ding, Chulayutsh Asawaroengchai, Heiga Zhen, and Michelle Tadmor Ramanovich. We also thank Yu Zhang, Yuma Koizumi, Soroosh Mariooryad, RJ Skerry-Ryan, Neil Zeghidour, Christian Frank, Marco Tagliasacchi, Nadav Bar, Benny Schlesinger and Yonghui Wu.

Members of Fort Peck Tribes and Googlers meet to learn, celebrate and support socially beneficial technology

A team of Googlers focused on responsible innovation visited the Fort Peck Tribes in Montana to engage in relationship-building and bidirectional learning.

A conversation with Refik Anadol on creativity and AI

Mira Lane explores how AI is empowering creativity with pioneering artist Refik Anadol.

Improving simulations of clouds and their effects on climate

Today’s climate models successfully capture broad global warming trends. However, because of uncertainties about processes that are small in scale yet globally important, such as clouds and ocean turbulence, these models’ predictions of upcoming climate changes are not very accurate in detail. For example, predictions of the time by which the global mean surface temperature of Earth will have warmed 2℃, relative to preindustrial times, vary by 40–50 years (a full human generation) among today’s models. As a result, we do not have the accurate and geographically granular predictions we need to plan resilient infrastructure, adapt supply chains to climate disruption, and assess the risks of climate-related hazards to vulnerable communities.

In large part this is because clouds dominate errors and uncertainties in climate predictions for the coming decades [1, 2, 3]. Clouds reflect sunlight and exert a greenhouse effect, making them crucial for regulating Earth’s energy balance and mediating the response of the climate system to changes in greenhouse gas concentrations. However, they are too small in scale to be directly resolvable in today’s climate models. Current climate models resolve motions at scales of tens to a hundred kilometers, with a few pushing toward the kilometer-scale. However, the turbulent air motions that sustain, for example, the low clouds that cover large swaths of tropical oceans have scales of meters to tens of meters. Because of this wide difference in scale, climate models use empirical parameterizations of clouds, rather than simulating them directly, which results in large errors and uncertainties.

While clouds cannot be directly resolved in global climate models, their turbulent dynamics can be simulated in limited areas by using high-resolution large eddy simulations (LES). However, the high computational cost of simulating clouds with LES has inhibited broad and systematic numerical experimentation, and it has held back the generation of large datasets for training parameterization schemes to represent clouds in coarser-resolution global climate models.

In “Accelerating Large-Eddy Simulations of Clouds with Tensor Processing Units”, published in Journal of Advances in Modeling Earth Systems (JAMES), and in collaboration with a Climate Modeling Alliance (CliMA) lead who is a visiting researcher at Google, we demonstrate that Tensor Processing Units (TPUs) — application-specific integrated circuits that were originally developed for machine learning (ML) applications — can be effectively used to perform LES of clouds. We show that TPUs, in conjunction with tailored software implementations, can be used to simulate particularly computationally challenging marine stratocumulus clouds in the conditions observed during the Dynamics and Chemistry of Marine Stratocumulus (DYCOMS) field study. This successful TPU-based LES code reveals the utility of TPUs, with their large computational resources and tight interconnects, for cloud simulations.

Climate model accuracy for critical metrics, like precipitation or the energy balance at the top of the atmosphere, has improved roughly 10% per decade in the last 20 years. Our goal is for this research to enable a 50% reduction in climate model errors by improving their representation of clouds.

Large-eddy simulations on TPUs

In this work, we focus on stratocumulus clouds, which cover ~20% of the tropical oceans and are the most prevalent cloud type on earth. Current climate models are not yet able to reproduce stratocumulus cloud behavior correctly, which has been one of the largest sources of errors in these models. Our work will provide a much more accurate ground truth for large-scale climate models.

Our simulations of clouds on TPUs exhibit unprecedented computational throughput and scaling, making it possible, for example, to simulate stratocumulus clouds with 10× speedup over real-time evolution across areas up to about 35 × 54 km². Such domain sizes are close to the cross-sectional area of typical global climate model grid boxes. Our results open up new avenues for computational experiments, and for substantially enlarging the sample of LES available to train parameterizations of clouds for global climate models.

|

|

| Rendering of the cloud evolution from a simulation of a 285 × 285 × 2 km³ stratocumulus cloud sheet. This is the largest cloud sheet of its kind ever simulated. Left: An oblique view of the cloud field with the camera cruising. Right: Top view of the cloud field with the camera gradually pulled away. |

The LES code is written in TensorFlow, an open-source software platform developed by Google for ML applications. The code takes advantage of TensorFlow’s graph computation and Accelerated Linear Algebra (XLA) optimizations, which enable the full exploitation of TPU hardware, including the high-speed, low-latency inter-chip interconnects (ICI) that helped us achieve this unprecedented performance. At the same time, the TensorFlow code makes it easy to incorporate ML components directly within the physics-based fluid solver.

We validated the code by simulating canonical test cases for atmospheric flow solvers, such as a buoyant bubble that rises in neutral stratification, and a negatively buoyant bubble that sinks and impinges on the surface. These test cases show that the TPU-based code faithfully simulates the flows, with increasingly fine turbulent details emerging as the resolution increases. The validation tests culminate in simulations of the conditions during the DYCOMS field campaign. The TPU-based code reliably reproduces the cloud fields and turbulence characteristics observed by aircraft during a field campaign — a feat that is notoriously difficult to achieve for LES because of the rapid changes in temperature and other thermodynamic properties at the top of the stratocumulus decks.

Outlook

With this foundation established, our next goal is to substantially enlarge existing databases of high-resolution cloud simulations that researchers building climate models can use to develop better cloud parameterizations, whether these are for physics-based models, ML models, or hybrids of the two. This requires additional physical processes beyond those described in the paper; for example, radiative transfer processes need to be integrated into the code. Our goal is to generate data across a variety of cloud types, e.g., thunderstorm clouds.

|

| Rendering of a thunderstorm simulation using the same simulator as the stratocumulus simulation work. Rainfall can also be observed near the ground. |

This work illustrates how advances in hardware for ML can be surprisingly effective when repurposed in other research areas — in this case, climate modeling. These simulations provide detailed training data for processes such as in-cloud turbulence, which are not directly observable, yet are crucially important for climate modeling and prediction.

Acknowledgements

We would like to thank the co-authors of the paper: Sheide Chammas, Qing Wang, Matthias Ihme, and John Anderson. We’d also like to thank Carla Bromberg, Rob Carver, Fei Sha, and Tyler Russell for their insights and contributions to the work.

Open sourcing Project Guideline: A platform for computer vision accessibility technology

Two years ago we announced Project Guideline, a collaboration between Google Research and Guiding Eyes for the Blind that enabled people with visual impairments (e.g., blindness and low-vision) to walk, jog, and run independently. Using only a Google Pixel phone and headphones, Project Guideline leverages on-device machine learning (ML) to navigate users along outdoor paths marked with a painted line. The technology has been tested all over the world and even demonstrated during the opening ceremony at the Tokyo 2020 Paralympic Games.

Since the original announcement, we set out to improve Project Guideline by embedding new features, such as obstacle detection and advanced path planning, to safely and reliably navigate users through more complex scenarios (such as sharp turns and nearby pedestrians). The early version featured a simple frame-by-frame image segmentation that detected the position of the path line relative to the image frame. This was sufficient for orienting the user to the line, but provided limited information about the surrounding environment. Improving the navigation signals, such as alerts for obstacles and upcoming turns, required a much better understanding and mapping of the users’ environment. To solve these challenges, we built a platform that can be utilized for a variety of spatially-aware applications in the accessibility space and beyond.

Today, we announce the open source release of Project Guideline, making it available for anyone to use to improve upon and build new accessibility experiences. The release includes source code for the core platform, an Android application, pre-trained ML models, and a 3D simulation framework.

System design

The primary use-case is an Android application; however, we wanted to be able to run, test, and debug the core logic in a variety of environments in a reproducible way. This led us to design and build the system using C++ for close integration with MediaPipe and other core libraries, while still being able to integrate with Android using the Android NDK.

Under the hood, Project Guideline uses ARCore to estimate the position and orientation of the user as they navigate the course. A segmentation model, built on the DeepLabV3+ framework, processes each camera frame to generate a binary mask of the guideline (see the previous blog post for more details). Points on the segmented guideline are then projected from image-space coordinates onto a world-space ground plane using the camera pose and lens parameters (intrinsics) provided by ARCore. Since each frame contributes a different view of the line, the world-space points are aggregated over multiple frames to build a virtual mapping of the real-world guideline. The system performs piecewise curve approximation of the guideline world-space coordinates to build a spatio-temporally consistent trajectory. This allows refinement of the estimated line as the user progresses along the path.
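The projection step can be sketched as a ray-plane intersection. The conventions here are assumptions for illustration (a pinhole camera whose local axes are x right, y down, z forward; a world frame with y up; a flat ground plane), and the function does not use or mirror any ARCore API.

```python
def project_pixel_to_ground(u, v, intrinsics, rotation, position, ground_y=0.0):
    """Intersect the viewing ray through pixel (u, v) with the plane y = ground_y.

    intrinsics: (fx, fy, cx, cy) in pixels.
    rotation:   3x3 camera-to-world rotation matrix (list of rows).
    position:   camera position in world coordinates (x, y, z).
    Returns the world-space point, or None if the ray misses the ground.
    """
    fx, fy, cx, cy = intrinsics
    # Ray direction in the camera frame for a pinhole model.
    ray_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into the world frame.
    ray_world = tuple(sum(rotation[r][c] * ray_cam[c] for c in range(3))
                      for r in range(3))
    if abs(ray_world[1]) < 1e-9:
        return None  # ray is parallel to the ground plane
    t = (ground_y - position[1]) / ray_world[1]
    if t <= 0.0:
        return None  # intersection lies behind the camera
    return tuple(position[i] + t * ray_world[i] for i in range(3))
```

Applying this to every pixel in the segmentation mask yields ground-plane points for one frame; accumulating those points across frames gives the data that a curve fit can then smooth into a trajectory.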

|

| Project Guideline builds a 2D map of the guideline, aggregating detected points in each frame (red) to build a stateful representation (blue) as the runner progresses along the path. |

A control system dynamically selects a target point on the line some distance ahead based on the user’s current position, velocity, and direction. An audio feedback signal is then given to the user to adjust their heading to coincide with the upcoming line segment. By using the runner’s velocity vector instead of camera orientation to compute the navigation signal, we eliminate noise caused by irregular camera movements common during running. We can even navigate the user back to the line while it’s out of camera view, for example if the user overshot a turn. This is possible because ARCore continues to track the pose of the camera, which can be compared to the stateful line map inferred from previous camera images.
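A simplified version of this control step is sketched below in a 2D ground-plane frame. The fixed `lookahead` distance and the function names are assumptions; the actual system chooses the target dynamically from position, velocity, and direction.

```python
import math


def select_target(path, position, lookahead):
    """Pick the path point roughly `lookahead` metres beyond the nearest point.

    `path` is a list of (x, y) points ordered along the line.
    """
    nearest = min(range(len(path)), key=lambda i: math.dist(path[i], position))
    travelled = 0.0
    for i in range(nearest, len(path) - 1):
        travelled += math.dist(path[i], path[i + 1])
        if travelled >= lookahead:
            return path[i + 1]
    return path[-1]  # path ends before the lookahead distance


def heading_error(position, velocity, target):
    """Signed angle in radians from the velocity direction to the target.

    Positive means the target lies counter-clockwise (to the left) of the
    direction of travel.
    """
    desired = math.atan2(target[1] - position[1], target[0] - position[0])
    current = math.atan2(velocity[1], velocity[0])
    error = desired - current
    return math.atan2(math.sin(error), math.cos(error))  # wrap to (-pi, pi]
```

Because the error is computed from the velocity vector rather than the camera orientation, a phone that bounces or swings during a run does not change the signal as long as the direction of travel is steady.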

Project Guideline also includes obstacle detection and avoidance features. An ML model is used to estimate depth from single images. To train this monocular depth model, we used SANPO, a large dataset of outdoor imagery from urban, park, and suburban environments that was curated in-house. The model is capable of detecting the depth of various obstacles, including people, vehicles, posts, and more. The depth maps are converted into 3D point clouds, similar to the line segmentation process, and used to detect the presence of obstacles along the user’s path and then alert the user through an audio signal.
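The conversion from a depth map to a point cloud, followed by a simple corridor check, can be sketched as follows. The camera-frame convention (x right, y down, z forward) and every threshold are hypothetical; the real detection logic is in the released source code.

```python
def depth_to_points(depth_map, intrinsics):
    """Back-project a depth map (rows of metres) into camera-frame 3D points."""
    fx, fy, cx, cy = intrinsics
    points = []
    for v, row in enumerate(depth_map):
        for u, depth in enumerate(row):
            if depth > 0.0:  # skip invalid pixels
                points.append(((u - cx) * depth / fx,
                               (v - cy) * depth / fy,
                               depth))
    return points


def obstacle_ahead(points, half_width=0.5, max_range=5.0, max_down=1.0):
    """True if any point lies in a corridor straight ahead of the camera.

    Points more than `max_down` metres below the camera are treated as
    ground and ignored (a crude stand-in for real ground removal).
    """
    return any(abs(x) <= half_width and 0.0 < z <= max_range and y <= max_down
               for x, y, z in points)
```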

|

| Using a monocular depth ML model, Project Guideline constructs a 3D point cloud of the environment to detect and alert the user of potential obstacles along the path. |

A low-latency audio system based on the AAudio API was implemented to provide the navigational sounds and cues to the user. Several sound packs are available in Project Guideline, including a spatial sound implementation using the Resonance Audio API. The sound packs were developed by a team of sound researchers and engineers at Google who designed and tested many different sound models. The sounds use a combination of panning, pitch, and spatialization to guide the user along the line. For example, a user veering to the right may hear a beeping sound in the left ear to indicate the line is to the left, with increasing frequency for a larger course correction. If the user veers further, a high-pitched warning sound may be heard to indicate the edge of the path is approaching. In addition, a clear “stop” audio cue is always available in the event the user veers too far from the line, an anomaly is detected, or the system fails to provide a navigational signal.
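The cue logic described above can be sketched as a small decision function. Every threshold and field name is hypothetical, and reading "increasing frequency" as a faster beep rate is an assumption; the released sound packs define the real behavior.

```python
def audio_cue(lateral_offset, dead_zone=0.05, warn_at=1.0, stop_at=2.0,
              max_beep_rate_hz=8.0):
    """Choose a cue from the user's lateral offset from the line in metres.

    Positive offsets mean the user is to the right of the line.
    """
    distance = abs(lateral_offset)
    if distance >= stop_at:
        return {"cue": "stop"}
    if distance < dead_zone:
        return {"cue": "on_line"}
    # The sound plays in the ear on the side where the line is.
    ear = "left" if lateral_offset > 0 else "right"
    if distance >= warn_at:
        return {"cue": "warning", "ear": ear}
    # Beep faster as the required course correction grows.
    rate = 1.0 + (max_beep_rate_hz - 1.0) * distance / warn_at
    return {"cue": "beep", "ear": ear, "beep_rate_hz": rate}
```

A production system would also return the stop cue when an anomaly is detected or no navigation signal is available, which this sketch does not model.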

Project Guideline has been built specifically for Google Pixel phones with the Google Tensor chip. The Google Tensor chip enables the optimized ML models to run on-device with higher performance and lower power consumption. This is critical for providing real-time navigation instructions to the user with minimal delay. On a Pixel 8 there is a 28x latency improvement when running the depth model on the Tensor Processing Unit (TPU) instead of CPU, and 9x improvement compared to GPU.

Testing and simulation

Project Guideline includes a simulator that enables rapid testing and prototyping of the system in a virtual environment. Everything from the ML models to the audio feedback system runs natively within the simulator, giving the full Project Guideline experience without needing all the hardware and physical environment set up.

|

| Screenshot of Project Guideline simulator. |

Future direction

To launch the technology forward, WearWorks has become an early adopter and teamed up with Project Guideline to integrate their patented haptic navigation experience, utilizing haptic feedback in addition to sound to guide runners. WearWorks has been developing haptics for over 8 years, and previously empowered the first blind marathon runner to complete the NYC Marathon without sighted assistance. We hope that integrations like these will lead to new innovations and make the world a more accessible place.

The Project Guideline team is also working towards removing the painted line completely, using the latest advancements in mobile ML technology, such as the ARCore Scene Semantics API, which can identify sidewalks, buildings, and other objects in outdoor scenes. We invite the accessibility community to build upon and improve this technology while exploring new use cases in other fields.

Acknowledgements

Many people were involved in the development of Project Guideline and the technologies behind it. We’d like to thank Project Guideline team members: Dror Avalon, Phil Bayer, Ryan Burke, Lori Dooley, Song Chun Fan, Matt Hall, Amélie Jean-aimée, Dave Hawkey, Amit Pitaru, Alvin Shi, Mikhail Sirotenko, Sagar Waghmare, John Watkinson, Kimberly Wilber, Matthew Willson, Xuan Yang, Mark Zarich, Steven Clark, Jim Coursey, Josh Ellis, Tom Hoddes, Dick Lyon, Chris Mitchell, Satoru Arao, Yoojin Chung, Joe Fry, Kazuto Furuichi, Ikumi Kobayashi, Kathy Maruyama, Minh Nguyen, Alto Okamura, Yosuke Suzuki, and Bryan Tanaka. Thanks to ARCore contributors: Ryan DuToit, Abhishek Kar, and Eric Turner. Thanks to Alec Go, Jing Li, Liviu Panait, Stefano Pellegrini, Abdullah Rashwan, Lu Wang, Qifei Wang, and Fan Yang for providing ML platform support. We’d also like to thank Hartwig Adam, Tomas Izo, Rahul Sukthankar, Blaise Aguera y Arcas, and Huisheng Wang for their leadership support. Special thanks to our partners Guiding Eyes for the Blind and Achilles International.

Accelerating climate action with AI

AI has the potential to mitigate 5-10% of global greenhouse gas emissions according to our new report with Boston Consulting Group.