More than 6 million pairs of eyes will be on real-time AI avatar technology in this week’s finale of America’s Got Talent, currently the second-most popular primetime TV show in the U.S.

Metaphysic, a member of the NVIDIA Inception global network of technology startups, is one of 11 acts competing for $1 million and a headline slot in AGT’s Las Vegas show in tonight’s final on NBC. It’s the first AI act to reach the AGT finals.

Called “the best act of the series so far” and “one of the most unique things we’ve ever seen on this show” by notoriously tough judge Simon Cowell, the team’s performances involve a demonstration of photorealistic AI avatars, animated in real time by singers on stage.

In Metaphysic’s semifinals act, three singers — Daniel Emmet, Patrick Dailey and John Riesen — lent their voices to AI avatars of Cowell, fellow judge Howie Mandel and host Terry Crews, performing the opera piece “Nessun Dorma.” For the finale, the team plans to “bring back one of the greatest rock and roll icons of all time,” but it’s keeping the audience guessing.

The AGT winner will be announced on Wednesday, Sept. 14.

“Metaphysic’s history-making run on America’s Got Talent has allowed us to showcase the application of AI on one of the most-watched stages in the world,” said the startup’s co-founder and CEO Tom Graham, who appears on the show alongside co-founder Chris Umé.

“While overall awareness of synthetic media has grown in recent years, Metaphysic’s AGT performances provide a front-row seat into how this technology could impact the future of everything, from the internet to entertainment to education,” he said.

Capturing Imaginations While Raising AI Awareness

Founded in 2021, London-based Metaphysic is developing AI technologies to help creators build virtual identities and synthetic content that is hyperrealistic, moving beyond the so-called uncanny valley.

The team initially went viral last year for DeepTomCruise, a TikTok channel featuring videos in which actor Miles Fisher animated an AI avatar of Tom Cruise. The posts garnered around 100 million views and “provided many people with their first introduction to the incredible capabilities of synthetic media,” Graham said.

By bringing its AI avatars to the AGT stage, the company has been able to reach millions more viewers — with sophisticated camera rigs and performers on stage demonstrating how the technology works live and in real time.

AI, GPU Acceleration Behind the Curtain

Metaphysic’s AI avatar software pipeline includes variants of the popular StyleGAN model developed by NVIDIA Research. The team, which uses the TensorFlow deep learning framework, relies on NVIDIA CUDA software to accelerate its work on NVIDIA GPUs.

“Without NVIDIA hardware and software libraries, we wouldn’t be able to pull off these hyperreal results to the level we have,” said Jo Plaete, director of product innovation at Metaphysic. “The computation provided by our NVIDIA hardware platforms allows us to train larger and more complex models at a speed that allows us to iterate on them quickly, which results in those most perfectly tuned results.”

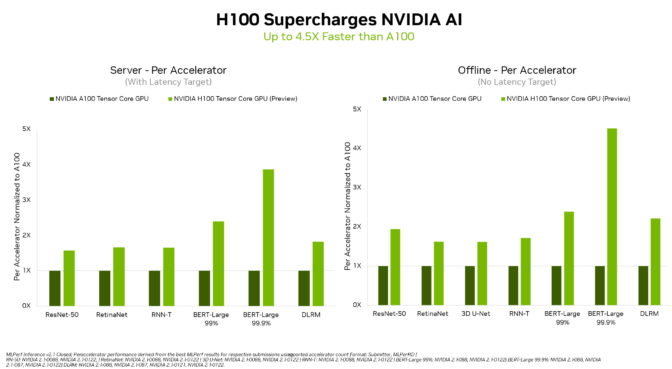

For both AI model development and inference during live performances, Metaphysic uses NVIDIA DGX systems as well as other workstations and data center configurations with NVIDIA GPUs — including NVIDIA A100 Tensor Core GPUs.

“Excellent hardware support has helped us troubleshoot things really fast when in need,” said Plaete. “And having access to the research and engineering teams helps us get a deeper understanding of the tools and how we can leverage them in our pipelines.”

Following AGT, Metaphysic plans to pursue several collaborations in the entertainment industry. The company has also launched a consumer-facing platform, called Every Anyone, that enables users to create their own hyperrealistic AI avatars.

Discover the latest in AI and metaverse technology by registering free for NVIDIA GTC, running online Sept. 19-22. Metaphysic will be part of the panel “AI for VCs: NVIDIA Inception Global Startup Showcase.”

Header photo by Chris Haston/NBC, courtesy of Metaphysic

The post AI on the Stars: Hyperrealistic Avatars Propel Startup to ‘America’s Got Talent’ Finals appeared first on NVIDIA Blog.
