Preventable train accidents like the 1985 disaster outside Tel Aviv in which a train collided with a school bus, killing 19 students and several adults, motivated Shahar Hania and Elen Katz to help save lives with technology.

They founded Rail Vision, an Israeli startup that creates obstacle-detection and classification systems for the global railway industry.

The systems use advanced electro-optic sensors to alert train drivers and railway control centers when a train approaches potential obstacles — like humans, vehicles, animals or other objects — in real time, and in all weather and lighting conditions.

Rail Vision is a member of NVIDIA Inception — a program designed to nurture cutting-edge startups — and an NVIDIA Metropolis partner. The company uses the NVIDIA Jetson AGX Xavier edge AI platform, which provides GPU-accelerated computing in a compact and energy-efficient module, and the NVIDIA TensorRT software development kit for high-performance deep learning inference.

Pulling the Brakes in Real Time

A train’s braking distance, the distance it travels between the moment its brakes are applied and the moment it comes to a complete stop, is usually so long that by the time a driver spots an obstacle on the track, it could be too late to do anything about it.

For example, the braking distance for a train traveling 100 miles per hour is 800 meters, or about a half-mile, according to Hania. Rail Vision systems can detect objects on and along tracks from up to two kilometers, or 1.25 miles, away.

By sending alerts, both visual and acoustic, of potential obstacles in real time, Rail Vision systems give drivers over 20 seconds to respond and make decisions on braking.
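As a rough check on these figures, the decision window can be estimated from the numbers quoted above. This is a back-of-the-envelope sketch, not Rail Vision's method: it assumes the train holds a constant speed, that the obstacle is detected at the full two-kilometer range, and that the driver must start braking no later than one braking distance before the obstacle. The function name and parameters are hypothetical.

```python
# Back-of-the-envelope estimate of the driver's decision window.
# Assumptions (not from Rail Vision): constant speed until braking starts,
# detection at maximum range, braking must begin one braking distance
# before the obstacle.

MPH_TO_MPS = 1609.344 / 3600.0  # metres per second in one mile per hour


def reaction_window_seconds(speed_mph, detection_range_m, braking_distance_m):
    """Seconds available between detection and the last moment to brake."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    speed_mps = speed_mph * MPH_TO_MPS
    # Distance the train can still cover before braking has to begin.
    margin_m = max(detection_range_m - braking_distance_m, 0.0)
    return margin_m / speed_mps


# Figures quoted in the article: 100 mph, 2 km detection range, 800 m braking.
window = reaction_window_seconds(100, 2000, 800)
print(f"{window:.1f} s")  # prints "26.8 s"
```

Under these simplifying assumptions the window comes out at roughly 27 seconds, which is consistent with the "over 20 seconds" figure above.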

The systems can also be integrated with a train’s infrastructure to automatically apply brakes when an obstacle is detected, even without a driver’s cue.

“Tons of deep learning inference possibilities are made possible with NVIDIA GPU technology,” Hania said. “The main advantage of using the NVIDIA Jetson platform is that there are lots of goodies inside — compressors, modules for optical flow — that all speed up the embedding process and make our systems more accurate.”

Boosting Maintenance, in Addition to Safety

In addition to preventing accidents, Rail Vision systems help save operational time and costs spent on railway maintenance — which can be as high as $50 billion annually, according to Hania.

If a railroad accident occurs, four to eight hours are typically spent handling the situation — which prevents other trains from using the track, said Hania.

Rail Vision systems use AI to monitor the tracks and prevent such slowdowns, or to quickly alert operators when they do occur, giving them time to find alternate routes or plans of action.

The systems are scalable and deployable for different use cases — with some focused solely on these maintenance aspects of railway operations.

Watch a Rail Vision system at work.

The post Train Spotting: Startup Gets on Track With AI and NVIDIA Jetson to Ensure Safety, Cost Savings for Railways appeared first on The Official NVIDIA Blog.

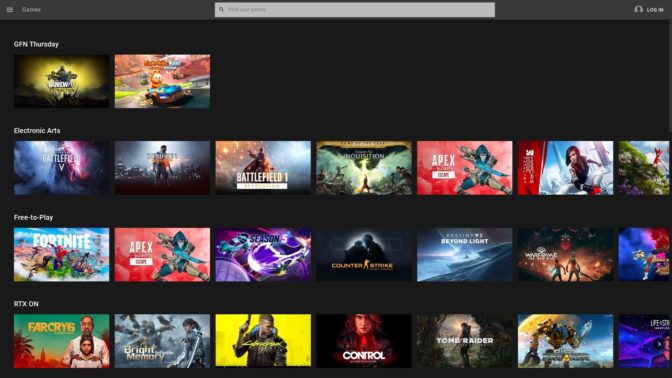
