In an effort to drive customer service improvements, many companies record the phone conversations between their customers and call center representatives. These call recordings are typically stored as audio files and processed to uncover insights such as customer sentiment, product or service issues, and agent effectiveness. To provide an accurate analysis of these audio files, the transcriptions need to clearly identify who said what and when.

However, because an average customer service agent handles 30–50 calls a day, the sheer volume of audio files to analyze quickly becomes a challenge. Companies need a robust system for transcribing audio files in large batches to improve call center quality management. Similarly, legal investigations often need to efficiently analyze case-related audio files in search of potential evidence or insights that can help win legal cases. In the healthcare sector, there is also a growing need for this kind of solution to transcribe and analyze virtual patient-provider interactions.

Amazon Transcribe is an automatic speech recognition (ASR) service that makes it easy to convert audio to text. One key feature of the service is speaker identification, which labels each individual speaker when transcribing multi-speaker audio files. You can instruct Amazon Transcribe to identify between 2 and 10 speakers in an audio clip. For the best results, specify the correct number of speakers for the audio input.
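As a minimal sketch, assuming the AWS SDK for Python (boto3) is used to call the StartTranscriptionJob API, a speaker identification request could be assembled as follows. The helper name `build_diarization_job_request` and the bucket and job names in the test values are hypothetical; the `Settings` keys `ShowSpeakerLabels` and `MaxSpeakerLabels` are the request fields that turn speaker identification on and set the number of speakers.

```python
def build_diarization_job_request(job_name: str, media_uri: str, output_bucket: str,
                                  max_speakers: int, language_code: str = "en-US") -> dict:
    """Return keyword arguments for a StartTranscriptionJob call with speaker labels on.

    Hypothetical helper; pass the result to boto3's
    transcribe.start_transcription_job(**request) (boto3 is an assumption here).
    """
    # The range stated in this post is 2-10 speakers; reject anything outside it.
    if not 2 <= max_speakers <= 10:
        raise ValueError("max_speakers must be between 2 and 10")
    return {
        "TranscriptionJobName": job_name,
        "Media": {"MediaFileUri": media_uri},
        "MediaFormat": "wav",
        "LanguageCode": language_code,
        "OutputBucketName": output_bucket,
        "Settings": {
            "ShowSpeakerLabels": True,        # label each speaker in the output
            "MaxSpeakerLabels": max_speakers,  # expected number of speakers
        },
    }
```

Validating the speaker count locally surfaces a bad value before any API call is made, which is cheaper to debug than a failed asynchronous job.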

A contact center, which often records multi-channel audio, can also benefit from using a feature called channel identification. The feature can separate each channel from within a single audio file and simultaneously transcribe each track. Typically, an agent and a caller are recorded on separate channels, which are merged into a single audio file. Contact center applications like Amazon Connect record agent and customer conversations on different channels (for example, the agent’s voice is captured in the left channel, and the customer’s in the right for a two-channel stereo recording). Contact centers can submit the single audio file to Amazon Transcribe, which identifies the two channels and produces a coherent merged transcript with channel labels.
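One way to turn channel-identified output into a single labeled timeline is sketched below. It assumes the Amazon Transcribe JSON output places per-channel words under `results.channel_labels.channels`, each entry carrying a `channel_label` (such as `ch_0`) and a list of `items`, and that the request enabled `Settings={"ChannelIdentification": True}`. The `CHANNEL_ROLES` mapping of `ch_0` to the agent and `ch_1` to the customer is a hypothetical convention for a left/right stereo recording, not something Amazon Transcribe returns, and the function names are illustrative.

```python
import json

# Hypothetical convention for a two-channel stereo call: left (ch_0) is the agent.
CHANNEL_ROLES = {"ch_0": "agent", "ch_1": "customer"}


def merge_channels(transcript_json: str) -> list:
    """Interleave per-channel words into one list of (start_seconds, role, word)."""
    results = json.loads(transcript_json)["results"]
    words = []
    for channel in results["channel_labels"]["channels"]:
        label = channel["channel_label"]
        role = CHANNEL_ROLES.get(label, label)  # fall back to the raw channel label
        for item in channel["items"]:
            # Punctuation items have no timestamps, so they are skipped here.
            if item.get("type") != "pronunciation":
                continue
            content = item["alternatives"][0]["content"]
            words.append((float(item["start_time"]), role, content))
    words.sort(key=lambda word: word[0])  # stable sort keeps channel order on ties
    return words


def to_turns(words: list) -> list:
    """Collapse consecutive words from the same role into (start_seconds, role, text)."""
    turns = []
    for start, role, word in words:
        if turns and turns[-1][1] == role:
            turns[-1][2].append(word)
        else:
            turns.append((start, role, [word]))
    return [(start, role, " ".join(parts)) for start, role, parts in turns]
```

Sorting by start time is what interleaves the two channels; grouping afterward turns the word stream back into conversational turns.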

In this post, we walk through a solution that analyzes audio files involving multiple speakers using Amazon Transcribe and Amazon Athena, a serverless query service for big data. By combining these two services, you can set up a serverless, pay-per-use solution that processes audio files into readable text and analyzes the data using Structured Query Language (SQL).

Solution overview

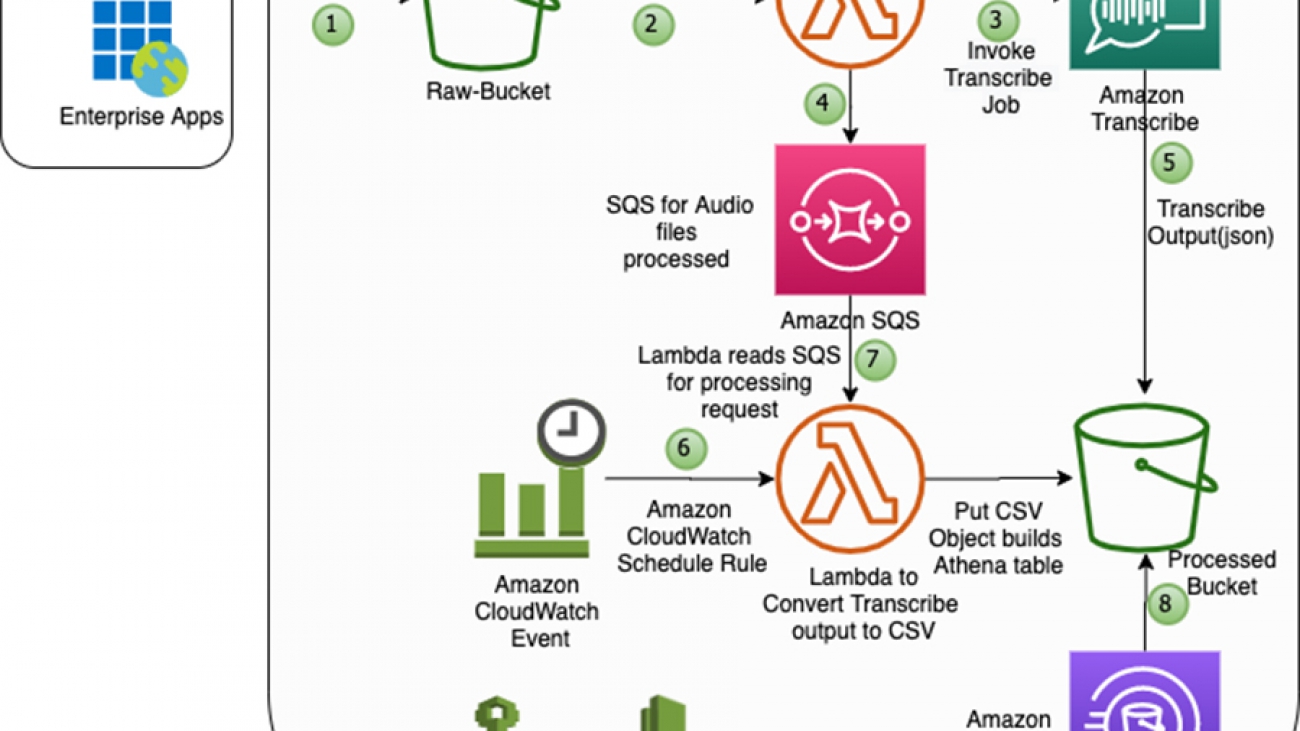

The following diagram illustrates the solution architecture.

The solution contains the following steps:

- You upload the audio file to the Amazon Simple Storage Service (Amazon S3) bucket AudioRawBucket.

- The Amazon S3 PUT event triggers the AWS Lambda function LambdaFunction1.

- The function starts an asynchronous Amazon Transcribe job on the uploaded audio file.

- The function also writes a message into Amazon Simple Queue Service (Amazon SQS) with the transcription job information.

- The transcription job runs and writes the output in JSON format to the target S3 bucket, AudioPrcsdBucket.

- An Amazon CloudWatch Events rule invokes the function LambdaFunction2 every 2 minutes.

- The function LambdaFunction2 reads the SQS queue for transcription jobs, checks for job completion, converts the JSON file to CSV, and loads an Athena table with the audio text data.

- You can access the processed audio file transcription from the AudioPrcsdBucket.

- You can also query the data with Amazon Athena.
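The first Lambda step (S3 event in, transcription job and queue message out) can be sketched with the standard library alone. This assumes the standard S3 event notification layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`, with URL-encoded keys). The function name `plan_transcription`, the JSON message fields `job_name` and `source`, the `en-US` language code, and the fixed speaker count of 2 are illustrative assumptions, not the template's actual code. A real handler would pass each request to boto3's `start_transcription_job` and each message body to SQS `send_message`.

```python
import json
import re
from urllib.parse import unquote_plus


def plan_transcription(event: dict, output_bucket: str) -> list:
    """For each S3 PUT record, return (StartTranscriptionJob kwargs, SQS message body).

    Hypothetical sketch of LambdaFunction1's logic; AWS calls are left to the caller.
    """
    plans = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        # Job names allow only letters, digits, '.', '_' and '-'; replace anything else.
        job_name = re.sub(r"[^0-9A-Za-z._-]", "-", key.rsplit("/", 1)[-1])
        request = {
            "TranscriptionJobName": job_name,
            "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
            # Assumes the file extension matches a supported format such as wav or flac.
            "MediaFormat": key.rsplit(".", 1)[-1].lower(),
            "LanguageCode": "en-US",  # assumption: English audio
            "OutputBucketName": output_bucket,
            "Settings": {"ShowSpeakerLabels": True, "MaxSpeakerLabels": 2},
        }
        # Hypothetical message shape for the queue polled by LambdaFunction2.
        message = json.dumps({"job_name": job_name, "source": f"s3://{bucket}/{key}"})
        plans.append((request, message))
    return plans
```

Deriving the job name from the file name keeps the link between an upload and its transcript visible in the `audio_transcribe_job` column, at the cost of requiring unique file names because Amazon Transcribe rejects a duplicate job name.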

Prerequisites

To get started, you need the following:

- A valid AWS account with access to AWS services

- The Athena database “default” in an AWS account in us-east-1

- A multi-speaker audio file—for this post, we use medical-diarization.wav

To achieve the best results, we recommend the following:

- Use a lossless format, such as WAV or FLAC, with PCM 16-bit encoding

- Use a sample rate of 8000 Hz for low-fidelity audio and 16000 Hz for high-fidelity audio
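To check a recording against these recommendations before uploading it, Python's built-in `wave` module is enough. This is a sketch: `check_wav` is a hypothetical helper, and because `wave` only reads uncompressed PCM data, a non-PCM file raises `wave.Error` instead of returning warnings.

```python
import wave

# Sample rates recommended above for low- and high-fidelity audio.
RECOMMENDED_RATES = {8000, 16000}


def check_wav(path_or_file) -> list:
    """Return warnings where a WAV file departs from the 16-bit PCM / 8 or 16 kHz advice."""
    warnings = []
    with wave.open(path_or_file, "rb") as wav:
        width_bits = 8 * wav.getsampwidth()
        if width_bits != 16:
            warnings.append(f"sample width is {width_bits}-bit, not 16-bit PCM")
        rate = wav.getframerate()
        if rate not in RECOMMENDED_RATES:
            warnings.append(f"sample rate is {rate} Hz, not 8000 or 16000 Hz")
    return warnings
```

An empty list means the file matches the recommendations; the helper accepts either a path or an open binary file object.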

Deploying the solution

You can use the provided AWS CloudFormation template to launch and configure all the resources for the solution.

- Choose Launch Stack.

This takes you to the Create stack wizard on the AWS CloudFormation console. The template is launched in the US East (N. Virginia) Region by default.

The CloudFormation templates used in this post are designed to work only in the us-east-1 Region. These templates are also not intended for production use without modification.

- On the Select Template page, keep the default URL for the CloudFormation template, and choose Next.

- On the Specify Details page, review and provide values for the required parameters in the template.

- For EnvName, enter Dev.

Dev is the environment where you want to deploy the template. AWS CloudFormation uses this value for resources in Lambda, Amazon SQS, and other services.

- After you specify the template details, choose Next.

- On the Options page, choose Next again.

- On the Review page, select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create Stack.

It takes approximately 5–10 minutes for the deployment to complete. When the stack launch is complete, it returns outputs with information about the resources that were created.

You can view the stack outputs on the AWS Management Console or by using the following AWS Command Line Interface (AWS CLI) command:

aws cloudformation describe-stacks --stack-name <stack-name> --region us-east-1 --query "Stacks[0].Outputs"

Resources created by the CloudFormation stack

- AudioRawBucket – Stores the raw audio files; each upload raises the S3 PUT event that invokes the Lambda function to start Amazon Transcribe

- AudioPrcsdBucket – Stores the processed output

- LambdaRole1 – The Lambda role with required permissions for S3 buckets, Amazon SQS, Amazon Transcribe, and CloudWatch

- LambdaFunction1 – The initial function to run Amazon Transcribe to process the audio file, create a JSON file, and update Amazon SQS

- LambdaFunction2 – The post function that reads the SQS queue, converts (aggregates) the JSON to CSV format, and loads it into an Athena table

- TaskAudioQueue – The SQS queue for storing all audio processing requests

- ScheduledRule – The CloudWatch schedule for LambdaFunction2

- AthenaNamedQuery – The Athena table definition for storing processed audio file transcriptions with object information
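Because LambdaFunction2 runs on a schedule rather than reacting to job completion, each run has to decide which queued jobs are ready. A minimal sketch of that decision follows, assuming messages shaped like the SQS `ReceiveMessage` response (`Body` and `ReceiptHandle` keys) with a JSON body holding a hypothetical `job_name` field. The status lookup is passed in so the logic can run without AWS; in a deployment it might wrap boto3's `get_transcription_job`, whose `TranscriptionJobStatus` is one of `QUEUED`, `IN_PROGRESS`, `FAILED`, or `COMPLETED`.

```python
import json
from typing import Callable


def triage_messages(messages: list, get_status: Callable[[str], str]):
    """Split SQS messages into completed jobs, failed jobs, and jobs still running.

    Hypothetical sketch of one scheduled LambdaFunction2 run.
    """
    completed, failed, pending = [], [], []
    for msg in messages:
        job_name = json.loads(msg["Body"])["job_name"]
        status = get_status(job_name)
        if status == "COMPLETED":
            # Ready to convert to CSV; the receipt handle is needed to delete the message.
            completed.append((job_name, msg["ReceiptHandle"]))
        elif status == "FAILED":
            failed.append((job_name, msg["ReceiptHandle"]))
        else:
            # QUEUED or IN_PROGRESS: leave the message for a later run.
            pending.append(job_name)
    return completed, failed, pending
```

Messages for pending jobs are simply not deleted, so they become visible again after the queue's visibility timeout and a later scheduled run picks them up.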

The Athena table for the audio text has the following columns:

- audio_transcribe_job – The job submitted to transcribe the audio

- time_start – The beginning timestamp for the speaker

- speaker – Speaker tags (for example, spk_0, spk_1, and so on)

- speaker_text – The text spoken by the speaker, starting at time_start
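The JSON-to-CSV conversion in LambdaFunction2 is what produces these four columns. Here is a sketch of one way to do it, assuming the speaker identification output layout in which `results.speaker_labels.segments[].items[]` maps each word's `start_time` to a `speaker_label`, and `results.items[]` lists words and punctuation. Consecutive words from the same speaker are joined into one row, and `time_start` is rendered as H:MM:SS to match the sample query output shown later. The function name `transcript_to_csv` is hypothetical, and the solution's actual Lambda code may group text differently.

```python
import csv
import io
import json
from datetime import timedelta


def transcript_to_csv(job_name: str, transcript_json: str) -> str:
    """Return CSV rows of (audio_transcribe_job, time_start, speaker, speaker_text)."""
    results = json.loads(transcript_json)["results"]
    # Map each word's start_time to its speaker label (spk_0, spk_1, ...).
    speaker_at = {
        item["start_time"]: item["speaker_label"]
        for segment in results["speaker_labels"]["segments"]
        for item in segment["items"]
    }
    rows = []  # each entry: [job, start_seconds, speaker, list_of_tokens]
    for item in results["items"]:
        content = item["alternatives"][0]["content"]
        if item["type"] == "punctuation":
            if rows:
                rows[-1][3][-1] += content  # attach punctuation to the previous word
            continue
        speaker = speaker_at.get(item["start_time"], "unknown")
        if rows and rows[-1][2] == speaker:
            rows[-1][3].append(content)  # same speaker: extend the current row
        else:
            rows.append([job_name, float(item["start_time"]), speaker, [content]])
    out = io.StringIO()
    writer = csv.writer(out)  # quotes fields that contain commas
    for job, start, speaker, tokens in rows:
        # Whole seconds formatted as H:MM:SS, e.g. 1.2 -> "0:00:01".
        writer.writerow([job, str(timedelta(seconds=int(start))), speaker, " ".join(tokens)])
    return out.getvalue()
```

Writing with the `csv` module rather than joining strings matters here, because speaker text routinely contains commas; the Athena table then needs a CSV-aware row format to read those quoted fields back.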

Validating the solution

You can now validate that the solution works.

- Verify that the AWS CloudFormation resources were created (see the previous section for instructions using the console or AWS CLI).

- Upload the sample audio file to the S3 bucket AudioRawBucket.

The transcription process is asynchronous, so it can take a few minutes for the job to complete. You can check the job status on the Amazon Transcribe console and CloudWatch console.

When the transcription job is complete and the Athena table transcribe_data has been created, you can run Athena queries to verify the transcription output. See the following select statement:

select * from "default"."transcribe_data" order by 1,2

The following table shows the output of the preceding select statement.

| audio_transcribe_job | time_start | speaker | speaker_text |
| --- | --- | --- | --- |
| medical-diarization.wav | 0:00:01 | spk_0 | Hey, Jane. So what brings you into my office today? |
| medical-diarization.wav | 0:00:03 | spk_1 | Hey, Dr Michaels. Good to see you. I’m just coming in from a routine checkup. |
| medical-diarization.wav | 0:00:07 | spk_0 | All right, let’s see, I last saw you. About what, Like a year ago. And at that time, I think you were having some minor headaches. I don’t recall prescribing anything, and we said we’d maintain some observations unless things were getting worse. |
| medical-diarization.wav | 0:00:20 | spk_1 | That’s right. Actually, the headaches have gone away. I think getting more sleep with super helpful. I’ve also been more careful about my water intake throughout my work day. |
| medical-diarization.wav | 0:00:29 | spk_0 | Yeah, I’m not surprised at all. Sleep deprivation and chronic dehydration or to common contributors to potential headaches. Rest is definitely vital when you become dehydrated. Also, your brain tissue loses water, causing your brain to shrink and, you know, kind of pull away from the skull. And this contributor, the pain receptors around the brain, giving you the sensation of a headache. So how much water are you roughly taking in each day |
| medical-diarization.wav | 0:00:52 | spk_1 | of? I’ve become obsessed with drinking enough water. I have one of those fancy water bottles that have graduated markers on the side. I’ve also been logging my water intake pretty regularly on average. Drink about three litres a day. |
| medical-diarization.wav | 0:01:06 | spk_0 | That’s excellent. Before I start the routine physical exam is there anything else you like me to know? Anything you like to share? What else has been bothering you? |
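The same table can also be queried programmatically. As a sketch, assuming boto3's Athena client, the helpers below build a per-speaker summary query and the keyword arguments for `start_query_execution`. The helper names and the results location `s3://your-athena-results-bucket/` are hypothetical placeholders; Athena needs an output location unless the workgroup already defines one.

```python
def speaker_turns_query(job_name: str) -> str:
    """Count rows (speaker turns) per speaker for one transcription job."""
    # Double any single quotes so the job name is a safe SQL string literal.
    literal = job_name.replace("'", "''")
    return (
        'SELECT speaker, count(*) AS turns FROM "default"."transcribe_data" '
        f"WHERE audio_transcribe_job = '{literal}' "
        "GROUP BY speaker ORDER BY speaker"
    )


def athena_request(sql: str, output_location: str, database: str = "default") -> dict:
    """Keyword arguments for boto3's athena.start_query_execution (assumed client)."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        "ResultConfiguration": {"OutputLocation": output_location},
    }
```

For example, `boto3.client("athena").start_query_execution(**athena_request(speaker_turns_query("medical-diarization.wav"), "s3://your-athena-results-bucket/"))` returns a `QueryExecutionId` that can be polled with `get_query_execution` until the query finishes.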

Cleaning up

To avoid incurring additional charges, complete the following steps to clean up your resources when you are done with the solution:

- Delete the Athena table transcribe_data from the default database.

- Delete the prefixes and objects you created in the buckets AudioRawBucket and AudioPrcsdBucket.

- Delete the CloudFormation stack, which removes your additional resources.

Conclusion

In this post, we walked through the solution, reviewed a sample implementation of audio file conversion using Amazon S3, Amazon Transcribe, Amazon SQS, Lambda, and Athena, and validated the steps for processing and analyzing multi-speaker audio files.

You can further extend this solution to perform sentiment analytics and improve your customer experience. For more information, see Detect sentiment from customer reviews using Amazon Comprehend. For more information about live call and post-call analytics, see AWS announces AWS Contact Center Intelligence solutions.

About the Authors

Mahendar Gajula is a Big Data Consultant at AWS. He works with AWS customers in their journey to the cloud with a focus on Big data, Data warehouse and AI/ML projects. In his spare time, he enjoys playing tennis and spending time with his family.

Rajarao Vijjapu is a data architect with AWS. He works with AWS customers and partners to provide guidance and technical assistance about Big Data, Analytics, AI/ML and Security projects, helping them improve the value of their solutions when using AWS.

Paloma Pineda is a Product Marketing Manager for AWS Artificial Intelligence Devices. She is passionate about the intersection of technology, art, and human centered design. Out of the office, Paloma enjoys photography, watching foreign films, and cooking French cuisine.

Vaibhav Sethi is the Product Manager for Amazon Personalize. He focuses on delivering products that make it easier to build machine learning solutions. In his spare time, he enjoys hiking and reading.