This is a guest post by Neslihan Erdoğan, Global Industrial IT Manager at HAYAT HOLDING.

With the ongoing digitization of the manufacturing processes and Industry 4.0, there is enormous potential to use machine learning (ML) for quality prediction. Process manufacturing is a production method that uses formulas or recipes to produce goods by combining ingredients or raw materials.

Predictive quality comprises the use of ML methods in production to estimate and classify product-related quality based on manufacturing process data, with the following goals [1]:

- Quality description – The identification of relationships between process variables and product quality. For instance, how does the volume of an adhesive ingredient affect quality parameters such as strength and elasticity?

- Quality prediction – The estimation of a quality variable on the basis of process variables for decision support or for automation. For example, how many kg/m3 of an adhesive ingredient should be applied to achieve a certain strength and elasticity?

- Quality classification – In addition to quality prediction, this involves estimation of certain product quality types.

In this post, we share how HAYAT HOLDING—a global player with 41 companies operating in different industries, including HAYAT, the world’s fourth-largest branded diaper manufacturer, and KEAS, the world’s fifth-largest wood-based panel manufacturer—collaborated with AWS to build a solution that uses Amazon SageMaker Model Training, Amazon SageMaker Automatic Model Tuning, and Amazon SageMaker Model Deployment to continuously improve operational performance, increase product quality, and optimize manufacturing output of medium-density fiberboard (MDF) wood panels.

Field experts can observe product quality predictions and adhesive consumption recommendations through dashboards in near-real time, resulting in a faster feedback loop. Laboratory results indicate a significant impact, equating to savings of $300,000 annually, and a smaller carbon footprint in production because unnecessary chemical waste is prevented.

ML-based predictive quality in HAYAT HOLDING

HAYAT is the world’s fourth-largest branded baby diaper manufacturer and the largest paper tissue manufacturer in EMEA. KEAS (Kastamonu Entegre Ağaç Sanayi) is a subsidiary of HAYAT HOLDING that produces wood-based panels, and it ranks fourth in Europe and fifth in the world.

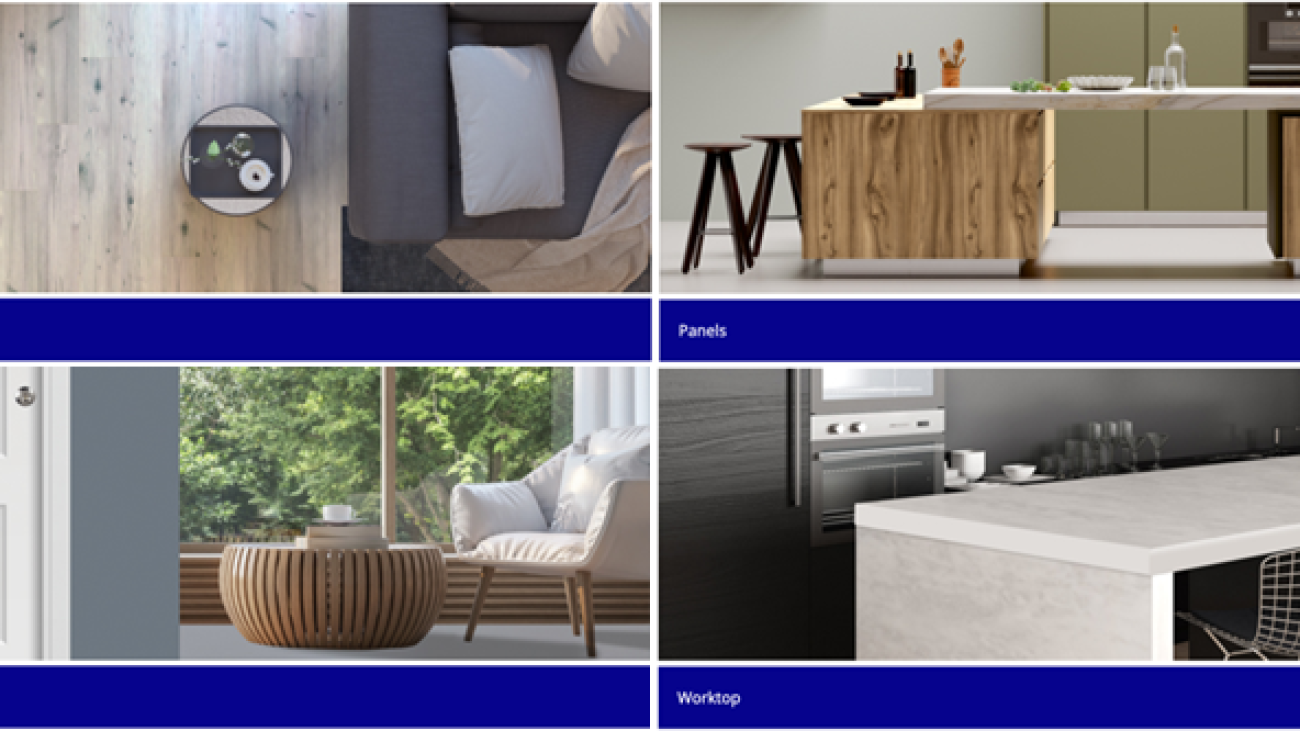

Medium-density fiberboard (MDF) is an engineered wood product made by breaking down wood residuals into fibers, combining them with adhesives, and forming them into panels by applying high temperature and pressure. It has many application areas, such as furniture, cabinetry, and flooring.

Production of MDF wood panels requires extensive use of adhesives (double-digit tons consumed each year at HAYAT HOLDING).

In a typical production line, hundreds of sensors are used, and product quality is characterized by tens of parameters. The volume of adhesive applied is an important cost item as well as an important factor in the quality of the produced panel, affecting properties such as density, screw holding ability, tensile strength, modulus of elasticity, and bending strength. Excessive use of glue increases production costs unnecessarily, whereas too little glue causes quality problems. Incorrect usage can cost up to tens of thousands of dollars in a single shift. The challenge is that there is a regressive dependency of quality on the production process.

Human operators decide on the amount of glue to be used based on domain expertise. This know-how is purely empirical, and it takes years of experience to build competence. To support the operator’s decision-making, laboratory tests are performed on selected samples to precisely measure quality during production. The lab results give operators feedback on product quality levels. However, lab tests are not performed in real time, and results arrive with a delay of up to several hours. The human operator uses lab results to gradually adjust glue consumption to achieve the required quality threshold.

Overview of solution

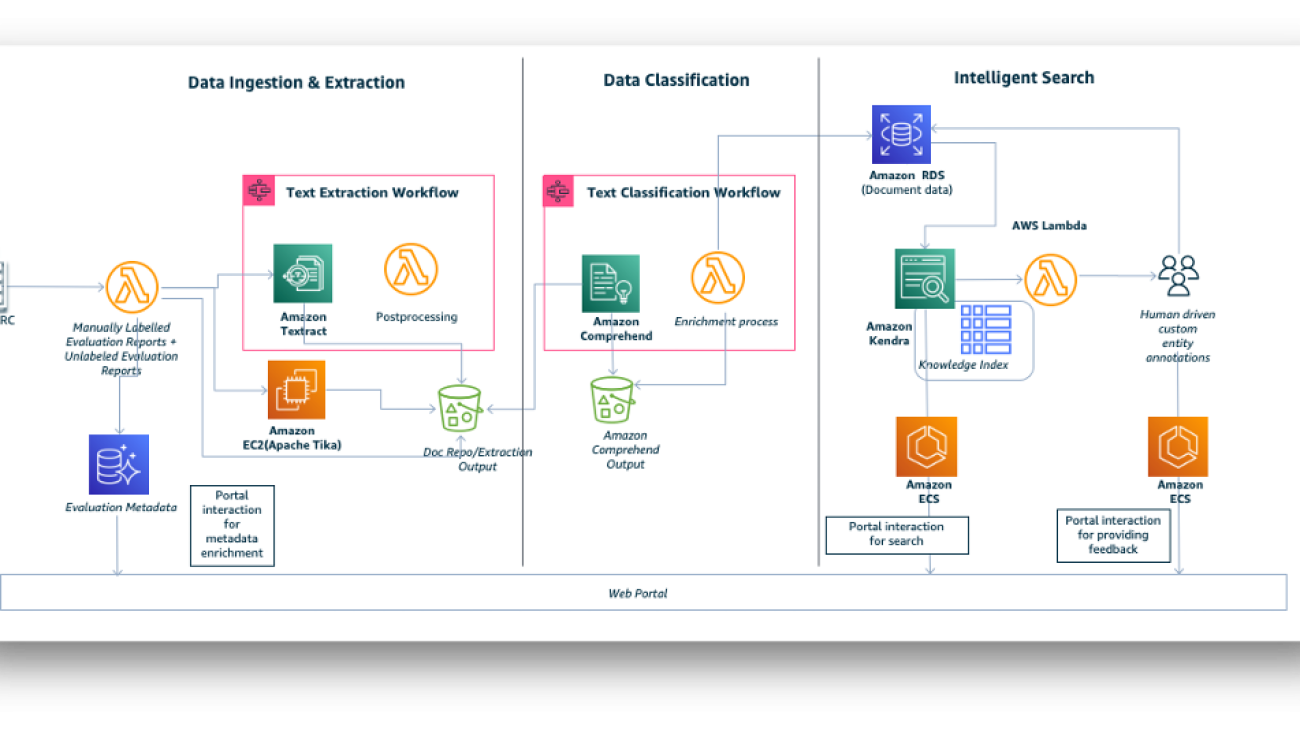

Quality prediction using ML is powerful but requires effort and skill to design, integrate with the manufacturing process, and maintain. With the support of AWS Prototyping specialists and AWS Partner Deloitte, HAYAT HOLDING built an end-to-end pipeline as follows:

- Ingest sensor data from production plant to AWS

- Perform data preparation and ML model generation

- Deploy models at the edge

- Create operator dashboards

- Orchestrate the workflow

The diagram at the start of this section illustrates the solution architecture.

Data ingestion

HAYAT HOLDING has a state-of-the-art infrastructure for acquiring, recording, analyzing, and processing measurement data.

Two types of data sources exist for this use case. Process parameters are set for the production of a particular product and are usually not changed during production. Sensor data is taken during the manufacturing process and represents the actual condition of the machine.

Input data is streamed from the plant via OPC-UA through SiteWise Edge Gateway in AWS IoT Greengrass. In total, 194 sensors were imported and used to increase the accuracy of the predictions.

Model training and optimization with SageMaker automatic model tuning

Prior to model training, a set of data preparation activities is performed. For instance, an MDF panel plant produces multiple distinct products on the same production line (multiple types and sizes of wood panels). Each batch is associated with a different product, with different raw materials and different physical characteristics. Although the equipment and process time series are recorded continuously and can be seen as a single-flow time series indexed by time, they need to be segmented by the batch they are associated with. For instance, in a shift, product panels may be produced for different durations. A sample of the produced MDF is sent to the laboratory for quality tests from time to time. Other feature engineering tasks include feature reduction, scaling, unsupervised dimensionality reduction using PCA (Principal Component Analysis), feature importance, and outlier detection.
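The batch segmentation step described above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline HAYAT HOLDING built: the record layout `(timestamp, batch_id, press_temperature)`, the sample values, and the function name `segment_by_batch` are all assumptions made for the example.

```python
from itertools import groupby
from statistics import mean

# Hypothetical sensor readings: (timestamp, batch_id, press_temperature).
# Real data would come from the plant's sensors; these values are made up.
readings = [
    (0, "batch-A", 180.0),
    (1, "batch-A", 182.0),
    (2, "batch-B", 175.0),
    (3, "batch-B", 177.0),
    (4, "batch-B", 176.0),
    (5, "batch-A", 181.0),
]


def segment_by_batch(rows):
    """Split a time-ordered stream into contiguous runs that share a batch ID."""
    segments = []
    # groupby only merges adjacent rows, so a batch that resumes later
    # in the shift becomes its own segment.
    for batch_id, run in groupby(rows, key=lambda row: row[1]):
        run = list(run)
        segments.append({
            "batch_id": batch_id,
            "start": run[0][0],
            "end": run[-1][0],
            "mean_temperature": mean(value for _, _, value in run),
        })
    return segments


segments = segment_by_batch(readings)
for segment in segments:
    print(segment)
```

Each segment can then be joined with the lab result of the sample taken from that batch, which gives one labeled training row per batch run.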

After the data preparation phase, a two-stage approach is used to build the ML models. Lab test samples are taken by intermittent random product sampling from the conveyor belt and sent to a laboratory for quality tests. Because the lab results can’t be presented in real time, the feedback loop is relatively slow. The first model is trained to predict lab results for product quality parameters: density, elasticity, pulling resistance, swelling, absorbed water, surface durability, moisture, surface suction, and bending resistance. The second model is trained to recommend the amount of glue to be used in production, depending on the predicted output quality.

Setting up and managing custom ML environments can be time-consuming and cumbersome. Amazon SageMaker provides a suite of built-in algorithms, pre-trained models, and pre-built solution templates to help data scientists and ML practitioners get started on training and deploying ML models quickly.

Multiple ML models were trained using SageMaker built-in algorithms for the top N most produced product types and for different quality parameters. The quality prediction models identify the relationships between glue usage and nine quality parameters. The recommendation models predict the minimum glue usage that satisfies the quality requirements using the following approach: an algorithm starts from the highest allowed glue amount and reduces it step by step, as long as all requirements remain satisfied, until it reaches the minimum allowed amount. If the maximum amount of glue doesn’t satisfy all the requirements, it returns an error.
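The step-down search described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the production code: `toy_quality_model` stands in for the trained quality prediction model, every requirement is treated as a lower bound (a real parameter such as swelling would more likely have an upper bound), and all names, units, and numbers are hypothetical.

```python
def meets(predicted, requirements):
    """True if every predicted quality value reaches its required minimum."""
    return all(predicted[name] >= minimum for name, minimum in requirements.items())


def recommend_glue(predict_quality, requirements, max_glue, min_glue, step):
    """Search downward from max_glue and return the lowest glue amount
    whose predicted quality still satisfies every requirement."""
    if not meets(predict_quality(max_glue), requirements):
        raise ValueError("Maximum allowed glue amount does not satisfy the requirements")
    best = max_glue
    candidate = max_glue - step
    # Keep reducing while the requirements hold and the lower limit is respected.
    while candidate >= min_glue and meets(predict_quality(candidate), requirements):
        best = candidate
        candidate -= step
    return best


def toy_quality_model(glue):
    """Hypothetical stand-in for the quality model: more glue, higher quality."""
    return {"bending_strength": 0.5 * glue, "density": 700 + glue}


requirements = {"bending_strength": 25.0, "density": 745}
recommended = recommend_glue(toy_quality_model, requirements,
                             max_glue=60, min_glue=40, step=2)
print(recommended)
```

Integer glue amounts are used here so that repeated subtraction of the step does not accumulate floating-point error; a real implementation would pick the unit and step size that match the dosing equipment.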

SageMaker automatic model tuning, also known as hyperparameter tuning, finds the best version of a model by running many training jobs on your dataset using the algorithm and ranges of hyperparameters that you specify. It then chooses the hyperparameter values that result in a model that performs the best, as measured by a metric that you choose.

With automatic model tuning, the team focused on defining the right objective and scoping the hyperparameters and the search space. Automatic model tuning takes care of the rest, including the infrastructure, running and orchestrating training jobs in parallel, and improving hyperparameter selection. Automatic model tuning provides a wide range of training instance types. The model was fine-tuned on c5.2xlarge instance types using an intelligent hyperparameter tuning method that is based on Bayesian search and is designed to find the best model in the shortest time.
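To make "objective, hyperparameters, and search space" concrete, the following sketch builds the kind of tuning configuration that the boto3 SageMaker client accepts as the `HyperParameterTuningJobConfig` argument of `create_hyper_parameter_tuning_job`. The post does not say which built-in algorithm, metric, or ranges the team used, so the XGBoost-style hyperparameters (`eta`, `max_depth`), the `validation:rmse` objective, and the job limits below are assumptions chosen only for illustration.

```python
def build_tuning_config(max_jobs=20, max_parallel_jobs=4):
    """Build a Bayesian tuning configuration in the dict shape used by boto3."""
    if max_parallel_jobs > max_jobs:
        raise ValueError("max_parallel_jobs cannot exceed max_jobs")
    return {
        # Bayesian search uses results of finished jobs to pick the next candidates.
        "Strategy": "Bayesian",
        # Assumed objective: minimize validation RMSE of a regression model.
        "HyperParameterTuningJobObjective": {
            "Type": "Minimize",
            "MetricName": "validation:rmse",
        },
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel_jobs,
        },
        # Assumed search space; range bounds are passed as strings.
        "ParameterRanges": {
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10"},
            ],
        },
    }


tuning_config = build_tuning_config(max_jobs=20, max_parallel_jobs=4)
print(tuning_config["Strategy"], tuning_config["ResourceLimits"])
```

The configuration is only half of a tuning request; the training job definition (algorithm image, input data, instance type, and output location) is supplied separately in the same API call.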

Inference at the edge

Multiple methods are available for deploying ML models to get predictions.

SageMaker real-time inference is ideal for workloads where there are real-time, interactive, low-latency requirements. During the prototyping phase, HAYAT HOLDING deployed models to SageMaker hosting services and got endpoints that are fully managed by AWS. SageMaker multi-model endpoints provide a scalable and cost-effective solution for deploying large numbers of models. They use the same fleet of resources and a shared serving container to host all your models. This reduces hosting costs by improving endpoint utilization compared with using single-model endpoints. It also reduces deployment overhead because SageMaker manages loading models in memory and scaling them based on the traffic patterns to your endpoint.

SageMaker real-time inference is used with multi-model endpoints for cost optimization and for making all models available at all times during development. Although using an ML model for each product type results in higher inference accuracy, the cost of developing and testing these models increases accordingly, and it also becomes difficult to manage multiple models. SageMaker multi-model endpoints address these pain points and give the team a rapid and cost-effective solution to deploy multiple ML models.
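With a multi-model endpoint, the caller selects the model per request through the `TargetModel` parameter of the SageMaker runtime `invoke_endpoint` call. The sketch below only builds the request arguments so that it runs without AWS credentials; the endpoint name, the product types, the artifact paths, and the feature values are hypothetical.

```python
# Hypothetical mapping from product type to the model artifact stored
# under the multi-model endpoint's S3 prefix.
MODEL_BY_PRODUCT = {
    "mdf-18mm": "mdf-18mm/model.tar.gz",
    "mdf-8mm": "mdf-8mm/model.tar.gz",
}


def build_invoke_request(endpoint_name, product_type, features):
    """Build keyword arguments for the SageMaker runtime invoke_endpoint call."""
    return {
        "EndpointName": endpoint_name,
        # TargetModel tells the shared container which artifact to load and use.
        "TargetModel": MODEL_BY_PRODUCT[product_type],
        "ContentType": "text/csv",
        "Body": ",".join(str(value) for value in features),
    }


request = build_invoke_request("quality-mme", "mdf-18mm", [181.5, 52.0, 7.3])
print(request)

# With AWS credentials configured, the request could be sent with:
#   boto3.client("sagemaker-runtime").invoke_endpoint(**request)
```

Keeping one artifact per product type behind a single endpoint matches the trade-off described above: per-product accuracy without a separate endpoint for every model.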

Amazon SageMaker Edge provides model management for edge devices so you can optimize, secure, monitor, and maintain ML models on fleets of edge devices. Operating ML models on edge devices is challenging, because devices, unlike cloud instances, have limited compute, memory, and connectivity. After a model is deployed, you need to monitor it continuously, because model drift can cause the quality of the model to decay over time. Monitoring models across your device fleets is difficult because you need to write custom code to collect data samples from your devices and recognize skew in predictions.
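As a rough idea of what "recognizing skew in predictions" can mean, the following sketch flags drift when the mean of recent predictions moves too far from a baseline. It is a deliberately simple check written for illustration, not the mechanism SageMaker Edge uses; the function name, the threshold, and the sample density values are assumptions.

```python
from statistics import mean, stdev


def prediction_drift(baseline, recent, threshold=3.0):
    """Flag drift when the mean of recent predictions deviates from the
    baseline mean by more than `threshold` standard errors."""
    standard_error = stdev(baseline) / len(recent) ** 0.5
    z_score = (mean(recent) - mean(baseline)) / standard_error
    return abs(z_score) > threshold, z_score


# Hypothetical predicted densities collected when the model was validated.
baseline_predictions = [740, 745, 750, 755, 760]

stable, _ = prediction_drift(baseline_predictions, [748, 752, 750, 751])
drifted, _ = prediction_drift(baseline_predictions, [770, 772, 768, 771])
print(stable, drifted)
```

A shift in predictions does not prove the model is wrong, because the product mix may simply have changed, so a flag like this is best treated as a prompt to compare against fresh lab results.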

For production, the SageMaker Edge Manager agent is used to make predictions with models loaded onto an AWS IoT Greengrass device.

Conclusion

HAYAT HOLDING was evaluating an advanced analytics platform as part of their digital transformation strategy and wanted to bring AI to the organization for quality prediction in production.

With the support of AWS Prototyping specialists and AWS Partner Deloitte, HAYAT HOLDING built a unique data platform architecture and an ML pipeline to address long-term business and technical needs.

HAYAT KIMYA integrated the ML solution in one of its plants. Laboratory results indicate a significant impact, equating to savings of $300,000 annually, and a smaller carbon footprint in production because unnecessary chemical waste is prevented. The solution provides a faster feedback loop to the human operators by presenting product quality predictions and adhesive consumption recommendations through dashboards in near-real time. The solution will eventually be deployed across HAYAT HOLDING’s other wood panel plants.

ML is a highly iterative process; over the course of a single project, data scientists train hundreds of different models, datasets, and parameters in search of maximum accuracy. SageMaker offers the most complete set of tools to harness the power of ML. It lets you organize, track, compare, and evaluate ML experiments at scale. You can boost the bottom-line impact of your ML teams to achieve significant productivity improvements using SageMaker built-in algorithms, automatic model tuning, real-time inference, and multi-model endpoints.

Accelerate time to results and optimize operations by modernizing your business approach from edge to cloud using Machine Learning on AWS. Take advantage of industry-specific innovations and solutions using AWS for Industrial.

Share your feedback and questions in the comments.

About HAYAT HOLDING

HAYAT HOLDING, whose foundations were laid in 1937, is a global player today, with 41 companies operating in different industries, including HAYAT in the fast-moving consumer goods sector, KEAS (Kastamonu Entegre Ağaç Sanayi) in the wood-based panel sector, and LIMAS in the port management sector, with a workforce of over 17,000 people. HAYAT HOLDING delivers 49 brands produced with advanced technologies in 36 production facilities in 13 countries to millions of consumers worldwide.

Operating in the fast-moving consumer goods sector, Hayat was founded in 1987. Today, rapidly advancing on the path of globalization with 21 production facilities in 8 countries around the world, Hayat is the world’s fourth-largest branded diaper manufacturer and the largest tissue producer in the Middle East, Eastern Europe, and Africa, and a major player in the fast-moving consumer goods sector. With its 16 powerful brands, including Molfix, Bebem, Molped, Joly, Bingo, Test, Has, Papia, Familia, Teno, Focus, Nelex, Goodcare, and Evony in the hygiene, home care, tissue, and personal health categories, Hayat brings HAYAT* to millions of homes in more than 100 countries.

Kastamonu Entegre Ağaç Sanayi (KEAS), the first investment of HAYAT HOLDING in its industrialization move, was founded in 1969. Continuing its uninterrupted growth towards becoming a global power in its sector, it ranks fourth in Europe and fifth in the world. KEAS ranks first in the industry with its approximately 7,000 employees and exports to more than 100 countries.

*“Hayat” means “life” in Turkish.

References

- Tercan H, “Machine learning and deep learning based predictive quality in manufacturing: a systematic review”, Journal of Intelligent Manufacturing, 2022.

About the authors

Neslihan Erdoğan (BSc and MSc in Electrical Engineering) has held various technical and business roles as a specialist, architect, and manager in information technologies. She works at HAYAT as the Global Industrial IT Manager and has led Industry 4.0, digital transformation, OT security, and data and AI projects.

Çağrı Yurtseven (BSc in Electrical-Electronics Engineering, Bogazici University) is the Enterprise Account Manager at Amazon Web Services. He leads sustainability and industrial IoT initiatives in Turkey while helping customers realize their full potential by showing the art of the possible on AWS.

Cenk Sezgin (PhD – Electrical Electronics Engineering) is a Principal Manager at AWS EMEA Prototyping Labs. He supports customers with exploration, ideation, engineering and development of state-of-the-art solutions using emerging technologies such as IoT, Analytics, AI/ML & Serverless.

Hasan-Basri AKIRMAK (BSc and MSc in Computer Engineering and Executive MBA in Graduate School of Business) is a Principal Solutions Architect at Amazon Web Services. He is a business technologist advising enterprise segment clients. His area of specialty is designing architectures and business cases for large-scale data processing systems and machine learning solutions. Hasan has delivered business development, systems integration, and program management for clients in Europe, the Middle East, and Africa. Since 2016, he has mentored hundreds of entrepreneurs at startup incubation programs pro bono.

Mustafa Aldemir (BSc in Electrical-Electronics Engineering, MSc in Mechatronics and PhD-candidate in Computer Science) is the Robotics Prototyping Lead at Amazon Web Services. He has been designing and developing Internet of Things and Machine Learning solutions for some of the biggest customers across EMEA and leading their teams in implementing them. Meanwhile, he has been delivering AI courses at Amazon Machine Learning University and Oxford University.

Shyam Srinivasan is on the AWS low-code/no-code ML product team. He cares about making the world a better place through technology and loves being part of this journey. In his spare time, Shyam likes to run long distances, travel around the world, and experience new cultures with family and friends.

Oscar A. Garcia is the Director of the Independent Evaluation Office (IEO) of the United Nations Development Program (UNDP). As Director, he provides strategic direction, thought leadership, and credible evaluations to advance UNDP work in helping countries progress towards national SDG achievement. Oscar also currently serves as the Chairperson of the United Nations Evaluation Group (UNEG). He has more than 25 years of experience in areas of strategic planning, evaluation, and results-based management for sustainable development. Prior to joining the IEO as Director in 2020, he served as Director of IFAD’s Independent Office of Evaluation (IOE), and Head of Advisory Services for Green Economy, UNEP. Oscar has authored books and articles on development evaluation, including one on information and communication technology for evaluation. He is an economist with a master’s degree in Organizational Change Management, New School University (NY), and an MBA from Bolivian Catholic University, in association with the Harvard Institute for International Development.

Sathya Balakrishnan is a Sr. Customer Delivery Architect in the Professional Services team at AWS, specializing in data and ML solutions. He works with US federal financial clients. He is passionate about building pragmatic solutions to solve customers’ business problems. In his spare time, he enjoys watching movies and hiking with his family.

Thuan Tran is a Senior Solutions Architect in the World Wide Public Sector supporting the United Nations. He is passionate about using AWS technology to help customers conceptualize the art of the possible. In his spare time, he enjoys surfing, mountain biking, axe throwing, and spending time with family and friends.

Prince Mallari is an NLP Data Scientist in the Professional Services team at AWS, specializing in applications of NLP for public sector customers. He is passionate about using ML as a tool to allow customers to be more productive. In his spare time, he enjoys playing video games and developing one with his friends.