Today we’re sitting down with Peter Lee, head of Microsoft Research. Peter and a number of MSR colleagues, including myself, have had the privilege of working to evaluate and experiment with GPT-4 and support its integration into Microsoft products.

Peter has also deeply explored the potential application of GPT-4 in health care, where its powerful reasoning and language capabilities could make it a useful copilot for practitioners in patient interaction, managing paperwork, and many other tasks.

Welcome to AI Frontiers.

[MUSIC FADES]

I’m going to jump right in here, Peter. So you and I have known each other now for a few years. And one of the values I believe that you and I share is around societal impact and in particular creating spaces and opportunities where science and technology research can have the maximum benefit to society. In fact, this shared value is one of the reasons I found coming to Redmond to work with you an exciting prospect.

Now, in preparing for this episode, I listened again to your discussion with our colleague Kevin Scott on his podcast around the idea of research in context. And the world’s changed a little bit since then, and I just wonder how that thought of research in context kind of finds you in the current moment.

Peter Lee: It’s such an important question and, you know, research in context, I think the way I explained it before is about inevitable futures. You try to think about, you know, what will definitely be true about the world at some point in the future. It might be a future just one year from now or maybe 30 years from now. But if you think about that, you know what’s definitely going to be true about the world and then try to work backwards from there.

And I think the example I gave in that podcast with Kevin was, well, 10 years from now, we feel very confident as scientists that cancer will be a largely solved problem. But aging demographics on multiple continents, particularly North America but also Europe and Asia, is going to give huge rise to age-related neurological disease. And so knowing that, that’s a very different world than today, because today most of medical research funding is focused on cancer research, not on neurological disease.

And so what are the implications of that change? And what does that tell us about what kinds of research we should be doing? The research is still very future oriented. You’re looking ahead a decade or more, but it’s situated in the real world. Research in context. And so now if we think about inevitable futures, well, it’s looking increasingly clear that very general forms of artificial intelligence at or potentially beyond human intelligence are inevitable. And maybe very quickly, you know, like in much, much less than 10 years, maybe much less than five years.

And so what are the implications for research and the kinds of research questions and problems we should be thinking about and working on today? That just seems so much more disruptive, so much more profound, and so much more challenging for all of us than the cancer and neurological disease thing, as big as those are.

I was reflecting a little bit through my research career, and I realized I’ve lived through one aspect of this disruption five times before. The first time was when I was still an assistant professor in the late 1980s at Carnegie Mellon University, and, uh, Carnegie Mellon University, as well as several other top universities’, uh, computer science departments, had a lot of, of really fantastic research on 3D computer graphics.

It was really a big deal. And so ideas like ray tracing, radiosity, uh, silicon architectures for accelerating these things were being invented at universities, and there was a big academic conference called SIGGRAPH that would draw hundreds of professors and graduate students, uh, to present their results. And then by the early 1990s, startup companies started taking these research ideas and founding companies to try to make 3D computer graphics real. One notable company that got founded in 1993 was NVIDIA.

You know, over the course of the 1990s, this ended up being a triumph of fundamental computer science research, now to the point where today you literally feel naked and vulnerable if you don’t have a GPU in your pocket. Like if you leave your home, you know, without your mobile phone, uh, it feels bad.

And so what happened is there’s a triumph of computer science research, let’s say in this case in 3D computer graphics, that ultimately resulted in a fundamental infrastructure for life, at least in the developed world. In that transition, which is just a positive outcome of research, it also had some disruptive effect on research.

You know, in 1991, when Microsoft Research was founded, one of the founding research groups was a 3D computer graphics research group that was amongst, uh, the first three research groups for MSR. At Carnegie Mellon University and at Microsoft Research, we don’t have 3D computer graphics research anymore. There had to be a transition and a disruptive impact on researchers who had been building their careers on this. Even with the triumph of things, when you’re talking about the scale of infrastructure for human life, it moves out of the realm completely of fundamental research. And that’s happened with compiler design. That was my, uh, area of research. It’s happened with wireless networking; it’s happened with hypertext and, you know, hyperlinked document research, with operating systems research, and all of these things, you know, have become things that you depend on all day, every day as you go about your life. And they all represent just majestic achievements of computer science research. We are now, I believe, right in the midst of that transition for large language models.

Llorens: I wonder if you see this particular transition, though, as qualitatively different in that those other technologies are ones that blend into the background. You take them for granted. You mentioned that I leave the home every day with a GPU in my pocket, but I don’t think of it that way. Then again, maybe I have some kind of personification of my phone that I’m not thinking of. But certainly, with language models, it’s a foreground effect. And I wonder if, if you see something different there.

Lee: You know, it’s such a good question, and I don’t know the answer to that, but I agree it feels different. I think in terms of the impact on research labs, on academia, on the researchers themselves who have been building careers in this space, the effects might not be that different. But for us, as the consumers and users of this technology, it certainly does feel different. There’s something about these large language models that seems more profound than, let’s say, the movement of pinch-to-zoom UX design, you know, out of academic research labs into, into our pockets. This might get into this big question about, I think, the hardwiring in our brains that when we interact with these large language models, even though we know consciously they aren’t, you know, sentient beings with feelings and emotions, our hardwiring forces us—we can’t resist feeling that way.

I think it’s a, it’s a deep sort of thing that we evolved, you know, in the same way that when we look at an optical illusion, we can be told rationally that it’s an optical illusion, but the hardwiring in our kind of visual perception, just no amount of willpower can overcome, to see past the optical illusion.

And similarly, I think there’s a similar hardwiring that, you know, we are drawn to anthropomorphize these systems, and that does seem to put it into the foreground, as you’ve—as you’ve put it. Yeah, I think for our human experience and our lives, it does seem like it’ll feel—your term is a good one—it’ll feel more in the foreground.

Llorens: Let’s pin some of these, uh, concepts because I think we’ll come back to them. I’d like to turn our attention now to the health aspect of your current endeavors and your path at Microsoft.

You’ve been eloquent about the many challenges around translating frontier AI technologies into the health system and into the health care space in general. In our interview, [LAUGHS] actually, um, when I came here to Redmond, you described the grueling work that would be needed there. I’d like to talk a little bit about those challenges in the context of the emergent capabilities that we’re seeing in GPT-4 and the wave of large-scale AI models that we’re seeing. What’s different about this wave of AI technologies relative to those systemic challenges in, in the health space?

Lee: Yeah, and I think to be really correct and precise about it, we don’t know that GPT-4 will be the difference maker. That still has to be proven. I think it really will, but it, it has to actually happen because we’ve been here before where there’s been so much optimism about how technology can really help health care and advance medicine. And we’ve just been disappointed over and over again. You know, I think that those challenges stem from maybe a little bit of overoptimism or what I call irrational exuberance. As techies, we look at some of the problems in health care and we think, oh, we can solve those. You know, we look at the challenges of reading radiological images and measuring tumor growth, or we look at, uh, the problem of, uh, ranking differential diagnosis options or therapeutic options, or we look at the problem of extracting billing codes out of an unstructured medical note. These are all problems that we think we know how to solve in computer science. And then in the medical community, they look at the technology industry and computer science research, and they’re dazzled by all of the snazzy, impressive-looking AI and machine learning and cloud computing that we have. And so there is this incredible optimism coming from both sides that ends up feeding into overoptimism because the actual challenges of integrating technology into the workflow of health care and medicine, of making sure that it’s safe and sort of getting that workflow altered to really harness the best of the technology capabilities that we have now, end up being really, really difficult.

Furthermore, when we get into actual application of medicine, so that’s in diagnosis and in developing therapeutic pathways, they happen in a really fluid environment, which in a machine learning context involves a lot of confounding factors. And those confounding factors ended up being really important because medicine today is founded on precise understanding of causes and effects, of causal reasoning.

Our best tools right now in machine learning are essentially correlation machines. And as the old saying goes, correlation is not causation. And so if you take a classic example like does smoking cause cancer, it’s very important to take account of the confounding effects and know for certain that there’s a cause-and-effect relationship there. And so there’s always been those sorts of issues.

When we’re talking about GPT-4, I remember I was sitting next to Eric Horvitz the first time it got exposed to me. So Greg Brockman from OpenAI, who’s amazing, and actually his whole team at OpenAI is just spectacularly good. And, uh, Greg was giving a demonstration of an early version of GPT-4 that was codenamed Davinci 3 at the time, and he was showing, as part of the demo, the ability of the system to solve biology problems from the AP biology exam.

And it, you know, gets, I think, a score of 5, the maximum score of 5, on that exam. Of course, the AP exam is this multiple-choice exam, so it was making those multiple choices. But then Greg was able to ask the system to explain itself. How did you come up with that answer? And it would explain, in natural language, its answer. And what jumped out at me was in its explanation, it was using the word “because.”

“Well, I think the answer is C, because, you know, when you look at this aspect, uh, statement of the problem, this causes something else to happen, then that causes some other biological thing to happen, and therefore we can rule out answers A and B and E, and then because of this other factor, we can rule out answer D, and all the causes and effects line up.”

And so I turned immediately to Eric Horvitz, who was sitting next to me, and I said, “Eric, where is that cause-and-effect analysis coming from? This is just a large language model. This should be impossible.” And Eric just looked at me, and he just shook his head and he said, “I have no idea.” And it was just this mysterious thing.

And so that is just one of a hundred aspects of GPT-4 that we’ve been studying over the past now more than half a year that seemed to overcome some of the things that have been blockers to the integration of machine intelligence in health care and medicine, like the ability to actually reason and explain its reasoning in these medical scenarios, in medical terms, and that plus its generality just seems to give us just a lot more optimism that this could finally be the very significant difference maker.

The other aspect is that we don’t have to focus squarely on that clinical application. We’ve discovered that, wow, this thing is really good at filling out forms and reducing paperwork burden. It knows how to apply for prior authorization for health care reimbursement. That’s part of the crushing kind of administrative and clerical burden that doctors are under right now.

This thing just seems to be great at that. And that doesn’t really impinge on life-or-death diagnostic or therapeutic decisions. But they happen in the back office. And those back-office functions, again, are bread and butter for Microsoft’s businesses. We know how to interact and sell and deploy technologies there, and so working with OpenAI, it seems like, again, there’s just a ton of reason why we think that it could really make a big difference.

Llorens: Every new technology has opportunities and risks associated with it. This new class of AI models and systems, you know, they’re fundamentally different because they’re not learning, uh, specialized function mapping. There were many open problems on even that kind of machine learning in various applications, and there still are, but instead, it’s—it’s got this general-purpose kind of quality to it. How do you see both the opportunities and the risks associated with this kind of general-purpose technology in the context of, of health care, for example?

Lee: Well, I think one thing that has drawn an unfortunate amount of social media and public media attention is those times when the system hallucinates or goes off the rails. So hallucination is actually a term which isn’t a very nice term. It really, for listeners who aren’t familiar with the idea, is the problem that GPT-4 and other similar systems can have sometimes where they, uh, make stuff up, fabricate, uh, information.

You know, over the many months now that we’ve been working on this, uh, we’ve witnessed the steady evolution of GPT-4, and it hallucinates less and less. But what we’ve also come to understand is that it seems that that tendency is also related to GPT-4’s ability to be creative, to make informed, educated guesses, to engage in intelligent speculation.

And if you think about the practice of medicine, in many situations, that’s what doctors and nurses are doing. And so there’s sort of a fine line here in the desire to make sure that this thing doesn’t make mistakes versus its ability to operate in problem-solving scenarios that—the way I would put it is—for the first time, we have an AI system where you can ask it questions that don’t have any known answer. It turns out that that’s incredibly useful. But now the question is—and the risk is—can you trust the answers that you get? One of the things that happens is GPT-4 has some limitations, particularly that can be exposed fairly easily in mathematics. It seems to be very good at, say, differential equations and calculus at a basic level, but I have found that it makes some strange and elementary errors in basic statistics.

There’s an example from my colleague at Harvard Medical School, Zak Kohane, uh, where he uses standard Pearson correlation kinds of math problems, and it seems to consistently forget to square a term and—and make a mistake. And then what is interesting is when you point out the mistake to GPT-4, its first impulse sometimes is to say, “Uh, no, I didn’t make a mistake; you made a mistake.” Now that tendency to kind of accuse the user of making the mistake, it doesn’t happen so much anymore as the system has improved, but we still in many medical scenarios where there’s this kind of problem-solving have gotten in the habit of having a second instance of GPT-4 look over the work of the first one because it seems to be less attached to its own answers that way and it spots errors very readily.

So that whole story is a long-winded way of saying that there are risks because we’re asking this AI system for the first time to tackle problems that require some speculation, require some guessing, and may not have precise answers. That’s what medicine is at core. Now the question is to what extent can we trust the thing, but also, what are the techniques for making sure that the answers are as good as possible. So one technique that we’ve fallen into the habit of is having a second instance. And, by the way, that second instance ends up really being useful for detecting errors made by the human doctor, as well, because that second instance doesn’t care whether the answers were produced by man or machine. And so that ends up being important. But now moving away from that, there are bigger questions that—as you and I have discussed a lot, Ashley, at work—pertain to this phrase responsible AI, uh, which has been a research area in computer science research. And that term, I think you and I have discussed, doesn’t feel apt anymore.

I don’t know if it should be called societal AI or something like that. And I know you have opinions about this. You know, it’s not just errors and correctness. It’s not just the possibility that these things might be goaded into saying something harmful or promoting misinformation, but there are bigger issues about regulation; about job displacements, perhaps at societal scale; about new digital divides; about haves and have-nots with respect to access to these things. And so there are now these bigger looming issues that pertain to the idea of risks of these things, and they affect medicine and health care directly, as well.

Llorens: Certainly, this matter of trust is multifaceted. You know, there’s trust at the level of institutions, and then there’s trust at the level of individual human beings that need to make decisions, tough decisions, you know—where, when, and if to use an AI technology in the context of a workflow. What do you see in terms of health care professionals making those kinds of decisions? Any barriers to adoption that you would see at the level of those kinds of independent decisions? And what’s the way forward there?

Lee: That’s the crucial question of today right now. There is a lot of discussion about to what extent and how should, for medical uses, how should GPT-4 and its ilk be regulated. Let’s just take the United States context, but there are similar discussions in the UK, Europe, Brazil, Asia, China, and so on.

In the United States, there’s a regulatory agency, the Food and Drug Administration, the FDA, and they actually have authority to regulate medical devices. And there’s a category of medical devices called SaMDs, software as a medical device, and the big discussion really over the past, I would say, four or five years has been how to regulate SaMDs that are based on machine learning, or AI. Steadily, there’s been, uh, more and more approval by the FDA of medical devices that use machine learning, and I think the FDA and the United States have been getting closer and closer to actually having a fairly, uh, solid framework for validating ML-based medical devices for clinical use. As far as we’ve been able to tell, those emerging frameworks don’t apply at all to GPT-4. The methods for doing the clinical validation do not make sense and don’t work for GPT-4.

And so a first question to ask is—even before you get to, should this thing be regulated?—is if you were to regulate it, how on earth would you do it. Uh, because it’s basically putting a doctor’s brain in a box. And so, Ashley, if I put a doctor—let’s take our colleague Jim Weinstein, you know, a great spine surgeon. If we put his brain in a box and I give it to you and ask you, “Please validate this thing,” how on earth do you think about that? What’s the framework for that? And so my conclusion in all of this—it’s possible that regulators will react and impose some rules, but I think it would be a mistake, because I think my fundamental conclusion of all this is that at least for the time being, the rules of application engagement have to apply to human beings, not to the machines.

Now the question is what should doctors and nurses and, you know, receptionists and insurance adjusters, and all of the people involved, you know, hospital administrators, what are their guidelines and what is and isn’t appropriate use of these things. And I think that those decisions are not a matter for the regulators, but that the medical community itself should take ownership of the development of those guidelines and those rules of engagement and encourage, and if necessary, find ways to impose—maybe through medical licensing and other certification—adherence to those things.

That’s where we’re at today. Someday in the future—and we would encourage and in fact we are actively encouraging universities to create research projects that would try to explore frameworks for clinical validation of a brain in a box, and if those research projects bear fruit, then they might end up informing and creating a foundation for regulators like the FDA to have a new form of medical device. I don’t know what you would call it, AI MD, maybe, where you could actually relieve some of the burden from human beings and instead have a version of some sense of a validated, certified brain in a box. But until we get there, you know, I think it’s—it’s really on human beings to kind of develop and monitor and enforce their own behavior.

Llorens: I think some of these questions around test and evaluation, around assurance, are at least as interesting as, [LAUGHS] you know—doing research in that space is going to be at least as interesting as—as creating the models themselves, for sure.

Lee: Yes. By the way, I want to take this opportunity just to commend Sam Altman and the OpenAI folks. I feel like, uh, you and I and other colleagues here at Microsoft Research, we’re in an extremely privileged position to get very early access, specifically to try to flesh out and get some early understanding of the implications for really critical areas of human development like health and medicine, education, and so on.

The instigator was really Sam Altman and crew at OpenAI. They saw the need for this, and they really engaged with us at Microsoft Research to kind of dive deep, and they gave us a lot of latitude to kind of explore deeply in as kind of honest and unvarnished a way as possible, and I think it’s important, and I’m hoping that as we share this with the world, that—that there can be an informed discussion and debate about things. I think it would be a mistake for, say, regulators or anyone to overreact at this point. This needs study. It needs debate. It needs kind of careful consideration, uh, just to understand what we’re dealing with here.

Llorens: Yeah, what a—what a privilege it’s been to be anywhere near the epicenter of these—of these advancements. Just briefly back to this idea of a brain in a box. One of the super interesting aspects of that is it’s not a human brain, right? So some of what we might intuitively think about when you say brain in the box doesn’t really apply, and it gets back to this notion of test and evaluation in that if I give a licensing exam, say, to the brain in the box and it passes it with flying colors, had that been a human, there would have been other things about the intelligence of that entity that are underlying assumptions that are not explicitly tested in that test that then those combined with the knowledge required for the certification makes you fit to do some job. It’s just interesting; there are ways in which the brain that we can currently conceive of as being an AI in that box underperforms human intelligence in some ways and overperforms it in others.

Lee: Right.

Llorens: Verifying and assuring that brain in that—that box I think is going to be just a really interesting challenge.

Lee: Yeah. Let me acknowledge that there are probably going to be a lot of listeners to this podcast who will really object to the idea of “brain in the box” because it crosses the line of kind of anthropomorphizing these systems. And I acknowledge that, that there’s probably a better way to talk about this than doing that. But I’m intentionally being overdramatic by using that phrase just to drive home the point, what a different beast this is when we’re talking about something like clinical validation. It’s not the kind of narrow AI—it’s not like a machine learning system that gives you a precise signature of a T-cell receptor repertoire. There’s a single right answer to those things. In fact, you can freeze the model weights in that machine learning system as we’ve done collaboratively with Adaptive Biotechnologies in order to get an FDA approval as a medical device, as an SaMD. There’s nothing that is—this is so much more stochastic. The model weights matter, but they’re not the fundamental thing.

There’s an alignment of a self-attention network that is in constant evolution. And you’re right, though, that it’s not a brain in some really very important ways. There’s no episodic memory. Uh, it’s not learning actively. And so it, I guess to your point, it is just, it’s a different thing. The big important thing I’m trying to say here is it’s also just different from all the previous machine learning systems that we’ve tried and successfully inserted into health care and medicine.

Llorens: And to your point, all the thinking around various kinds of societally important frameworks are trying to catch up to that previous generation and not yet even aimed really adequately, I think, at these new technologies. You know, as we start to wrap up here, maybe I’ll invoke Peter Lee, the head of Microsoft Research, again, [LAUGHS] kind of—kind of where we started. This is a watershed moment for AI and for computing research, uh, more broadly. And in that context, what do you see next for computing research?

Lee: Of course, AI is just looming so large and Microsoft Research is in a weird spot. You know, I had talked before about the early days of 3D computer graphics and the founding of NVIDIA and the decade-long kind of industrialization of 3D computer graphics, going from research to just, you know, pure infrastructure, technical infrastructure of life. And so with respect to AI, this flavor of AI, we’re sort of at the nexus of that. And Microsoft Research is in a really interesting position, because we are at once contributors to all of the research that is making what OpenAI is doing possible, along with, you know, great researchers and research labs around the world. We’re also then part of the company, Microsoft, that wants to make this with OpenAI a part of the infrastructure of everyday life for everybody. So we’re part of that transition. And so I think for that reason, Microsoft Research, uh, will be very focused on kind of major threads in AI; in fact, we’ve sort of identified five major AI threads.

One we’ve talked about, which is this sort of AI in society and the societal impact, which encompasses also responsible AI and so on. One that our colleague here at Microsoft Research Sébastien Bubeck has been advancing is this notion of the physics of AGI. There has always been a very important thread of theoretical computer science, uh, in machine learning. But what we’re finding is that that style of research is increasingly applicable to trying to understand the fundamental capabilities, limits, and trend lines for these large language models. And you don’t anymore get kind of hard mathematical theorems, but it’s still kind of mathematically oriented, just like physics of the cosmos and of the Big Bang and so on, so physics of AGI.

There’s a third aspect, which more is about the application level. And we’ve been, I think in some parts of Microsoft Research, calling that costar or copilot, you know, the idea of how is this thing a companion that amplifies what you’re trying to do every day in life? You know, how can that happen? What are the modes of interaction? And so on.

And then there is AI4Science. And, you know, we’ve made a big deal about this, and we still see just tremendous evidence, and mounting evidence, that these large AI systems can give us new ways to make scientific discoveries in physics, in astronomy, in chemistry, biology, and the like. And that, you know, ends up being, you know, just really incredible.

And then there’s the core nuts and bolts, what we call model innovation. Just a little while ago, we released new model architectures, one called Kosmos, for doing multimodal kind of machine learning and classification and recognition interaction. Earlier, we did VALL-E, you know, which just based on a three-second sample of speech is able to ascertain your speech patterns and replicate speech. And those are kind of in the realm of model innovations, um, that will keep happening.

The long-term trajectory is that at some point, if Microsoft and other companies are successful, OpenAI and others, this will become a completely industrialized part of the infrastructure of our lives. And I think I would expect the research on large language models specifically to start to fade over the next decade. But then, whole new vistas will open up, and that’s on top of all the other things we do in cybersecurity, and in privacy and security, and the physical sciences, and on and on and on. For sure, it’s just a very, very special time in AI, especially along those five dimensions.

Llorens: It will be really interesting to see which aspects of the technology sink into the background and become part of the foundation and which ones remain up close and foregrounded and how those aspects change what it means to be human in some ways and maybe to be—to be intelligent, uh, in some ways. Fascinating discussion, Peter. Really appreciate the time today.

Lee: It was really great to have a chance to chat with you about things and always just great to spend time with you, Ashley.

Llorens: Likewise.

[MUSIC]

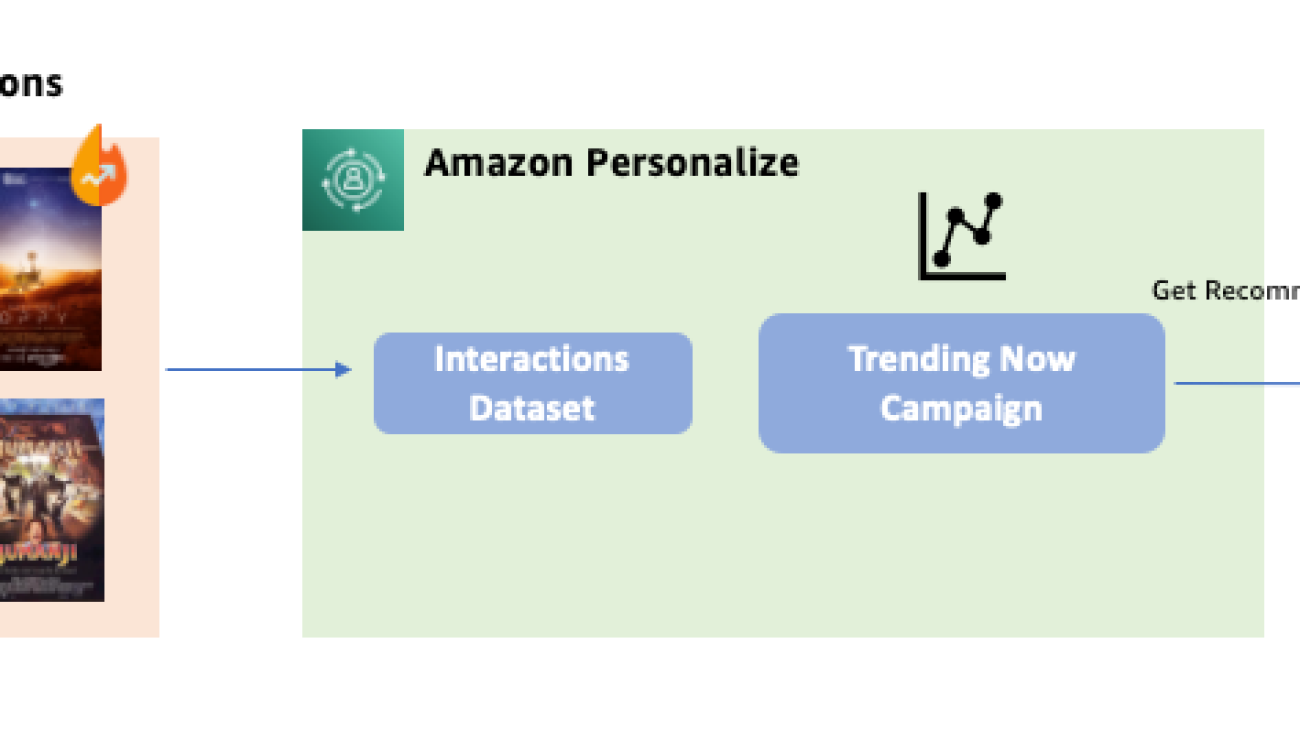

Vamshi Krishna Enabothala is a Sr. Applied AI Specialist Architect at AWS. He works with customers from different sectors to accelerate high-impact data, analytics, and machine learning initiatives. He is passionate about recommendation systems, NLP, and computer vision areas in AI and ML. Outside of work, Vamshi is an RC enthusiast, building RC equipment (planes, cars, and drones), and also enjoys gardening.

Vamshi Krishna Enabothala is a Sr. Applied AI Specialist Architect at AWS. He works with customers from different sectors to accelerate high-impact data, analytics, and machine learning initiatives. He is passionate about recommendation systems, NLP, and computer vision areas in AI and ML. Outside of work, Vamshi is an RC enthusiast, building RC equipment (planes, cars, and drones), and also enjoys gardening. Anchit Gupta is a Senior Product Manager for Amazon Personalize. She focuses on delivering products that make it easier to build machine learning solutions. In her spare time, she enjoys cooking, playing board/card games, and reading.

Anchit Gupta is a Senior Product Manager for Amazon Personalize. She focuses on delivering products that make it easier to build machine learning solutions. In her spare time, she enjoys cooking, playing board/card games, and reading. Abhishek Mangal is a Software Engineer for Amazon Personalize and works on architecting software systems to serve customers at scale. In his spare time, he likes to watch anime and believes ‘One Piece’ is the greatest piece of story-telling in recent history.

Abhishek Mangal is a Software Engineer for Amazon Personalize and works on architecting software systems to serve customers at scale. In his spare time, he likes to watch anime and believes ‘One Piece’ is the greatest piece of story-telling in recent history.
