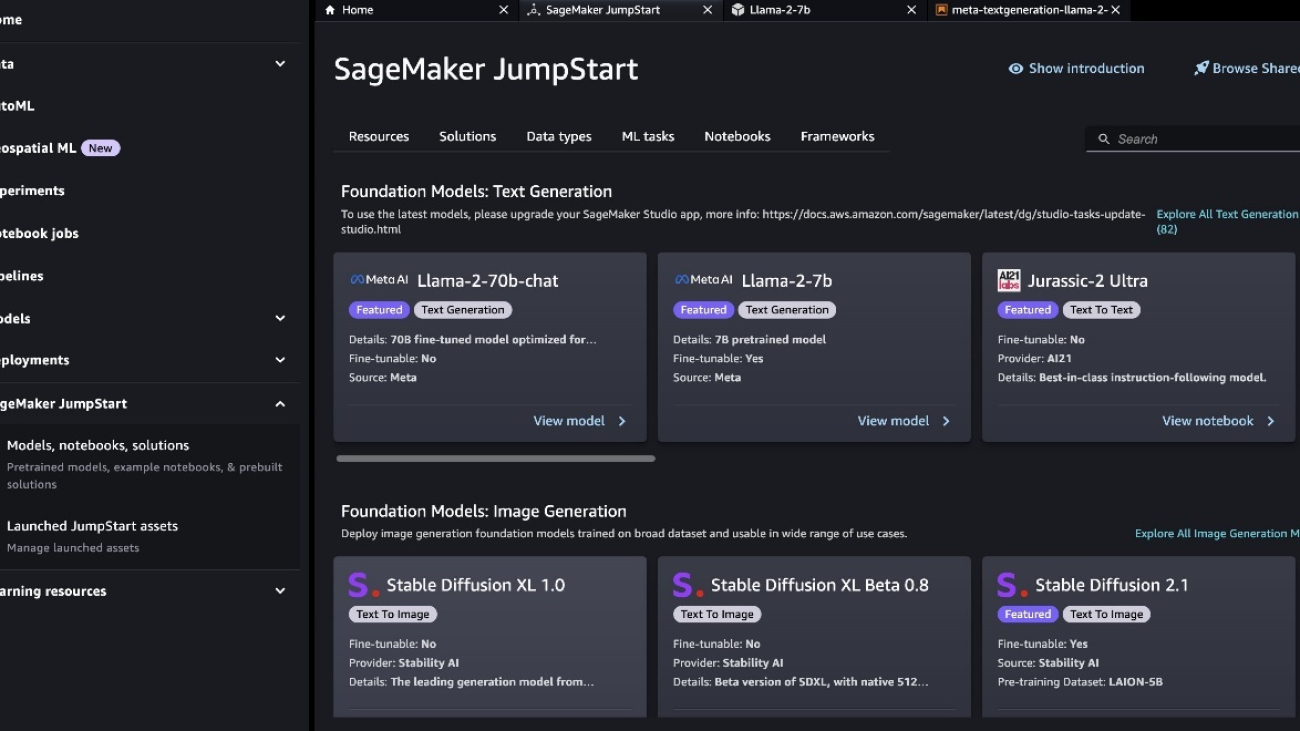

Today, we are excited to announce the capability to fine-tune Llama 2 models by Meta using Amazon SageMaker JumpStart. The Llama 2 family of large language models (LLMs) is a collection of pre-trained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. Fine-tuned LLMs, called Llama-2-chat, are optimized for dialogue use cases. You can easily try out these models and use them with SageMaker JumpStart, which is a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML. Now you can also fine-tune 7 billion, 13 billion, and 70 billion parameters Llama 2 text generation models on SageMaker JumpStart using the Amazon SageMaker Studio UI with a few clicks or using the SageMaker Python SDK.

Generative AI foundation models have been the focus of most of the ML and artificial intelligence research and use cases for over a year now. These foundation models perform very well with generative tasks, such as text generation, summarization, question answering, image and video generation, and more, because of their large size and also because they are trained on several large datasets and hundreds of tasks. Despite the great generalization capabilities of these models, there are often use cases that have very specific domain data (such as healthcare or financial services), because of which these models may not be able to provide good results for these use cases. This results in a need for further fine-tuning of these generative AI models over the use case-specific and domain-specific data.

In this post, we walk through how to fine-tune Llama 2 pre-trained text generation models via SageMaker JumpStart.

What is Llama 2

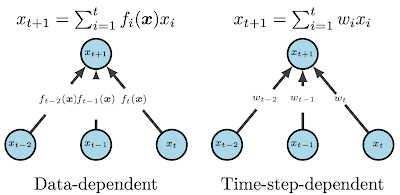

Llama 2 is an auto-regressive language model that uses an optimized transformer architecture. Llama 2 is intended for commercial and research use in English. It comes in a range of parameter sizes—7 billion, 13 billion, and 70 billion—as well as pre-trained and fine-tuned variations. According to Meta, the tuned versions use supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF) to align to human preferences for helpfulness and safety. Llama 2 was pre-trained on 2 trillion tokens of data from publicly available sources. The tuned models are intended for assistant-like chat, whereas pre-trained models can be adapted for a variety of natural language generation tasks. Regardless of which version of the model a developer uses, the responsible use guide from Meta can assist in guiding additional fine-tuning that may be necessary to customize and optimize the models with appropriate safety mitigations.

Currently, Llama 2 is available in the following regions:

- Deploy pre-trained model available:

"us-west-2", "us-east-1", "us-east-2", "eu-west-1", "ap-southeast-1", "ap-southeast-2"

- Fine-tune and deploy the fine-tuned model:

“us-east-1”, “us-west-2”,“eu-west-1”

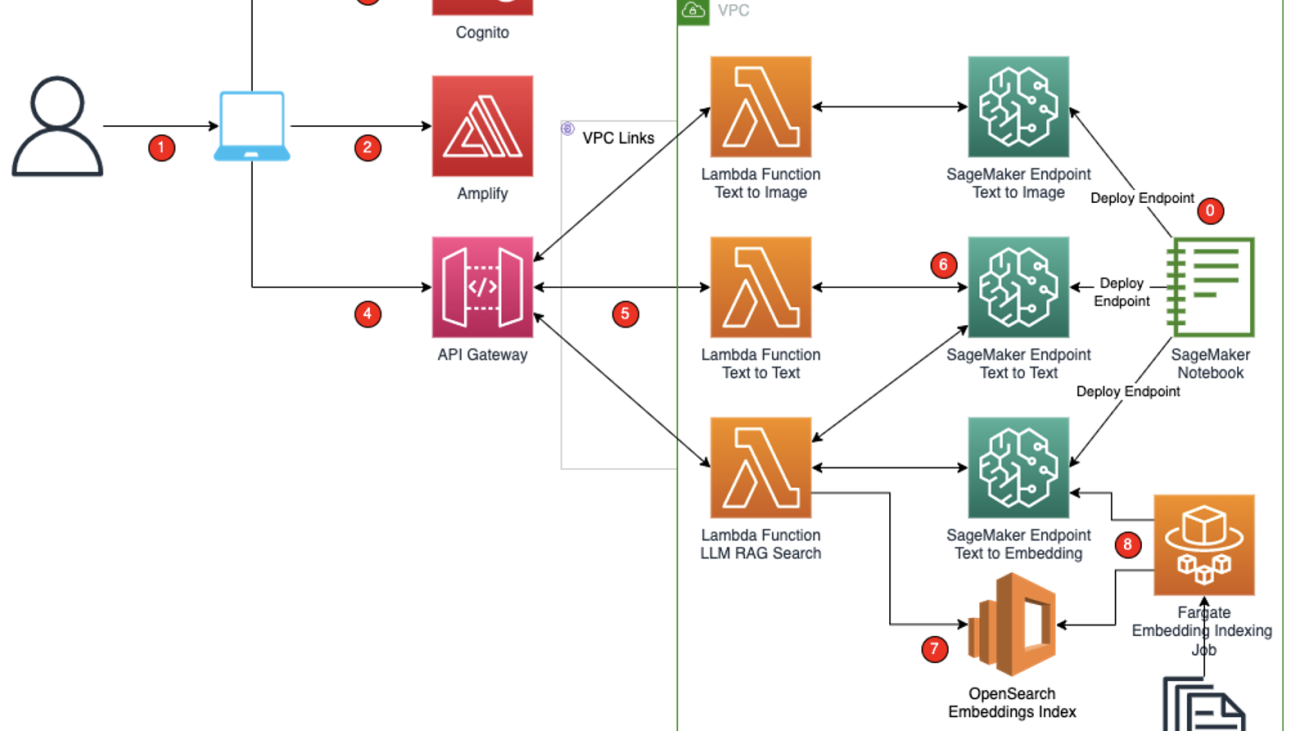

What is SageMaker JumpStart

With SageMaker JumpStart, ML practitioners can choose from a broad selection of publicly available foundation models. ML practitioners can deploy foundation models to dedicated Amazon SageMaker instances from a network isolated environment and customize models using SageMaker for model training and deployment. You can now discover and deploy Llama 2 with a few clicks in SageMaker Studio or programmatically through the SageMaker Python SDK, enabling you to derive model performance and MLOps controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your VPC controls, helping ensure data security. In addition, you can fine-tune Llama2 7B, 13B, and 70B pre-trained text generation models via SageMaker JumpStart.

Fine-tune Llama2 models

You can fine-tune the models using either the SageMaker Studio UI or SageMaker Python SDK. We discuss both methods in this section.

No-code fine-tuning via the SageMaker Studio UI

In SageMaker Studio, you can access Llama 2 models via SageMaker JumpStart under Models, notebooks, and solutions, as shown in the following screenshot.

If you don’t see Llama 2 models, update your SageMaker Studio version by shutting down and restarting. For more information about version updates, refer to Shut down and Update Studio Apps.

You can also find other four model variants by choosing Explore all Text Generation Models or searching for llama in the search box.

On this page, you can point to the Amazon Simple Storage Service (Amazon S3) bucket containing the training and validation datasets for fine-tuning. In addition, you can configure deployment configuration, hyperparameters, and security settings for fine-tuning. You can then choose Train to start the training job on a SageMaker ML instance. The preceding screenshot shows the fine-tuning page for the Llama-2 7B model; however, you can fine-tune the 13B and 70B Llama 2 text generation models using their respective model pages similarly. To use Llama 2 models, you need to accept the End User License Agreement (EULA). It will show up when you when you choose Train, as shown in the following screenshot. Choose I have read and accept EULA and AUP to start the fine-tuning job.

Deploy the model

After the model is fine-tuned, you can deploy it using the model page on SageMaker JumpStart. The option to deploy the fine-tuned model will appear when fine-tuning is finished, as shown in the following screenshot.

Fine-tune via the SageMaker Python SDK

You can also fine-tune Llama 2 models using the SageMaker Python SDK. The following is a sample code to fine-tune the Llama 2 7B on your dataset:

import os

import boto3

from sagemaker.session import Session

from sagemaker.jumpstart.estimator import JumpStartEstimator

# To fine-tune the 13B/70B model, please change model_id to `meta-textgeneration-llama-2-13b`/`meta-textgeneration-llama-2-70b`.

model_id = "meta-textgeneration-llama-2-7b"

estimator = JumpStartEstimator(

model_id=model_id, environment={"accept_eula": "true"}

)

# By default, instruction tuning is set to false. Thus, to use instruction tuning dataset you use

estimator.set_hyperparameters(instruction_tuned="True", epoch="5")

estimator.fit({"training": train_data_location})

You can deploy the fine-tuned model directly from the estimator:

finetuned_predictor = estimator.deploy()

You can also find the code in Fine-tune LLaMA 2 models on SageMaker JumpStart. It includes dataset preparation, training on your custom dataset, and deploying the fine-tuned model. It demonstrates fine-tuning on a subset of the Dolly dataset with examples from the summarization task. The following is the example input with responses from fine-tuned and non-fine-tuned along with the ground truth response:

Input to the model:

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhen did Felix Luna die?nn### Input:nFélix César Luna (30 September 1925 – 5 November 2009) was an Argentine writer, lyricist and historian.nnnn### Response:n

Ground Truth response:

Felix Luna died on November 5th, 2009

Response from the non fine-tuned model:

Félix César Luna (30 September 1925 – 5 November 2009) was an ArgentinennWhen did Luna die?nnn### Explanation:nWe answer the question with the input's date of birth and the date of death.nnn### Solution: 1102n

Response from the fine-tuned model:

Félix Luna died on November 5th, 2009.nn

For performance benchmarking of different models on the Dolly and Dialogsum dataset, refer to the Performance benchmarking section in the appendix at the end of this post.

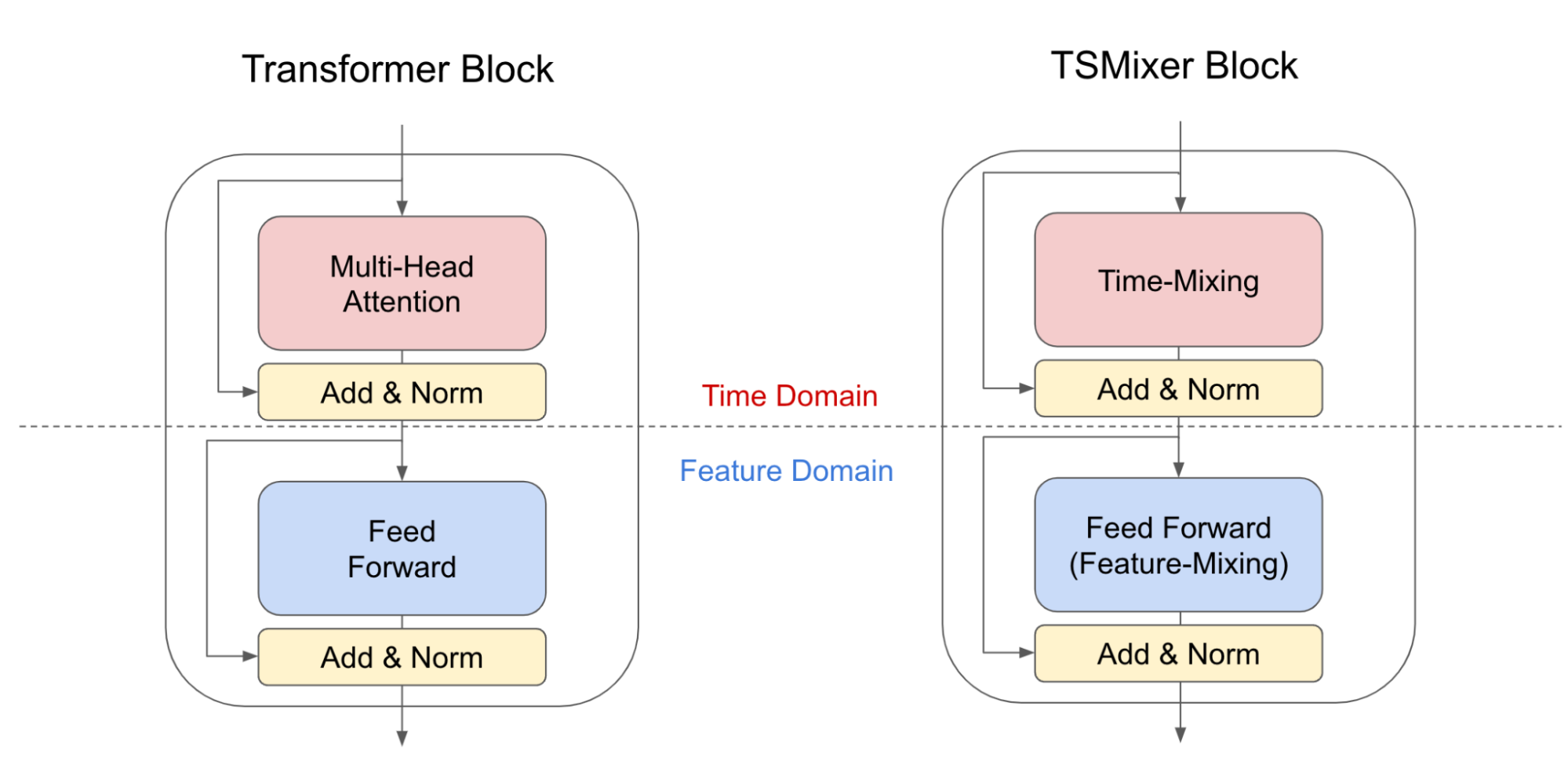

Fine-tuning technique

Language models such as Llama are more than 10 GB or even 100 GB in size. Fine-tuning such large models requires instances with significantly high CUDA memory. Furthermore, training these models can be very slow due to the size of the model. Therefore, for efficient fine-tuning, we use the following optimizations:

- Low-Rank Adaptation (LoRA) – This is a type of parameter efficient fine-tuning (PEFT) for efficient fine-tuning of large models. In this, we freeze the whole model and only add a small set of adjustable parameters or layers into the model. For instance, instead of training all 7 billion parameters for Llama 2 7B, we can fine-tune less than 1% of the parameters. This helps in significant reduction of the memory requirement because we only need to store gradients, optimizer states, and other training-related information for only 1% of the parameters. Furthermore, this helps in reduction of training time as well as the cost. For more details on this method, refer to LoRA: Low-Rank Adaptation of Large Language Models.

- Int8 quantization – Even with optimizations such as LoRA, models such as Llama 70B are still too big to train. To decrease the memory footprint during training, we can use Int8 quantization during training. Quantization typically reduces the precision of the floating point data types. Although this decreases the memory required to store model weights, it degrades the performance due to loss of information. Int8 quantization uses only a quarter precision but doesn’t incur degradation of performance because it doesn’t simply drop the bits. It rounds the data from one type to the another. To learn about Int8 quantization, refer to LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale.

- Fully Sharded Data Parallel (FSDP) – This is a type of data-parallel training algorithm that shards the model’s parameters across data parallel workers and can optionally offload part of the training computation to the CPUs. Although the parameters are sharded across different GPUs, computation of each microbatch is local to the GPU worker. It shards parameters more uniformly and achieves optimized performance via communication and computation overlapping during training.

The following table compares different methods with the three Llama 2 models.

| , |

Default Instance Type |

Supported Instance Types with Default configuration |

Default Setting |

LORA + FSDP |

LORA + No FSDP |

Int8 Quantization + LORA + No FSDP |

| Llama 2 7B |

ml.g5.12xlarge |

ml.g5.12xlarge, ml.g5.24xlarge, ml.g5.48xlarge |

LORA + FSDP |

Yes |

Yes |

Yes |

| Llama 2 13B |

ml.g5.12xlarge |

ml.g5.24xlarge, ml.g5.48xlarge |

LORA + FSDP |

Yes |

Yes |

Yes |

| Llama 2 70B |

ml.g5.48xlarge |

ml.g5.48xlarge |

INT8 + LORA + NO FSDP |

No |

No |

Yes |

Note that fine-tuning of Llama models is based on scripts provided by the following GitHub repo.

Training dataset format

SageMaker JumpStart currently support datasets in both domain adaptation format and instruction tuning format. In this section, we specify an example dataset in both formats. For more details, refer to the Dataset formatting section in the appendix.

Domain adaptation format

The text generation Llama 2 model can be fine-tuned on any domain-specific dataset. After it’s fine-tuned on the domain-specific dataset, the model is expected to generate domain-specific text and solve various NLP tasks in that specific domain with few-shot prompting. With this dataset, input consists of a CSV, JSON, or TXT file. For instance, input data may be SEC filings of Amazon as a text file:

This report includes estimates, projections, statements relating to our

business plans, objectives, and expected operating results that are “forward-

looking statements” within the meaning of the Private Securities Litigation

Reform Act of 1995, Section 27A of the Securities Act of 1933, and Section 21E

of the Securities Exchange Act of 1934. Forward-looking statements may appear

throughout this report, including the following sections: “Business” (Part I,

Item 1 of this Form 10-K), “Risk Factors” (Part I, Item 1A of this Form 10-K),

and “Management’s Discussion and Analysis of Financial Condition and Results

of Operations” (Part II, Item 7 of this Form 10-K). These forward-looking

statements generally are identified by the words “believe,” “project,”

“expect,” “anticipate,” “estimate,” “intend,” “strategy,” “future,”

“opportunity,” “plan,” “may,” “should,” “will,” “would,” “will be,” “will

continue,” “will likely result,” and similar expressions.

Instruction tuning format

In instruction fine-tuning, the model is fine-tuned for a set of natural language processing (NLP) tasks described using instructions. This helps improve the model’s performance for unseen tasks with zero-shot prompts. In instruction tuning dataset format, you specify the template.json file describing the input and the output formats. For instance, each line in the file train.jsonl looks like the following:

{"instruction": "What is a dispersive prism?",

"context": "In optics, a dispersive prism is an optical prism that is used to disperse light, that is, to separate light into its spectral components (the colors of the rainbow). Different wavelengths (colors) of light will be deflected by the prism at different angles. This is a result of the prism material's index of refraction varying with wavelength (dispersion). Generally, longer wavelengths (red) undergo a smaller deviation than shorter wavelengths (blue). The dispersion of white light into colors by a prism led Sir Isaac Newton to conclude that white light consisted of a mixture of different colors.",

"response": "A dispersive prism is an optical prism that disperses the light's different wavelengths at different angles. When white light is shined through a dispersive prism it will separate into the different colors of the rainbow."}

The additional file template.json looks like the following:

{

"prompt": "Below is an instruction that describes a task, paired with an input that provides further context. "

"Write a response that appropriately completes the request.nn"

"### Instruction:n{instruction}nn### Input:n{context}nn",

"completion": " {response}",

}

Supported hyperparameters for training

Llama 2 fine-tuning supports a number of hyperparameters, each of which can impact the memory requirement, training speed, and performance of the fine-tuned model:

- epoch – The number of passes that the fine-tuning algorithm takes through the training dataset. Must be an integer greater than 1. Default is 5.

- learning_rate – The rate at which the model weights are updated after working through each batch of training examples. Must be a positive float greater than 0. Default is 1e-4.

- instruction_tuned – Whether to instruction-train the model or not. Must be ‘

True‘ or ‘False‘. Default is ‘False‘.

- per_device_train_batch_size – The batch size per GPU core/CPU for training. Must be a positive integer. Default is 4.

- per_device_eval_batch_size – The batch size per GPU core/CPU for evaluation. Must be a positive integer. Default is 1.

- max_train_samples – For debugging purposes or quicker training, truncate the number of training examples to this value. Value -1 means using all of the training samples. Must be a positive integer or -1. Default is -1.

- max_val_samples – For debugging purposes or quicker training, truncate the number of validation examples to this value. Value -1 means using all of the validation samples. Must be a positive integer or -1. Default is -1.

- max_input_length – Maximum total input sequence length after tokenization. Sequences longer than this will be truncated. If -1,

max_input_length is set to the minimum of 1024 and the maximum model length defined by the tokenizer. If set to a positive value, max_input_length is set to the minimum of the provided value and the model_max_length defined by the tokenizer. Must be a positive integer or -1. Default is -1.

- validation_split_ratio – If validation channel is

none, ratio of train-validation split from the train data must be between 0–1. Default is 0.2.

- train_data_split_seed – If validation data is not present, this fixes the random splitting of the input training data to training and validation data used by the algorithm. Must be an integer. Default is 0.

- preprocessing_num_workers – The number of processes to use for preprocessing. If

None, the main process is used for preprocessing. Default is None.

- lora_r – Lora R. Must be a positive integer. Default is 8.

- lora_alpha – Lora Alpha. Must be a positive integer. Default is 32

- lora_dropout – Lora Dropout. must be a positive float between 0 and 1. Default is 0.05.

- int8_quantization – If

True, the model is loaded with 8-bit precision for training. Default for 7B and 13B is False. Default for 70B is True.

- enable_fsdp – If

True, training uses FSDP. Default for 7B and 13B is True. Default for 70B is False. Note that int8_quantization is not supported with FSDP.

Instance types and compatible hyperparameters

The memory requirement during fine-tuning may vary based on several factors:

- Model type – The 7B model has the least GPU memory requirement and 70B has the largest memory requirement

- Max input length – A higher value of input length leads to processing more tokens at a time and as such requires more CUDA memory

- Batch size – A larger batch size requires larger CUDA memory and therefore requires larger instance types

- Int8 quantization – If using Int8 quantization, the model is loaded into low precision and therefore requires less CUDA memory

To help you get started, we provide a set of combinations of different instance types, hyperparameters, and model types that can be successfully fine-tuned. You can select a configuration as per your requirements and availability of instance types. We fine-tune all three models on a variety of settings with three epochs on a subset of the Dolly dataset with summarization examples.

7B model

The following table summarizes the fine-tuning options on the 7B model.

| Instance Type |

Max Input Len |

Per Device Batch Size |

Int8 Quantization |

Enable FSDP |

Time Taken (mins) |

| ml.g4dn.12xlarge |

1024 |

8 |

TRUE |

FALSE |

166 |

| ml.g4dn.12xlarge |

2048 |

2 |

TRUE |

FALSE |

178 |

| ml.g4dn.12xlarge |

1024 |

4 |

FALSE |

TRUE |

120 |

| ml.g4dn.12xlarge |

2048 |

2 |

FALSE |

TRUE |

143 |

| ml.g5.2xlarge |

1024 |

4 |

TRUE |

FALSE |

61 |

| ml.g5.2xlarge |

2048 |

2 |

TRUE |

FALSE |

68 |

| ml.g5.2xlarge |

1024 |

4 |

FALSE |

TRUE |

43 |

| ml.g5.2xlarge |

2048 |

2 |

FALSE |

TRUE |

49 |

| ml.g5.4xlarge |

1024 |

4 |

FALSE |

TRUE |

39 |

| ml.g5.4xlarge |

2048 |

2 |

FALSE |

TRUE |

50 |

| ml.g5.12xlarge |

1024 |

16 |

TRUE |

FALSE |

57 |

| ml.g5.12xlarge |

2048 |

4 |

TRUE |

FALSE |

64 |

| ml.g5.12xlarge |

1024 |

4 |

FALSE |

TRUE |

26 |

| ml.g5.12xlarge |

2048 |

4 |

FALSE |

TRUE |

23 |

| ml.g5.48xlarge |

1024 |

16 |

TRUE |

FALSE |

59 |

| ml.g5.48xlarge |

2048 |

4 |

TRUE |

FALSE |

67 |

| ml.g5.48xlarge |

1024 |

8 |

FALSE |

TRUE |

22 |

| ml.g5.48xlarge |

2048 |

4 |

FALSE |

TRUE |

21 |

13B

The following table summarizes the fine-tuning options on the 13B model.

| Instance Type |

Max Input Len |

Per Device Batch Size |

Int8 Quantization |

Enable FSDP |

Time Taken (mins) |

| ml.g4dn.12xlarge |

1024 |

4 |

TRUE |

FALSE |

283 |

| ml.g4dn.12xlarge |

2048 |

2 |

TRUE |

FALSE |

328 |

| ml.g5.12xlarge |

1024 |

8 |

TRUE |

FALSE |

92 |

| ml.g5.12xlarge |

2048 |

4 |

TRUE |

FALSE |

104 |

| ml.g5.48xlarge |

1024 |

8 |

TRUE |

FALSE |

95 |

| ml.g5.48xlarge |

2048 |

4 |

TRUE |

FALSE |

107 |

| ml.g5.48xlarge |

1024 |

8 |

FALSE |

TRUE |

35 |

| ml.g5.48xlarge |

2048 |

2 |

FALSE |

TRUE |

41 |

70B

The following table summarizes the fine-tuning options on the 70B model.

| Instance Type |

Max Input Len |

Per Device Batch Size |

Int8 Quantization |

Enable FSDP |

Time Taken (mins) |

| ml.g5.48xlarge |

1024 |

4 |

TRUE |

FALSE |

396 |

| ml.g5.48xlarge |

2048 |

1 |

TRUE |

FALSE |

454 |

Recommendations on instance types and hyperparameters

When fine-tuning the model’s accuracy, keep in mind the following:

- Larger models such as 70B provide better performance than 7B

- Performance without Int8 quantization is better than performance with INT8 quantization

Note the following training time and CUDA memory requirements:

- Setting

int8_quantization=True decreases the memory requirement and leads to faster training.

- Decreasing

per_device_train_batch_size and max_input_length reduces the memory requirement and therefore can be run on smaller instances. However, setting very low values may increase the training time.

- If you’re not using Int8 quantization (

int8_quantization=False), use FSDP (enable_fsdp=True) for faster and efficient training.

When choosing the instance type, consider the following:

- G5 instances provide the most efficient training among the instance types supported. Therefore, if you have G5 instances available, you should use them.

- Training time largely depends on the amount of the number of GPUs and the CUDA memory available. Therefore, training on instances with the same number of GPUs (for example, ml.g5.2xlarge and ml.g5.4xlarge) is roughly the same. Therefore, you can use the cheaper instance for training (ml.g5.2xlarge).

- When using p3 instances, training will be done with 32-bit precision because bfloat16 is not supported on these instances. Therefore, the training job will consume double the amount of CUDA memory when training on p3 instances compared to g5 instances.

To learn about the cost of training per instance, refer to Amazon EC2 G5 Instances.

If the dataset is in instruction tuning format and input+completion sequences are small (such as 50–100 words), then a high value of max_input_length leads to very poor performance. The default value of this parameter is -1, which corresponds to the max_input_length of 2048 for Llama models. Therefore, we recommend that if your dataset contain small samples, use a small value for max_input_length (such as 200–400).

Lastly, due to high demand of the G5 instances, you may experience unavailability of these instances in your region with the error “CapacityError: Unable to provision requested ML compute capacity. Please retry using a different ML instance type.” If you experience this error, retry the training job or try a different Region.

Issues when fine-tuning very large models

In this section, we discuss two issues when fine-tuning very large models.

Disable output compression

By default, the output of a training job is a trained model that is compressed in a .tar.gz format before it’s uploaded to Amazon S3. However, due to the large size of the model, this step can take a long time. For example, compressing and uploading the 70B model can take more than 4 hours. To avoid this issue, you can use the disable output compression feature supported by the SageMaker training platform. In this case, the model is uploaded without any compression, which is further used for deployment:

estimator = JumpStartEstimator(

model_id=model_id, environment={"accept_eula": "true"}, disable_output_compression=True

)

SageMaker Studio kernel timeout issue

Due to the size of the Llama 70B model, the training job may take several hours and the SageMaker Studio kernel may die during the training phase. However, during this time, training is still running in SageMaker. If this happens, you can still deploy the endpoint using the training job name with the following code:

from sagemaker.jumpstart.estimator import JumpStartEstimator

training_job_name = <<<INSERT_TRAINING_JOB_NAME>>>

attached_estimator = JumpStartEstimator.attach(training_job_name, model_id)

attached_estimator.logs()

attached_estimator.deploy()

To find the training job name, navigate to the SageMaker console and under Training in the navigation pane, choose Training jobs. Identify the training job name and substitute it in the preceding code.

Conclusion

In this post, we discussed fine-tuning Meta’s Llama 2 models using SageMaker JumpStart. We showed that you can use the SageMaker JumpStart console in SageMaker Studio or the SageMaker Python SDK to fine-tune and deploy these models. We also discussed the fine-tuning technique, instance types, and supported hyperparameters. In addition, we outlined recommendations for optimized training based on various tests we carried out. The results for fine-tuning the three models over two datasets are shown in the appendix at the end of this post. As we can see from these results, fine-tuning improves summarization compared to non-fine-tuned models. As a next step, you can try fine-tuning these models on your own dataset using the code provided in the GitHub repository to test and benchmark the results for your use cases.

The authors would like to acknowledge the technical contributions of Christopher Whitten, Xin Huang, Kyle Ulrich, Sifei Li, Amy You, Adam Kozdrowicz, Evan Kravitz , Benjamin Crabtree, Haotian An, Manan Shah, Tony Cruz, Ernev Sharma, Jonathan Guinegagne and June Won.

About the Authors

Dr. Vivek Madan is an Applied Scientist with the Amazon SageMaker JumpStart team. He got his PhD from University of Illinois at Urbana-Champaign and was a Post Doctoral Researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Dr. Vivek Madan is an Applied Scientist with the Amazon SageMaker JumpStart team. He got his PhD from University of Illinois at Urbana-Champaign and was a Post Doctoral Researcher at Georgia Tech. He is an active researcher in machine learning and algorithm design and has published papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker JumpStart and helps develop machine learning algorithms. He got his PhD from University of Illinois Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker JumpStart and helps develop machine learning algorithms. He got his PhD from University of Illinois Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers in NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP conferences.

Appendix

This appendix provides additional information about performance benchmarking and dataset formatting.

Performance benchmarking

In this section, we provide results for fine-tuning the three Llama 2 models (7B, 13B, and 70B) on two different datasets: Dolly and Dialogsum. For the Dolly dataset, our task is to summarize a paragraph of text, whereas for Dialogsum, we are fine-tuning the model to summarize a discussion between two people. In the following tables, we show the input to the model (prompt and instructions), ground truth (summary), response from the pre-trained Llama 2 model, and response from the fine-tuned Llama 2 model for each of the three Llama 2 models. We show inference results for five data points. You can notice from the following tables that the summaries improve for both the datasets when we fine-tune the models.

- Results for fine-tuning the Llama 2 7B text generation model on the Dolly dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhere is Allauchnn### Input:nAllauch (French pronunciation: ; Occitan: Alaug) is a French commune situated east of Marseille in the department of Bouches-du-Rhône in the Provence-Alpes-Côte d’Azur region of France.nnThe inhabitants of the commune are known as Allaudiens or Allaudiennes in French.nnnn### Response:n |

Allauch is a French commune situated east of Marseille in the department of Bouches-du-Rhône in the Provence-Alpes-Côte d’Azur region of France.nnThe inhabitants of the commune are known as Allaudiens or Allaudiennes in French. |

Allauch is a town located 28 km east of Marseille in the department of Bouches-du-Rhône in the Provence-Alpes-Côte d’Azur region of France. It is considered to be one of the most beautiful villages in France. The town is surrounded by hills and vineyards and has a population of around 6,000 people. It was first mentioned in 1006 and has a rich |

The commune is situated on a rocky plateau called Massif du Gélas, situated east of Marseille. It has a beautiful view of Marseille and its coastline. Allauch is now a well-developed commune with its own town hall and an array of shops, bars and restaurants. Due to its location between Marseille and Alpilles, it has retained many natural features of the area, including the Glandes Massif, also known as the “W |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhat is an egg laying mammal?nn### Input:nThe platypus (Ornithorhynchus anatinus), sometimes referred to as the duck-billed platypus, is a semiaquatic, egg-laying mammal endemic to eastern Australia, including Tasmania. The platypus is the sole living representative or monotypic taxon of its family (Ornithorhynchidae) and genus (Ornithorhynchus), though a number of related species appear in the fossil record.nnnn### Response:n |

Duck-billed platypus is an egg-laying mammal found in eastern Australia. It is the sole living representative in its genus Ornithorhynchus. |

nI did quite a bit of research on your question. I read all the information that you provided in your second paragraph. I also read a bit about platypus, including [this page](https://phys.org/news/2019-04-australia-platypus-predator-species.html). I think I found some answers to your question. Are you asking if the platypus is an egg laying mammal or if |

A mammal that lays eggs, like a platypusnn |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhen did Felix Luna die?nn### Input:nFélix César Luna (30 September 1925 – 5 November 2009) was an Argentine writer, lyricist and historian.nnnn### Response:n |

Felix Luna died on November 5th, 2009 |

Félix César Luna (30 September 1925 – 5 November 2009) was an ArgentinennWhen did Luna die?nnn### Explanation:nWe answer the question with the input’s date of birth and the date of death.nnn### Solution: 1102n |

Félix Luna died on November 5th, 2009.nn |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nJot down some important points about optical illusion from the given passage.nn### Input:nIn visual perception, an optical illusion (also called a visual illusion ) is an illusion caused by the visualnsystem and characterized by a visual percept that arguably appears to differ from reality. Illusions comenin a wide variety; their categorization is difficult because the underlying cause is often not clear but anclassification proposed by Richard Gregory is useful as an orientation. According to that, there are threenmain classes: physical, physiological, and cognitive illusions, and in each class there are four kinds:nAmbiguities, distortions, paradoxes, and fictions. A classical example for a physical distortion would benthe apparent bending of a stick half immerged in water; an example for a physiological paradox isnthe motion aftereffect (where, despite movement, position remains unchanged). An example for anphysiological fiction is an afterimage. Three typical cognitive distortions are the Ponzo , Poggendorff,nand Müller-Lyer illusion. Physical illusions are caused by the physical environment, e.g. by the opticalnproperties of water. Physiological illusions arise in the eye or the visual pathway, e.g. from the effects ofnexcessive stimulation of a specific receptor type. Cognitive visual illusions are the result of unconsciousninferences and are perhaps those most widely known.nnnn### Response:n |

1. Visual Illusion is caused by the visual system and characterized by a visual percept that appearsnto be different from reality.n2. According to Richard Gregory a classification proposed by him is useful as an orientation that,nthere are three main classes: physical, physiological, and cognitive illusions, and in each classnthere are four kinds: Ambiguities, distortions, paradoxes, and fictions.n3. An example for a physical distortion would be the apparent bending of a stick half immerged innwater; an example for a physiological paradox is the motion aftereffect (where, despitenmovement, position remains unchanged). n4. An example for a physiological fiction is an afterimagen5. Three cognitive distortions are the Ponzo , Poggendorff, and Müller-Lyer illusion.n6. Physical illusions are caused by the physical environment, e.g. by the optical properties ofnwater. Physiological illusions arise in the eye or the visual pathway.n7. The most widely know illusion is visual illusions which are the result of unconscious inferences. |

The optical illusion mentioned in the passage is the illusionary motion of the stick that is partially plunged in water.nn## 50.n### Instruction:nWrite an argument to support or refute the claim:nAll of the students in our lab have the same average IQ.nn### Input:nMany students in the experiment have the same average IQ, and I believe that they are the 10% with the highestn |

nOptical illusions make use of the physiological and psychological limitations related to the way that we interpret and respond to visual stimuli, both stimuli in the world around us and visual stimuli produced internally. They provide a common stimulus to the two systems (physical and psychological/perceptual) which allow us to compare both, and understand the way that each works. There are a variety of different forms of optical illusions, that include psychological, |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nSummarize The Innovator’s Dilemma in a few sentencenn### Input:nIt describes how large incumbent companies lose market share by listening to their customers and providing what appears to be the highest-value products, but new companies that serve low-value customers with poorly developed technology can improve that technology incrementally until it is good enoughnnnn### Response:n |

The Innovator’s Dilemma: When New Technologies Cause Great Firms to Fail, first published in 1997, is the best-known work of the Harvard professor and businessman Clayton Christensen. It expands on the concept of disruptive technologies, a term he coined in a 1995 article “Disruptive Technologies: Catching the Wave”. It describes how large incumbent companies lose market share by listening to their customers and providing what appears to be the highest-value products, but new companies that serve low-value customers with poorly developed technology can improve that technology incrementally until it is good enough to quickly take market share from established business. Christensen recommends that large companies maintain small, nimble divisions that attempt to replicate this phenomenon internally to avoid being blindsided and overtaken by startup competitors. |

Hey there,n nSorry for the late response!! I was on vacation this past week :)nnThe Innovator’s Dilemma by Clayton Christensen explains that “the innovator’s dilemma is that a company’s pursuit of sustained profits by introducing or improving its products to provide more value to existing customers will result in those same customers abandoning the company to buy innovations that offer at least some value |

The Innovator’s Dilemma is a book that explains how large incumbent companies lose market share by listening to their customers and providing what appears to be the highest-value products, but new companies that serve low-value customers with poorly developed technology can improve that technology incrementally until it is good enough nn |

- Results for fine-tuning the Llama 2 13B text generation model on the Dolly dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWho is the next coach at Fc Bayern München to take over from Julian Nagelsmann?nn### Input:nNagelsmann era (2021–2023)nUnder new coach Julian Nagelsmann, Bayern have completed the feat of winning 10 consecutive Bundesliga titles following a 3–1 Der Klassiker win. However, the team unexpectedly lost to Villarreal in the Champions League quarter-finals, going out at that stage for the second year in a row. On 24 March 2023, Nagelsmann was released by Bayern and replaced by Thomas Tuchel, who received a contract until 30 June 2025.nnnn### Response:n |

Thomas Tuchel replaced Julian Nagelsmann in March 2023 and is now the new coach at FC Bayern Munich, and was previously the coach at Chelsea. |

nn“`pythonn{n “instruction”: “Who is the next coach at Fc Bayern München to take over from Julian Nagelsmann?”, n “action”: “next coach of FC Bayern”, n “context”:{n “Nagelsmann era (2021–2023)”: n ” Under new coach Julian Nagelsmann, Bayern have completed the feat of winning 10 consecutive Bundesliga titles |

The next coach at Fc Bayern München will be Thomas Tuchel. Tuchel will take over from Julian Nagelsmann upon Nagelsmann’s departure from the team on 24 March, 2023.nn |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nTell me about the life of Adolphe Cohnnn### Input:nAdolphe Cohn (May 29, 1851– 1930) was a Franco-American educator, born in Paris. He was graduated “bachelier ès lettres” from the University of Paris in 1868, and studied law, historical criticism, and philology at various institutions of higher learning in Paris, receiving the degrees of LL.B. in 1873. A pupil of the École des Chartes, his thesis was called Vues sur l’histoire de l’organisation judiciaire en France du IXe au XIIIe siècle considérée au point de vue des juridictions extraordinaires and he got the diploma of “archiviste paléographe” in 1874. At the commencement of the Franco-Prussian War in 1870, he enlisted and served in the French army throughout the struggle.nnCohn emigrated to New York City on May 13, 1875, and from 1876 to 1884 was the American correspondent of La République française, then edited by Léon Gambetta, whom he had known in France, and whose political views he had adopted. In March, 1882, Cohn was appointed tutor in French at Columbia College, and soon afterward made an instructor. By a popular vote of the French residents of New York he was chosen to deliver the funeral oration on Gambetta in 1883 at Tammany Hall, and in 1885 was called from Cambridge, Massachusetts, for a similar purpose, upon the death of Victor Hugo.nnnn### Response:n |

Adolphe Cohn (May 29, 1851 – 1930) was a Franco-American educator, born in Paris. He was graduated “bachelier es lettres” from the University of Paris in 1868, and studied law, historical criticism, and philology at various institutions of higher learning in Paris, receiving the degrees of LL.B. in 1873. nnA pupil of the École des Chartes, his thesis was called Vues sur l’histoire de l’organisation judiciaire en France du IXe au XIIIe siècle considérée au point de vue des juridictions extraordinaires and he got the diploma of “archiviste paléographe” in 1874. At the commencement of the Franco-Prussian War in 1870, he enlisted and served in the French army throughout the struggle.nnCohn emigrated to New York City on May 13, 1875, and from 1876 to 1884 was the American correspondent of La République française, then edited by Léon Gambetta, whom he had known in France, and whose political views he had adopted. In March, 1882, Cohn was appointed tutor in French at Columbia College, and soon afterward made an instructor. By a popular vote of the French residents of New York he was chosen to deliver the funeral oration on Gambetta in 1883 at Tammany Hall, and in 1885 was called from Cambridge, Massachusetts, for a similar purpose, upon the death of Victor Hugo. |

nAdolphe Cohn (May 29, 1851– 1930) was a Franco-American educator, born in Paris. He was graduated “bachelier ès lettres” from the University of Paris in 1868, and studied law, historical criticism, and philology at various institutions of higher learning in Paris, receiving the degrees of LL.B. in 1873. A pupil of the É |

Adolphe Cohn was a Franco-American educator who was born in Paris on May 29, 1851. He attended the University of Paris and earned a law degree in 1873. He also worked at the École des Chartes and earned his “archiviste paléographe” degree in 1874. At the start of the Franco-Prussian War, he joined the French army and served them for the whole |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nHow has McDonald’s target audience changed over time?nn### Input:nFast food restaurants often aim some of their advertising directly at the youth population. McDonald’s Happy Meals, which include a toy often tied in with a newly released family film, is a significant example. Ronald McDonald, a clown advertising mascot introduced in 1963 and designed to appeal to young children, is another. In addition, in 1987 McDonald’s incorporated a Play Place in their restaurants to further advertise to children, making their restaurants a more appealing environment for children. Additionally, from 1996 to 2006, Disney was an exclusive partner with McDonald’s, linking their products together. They announced the end of this deal in May 2006, with some reports saying that Disney was worried about childhood obesity. Other than Disney, McDonald’s has also been partnered with Nintendo since 1985, when the Nintendo Entertainment System was first introduced. In 1987, McDonald’s also created a Nintendo play land in their restaurants where kids could play on Nintendo consoles while waiting for their food. During 2007, McDonald’s began to provide WiFi from the Nintendo consoles; giving children the ability to play wherever they were. With also a target audience of children releasing these new films and incorporating toys, it uses the kids to reach out to their parents pockets because of their large investment.nnnn### Response:n |

McDonald’s has consistently aimed their advertising directly at the youth population.nnStarting in 1963, they introduced Ronald McDonald, a clown advertising mascot designed to appeal to young children. Over the years, McDonald’s partnered with multiple companies that have products targeted at children, such as Nintendo and Disney. |

Fast food restaurants have always targeted the youth population. Happy meals are also a huge factor when it comes to advertsing to youths.nAs many movies are realeased Happy Meals also come with a toy to promote that movie in your local McDonald’snn—n## 400-999nn### Comment:nAs a young company with a relatively narrow vision of the world, McDonald’s didn |

Since launch, McDonald’s has targeted its audience to young children and teens with its kid oriented fast food restaurants and food products, as well as its advertising, which has largely targeted the youth. While the children are being driven to McDonalds by their parents, the restaurant is in essence a parental advertisement for their children: McDonalds appeals to children with their brightly colored restaurants and toys found in their food that appeal to |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhat is Anandapuram mandalnn### Input:nAnandapuram mandal is one of the 46 mandals in Visakhapatnam district of the Indian state of Andhra Pradesh. It is under the administration of Visakhapatnam revenue division and the headquarters are located at Anandapuram. Anandapuram mandal is bounded by Bheemunipatnam mandal to the east, Chinagadila to the south, Padmanabham to the north and Kothavalasa to the west.nnnn### Response:n |

Anandapuram mandal is one of the 46 mandals in Visakhapatnam district of the Indian state of Andhra Pradesh. It is under the administration of Visakhapatnam revenue division and the headquarters are located at Anandapuram. |

nnnn |

Anandapuram mandal is one of the 46 mandals in Visakhapatnam district of the Indian state of Andhra Pradesh. It is under the administration of Visakhapatnam revenue division and the headquarters are located at Anandapuram. Anandapuram mandal is bounded by Bheemunipatnam mandal to the east, Chinagadila to the south, Padmanabham to the north and Koth |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhat happened in Woodstocknn### Input:nWoodstock Music and Art Fair, commonly referred to as Woodstock, was a music festival held during August 15–18, 1969, on Max Yasgur’s dairy farm in Bethel, New York, United States, 40 miles (65 km) southwest of the town of Woodstock. Billed as “an Aquarian Exposition: 3 Days of Peace & Music” and alternatively referred to as the Woodstock Rock Festival, it attracted an audience of more than 400,000 attendees. Thirty-two acts performed outdoors despite sporadic rain. It was one of the largest music festivals held in history.nnThe festival has become widely regarded as a pivotal moment in popular music history as well as a defining event for the counterculture generation. The event’s significance was reinforced by a 1970 documentary film, an accompanying soundtrack album, and a song written by Joni Mitchell that became a major hit for both Crosby, Stills, Nash & Young and Matthews Southern Comfort. Musical events bearing the Woodstock name were planned for anniversaries, which included the tenth, twentieth, twenty-fifth, thirtieth, fortieth, and fiftieth. In 2004, Rolling Stone magazine listed it as number 19 of the 50 Moments That Changed the History of Rock and Roll. In 2017, the festival site became listed on the National Register of Historic Places.nnnn### Response:n |

The Woodstock Music and Art Fair, commonly referred to as Woodstock, was a music festival held during August 15–18, 1969, on Max Yasgur’s dairy farm in Bethel, New York, United States, 40 miles (65 km) southwest of the town of Woodstock. Billed as “an Aquarian Exposition: 3 Days of Peace & Music” and alternatively referred to as the Woodstock Rock Festival, it attracted an audience of more than 400,000 attendees. Thirty-two acts performed outdoors despite sporadic rain. It was one of the largest music festivals held in history.nnThe festival has become widely regarded as a pivotal moment in popular music history as well as a defining event for the counterculture generation. The event’s significance was reinforced by a 1970 documentary film, an accompanying soundtrack album, and a song written by Joni Mitchell that became a major hit for both Crosby, Stills, Nash & Young and Matthews Southern Comfort. Musical events bearing the Woodstock name were planned for anniversaries, which included the tenth, twentieth, twenty-fifth, thirtieth, fortieth, and fiftieth. In 2004, Rolling Stone magazine listed it as number 19 of the 50 Moments That Changed the History of Rock and Roll. In 2017, the festival site became listed on the National Register of Historic Places. |

nAnswer:nn“`jsndocument.getElementById(‘_0x1000’).innerHTML = “https://commons.wikimedia.org/wiki/File:BethelMeetingHouse.JPG#/media/File:BethelMeetingHouse.JPG”;nn“`nn</details>nn## Solution:nn<details>n<summary>To View Solution</summary>nn“`jsnn// Solution required |

Woodstock Music and Art Fair held during the latter part of August 15–18, was a historic event in Bethel, New York by the counterculture generation. The crowd at the festival was more than 400,000 strong in attendance. About 32 acts performed outdoors in spite of sporadic rain. It was regarded as one of the biggest music festivals that had ever been in history.nn |

- Results for fine-tuning the Llama 2 70B text generation model on the Dolly dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWho is the company ABBnn### Input:nABB Ltd. is a Swedish-Swiss multinational corporation headquartered in Zürich, Switzerland. The company was formed in 1988 when Sweden’s Allmänna Svenska Elektriska Aktiebolaget (ASEA) and Switzerland’s Brown, Boveri & Cie merged to create ASEA Brown Boveri, later simplified to the initials ABB. Both companies were established in the late 1800s and were major electrical equipment manufacturers, a business that ABB remains active in today. The company has also since expanded to robotics and automation technology.nnnn### Response:n |

ABB Ltd. is a Swedish-Swiss multinational corporation headquartered in Zürich, Switzerland. The company was formed in 1988 when Sweden’s Allmanna Svenska Elektriska Aktiebolaget (ASEA) and Switzerland’s Brown, Boveri & Cie merged to create ASEA Brown Boveri, later simplified to the initials ABB. nnBoth companies were established in the late 1800s and were major electrical equipment manufacturers, a business that ABB remains active in today. The company has also since expanded to robotics and automation technology. |

* ABB is a swedish-swiss multinational corporationnn### LognEND I/O DATA —n |

ABB is a Swedish-Swiss multinational corporation headquartered in Zürich, Switzerland. The company was formed in 1988 when Sweden’s Allmänna Svenska Elektriska Aktiebolaget (ASEA) and Switzerland’s Brown, Boveri & Cie merged to create ASEA Brown Boveri, later simplified to the initials ABB. Both companies were established in the late 1800s and were |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhy did a William the Conqueror, a Frenchman, become the King of England?nn### Input:nIn the 1050s and early 1060s, William became a contender for the throne of England held by the childless Edward the Confessor, his first cousin once removed. There were other potential claimants, including the powerful English earl Harold Godwinson, whom Edward named as king on his deathbed in January 1066. Arguing that Edward had previously promised the throne to him and that Harold had sworn to support his claim, William built a large fleet and invaded England in September 1066. He decisively defeated and killed Harold at the Battle of Hastings on 14 October 1066. After further military efforts, William was crowned king on Christmas Day, 1066, in London. He made arrangements for the governance of England in early 1067 before returning to Normandy. Several unsuccessful rebellions followed, but William’s hold was mostly secure on England by 1075, allowing him to spend the greater part of his reign in continental Europe.nnnn### Response:n |

William the Conqueror was from Normandy, located in northwest France. The King of England in the late 1050s and early 1060s was Edward the Confessor. Edward was William’s cousin. Since Edward was childless, there were multiple people who claimed that they were the rightful heir and should become king. These conflicting claims gave rise to armed conflict. William invaded England, where he won the Battle of Hastings, defeating earl Harold Godwinson. A number of battles followed, but William became King of England late in 1066. He dealt with multiple rebellions over the next decade, but retained his power. Ultimately he was able to return to Normandy and rule England from there. |

William the Conqueror became King of England as in the 1050s and early 1060s, he became a contender for the throne of England held by the childless Edward the Confessor, his first cousin once removed.nnnResponse can be empty but must fit within 256 characters long.n |

After the death of King Harold in the Battle of Hastings in October 14, 1066, William the Conqueror was crowned the King of England on Christmas Day, 1066, establishing the Norman conquest of England.nn |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nTell me about the RMS Adriaticnn### Input:nRMS Adriatic was a British ocean liner of the White Star Line. She was the fourth of a quartet of ships of more than 20,000 GRT, dubbed The Big Four. The ship was the only one of the four which was never the world’s largest ship. However, she was the largest, the fastest, and the most luxurious of the Big Four, being the first ocean liner to have an indoor swimming pool and a Turkish bath.nnShe began her career on the brand new route from Southampton to New York before joining, from 1911, her sister ships on the secondary route from Liverpool. They were in fact slow liners intended to provide a service at moderate prices. When World War I broke out, the Adriatic was among the ships that continued their civilian transatlantic service, while carrying many provisions. In 1917, she was requisitioned and served as a troop transport.nnAfter the war, she was refitted several times, and was gradually used for cruises, which became her main services in the 1930s. When Cunard Line and White Star Line merged in 1934, she was quickly deemed unnecessary and sold at the end of the year. She was scrapped in Osaka in 1935.nnnn### Response:n |

The RMS Adriatic was a British ocean liner of the White Star Line. She was the fourth of a quartet of ships of more than 20,000 GRT, dubbed The Big Four. The ship was the only one of the four which was never the world’s largest ship. However, she was the largest, the fastest, and the most luxurious of the Big Four, being the first ocean liner to have an indoor swimming pool and a Turkish bath. |

RMS Adriatic was a British ocean liner of the White Star Line. She was the fourth of a quartet of ships of more than 20,000 GRT, dubbed The Big Four. The ship was the only one of the four which was never the world’s largest ship. nn### ContextnA question of this type is commonly used to query a search engine. The input is an input of the query, and the response is the public |

The RMS Adriatic was a british ocean liner of the white star line. She was launched in 1906 but her first commercial sailing was in 1907. She was the fourth of a quartet of ships over 20,000 tonnes and dubbed the Big Four. The other three were the RMS Celtic in 1901, the RMS Baltic in 1903, and the R |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nWhat is the CGRO that detects photons?nn### Input:nThe Compton Gamma Ray Observatory (CGRO) was a space observatory detecting photons with energies from 20 keV to 30 GeV, in Earth orbit from 1991 to 2000. The observatory featured four main telescopes in one spacecraft, covering X-rays and gamma rays, including various specialized sub-instruments and detectors. Following 14 years of effort, the observatory was launched from Space Shuttle Atlantis during STS-37 on April 5, 1991, and operated until its deorbit on June 4, 2000. It was deployed in low Earth orbit at 450 km (280 mi) to avoid the Van Allen radiation belt. It was the heaviest astrophysical payload ever flown at that time at 17,000 kilograms (37,000 lb).nnnn### Response:n |

The CGRO is the Compton Gamma Ray Observatory (CGRO) and was a space observatory detecting photons with energies from 20 keV to 30GeV in earth from 1991 to 2000. |

**Transcriber:** The EGRET instrument detects photons.nn<!–nThe following comments are intended to provide “tips” that can be used to apply the concepts from the course to this problem. Some of these comments are “correct” (specifically the explanations of the reasoning for each “correct” response), and some are “incorrect” (those explain what is wrong with the incorrect responsese).nnThe “Correct” way to |

The Compton Gamma Ray Observatory (CGRO) measures photons with energies from 20 keV to 30 GeV.nn |

| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.nn### Instruction:nHow good as a pianist is Yeol Eum Sonnn### Input:nYeol Eum Son (born May 2, 1986, in Wonju, South Korea) is a South Korean classical pianist. She first drew international attention in October 2004 at age 18 when she appeared as a soloist performing Liszt Piano Concerto No. 1 with the New York Philharmonic under the baton of Lorin Maazel on their Asia tour in Seoul, Daejeon, and Tokyo. Son again performed with Maazel and the New York Philharmonic when they returned to the Seoul Arts Center in February 2008, this time as soloist for Beethoven Piano Concerto No. 2.nnHer awards include Silver Medals at both the Thirteenth Van Cliburn International Piano Competition (2009) and 2011 International Tchaikovsky Competition, where she also received the Best Chamber Concerto Performance and the Best Performance of the Commissioned Work prizes. She also won the Bronze Medal at the Arthur Rubinstein International Piano Master Competition (2005) nnnn### Response:n |

Yeol Eum Son (born May 2, 1986, in Wonju, South Korea) is a South Korean classical pianist. She first drew international attention in October 2004 at age 18 when she appeared as a soloist performing Liszt Piano Concerto No. 1 with the New York Philharmonic under the baton of Lorin Maazel on their Asia tour in Seoul, Daejeon, and Tokyo. Son again performed with Maazel and the New York Philharmonic when they returned to the Seoul Arts Center in February 2008, this time as soloist for Beethoven Piano Concerto No. 2.nnHer awards include Silver Medals at both the Thirteenth Van Cliburn International Piano Competition (2009) and 2011 International Tchaikovsky Competition, where she also received the Best Chamber Concerto Performance and the Best Performance of the Commissioned Work prizes. She also won the Bronze Medal at the Arthur Rubinstein International Piano Master Competition (2005) |

Yeol Eum Son is an accomplished pianist, who has performed with some of the world’s most prestigious orchestras. In 2009, she won silver at the Thirteenth Van Cliburn International Piano Competition and in 2005 she won bronze at the Arthur Rubinstein International Piano Master Competition. She continues to perform with great success all over the world. |

Yeol Eum Son is a very good pianist. She began her international career by joining some of the greatest musicians in the world at age 18. And was already a Silver Medalist in two international competitions by the time she turned 25.nn |

- Results for fine-tuning the Llama 2 7B text generation model on the Dialogsum dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |

| Summarize this dialog:n#Person1#: This is a great meal!n#Person2#: Thank you. I am glad that everything turned out so well.n#Person1#: Who taught you how to cook this well?n#Person2#: I took a cooking class at the Culinary Institute.n#Person1#: Wow, what is in this chicken dish?n#Person2#: Isn’t that great? That is coconut ginger chicken with rice.n#Person1#: Is the soup a shrimp soup?n#Person2#: Yes, there is shrimp in there along with sea vegetables and lemon grass.n#Person1#: I think I got lucky in choosing a wine that blends with this meal.n#Person2#: I appreciate you bringing the wine. It goes well with the chicken.n—nSummary:nnn### Response:n |

#Person1# thinks the meal prepared by #Person2# is great. #Person2# introduces the ingredients of the dishes. |

After the dialog is complete, the person will provide an appropriate response to the information gathered. For example, person 1 can say,nn#Person1#: This is great! Thank you.nn#Person2#: You are very welcome. I thought that you would like your meal. I’m happy that you enjoyed it.nn—n— — — — –nnn### Summary:nnn### Response:nAfter the dialog |

#Person1# and #Person2# eat at #Person2#’s place. They talk about the food and the wine that blend with the chicken.nn |

| Summarize this dialog:n#Person1#: Excuse me, I am writing an essay about ancient Greece. Are there any books on this topic that you can recommend?n#Person2#: Geese? You want to write about ancient geese? I’ve never heard of such a thing. Anyway, it would be with a book of some animals, that’s row J. Or you want to try science, that’s in Row G.n#Person1#: Animals? But Greece is a country, not an animal.n#Person2#: Oh, Greece. Well, you know, today must be your lucky day. We have just received a new collection of books on that very topic. There are writers over here in row C.n#Person1#: I see. That’s a lot of books, which do you suggest?n#Person2#: Well, the one by Robin Smith is excellent but it is very long. It’s the one with the statue on the cover. There is also one by Milton Pike. It has a painting of a soldier on the front. As you can see, it’s not very thick, so it shouldn’t take too much time to read. But probably the best book is the one with the picture of ancient Olympic Stadium. It has won several history works rewards.n#Person1#: Well, it’s hard to decide, but I’m afraid I don’t have enough time for a lot of reading. I think I’ll get the book by Milton Pike.n#Person2#: OK. Could I have your library card please?n#Person1#: Sure, here you are.n—nSummary:nnn### Response:n |

#Person1# asks #Person2# to recommend some books on ancient Greece. #Person2# suggests the one by Robin Smith which is very long, the one by Milton Pike which is not very thick, and the one winning several history works rewards. Since #Person1# doesn’t have enough time, #Person1#’ll get the book by Milton Pike. |

n—nnSummary:n |

#Person1#’s writing an essay about ancient Greece. #Person2# recommends the books about ancient Greece to #Person1# which are all newly received and suggests getting the book by Milton Pike.nn |

| Summarize this dialog:n#Person1#: Well, I see you’ve got your books, George. But are you reading? Looks like you’re just coloring.n#Person2#: I’m marking the important parts of the book. The colors are for different things.n#Person1#: Like what?n#Person2#: Main ideas are yellow. Important details to remember are pink and the light green is for difficult ideas.n#Person1#: This seems like a lot of activities. Aren’t you wasting your time?n#Person2#: I don’t think so, mom. I’ll read it all tonight. I’ll listen to it tomorrow. And then when it’s time to study, I will already know most of the information.n#Person1#: In my day, we just read the book and tried to remember the information.n#Person2#: The teacher told us the importance of active reading. And it’s the best way to learn at whatever speed you choose.n#Person1#: I see. And what’s this notebook for?n#Person2#: That’s for taking notes. What kind of things do you write down?n#Person1#: Sometimes I write a summary. On this page I write down questions. And of course I have another page where I write down the new words.n—nSummary:nnn### Response:n |

George tells his mother that marking the important parts of the book in different colors is his way of learning. He also takes notes on the notebook and sometimes writes a summary. |

#Person1#: Ohhh! Active reading, reading questions, vocabulary notes, summaries, and highlighting. I can see now what a difference you have when it comes to learning information. I’m impressed.n#Person2#: Thanks. Reading is the best way to learn and remember new information. Are you using active reading right now?n#Person1#: Hmmm….. I’ll have to think about that.nnn#Person |

George describes the activities he’s doing in order to read his books fast. George shows the notebook to mom and explains how to take notes.nn |

| Summarize this dialog:n#Person1#: Good morning, sir. May I see your passport?n#Person2#: Yes, of course. Here it is.n#Person1#: Thank you. Are all these yours?n#Person2#: No, some of them are my friend’s. Do you want her passport, too?n#Person1#: Yes, please. Do you have any carry-on items?n#Person2#: Do you want me to put it on the belt, too?n#Person1#: Yes, please. Would you please open this big suitcase?n#Person2#: OK. Just a moment. It’s just some clothes and toiletries.n#Person1#: OK. Do you have anything to declare?n#Person2#: I think there is nothing.n#Person1#: Any fresh food, live plants, or animals?n#Person2#: Oh, no. None of that.n#Person1#: Thank you. You may lock up your suitcase. Here are your passports.n#Person2#: Thanks a lot.n—nSummary:nnn### Response:n |

#Person1# sees #Person2#’s and #Person2#’s friend’s passport and checks their luggage. #Person2# tells #Person1# they’ve nothing to declare. #Person1# lets them go. |

> I think there is nothing.nnn—n**Categories:**nn- [Conversation (32)](../conversation/32.md)n – [TSA (4)](../conversation/32/4.md)nn### Key:nn :information_source: 31 (TSA)n:information_source: 32 (TSA)nn—-n* Any copyright |

The immigration official asks #Person2# to show his passport and open his big suitcase. #Person2# has nothing to declare and he may lock up his suitcase.nn |

| Summarize this dialog:n#Person1#: Chinese tea is famous all over the world. I want to buy several boxes.n#Person2#: There are various kinds of tea in China. It depends on the taste and effect.n#Person1#: Well, I do not know Chinese tea at all.n#Person2#: This is green tea, you can drink it in summer. It can relieve summer-heat and it is good for maintaining your lungs.n#Person1#: Sounds interesting. What about this?n#Person2#: It is black tea, it has a strong taste and promotes digestion.n#Person1#: What’s this? It smells fragrant.n#Person2#: It is jasmine tea of high quality. It is fragrant and has a pleasant taste.n#Person1#: I will take the jasmine tea. Could you pack it with the wrapping paper and ribbon? It is a gift for my friend.n#Person2#: No problem. Wait for a moment.n—nSummary:nnn### Response:n |

#Person2# recommends green tea, black tee, and jasmine tee to #Person1# who wants to buy Chinese tea and #Person1# decides to take the jasmine tea. |

#[English]#: Chinese tea is famous all over the world. I want to buy several boxes.n#[French]#: Il y a différentes sorts de thé chinois au pays. C’est selon le goût, et les effets qu’on veut obtenir.n#[Spanish]#: El té chino es muy famosos, tengo que comprar varias cajas.n#[German]#: Ich mö |

#Person1# wants to buy several boxes of Chinese tea, with different kinds of tea. #Person2# helps #Person1# to select and will pack it up.nn |

- Results for fine-tuning the Llama-2 13B model on the Dialogsum dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |

| Summarize this dialog:n#Person1#: Mrs. Thompson once ditheist, Jason.n#Person2#: Why? We shouldn’t be in trouble or anything? In fact we were super active during the entire class discussion this morning.n#Person1#: That’s just it. I don’t think it’s a bad thing at all. I think she is going to ask us to run for class monitor.n#Person2#: Whoa! Really? That’s a lot of responsibility.n#Person1#: Well, I think you’d be great at it. Besides, it’s a big honor to be asked to do it. It means that Mrs. Thompson sees us as leaders.n#Person2#: I’m not sure if I can do it by myself, though. Could we do it together?n#Person1#: Let’s ask Mrs. Thompson. I hear her coming in from the hallway now.n—nSummary:nnn### Response:n |

#Person1# tells Jason Mrs. Thompson once ditheist and #Person1# guesses she wants them to run for class monitors. They’ll ask Mrs. Thompson whether they can do this together. |

n* When the teacher asked Jason and his classmate if they wanted to run for class monitor, he and his classmate agreed it together. Both boys are Christian, but they are not friends in class.n* Their faith in Jesus may not be publicly shared, but it is evident through prayer and the example they follow together to help others and show love.nn—n## 1654nn**[Situation:** Students are talking about the recent |

Mrs. Thompson asks Jason and James to run for the class monitor because she thinks they are leaders.nn |

| Summarize this dialog:n#Person1#: Hey, let’s pull over and look at that house over there.n#Person2#: Yes, that looks great! Is it on our Open House list?n#Person1#: Yes, it is on our list.n#Person2#: Well, it looks great from the curb. Let’s go on inside.n#Person1#: Let’s sign the registry. The realtor looks kind of busy right now.n#Person2#: OK, let’s go check out the kitchen next.n#Person1#: Oh look, they have some refreshments in here.n#Person2#: Make sure that you pick up a flyer with the information about the home.n#Person1#: Does it say how much they are asking for this house?n#Person2#: Yes, it seems to have been discounted from two hundred and thirty-five thousand to two hundred and twenty-five thousandn—nSummary:nnn### Response:n |

#Person1# and #Person2# come to see a house. They check out the inside and talk about its price. |

This is an AMI response to the following stimulus.nnPerson 2: nYes, that looks great! Is it on our Open House list? nYes, it is on our list.nWell, it looks great from the curb. Let’s go on inside.nLet’s sign the registry. The realtor looks kind of busy right now.nOK, let’s go check out the kitchen next.nOh look, they have some |

#Person1# and #Person2# are looking around a house which is on their Open House list. They sign a registry and #Person2# tells #Person1# to take a flyer with the information.nn |

| Summarize this dialog:n#Person1#: How are you, Mr. Wilson? I am Tina.n#Person2#: Glad to meet you, Mrs. Tina.n#Person1#: Please have a seat. I know our employment of forcer has already given you the information about your employee contract, but I’d like to go over the main details again before signing. First, you’ll be getting a monthly salary, with one month paid vacation after one year of service.n#Person2#: That’s what I understand.n#Person1#: You’ll be covered by our medical plan while on duty. Since you’ll be middle management, you’re expected to be available up to 1. 5 hours past normal working hours. Any approved time over that will be paid at time and a half, which you can take as salary or time off.n#Person2#: Exactly my understanding.n#Person1#: A reasonable number of sick days will be covered by the company. Any extended illness will be covered by insurance. Have you read the other terms of the contract?n#Person2#: Yes, I have.n#Person1#: Do you have any other questions?n#Person2#: Just one. I noticed an item about flex-time. Is that a possibility for me?n#Person1#: Yes, it is, but you’ll have to discuss the details with your manager.n#Person2#: That’s acceptable.n#Person1#: Good. Now, if you’ll just sign here, you can start work in 3 days.n—nSummary:nnn### Response:n |

Mr.Wilson describes the elementary information about the employment contract to Tina. He mentions monthly salary, one month paid vacation, medical plans, extended working hours and flex-time. |

nNo, the summaries aren’t right. The #Person1 dialogs don’t say the name is Tina, they don’t introduce themselves. I think they both know each others’ names, so that’s unneeded.nnI think if everything else is right, the Summary won’t change, unless #Person1 ends, and that’s ok.nn |

Tina meets with Mr. Wilson to go over the main details of Tina’s employee contract. The two discuss the monthly salary and medical plan. Tina has no questions and will start work in 3 days.nn |

| Summarize this dialog:n#Person1#: Jack, what is your favorite subject?n#Person2#: My favorite subject? Well, I think I prefer science. Yes, science, I find it very interesting. The science teacher makes the subject very exciting. What about you?n#Person1#: I always do very well in the maths tests. I also like geography. I like learning about people and the places where they live. Are there any subjects that you don’t like Jack?n#Person2#: I don’t like physics very much. It’s not easy to understand, most of the children are bored with it.n—nSummary:nnn### Response:n |

Jack’s favorite subject is science while he doesn’t like physics very much. #Person1# likes maths and geography. |

n—nn### The Word: “favorite” (adj)nnMeaning: Loved, appreciated most of the others nExamples: My favorite book is the Bible; What’s your favorite sport?; My favorite color is blue.nn—nnnn### What is your ____ subject? (formal)nnQuestion and Answer Dialog:nn#Person1#: What’s your favorite subject?n# |

Jack and Jack are talking about their favorite and the least liked subjects.nn |

| Summarize this dialog:n#Person1#: Hi, Maggie. I saw your painting in the end of year school art exhibition that all the parents went to. It was great.n#Person2#: Well, our art teacher seemed really pleased with it, but I’m not sure what the parents thought about.n#Person1#: Oh, don’t worry. I only heard them saying good things about it.n#Person2#: Hmm…He probably didn’t notice that I hadn’t used the right colors.n#Person1#: I don’t think anyone noticed.n#Person2#: No, but I did. But it was too late to do anything about it. Well, next time I’ll do something better hopefully.n—nSummary:nnn### Response:n |

#Person1# saw Maggie’s painting and thinks it was great, but Maggie worries that she didn’t use the right colors and hopes she’ll do something better next time. |

This summary should highlight the key points and conclusions from the discussion.nn# Respond to Person1:n[Response summary]nn—n### Response:nThis summary should highlight the key points and conclusions from the discussion.nn# Respond to Person2:n[Response summary]nn### Person1:n[Person1 summary]n—n### Person2:n[Person2 summary]nn### Personn—n |

#Person1# saw Maggie’s painting and told Maggie that a lot of parents said great things about Maggie’s painting. Maggie does not think so.nn |

- Results for fine-tuning the Llama 2 70B model on the Dialogsum dataset:

| Inputs |

Ground Truth |

Response from Non-Fine-Tuned Model |

Response from Fine-Tuned Model |