In-context learning with Large Language Models (LLMs) has emerged as a promising avenue of research in Dialog State Tracking (DST). However, the best-performing in-context learning methods involve retrieving and adding similar examples to the prompt, requiring access to labeled training data. Procuring such training data for a wide range of domains and applications is time-consuming, expensive, and, at times, infeasible. While zero-shot learning requires no training data, it significantly lags behind the few-shot setup. Thus, ‘Can we efficiently generate synthetic data for any dialogue schema…Apple Machine Learning Research

Multichannel Voice Trigger Detection Based on Transform-average-concatenate

This paper was accepted at the workshop HSCMA at ICASSP 2024.

Voice triggering (VT) enables users to activate their devices by just speaking a trigger phrase. A front-end system is typically used to perform speech enhancement and/or separation, and produces multiple enhanced and/or separated signals. Since conventional VT systems take only single-channel audio as input, channel selection is performed. A drawback of this approach is that unselected channels are discarded, even if the discarded channels could contain useful information for VT. In this work, we propose multichannel acoustic…Apple Machine Learning Research

VideoPrism: A foundational visual encoder for video understanding

An astounding number of videos are available on the Web, covering a variety of content from everyday moments people share to historical moments to scientific observations, each of which contains a unique record of the world. The right tools could help researchers analyze these videos, transforming how we understand the world around us.

Videos offer dynamic visual content far more rich than static images, capturing movement, changes, and dynamic relationships between entities. Analyzing this complexity, along with the immense diversity of publicly available video data, demands models that go beyond traditional image understanding. Consequently, many of the approaches that best perform on video understanding still rely on specialized models tailor-made for particular tasks. Recently, there has been exciting progress in this area using video foundation models (ViFMs), such as VideoCLIP, InternVideo, VideoCoCa, and UMT). However, building a ViFM that handles the sheer diversity of video data remains a challenge.

With the goal of building a single model for general-purpose video understanding, we introduced “VideoPrism: A Foundational Visual Encoder for Video Understanding”. VideoPrism is a ViFM designed to handle a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering (QA). We propose innovations in both the pre-training data as well as the modeling strategy. We pre-train VideoPrism on a massive and diverse dataset: 36 million high-quality video-text pairs and 582 million video clips with noisy or machine-generated parallel text. Our pre-training approach is designed for this hybrid data, to learn both from video-text pairs and the videos themselves. VideoPrism is incredibly easy to adapt to new video understanding challenges, and achieves state-of-the-art performance using a single frozen model.

| VideoPrism is a general-purpose video encoder that enables state-of-the-art results over a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering, by producing video representations from a single frozen model. |

Pre-training data

A powerful ViFM needs a very large collection of videos on which to train — similar to other foundation models (FMs), such as those for large language models (LLMs). Ideally, we would want the pre-training data to be a representative sample of all the videos in the world. While naturally most of these videos do not have perfect captions or descriptions, even imperfect text can provide useful information about the semantic content of the video.

To give our model the best possible starting point, we put together a massive pre-training corpus consisting of several public and private datasets, including YT-Temporal-180M, InternVid, VideoCC, WTS-70M, etc. This includes 36 million carefully selected videos with high-quality captions, along with an additional 582 million clips with varying levels of noisy text (like auto-generated transcripts). To our knowledge, this is the largest and most diverse video training corpus of its kind.

|

| Statistics on the video-text pre-training data. The large variations of the CLIP similarity scores (the higher, the better) demonstrate the diverse caption quality of our pre-training data, which is a byproduct of the various ways used to harvest the text. |

Two-stage training

The VideoPrism model architecture stems from the standard vision transformer (ViT) with a factorized design that sequentially encodes spatial and temporal information following ViViT. Our training approach leverages both the high-quality video-text data and the video data with noisy text mentioned above. To start, we use contrastive learning (an approach that minimizes the distance between positive video-text pairs while maximizing the distance between negative video-text pairs) to teach our model to match videos with their own text descriptions, including imperfect ones. This builds a foundation for matching semantic language content to visual content.

After video-text contrastive training, we leverage the collection of videos without text descriptions. Here, we build on the masked video modeling framework to predict masked patches in a video, with a few improvements. We train the model to predict both the video-level global embedding and token-wise embeddings from the first-stage model to effectively leverage the knowledge acquired in that stage. We then randomly shuffle the predicted tokens to prevent the model from learning shortcuts.

What is unique about VideoPrism’s setup is that we use two complementary pre-training signals: text descriptions and the visual content within a video. Text descriptions often focus on what things look like, while the video content provides information about movement and visual dynamics. This enables VideoPrism to excel in tasks that demand an understanding of both appearance and motion.

Results

We conducted extensive evaluation on VideoPrism across four broad categories of video understanding tasks, including video classification and localization, video-text retrieval, video captioning, question answering, and scientific video understanding. VideoPrism achieves state-of-the-art performance on 30 out of 33 video understanding benchmarks — all with minimal adaptation of a single, frozen model.

|

| VideoPrism compared to the previous best-performing FMs. |

Classification and localization

We evaluate VideoPrism on an existing large-scale video understanding benchmark (VideoGLUE) covering classification and localization tasks. We found that (1) VideoPrism outperforms all of the other state-of-the-art FMs, and (2) no other single model consistently came in second place. This tells us that VideoPrism has learned to effectively pack a variety of video signals into one encoder — from semantics at different granularities to appearance and motion cues — and it works well across a variety of video sources.

|

| VideoPrism outperforms state-of-the-art approaches (including CLIP, VATT, InternVideo, and UMT) on the video understanding benchmark. In this plot, we show the absolute score differences compared with the previous best model to highlight the relative improvements of VideoPrism. On Charades, ActivityNet, AVA, and AVA-K, we use mean average precision (mAP) as the evaluation metric. On the other datasets, we report top-1 accuracy. |

Combining with LLMs

We further explore combining VideoPrism with LLMs to unlock its ability to handle various video-language tasks. In particular, when paired with a text encoder (following LiT) or a language decoder (such as PaLM-2), VideoPrism can be utilized for video-text retrieval, video captioning, and video QA tasks. We compare the combined models on a broad and challenging set of vision-language benchmarks. VideoPrism sets the new state of the art on most benchmarks. From the visual results, we find that VideoPrism is capable of understanding complex motions and appearances in videos (e.g., the model can recognize the different colors of spinning objects on the window in the visual examples below). These results demonstrate that VideoPrism is strongly compatible with language models.

|

| VideoPrism achieves competitive results compared with state-of-the-art approaches (including VideoCoCa, UMT and Flamingo) on multiple video-text retrieval (top) and video captioning and video QA (bottom) benchmarks. We also show the absolute score differences compared with the previous best model to highlight the relative improvements of VideoPrism. We report the Recall@1 on MASRVTT, VATEX, and ActivityNet, CIDEr score on MSRVTT-Cap, VATEX-Cap, and YouCook2, top-1 accuracy on MSRVTT-QA and MSVD-QA, and WUPS index on NExT-QA. |

| We show qualitative results using VideoPrism with a text encoder for video-text retrieval (first row) and adapted to a language decoder for video QA (second and third row). For video-text retrieval examples, the blue bars indicate the embedding similarities between the videos and the text queries. |

Scientific applications

Finally, we tested VideoPrism on datasets used by scientists across domains, including fields such as ethology, behavioral neuroscience, and ecology. These datasets typically require domain expertise to annotate, for which we leverage existing scientific datasets open-sourced by the community including Fly vs. Fly, CalMS21, ChimpACT, and KABR. VideoPrism not only performs exceptionally well, but actually surpasses models designed specifically for those tasks. This suggests tools like VideoPrism have the potential to transform how scientists analyze video data across different fields.

Conclusion

With VideoPrism, we introduce a powerful and versatile video encoder that sets a new standard for general-purpose video understanding. Our emphasis on both building a massive and varied pre-training dataset and innovative modeling techniques has been validated through our extensive evaluations. Not only does VideoPrism consistently outperform strong baselines, but its unique ability to generalize positions it well for tackling an array of real-world applications. Because of its potential broad use, we are committed to continuing further responsible research in this space, guided by our AI Principles. We hope VideoPrism paves the way for future breakthroughs at the intersection of AI and video analysis, helping to realize the potential of ViFMs across domains such as scientific discovery, education, and healthcare.

Acknowledgements

This blog post is made on behalf of all the VideoPrism authors: Long Zhao, Nitesh B. Gundavarapu, Liangzhe Yuan, Hao Zhou, Shen Yan, Jennifer J. Sun, Luke Friedman, Rui Qian, Tobias Weyand, Yue Zhao, Rachel Hornung, Florian Schroff, Ming-Hsuan Yang, David A. Ross, Huisheng Wang, Hartwig Adam, Mikhail Sirotenko, Ting Liu, and Boqing Gong. We sincerely thank David Hendon for their product management efforts, and Alex Siegman, Ramya Ganeshan, and Victor Gomes for their program and resource management efforts. We also thank Hassan Akbari, Sherry Ben, Yoni Ben-Meshulam, Chun-Te Chu, Sam Clearwater, Yin Cui, Ilya Figotin, Anja Hauth, Sergey Ioffe, Xuhui Jia, Yeqing Li, Lu Jiang, Zu Kim, Dan Kondratyuk, Bill Mark, Arsha Nagrani, Caroline Pantofaru, Sushant Prakash, Cordelia Schmid, Bryan Seybold, Mojtaba Seyedhosseini, Amanda Sadler, Rif A. Saurous, Rachel Stigler, Paul Voigtlaender, Pingmei Xu, Chaochao Yan, Xuan Yang, and Yukun Zhu for the discussions, support, and feedback that greatly contributed to this work. We are grateful to Jay Yagnik, Rahul Sukthankar, and Tomas Izo for their enthusiastic support for this project. Lastly, we thank Tom Small, Jennifer J. Sun, Hao Zhou, Nitesh B. Gundavarapu, Luke Friedman, and Mikhail Sirotenko for the tremendous help with making this blog post.

Knowledge distillation method for better vision-language models

Method preserves knowledge encoded in teacher model’s attention heads even when student model has fewer of them.Read More

Gemini models are coming to Performance Max

New improvements and AI-powered features are coming to Performance Max, including Gemini models for text generation.Read More

New improvements and AI-powered features are coming to Performance Max, including Gemini models for text generation.Read More

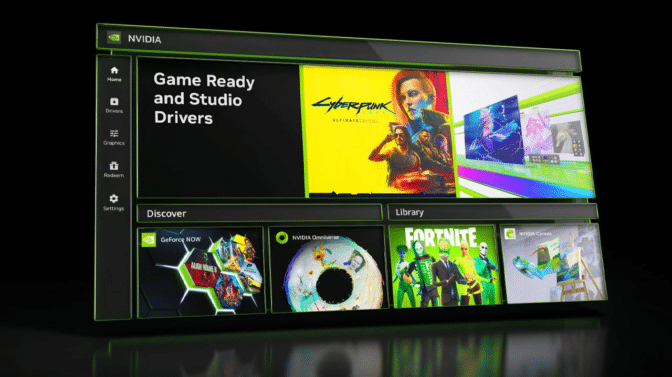

Add It to the Toolkit: February Studio Driver and NVIDIA App Beta Now Available

Editor’s note: This post is part of our weekly In the NVIDIA Studio series, which celebrates featured artists, offers creative tips and tricks, and demonstrates how NVIDIA Studio technology improves creative workflows. We’re also deep diving on new GeForce RTX 40 Series GPU features, technologies and resources, and how they dramatically accelerate content creation.

The February NVIDIA Studio Driver, designed specifically to optimize creative apps, is now available for download. Developed in collaboration with app developers, Studio Drivers undergo extensive testing to ensure seamless compatibility with creative apps while enhancing features, automating processes and speeding workflows.

Creators can download the latest driver on the public beta of the new NVIDIA app, the essential companion for creators and gamers with NVIDIA GPUs in their PCs and laptops. The NVIDIA app beta is a first step to modernize and unify the NVIDIA Control Panel, GeForce Experience and RTX Experience apps.

The NVIDIA app simplifies the process of keeping PCs updated with the latest NVIDIA drivers, enables quick discovery and installation of NVIDIA apps like NVIDIA Broadcast and NVIDIA Omniverse, unifies the GPU control center, and introduces a redesigned in-app overlay for convenient access to powerful recording tools. Download the NVIDIA app beta today.

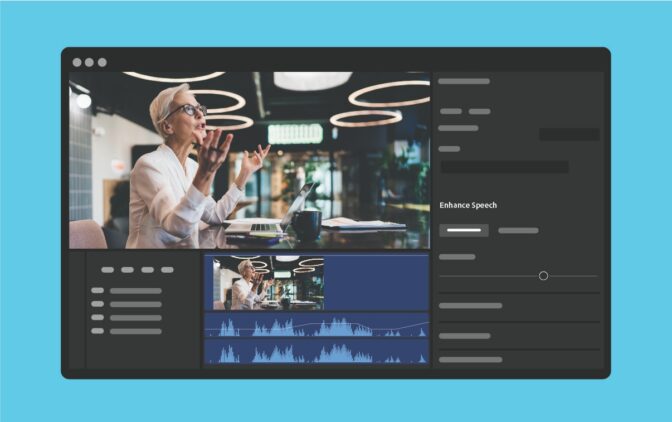

Adobe Premiere Pro’s AI-powered Enhance Speech tool is now available in general release. Accelerated by NVIDIA RTX, the new feature removes unwanted noise and improves the quality of dialogue clips so they sound professionally recorded. It’s 75% faster on a GeForce RTX 4090 laptop GPU compared with an RTX 3080 Ti.

Have a Chat with RTX, the tech demo app that lets GeForce RTX owners personalize a large language model connected to their own content. Results are fast and secure since it runs locally on a Windows RTX PC or workstation. Download Chat with RTX today.

And this week In the NVIDIA Studio, filmmaker James Matthews shares his short film, Dive, which was created with an Adobe Premiere Pro-powered workflow supercharged by his ASUS ZenBook Pro NVIDIA Studio laptop with a GeForce RTX 4070 graphics card.

Going With the Flow

Matthews’ goal with Dive was to create a visual and auditory representation of what it feels like to get swallowed up in the creative editing process.

“When I’m really deep into an edit, I sometimes feel like I’m fully immersed into the film and the editing process itself,” he said. “It’s almost like a flow state, where time stands still and you are one with your own creativity.”

To capture and visualize that feeling, Matthews used the power of his ASUS ZenBook Pro NVIDIA Studio laptop equipped with a GeForce RTX 4070 graphics card.

He started by brainstorming — listening to music and sketching conceptual images with pencil and paper. Then, Matthews added a song to his Adobe Premiere Pro timeline and created a shot list, complete with cuts and descriptions of focal range, speed, camera movement, lighting and other details.

Next, he planned location and shooting times, paying special attention to lighting conditions.

“I always have my Premiere Pro timeline up so I can really see and feel what I need to create from the images I originally drew while building the concept in my head,” Matthews said. “This helps get the pacing of each shot right, by watching it back and possibly adding it into the timeline for a test.”

Then, Matthews started editing the footage in Premiere Pro, aided by his Studio laptop. His dedicated GPU-based NVIDIA video encoder (NVENC) enabled buttery-smooth playback and scrubbing of his high-resolution and multi-stream footage, saving countless hours.

Matthews’ RTX GPU accelerated a variety of AI-powered Adobe video editing tools, such as Enhance Speech, Scene Edit Detection and Auto Color, which applies color corrections with just a few clicks.

Finally, Matthews added sound design before exporting the final files twice as fast thanks to NVENC’s dual AV1 encoders.

“The entire edit used GPU acceleration,” he shared. “Effects in Premiere Pro, along with the NVENC video encoders on the GPU, unlocked a seamless workflow and essentially allowed me to get into my flow state faster.”

Watch Matthews’ content on YouTube.

Follow NVIDIA Studio on Facebook, Instagram and X . Access tutorials on the Studio YouTube channel and get updates directly in your inbox by subscribing to the Studio newsletter.

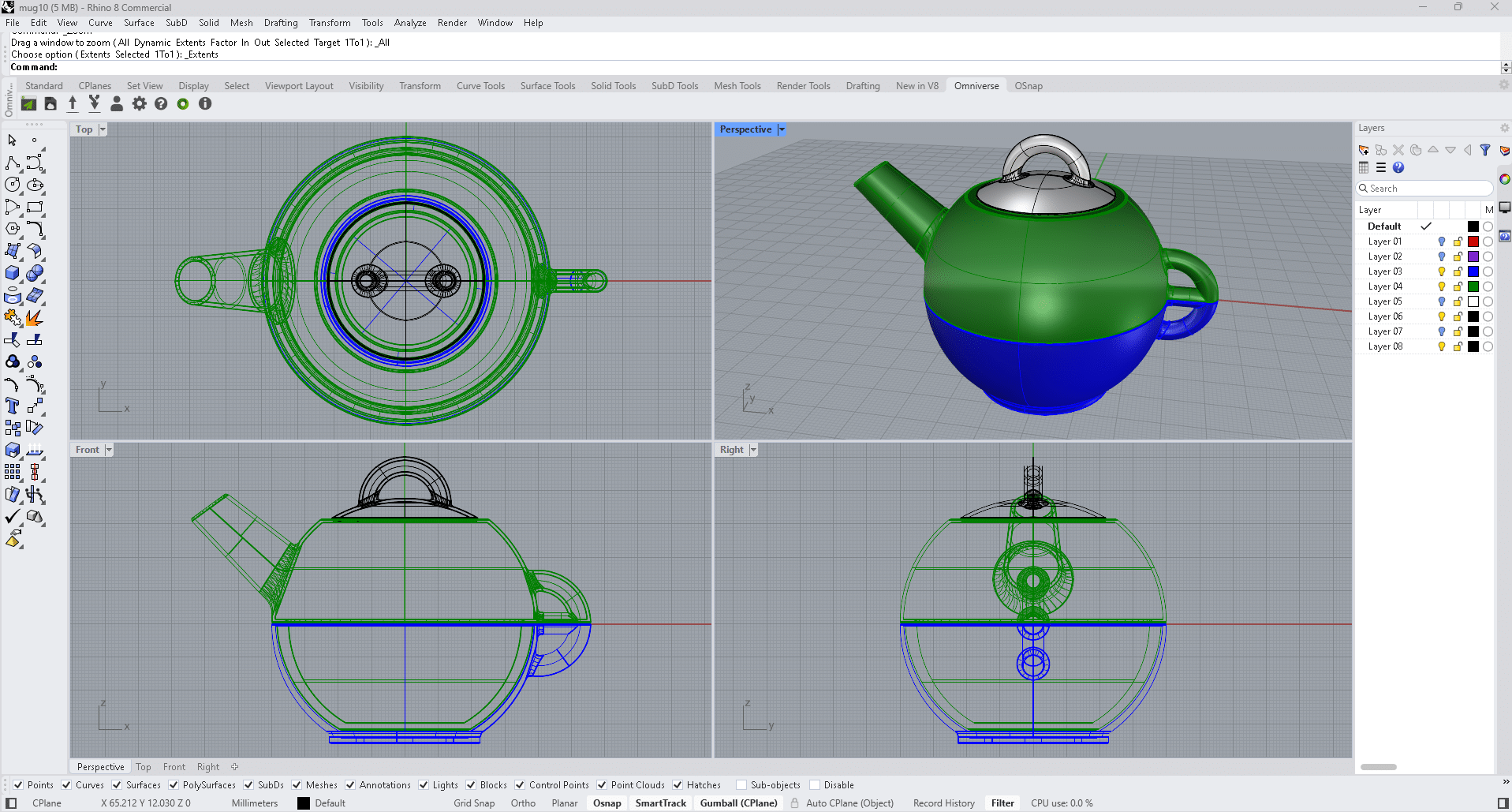

Into the Omniverse: Rhino 3D Launches OpenUSD Features to Enhance 3D Modeling and Development

Editor’s note: This post is part of Into the Omniverse, a series focused on how artists, developers and enterprises can transform their workflows using the latest advances in OpenUSD and NVIDIA Omniverse.

The combination of powerful 3D tools and groundbreaking technologies can transform the way designers bring their visions to life — and Universal Scene Description, or OpenUSD, is helping enable that synergy. It’s the framework on which the NVIDIA Omniverse platform that enables the development of OpenUSD-based tools and 3D workflows is based.

Rhinoceros, commonly known as Rhino, or Rhino 3D, is a powerful computer-aided design (CAD) and 3D modeling software used across industries — from education and jewelry design to architecture and marine modeling. The most recent software release includes support for OpenUSD export, among other updates, establishing it among the many applications embracing the new 3D standard.

Turning Creativity Into CAD Reality

Tanja Langgner, 3D artist and illustrator, grew up in Austria and now lives in a converted pigsty in the English countryside. With a background in industrial design, she’s had the opportunity to work with a slew of design agencies across Europe.

For the past decade, she’s undertaken freelance work in production and visualization, helping clients with tasks ranging from concept design and ideation to CAD and 3D modeling. Often doing industrial design work, Langgner relies on Rhino to construct CAD models, whether for production evaluation or rendering purposes.

When faced with designs requiring intricate surface patterns, “Rhino’s Grasshopper, a parametric modeler, is excellent in creating complex parametric shapes,” she said.

Langgner is no stranger to OpenUSD. She uses it to transfer assets easily from one application to another, allowing her to visualize her work more efficiently. With the new Rhino update, she can now export OpenUSD files from Rhino, further streamlining her design workflow.

Mathew Schwartz, an assistant professor in architecture and design at the New Jersey Institute of Technology, also uses Rhino and OpenUSD in his 3D workflows. Schwartz’s research and design lab, SiBORG, focuses on understanding and improving design workflows, especially with regard to accessibility, human factors and automation.

With OpenUSD, he can combine his research, Python code, 3D environments and renders, with his favorite tools in Omniverse.

Schwartz, along with Langgner, recently joined a community livestream, where he shared more details about his research — including how he’s made navigation graphs that show how someone can move in a space if they’re using a wheelchair or crutches in real-time. With his industrial design experience, he demonstrated computation using Rhino 3D and the use of generative AI for a seamless design process.

“With OpenUSD and Omniverse, we’ve been able to expand the scope of our research, as we can easily combine data analysis and visualization with the design process,” he said.

Learn more by watching the replay of the community livestream:

Rhino 3D Updates Simplify 3D Collaboration

Rhino 8, available now, brings significant enhancements to the 3D modeling experience. It enables the export of meshes, mesh vertex colors, physically based rendering materials and textures so users can seamlessly share and collaborate on 3D designs with enhanced visual elements.

The latest Rhino 8 release also includes improvements to:

- Modeling: New features, including PushPull direct editing, a ShrinkWrap function for creating water-tight meshes around various geometries, enhanced control over subdivision surfaces with the SubD Crease control tool, and improved functionality for smoother surface fillets.

- Drawing and illustration: Precision-boosting enhancements to clipping and sectioning operations, a new feature for creating reflected ceiling plans, major improvements to linetype options and enhanced UV mapping for better texture coordination.

- Operating systems: A faster-than-ever experience for Mac users thanks to Apple silicon processors and Apple Metal display technology, along with significantly accelerated rendering with the updated Cycles engine.

- Development: New Grasshopper components covering annotations, blocks, materials and user data, accompanied by a new, enhanced script editor.

Future Rhino updates will feature an expansion of export capabilities to include NURBS curves and surfaces, subdivision modeling and the option to import OpenUSD content.

The Rhino team actively seeks user feedback on desired import platforms and applications, continually working to make OpenUSD files widely accessible and adaptable across 3D environments.

Get Plugged Into the World of OpenUSD

Learn more about OpenUSD and meet experts at NVIDIA GTC, the conference for the era of AI, taking place March 18-21 at the San Jose Convention Center. Don’t miss:

- Members of the Alliance for OpenUSD (AOUSD) speaking on Monday, March 18, about the power of OpenUSD as a standard for the 3D internet.

- OpenUSD Day on Tuesday, March 19, to learn how to build generative AI-enabled 3D pipelines and tools for industrial digitalization.

- Hands-on OpenUSD training for all skill levels, from learning the fundamentals to building OpenUSD-based applications.

#OpenUSD Day will be at #GTC24.

You won’t want to miss this full day of sessions on building #genAI-enabled 3D pipelines & tools.

https://t.co/AqUmID7sRo pic.twitter.com/VHq7TiR1NF

— NVIDIA Omniverse (@nvidiaomniverse) January 29, 2024

Get started with NVIDIA Omniverse by downloading the standard license free, access OpenUSD resources, and learn how Omniverse Enterprise can connect your team. Stay up to date on Instagram, Medium and X. For more, join the Omniverse community on the forums, Discord server, Twitch and YouTube channels.

Featured image courtesy of Tanja Langgner.

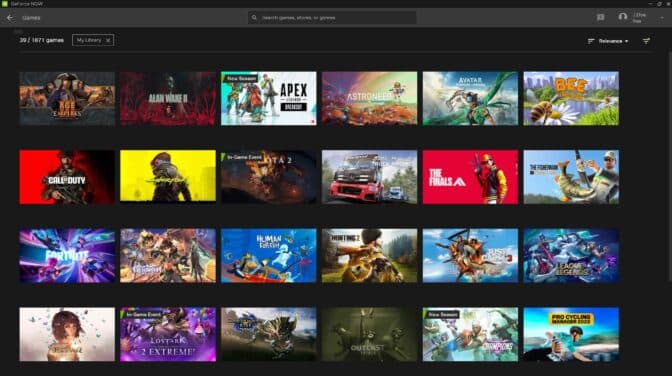

Time to Play: GeForce NOW Now Offers 1,800 Games to Stream

Top-tier games from publishing partners Bandai Namco Entertainment and Inflexion Games are joining GeForce NOW this week as the cloud streaming service’s fourth-anniversary celebrations continue.

Eleven new titles join the over 1,800 supported games in the GeForce NOW library, including Nightingale from Inflexion Games and Bandai Namco Entertainment’s Tales of Arise, Katamari Damacy REROLL and Klonoa Phantasy Reverie Series.

“Happy fourth anniversary, GeForce NOW!” cheered Jarrett Lee, head of publishing at Inflexion Games. “The platform’s ease of access and seamless performance comprise a winning combination, and we’re excited to see how cloud gaming evolves.”

Bigger Than Your Gaming Backlog

GeForce NOW offers over 1,800 games supported in the cloud, including over 100 free-to-play titles. That’s more than enough to play a different game every day for nearly five years.

The expansive GeForce NOW library supports games from popular digital stores Steam, Xbox — including supported PC Game Pass titles — Epic Games Store, Ubisoft Connect and GOG.com. From indie games to triple-A titles, there’s something for everyone to play.

Explore sprawling open worlds in the neon-drenched streets of Night City in Cyberpunk 2077, or unearth ancient secrets in the vast landscapes of Assassin’s Creed Valhalla. Test skills against friends in the high-octane action of Apex Legends or Fortnite, strategize on the battlefield in Age of Empires IV or gather the ultimate party in Baldur’s Gate 3.

Build a dream farm in Stardew Valley, explore charming worlds in Hollow Knight or build a thriving metropolis in Cities: Skylines II.

Members can also catch the latest titles in the cloud, including the newly launched dark-fantasy adventure game The Inquisitor and Ubisoft’s Skull and Bones.

Dedicated rows in the GeForce NOW app help members find the perfect game to stream, and tags indicate when sales or downloadable content are available. GeForce NOW even has game-library syncing capabilities for Steam, Xbox and Ubisoft Connect so that supported games automatically sync to members’ cloud gaming libraries for easy access.

Access titles without waiting for them to download or worrying about system specs. Plus, Ultimate members gain exclusive access to gaming servers to get to their games faster.

Let’s Get Crafty

Set out on an adventure into the mysterious and dangerous Fae Realms of Nightingale, the highly anticipated shared-world survival crafting game from Inflexion Games. Become an intrepid Realmwalker and explore, craft, build and fight across a visually stunning magical fantasy world inspired by the Victorian era.

Venture forth alone or with up to six other players in an online, shared world. The game features epic action, a variety of fantastical creatures and a Realm Card system that allows players to travel between realms and reshape landscapes.

Experience the magic of the Fae Realms in stunning resolution with RTX ON.

Roll On Over to the Cloud

Get ready for more fantasy, a touch of royalty and even some nostalgia with the latest Bandai Namco Entertainment titles coming to the cloud. Tales of Arise, Katamari Damacy REROLL, Klonoa Phantasy Reverie Series, PAC-MAN MUSEUM+ and PAC-MAN WORLD Re-PAC are now available for members to stream.

Embark on a mesmerizing journey in the fantastical world of Tales of Arise and unravel a gripping narrative that transcends the boundaries of imagination. Or roll into the whimsical, charming world of Katamari Damacy REROLL — control a sticky ball and roll up everything in its path to create colorful, celestial bodies.

Indulge in a nostalgic gaming feast with Klonoa Phantasy Reverie Series, PAC-MAN MUSEUM+ and PAC-MAN WORLD Re-PAC. The Klonoa series revitalizes dreamlike adventures, blending fantasy and reality for a captivating experience. Meanwhile, PAC-MAN MUSEUM+ invites players to munch through PAC-MAN’s iconic history, showcasing the timeless charm of the beloved yellow icon. For those seeking a classic world with a modern twist, PAC-MAN WORLD Re-PAC delivers an adventure packed with excitement and familiar ghosts.

Face Your Fate

Dive into an adrenaline-fueled journey with Terminator: Dark Fate – Defiance, where strategic prowess decides the fate of mankind against machines. In a world taken over by machines, the greatest threats remaining may come not from the machines themselves but from other human survivors.

It’s part of 11 new games this week:

- Le Mans Ultimate (New release on Steam, Feb. 20)

- Nightingale (New release on Steam, Feb. 20)

- Terminator: Dark Fate – Defiance (New release on Steam, Feb. 21)

- Garden Life: A Cozy Simulator (New release on Steam, Feb. 22)

- Pacific Drive (New release on Steam, Feb. 22)

- Solium Infernum (New release on Steam, Feb. 22)

- Katamari Damacy REROLL (Steam)

- Klonoa Phantasy Reverie Series (Steam)

- PAC-MAN MUSEUM+ (Steam)

- PAC-MAN WORLD Re-PAC (Steam)

- Tales of Arise (Steam)

And several titles in the GeForce NOW library that were in early access are launching full releases this week:

- Last Epoch (New 1.0 release on Steam, Feb. 21)

- Myth of Empires (New 1.0 release on Steam, Feb. 21)

- Sons of the Forest (New 1.0 release on Steam, Feb. 22)

What are you planning to play this weekend? Let us know on X or in the comments below.

Off to the cloud…

Who’s coming with?

—

NVIDIA GeForce NOW (@NVIDIAGFN) February 20, 2024

FOMO Alert: Discover 7 Unmissable Reasons to Attend GTC 2024

“I just got back from GTC and ….”

In four weeks, those will be among the most powerful words in your industry. But you won’t be able to use them if you haven’t been here.

NVIDIA’s GTC 2024 transforms the San Jose Convention Center into a crucible of innovation, learning and community from March 18-21, marking a return to in-person gatherings that can’t be missed.

Tech enthusiasts, industry leaders and innovators from around the world are set to present and explore over 900 sessions and close to 300 exhibits.

They’ll dive into the future of AI, computing and beyond, with contributions from some of the brightest minds at companies such as Amazon, Amgen, Character.AI, Ford Motor Co., Genentech, L’Oréal, Lowe’s, Lucasfilm and Industrial Light & Magic, Mercedes-Benz, Pixar, Siemens, Shutterstock, xAI and many more.

Among the most anticipated events is the Transforming AI Panel, featuring the original architects behind the concept that revolutionized the way we approach AI today: Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin.

All eight authors of “Attention Is All You Need,” the seminal 2017 NeurIPS paper that introduced the trailblazing transformer neural network architecture will appear in person at GTC on a panel hosted by NVIDIA Founder and CEO Jensen Huang.

Located in the vibrant heart of Silicon Valley, GTC stands as a pivotal gathering where the convergence of technology and community shapes the future. This conference offers more than just presentations; it’s a collaborative platform for sharing knowledge and sparking innovation.

- Exclusive Insights: Last year, Huang announced a “lightspeed” leap in computing and partnerships with giants like Microsoft to set the stage. This year, anticipate more innovations at the SAP Center, giving attendees a first look at the next transformative breakthroughs.

- Networking Opportunities: GTC’s networking events are designed to transform casual encounters into pivotal career opportunities. Connect directly with industry leaders and innovators, making every conversation a potential gateway to your next big role or project.

- Cutting-Edge Exhibits: Step into the future with exhibits that showcase the latest in AI and robotics. Beyond mere displays, these exhibits offer hands-on learning experiences, providing attendees with invaluable knowledge to stay ahead.

AI is spilling out in all directions, and GTC is the best way to capture it all. Pictured: The latest installation from AI artist Refik Anadol, whose work will be featured at GTC. - Diversity and Innovation: Begin your day at the Women In Tech breakfast. This, combined with unique experiences like generative AI art installations and street food showcases, feeds creativity and fosters innovation in a relaxed setting.

- Learn From the Best: Engage with sessions led by visionaries from organizations such as Disney Research, Google DeepMind, Johnson & Johnson Innovative Medicine, Stanford University and beyond. These aren’t just lectures but opportunities to question, engage and turn insights into actionable knowledge that can shape your career trajectory.

- Silicon Valley Experience: Embrace the energy of the world’s foremost tech hub. Inside the conference, GTC connects attendees with the latest technologies and minds. Beyond the show floor, it’s a gateway to building lasting relationships with leaders and thinkers across industries.

- Seize the Future Now: Don’t just join a story — write one. Be part of this moment in AI. Register now for GTC to write your own story in the epicenter of technological advancement. Be part of this transformative moment in AI.

Advances in private training for production on-device language models

Language models (LMs) trained to predict the next word given input text are the key technology for many applications [1, 2]. In Gboard, LMs are used to improve users’ typing experience by supporting features like next word prediction (NWP), Smart Compose, smart completion and suggestion, slide to type, and proofread. Deploying models on users’ devices rather than enterprise servers has advantages like lower latency and better privacy for model usage. While training on-device models directly from user data effectively improves the utility performance for applications such as NWP and smart text selection, protecting the privacy of user data for model training is important.

|

| Gboard features powered by on-device language models. |

In this blog we discuss how years of research advances now power the private training of Gboard LMs, since the proof-of-concept development of federated learning (FL) in 2017 and formal differential privacy (DP) guarantees in 2022. FL enables mobile phones to collaboratively learn a model while keeping all the training data on device, and DP provides a quantifiable measure of data anonymization. Formally, DP is often characterized by (ε, δ) with smaller values representing stronger guarantees. Machine learning (ML) models are considered to have reasonable DP guarantees for ε=10 and strong DP guarantees for ε=1 when δ is small.

As of today, all NWP neural network LMs in Gboard are trained with FL with formal DP guarantees, and all future launches of Gboard LMs trained on user data require DP. These 30+ Gboard on-device LMs are launched in 7+ languages and 15+ countries, and satisfy (ɛ, δ)-DP guarantees of small δ of 10-10 and ɛ between 0.994 and 13.69. To the best of our knowledge, this is the largest known deployment of user-level DP in production at Google or anywhere, and the first time a strong DP guarantee of ɛ < 1 is announced for models trained directly on user data.

Privacy principles and practices in Gboard

In “Private Federated Learning in Gboard”, we discussed how different privacy principles are currently reflected in production models, including:

- Transparency and user control: We provide disclosure of what data is used, what purpose it is used for, how it is processed in various channels, and how Gboard users can easily configure the data usage in learning models.

- Data minimization: FL immediately aggregates only focused updates that improve a specific model. Secure aggregation (SecAgg) is an encryption method to further guarantee that only aggregated results of the ephemeral updates can be accessed.

- Data anonymization: DP is applied by the server to prevent models from memorizing the unique information in individual user’s training data.

- Auditability and verifiability: We have made public the key algorithmic approaches and privacy accounting in open-sourced code (TFF aggregator, TFP DPQuery, DP accounting, and FL system).

A brief history

In recent years, FL has become the default method for training Gboard on-device LMs from user data. In 2020, a DP mechanism that clips and adds noise to model updates was used to prevent memorization for training the Spanish LM in Spain, which satisfies finite DP guarantees (Tier 3 described in “How to DP-fy ML“ guide). In 2022, with the help of the DP-Follow-The-Regularized-Leader (DP-FTRL) algorithm, the Spanish LM became the first production neural network trained directly on user data announced with a formal DP guarantee of (ε=8.9, δ=10-10)-DP (equivalent to the reported ρ=0.81 zero-Concentrated-Differential-Privacy), and therefore satisfies reasonable privacy guarantees (Tier 2).

Differential privacy by default in federated learning

In “Federated Learning of Gboard Language Models with Differential Privacy”, we announced that all the NWP neural network LMs in Gboard have DP guarantees, and all future launches of Gboard LMs trained on user data require DP guarantees. DP is enabled in FL by applying the following practices:

- Pre-train the model with the multilingual C4 dataset.

- Via simulation experiments on public datasets, find a large DP-noise-to-signal ratio that allows for high utility. Increasing the number of clients contributing to one round of model update improves privacy while keeping the noise ratio fixed for good utility, up to the point the DP target is met, or the maximum allowed by the system and the size of the population.

- Configure the parameter to restrict the frequency each client can contribute (e.g., once every few days) based on computation budget and estimated population in the FL system.

- Run DP-FTRL training with limits on the magnitude of per-device updates chosen either via adaptive clipping, or fixed based on experience.

SecAgg can be additionally applied by adopting the advances in improving computation and communication for scales and sensitivity.

|

| Federated learning with differential privacy and (SecAgg). |

Reporting DP guarantees

The DP guarantees of launched Gboard NWP LMs are visualized in the barplot below. The x-axis shows LMs labeled by language-locale and trained on corresponding populations; the y-axis shows the ε value when δ is fixed to a small value of 10-10 for (ε, δ)-DP (lower is better). The utility of these models are either significantly better than previous non-neural models in production, or comparable with previous LMs without DP, measured based on user-interactions metrics during A/B testing. For example, by applying the best practices, the DP guarantee of the Spanish model in Spain is improved from ε=8.9 to ε=5.37. SecAgg is additionally used for training the Spanish model in Spain and English model in the US. More details of the DP guarantees are reported in the appendix following the guidelines outlined in “How to DP-fy ML”.

Towards stronger DP guarantees

The ε~10 DP guarantees of many launched LMs are already considered reasonable for ML models in practice, while the journey of DP FL in Gboard continues for improving user typing experience while protecting data privacy. We are excited to announce that, for the first time, production LMs of Portuguese in Brazil and Spanish in Latin America are trained and launched with a DP guarantee of ε ≤ 1, which satisfies Tier 1 strong privacy guarantees. Specifically, the (ε=0.994, δ=10-10)-DP guarantee is achieved by running the advanced Matrix Factorization DP-FTRL (MF-DP-FTRL) algorithm, with 12,000+ devices participating in every training round of server model update larger than the common setting of 6500+ devices, and a carefully configured policy to restrict each client to at most participate twice in the total 2000 rounds of training in 14 days in the large Portuguese user population of Brazil. Using a similar setting, the es-US Spanish LM was trained in a large population combining multiple countries in Latin America to achieve (ε=0.994, δ=10-10)-DP. The ε ≤ 1 es-US model significantly improved the utility in many countries, and launched in Colombia, Ecuador, Guatemala, Mexico, and Venezuela. For the smaller population in Spain, the DP guarantee of es-ES LM is improved from ε=5.37 to ε=3.42 by only replacing DP-FTRL with MF-DP-FTRL without increasing the number of devices participating every round. More technical details are disclosed in the colab for privacy accounting.

|

| DP guarantees for Gboard NWP LMs (the purple bar represents the first es-ES launch of ε=8.9; cyan bars represent privacy improvements for models trained with MF-DP-FTRL; tiers are from “How to DP-fy ML“ guide; en-US* and es-ES* are additionally trained with SecAgg). |

Discussion and next steps

Our experience suggests that DP can be achieved in practice through system algorithm co-design on client participation, and that both privacy and utility can be strong when populations are large and a large number of devices’ contributions are aggregated. Privacy-utility-computation trade-offs can be improved by using public data, the new MF-DP-FTRL algorithm, and tightening accounting. With these techniques, a strong DP guarantee of ε ≤ 1 is possible but still challenging. Active research on empirical privacy auditing [1, 2] suggests that DP models are potentially more private than the worst-case DP guarantees imply. While we keep pushing the frontier of algorithms, which dimension of privacy-utility-computation should be prioritized?

We are actively working on all privacy aspects of ML, including extending DP-FTRL to distributed DP and improving auditability and verifiability. Trusted Execution Environment opens the opportunity for substantially increasing the model size with verifiable privacy. The recent breakthrough in large LMs (LLMs) motivates us to rethink the usage of public information in private training and more future interactions between LLMs, on-device LMs, and Gboard production.

Acknowledgments

The authors would like to thank Peter Kairouz, Brendan McMahan, and Daniel Ramage for their early feedback on the blog post itself, Shaofeng Li and Tom Small for helping with the animated figures, and the teams at Google that helped with algorithm design, infrastructure implementation, and production maintenance. The collaborators below directly contribute to the presented results:

Research and algorithm development: Galen Andrew, Stanislav Chiknavaryan, Christopher A. Choquette-Choo, Arun Ganesh, Peter Kairouz, Ryan McKenna, H. Brendan McMahan, Jesse Rosenstock, Timon Van Overveldt, Keith Rush, Shuang Song, Thomas Steinke, Abhradeep Guha Thakurta, Om Thakkar, and Yuanbo Zhang.

Infrastructure, production and leadership support: Mingqing Chen, Stefan Dierauf, Billy Dou, Hubert Eichner, Zachary Garrett, Jeremy Gillula, Jianpeng Hou, Hui Li, Xu Liu, Wenzhi Mao, Brett McLarnon, Mengchen Pei, Daniel Ramage, Swaroop Ramaswamy, Haicheng Sun, Andreas Terzis, Yun Wang, Shanshan Wu, Yu Xiao, and Shumin Zhai.