The adoption of generative AI is rapidly expanding, reaching an ever-growing number of industries and users worldwide. With the increasing complexity and scale of generative AI models, it is crucial to work towards minimizing their environmental impact. This involves a continuous effort focused on energy reduction and efficiency by achieving the maximum benefit from the resources provisioned and minimizing the total resources required.

To add to our guidance for optimizing deep learning workloads for sustainability on AWS, this post provides recommendations that are specific to generative AI workloads. In particular, we provide practical best practices for different customization scenarios, including training models from scratch, fine-tuning with additional data using full or parameter-efficient techniques, Retrieval Augmented Generation (RAG), and prompt engineering. Although this post primarily focuses on large language models (LLMs), we believe most of the recommendations can be extended to other foundation models.

Generative AI problem framing

When framing your generative AI problem, consider the following:

- Align your use of generative AI with your sustainability goals – When scoping your project, be sure to take sustainability into account:

  - What are the trade-offs between a generative AI solution and a less resource-intensive traditional approach?

  - How can your generative AI project support sustainable innovation?

- Use energy that has low carbon-intensity – When regulations and legal aspects allow, train and deploy your model on one of the 19 AWS Regions where the electricity consumed in 2022 was attributable to 100% renewable energy and Regions where the grid has a published carbon intensity that is lower than other locations (or Regions). For more detail, refer to How to select a Region for your workload based on sustainability goals. When selecting a Region, try to minimize data movement across networks: train your models close to your data and deploy your models close to your users.

- Use managed services – Depending on your expertise and specific use case, weigh the options between opting for Amazon Bedrock, a serverless, fully managed service that provides access to a diverse range of foundation models through an API, or deploying your models on a fully managed infrastructure by using Amazon SageMaker. Using a managed service helps you operate more efficiently by shifting the responsibility of maintaining high utilization and sustainability optimization of the deployed hardware to AWS.

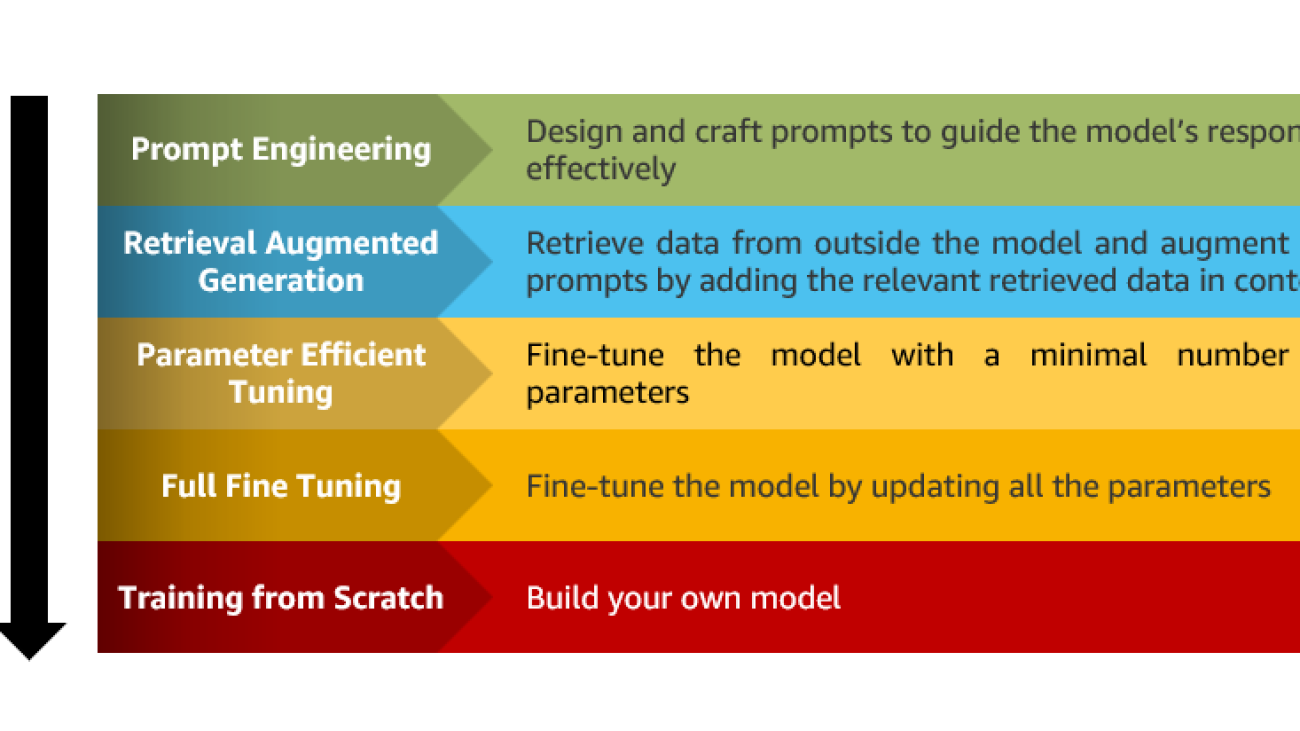

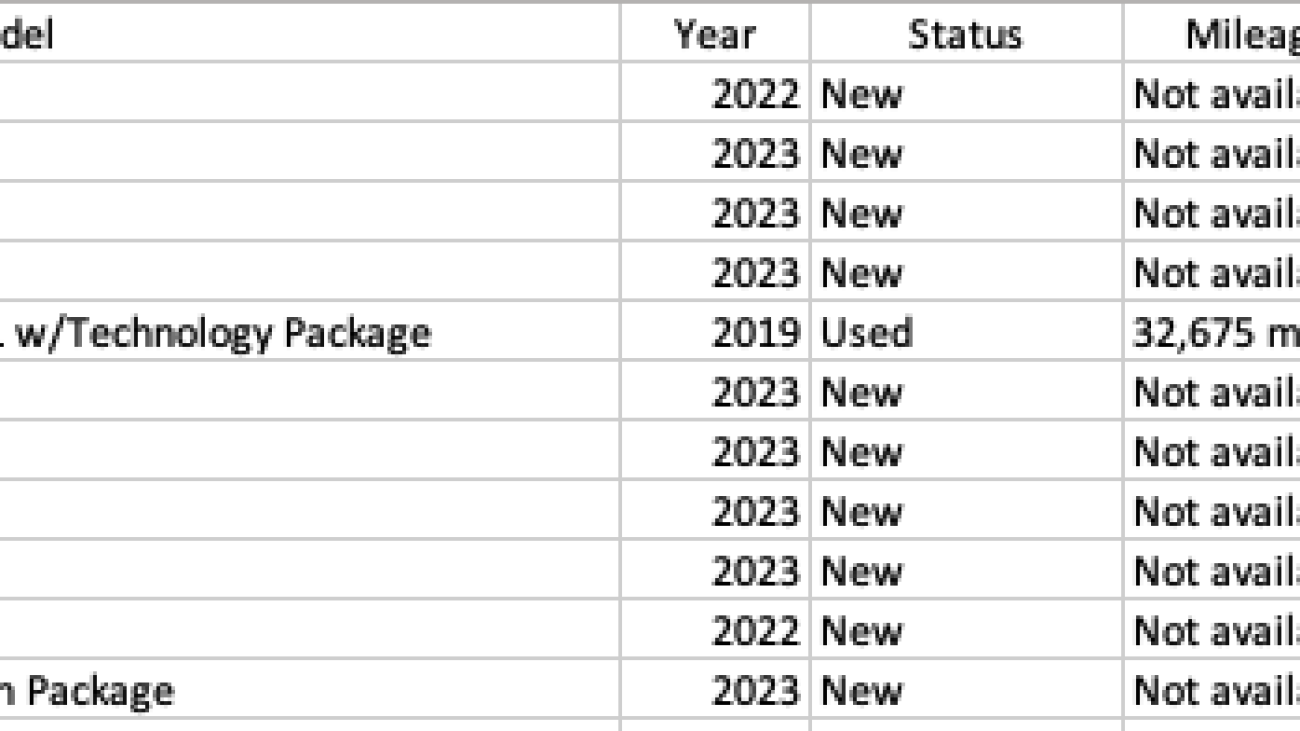

- Define the right customization strategy – There are several strategies to enhance the capacities of your model, ranging from prompt engineering to full fine-tuning. Choose the most suitable strategy based on your specific needs while also considering the differences in resources required for each. For instance, fine-tuning might achieve higher accuracy than prompt engineering but consumes more resources and energy in the training phase. Make trade-offs: by opting for a customization approach that prioritizes acceptable performance over optimal performance, reductions in the resources used by your models can be achieved. The following figure summarizes the environmental impact of LLMs customization strategies.

Model customization

In this section, we share best practices for model customization.

Base model selection

Selecting the appropriate base model is a critical step in customizing generative AI workloads and can help reduce the need for extensive fine-tuning and associated resource usage. Consider the following factors:

- Evaluate capabilities and limitations – Use the playgrounds of Amazon SageMaker JumpStart or Amazon Bedrock to easily test the capability of LLMs and assess their core limitations.

- Reduce the need for customization – Use public resources such as open LLM leaderboards, holistic evaluation benchmarks, or model cards to compare different LLMs and understand the specific domains, tasks, and languages on which they have been pre-trained. Depending on your use case, consider domain-specific or multilingual models to reduce the need for additional customization.

- Start with a small model size and small context window – Large model sizes and context windows (the number of tokens that can fit in a single prompt) can offer more performance and capabilities, but they also require more energy and resources for inference. Consider available versions of models with smaller sizes and context windows before scaling up to larger models. Specialized smaller models have their capacity concentrated on a specific target task. On these tasks, specialized models can behave qualitatively similarly to larger models (for example, GPT3.5, which has 175 billion parameters) while requiring fewer resources for training and inference. Examples of such models include Alpaca (7 billion parameters) or the utilization of T5 variants for multi-step math reasoning (11 billion parameters or more).
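To make the size trade-off above concrete, the following is a rough back-of-the-envelope sketch of the memory needed just to hold model weights. It assumes weights dominate the footprint and ignores activations, optimizer state, and the key-value cache; the helper name `weight_memory_gb` and the byte widths per data type are illustrative assumptions, not part of any AWS API.

```python
# Bytes needed to store one parameter at common precisions (assumed values).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the examples mentioned in the text.
    for name, params in [("7B model", 7e9), ("11B model", 11e9), ("175B model", 175e9)]:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights in fp16")
```

Under these assumptions, a 7-billion-parameter model needs roughly 14 GB for fp16 weights, whereas a 175-billion-parameter model needs roughly 350 GB, which usually means multiple accelerators before any request is served.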

Prompt engineering

Effective prompt engineering can enhance the performance and efficiency of generative AI models. By carefully crafting prompts, you can guide the model’s behavior, reducing unnecessary iterations and resource requirements. Consider the following guidelines:

- Keep prompts concise and avoid unnecessary details – Longer prompts lead to a higher number of tokens. As tokens increase in number, the model consumes more memory and computational resources. Consider incorporating zero-shot or few-shot learning to enable the model to adapt quickly by learning from just a few examples.

- Experiment with different prompts gradually – Refine the prompts based on the desired output until you achieve the desired results. Depending on your task, explore advanced techniques such as self-consistency, Generated Knowledge Prompting, ReAct Prompting, or Automatic Prompt Engineer to further enhance the model’s capabilities.

- Use reproducible prompts – With templates such as LangChain prompt templates, you can save or load your prompt history as files. This enhances prompt experimentation tracking, versioning, and reusability. When you know the prompts that produce the best answers for each model, you can reduce the computational resources used for prompt iterations and redundant experiments across different projects.
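The idea of reproducible prompts can be sketched with the Python standard library alone. This is a minimal stand-in for what a prompt-template library provides, not the LangChain API; the function names, the JSON layout, and the `version` field are hypothetical choices made for illustration.

```python
import json
from pathlib import Path
from string import Template


def save_prompt(path: str, name: str, template: str, version: str) -> None:
    """Persist a prompt template with a name and version so it can be tracked."""
    record = {"name": name, "version": version, "template": template}
    Path(path).write_text(json.dumps(record, indent=2))


def load_prompt(path: str) -> Template:
    """Load a previously saved prompt template from disk."""
    record = json.loads(Path(path).read_text())
    return Template(record["template"])


def render(template: Template, **fields) -> str:
    """Fill in the template; raises KeyError if a placeholder is missing."""
    return template.substitute(**fields)
```

Storing the template in a versioned file means the same prompt can be reloaded across projects instead of being rediscovered through repeated model calls.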

Retrieval Augmented Generation

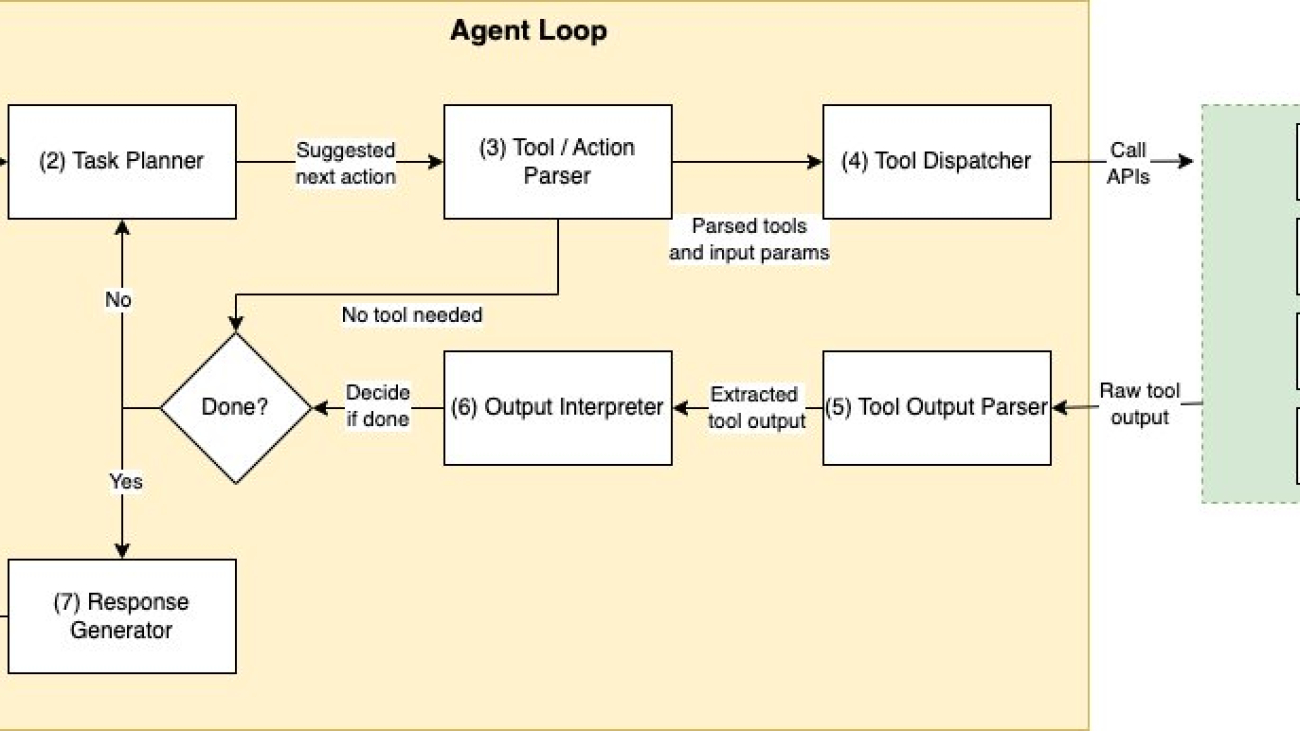

Retrieval Augmented Generation (RAG) is a highly effective approach for augmenting model capabilities by retrieving and integrating pertinent external information from a predefined dataset. Because existing LLMs are used as is, this strategy avoids the energy and resources needed to train the model on new data or build a new model from scratch. Use tools such as Amazon Kendra or Amazon OpenSearch Service and LangChain to successfully build RAG-based solutions with Amazon Bedrock or SageMaker JumpStart.
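The retrieve-then-prompt flow described above can be illustrated with a toy example. This sketch assumes a tiny in-memory document list and a bag-of-words cosine similarity as the retriever; a real solution would use a managed retriever such as Amazon Kendra or Amazon OpenSearch Service, and would send the resulting prompt to an LLM. All function names here are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 1) -> list:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list, k: int = 1) -> str:
    """Assemble a prompt that grounds the unchanged LLM in retrieved context."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

The model itself is never retrained: only the prompt changes, which is why this strategy avoids training-time energy use.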

Parameter-Efficient Fine-Tuning

Parameter-Efficient Fine-Tuning (PEFT) is a fundamental aspect of sustainability in generative AI. It aims to achieve performance comparable to fine-tuning, using fewer trainable parameters. By fine-tuning only a small number of model parameters while freezing most parameters of the pre-trained LLMs, we can reduce computational resources and energy consumption.

Use public libraries such as the Parameter-Efficient Fine-Tuning library to implement common PEFT techniques such as Low-Rank Adaptation (LoRA), Prefix Tuning, Prompt Tuning, or P-Tuning. As an example, studies show that using LoRA can reduce the number of trainable parameters by 10,000 times and the GPU memory requirement by 3 times, depending on the size of your model, with similar or better performance.
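To see where the savings come from, consider a single weight matrix of shape d × k. Low-rank adaptation freezes it and trains two small matrices of shapes d × r and r × k instead, so the trainable count drops from d·k to r·(d + k). The sketch below works through that arithmetic; the dimensions and rank are illustrative assumptions, not values from this post, and the overall reduction for a whole model depends on which layers are adapted.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k matrix is updated."""
    return d * k


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for low-rank factors of shapes d x r and r x k."""
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # hypothetical layer size and rank
    full = full_finetune_params(d, k)
    lora = lora_trainable_params(d, k, r)
    print(f"full: {full:,}  low-rank: {lora:,}  reduction: {full / lora:.0f}x")
```

For this hypothetical layer, the trainable parameters fall from 16,777,216 to 65,536, a 256-fold reduction for that matrix alone.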

Fine-tuning

Fine-tune the entire pre-trained model with the additional data. This approach may achieve higher performance but is more resource-intensive than PEFT. Use this strategy when the available data significantly differs from the pre-training data.

By selecting the right fine-tuning approach, you can maximize the reuse of your model and avoid the resource usage associated with fine-tuning multiple models for each use case. For example, if you anticipate reusing the model within a specific domain or business unit in your organization, you may prefer domain adaptation. On the other hand, instruction-based fine-tuning is better suited for general use across multiple tasks.

Model training from scratch

In some cases, training an LLM from scratch may be necessary. However, this approach can be computationally expensive and energy-intensive. To make training as efficient as possible, consider the following best practices:

- Use efficient silicon – AWS Trainium-based EC2 Trn1 instances are purpose built for high-performance deep learning model training and optimized for energy efficiency. In 2022, we observed that training models on Trainium helps you reduce energy consumption by up to 29% vs. comparable instances.

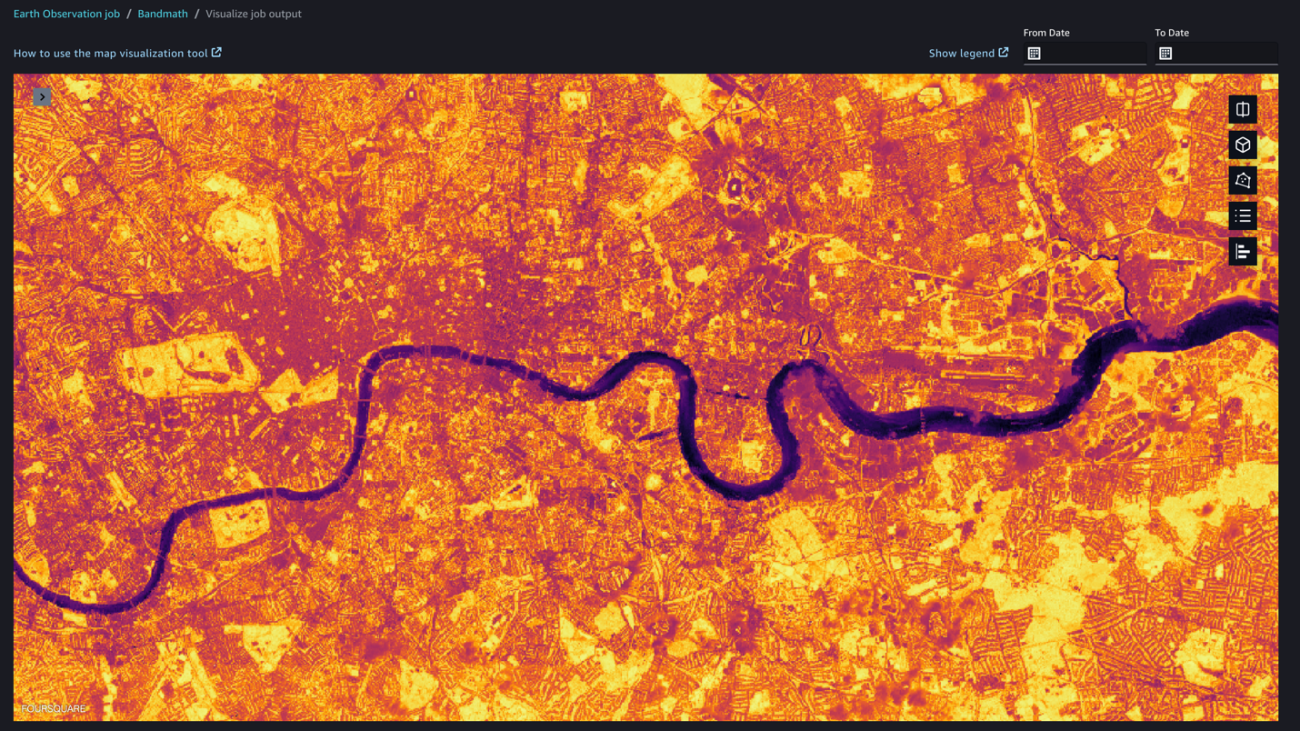

- Use high-quality data and scalable data curation – Use SageMaker Training to enhance the quality of the training dataset and minimize the reliance on massive data. By training on a diverse, comprehensive, and curated dataset, LLMs can produce responses that are more precise and achieve cost and energy optimization by reducing storage and compute requirements.

- Use distributed training – Use SageMaker distributed libraries such as data parallelism, pipeline parallelism, or tensor parallelism to run parallel computing across multiple GPUs or instances. These approaches help maximize GPU utilization. With pipeline parallelism, for example, training batches are split into smaller microbatches that are fed to GPUs in an efficient pipeline to keep all GPU devices simultaneously active, leading to resource optimization.
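The effect of microbatching on device utilization can be estimated with a simple model. The sketch below assumes an idealized pipeline in which every stage takes the same time per microbatch and communication is free, so a schedule with p stages and m microbatches takes m + p - 1 steps and each device is busy for m of them. This is a simplification for intuition, not how the SageMaker libraries schedule work, and the function names are hypothetical.

```python
import math


def split_into_microbatches(batch: list, num_microbatches: int) -> list:
    """Split one training batch into consecutive, roughly equal microbatches."""
    size = math.ceil(len(batch) / num_microbatches)
    return [batch[i:i + size] for i in range(0, len(batch), size)]


def pipeline_utilization(num_stages: int, num_microbatches: int) -> float:
    """Fraction of time each device is busy in the idealized pipeline model."""
    return num_microbatches / (num_microbatches + num_stages - 1)


if __name__ == "__main__":
    for m in (1, 4, 8, 32):
        print(f"4 stages, {m:>2} microbatches: {pipeline_utilization(4, m):.0%} busy")
```

In this model, a 4-stage pipeline with a single batch keeps each device busy only 25% of the time, and more microbatches shrink the idle periods at the start and end of each step.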

Model inference and deployment

Consider the following best practices for model inference and deployment:

- Use deep learning containers for large model inference – You can use deep learning containers for large model inference on SageMaker and open-source frameworks such as DeepSpeed, Hugging Face Accelerate, and FasterTransformer to implement techniques like weight pruning, distillation, compression, quantization, or compilation. These techniques reduce model size and optimize memory usage.

- Set appropriate inference model parameters – During inference, you have the flexibility to adjust certain parameters that influence the model’s output. Understanding and appropriately setting these parameters allows you to obtain the most relevant responses from your models and minimize the number of iterations of prompt-tuning. This ultimately results in reduced memory usage and lower energy consumption. Key parameters to consider are `temperature`, `top_p`, `top_k`, and `max_length`.

- Adopt an efficient inference infrastructure – You can deploy your models on an AWS Inferentia2 accelerator. Inf2 instances offer up to 50% better performance/watt over comparable Amazon Elastic Compute Cloud (Amazon EC2) instances because the underlying AWS Inferentia2 accelerators are purpose built to run deep learning models at scale. As the most energy-efficient option on Amazon EC2 for deploying ultra-large models, Inf2 instances help you meet your sustainability goals when deploying the latest innovations in generative AI.

- Align inference Service Level Agreement (SLA) with sustainability goals – Define SLAs that support your sustainability goals while meeting, but not exceeding, your business requirements. Make trade-offs that significantly reduce your resource usage in exchange for acceptable decreases in service levels:

  - Queue incoming requests and process them asynchronously – If your users can tolerate some latency, deploy your model on asynchronous endpoints to reduce resources that are idle between tasks and minimize the impact of load spikes. This will automatically scale the instance count to zero when there are no requests to process, so you only maintain an inference infrastructure when your endpoint is processing requests.

  - Adjust availability – If your users can tolerate some latency in the rare case of a failover, don’t provision extra capacity. If an outage occurs or an instance fails, SageMaker automatically attempts to distribute your instances across Availability Zones.

  - Adjust response time – When you don’t need real-time inference, use SageMaker batch transform. Unlike a persistent endpoint, clusters are decommissioned when batch transform jobs finish so you don’t continuously maintain an inference infrastructure.
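As a rough illustration of how the sampling parameters mentioned in the list above shape the output, the following sketch applies temperature scaling, then top-k, then top-p (nucleus) filtering to a small list of logits. It is one common ordering written in plain Python for clarity; model servers differ in the exact order and renormalization, so treat the function names and behavior as assumptions. The temperature must be greater than zero, and `max_length` is not shown because it only caps the number of generated tokens.

```python
import math
import random


def softmax(logits: list, temperature: float = 1.0) -> list:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def filter_top_k_top_p(probs: list, top_k: int = 0, top_p: float = 1.0) -> dict:
    """Keep the top_k most likely tokens, then the smallest prefix whose
    cumulative probability reaches top_p, and renormalize what is left."""
    ranked = sorted(enumerate(probs), key=lambda kv: kv[1], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]
    kept, cumulative = [], 0.0
    for index, p in ranked:
        kept.append((index, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {index: p / total for index, p in kept}


def sample(logits: list, temperature: float = 1.0, top_k: int = 0,
           top_p: float = 1.0, rng=random) -> int:
    """Sample one token index from the filtered distribution."""
    dist = filter_top_k_top_p(softmax(logits, temperature), top_k, top_p)
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]
```

Narrowing the candidate set makes outputs more predictable, which can reduce the number of retries needed to get a usable response.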

Resource usage monitoring and optimization

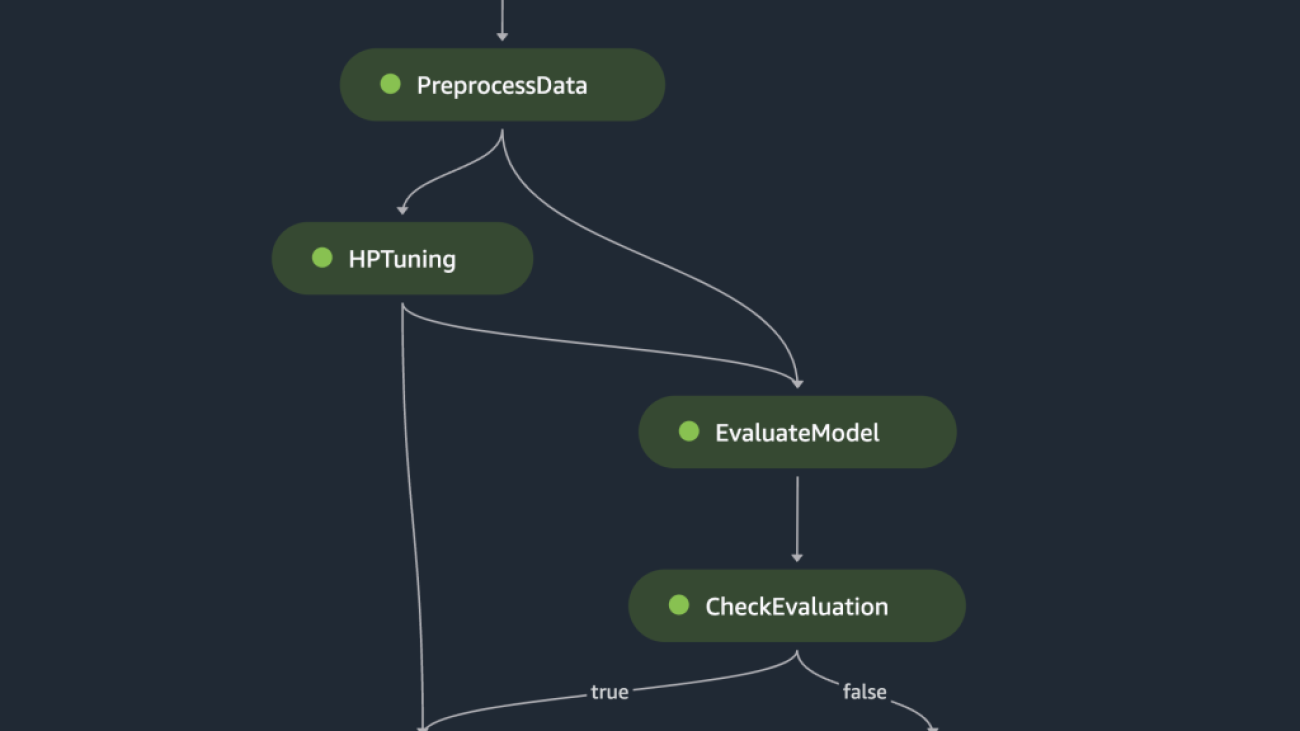

Implement an improvement process to track the impact of your optimizations over time. The goal of your improvements is to use all the resources you provision and complete the same work with the minimum resources possible. To operationalize this process, collect metrics about the utilization of your cloud resources. These metrics, combined with business metrics, can be used as proxy metrics for your carbon emissions.

To consistently monitor your environment, you can use Amazon CloudWatch to monitor system metrics like CPU, GPU, or memory utilization. If you are using NVIDIA GPUs, consider NVIDIA System Management Interface (nvidia-smi) to monitor GPU utilization and performance state. For Trainium and AWS Inferentia accelerators, you can use AWS Neuron Monitor to monitor system metrics. Consider also SageMaker Profiler, which provides a detailed view into the AWS compute resources provisioned during training deep learning models on SageMaker. The following are some key metrics worth monitoring:

- `CPUUtilization`, `GPUUtilization`, `GPUMemoryUtilization`, `MemoryUtilization`, and `DiskUtilization` in CloudWatch

- `nvidia_smi.gpu_utilization`, `nvidia_smi.gpu_memory_utilization`, and `nvidia_smi.gpu_performance_state` in nvidia-smi logs

- `vcpu_usage`, `memory_info`, and `neuroncore_utilization` in Neuron Monitor
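As one way to turn GPU metrics into a utilization check, the sketch below parses the CSV output of an `nvidia-smi` query and flags GPUs that look underused. The helper names and the 30% threshold are arbitrary assumptions for illustration; `read_gpu_stats` requires a host with NVIDIA drivers installed, whereas `parse_gpu_stats` works on any captured output.

```python
import subprocess

# Query per-GPU index, utilization, and memory as headerless CSV without units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text: str) -> list:
    """Parse lines such as '0, 85, 30000, 40960' into per-GPU dictionaries."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, mem_used, mem_total = [field.strip() for field in line.split(",")]
        stats.append({
            "gpu": int(index),
            "utilization_pct": float(util),
            "memory_pct": 100.0 * float(mem_used) / float(mem_total),
        })
    return stats


def underutilized(stats: list, threshold_pct: float = 30.0) -> list:
    """Return the indices of GPUs whose utilization is below the threshold."""
    return [s["gpu"] for s in stats if s["utilization_pct"] < threshold_pct]


def read_gpu_stats() -> list:
    """Run nvidia-smi on the local host and parse its output."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Sampling these values periodically and comparing them against a threshold gives a simple signal for right-sizing instances.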

Conclusion

As generative AI models are becoming bigger, it is essential to consider the environmental impact of our workloads.

In this post, we provided guidance for optimizing the compute, storage, and networking resources required to run your generative AI workloads on AWS while minimizing their environmental impact. Because the field of generative AI is continuously progressing, staying updated with the latest courses, research, and tools can help you find new ways to optimize your workloads for sustainability.

About the Authors

Dr. Wafae Bakkali is a Data Scientist at AWS, based in Paris, France. As a generative AI expert, Wafae is driven by the mission to empower customers in solving their business challenges through the utilization of generative AI techniques, ensuring they do so with maximum efficiency and sustainability.

Benoit de Chateauvieux is a Startup Solutions Architect at AWS, based in Montreal, Canada. As a former CTO, he enjoys helping startups build great products using the cloud. He also supports customers in solving their sustainability challenges through the cloud. Outside of work, you’ll find Benoit in canoe-camping expeditions, paddling across Canadian rivers.

Raja Vaidyanathan is a Solutions Architect at AWS supporting global financial services customers. Raja works with customers to architect solutions to complex problems with long-term positive impact on their business. He’s a strong engineering professional skilled in IT strategy, enterprise data management, and application architecture, with particular interests in analytics and machine learning.

Amandeep Bajwa is a Senior Solutions Architect at AWS supporting financial services enterprises. He helps organizations achieve their business outcomes by identifying the appropriate cloud transformation strategy based on industry trends and organizational priorities. Some of the areas Amandeep consults on are cloud migration, cloud strategy (including hybrid and multicloud), digital transformation, data and analytics, and technology in general.

Prema Iyer is Senior Technical Account Manager for AWS Enterprise Support. She works with external customers on a variety of projects, helping them improve the value of their solutions when using AWS.

Sovik Kumar Nath is an AI/ML solution architect with AWS. He has extensive experience designing end-to-end machine learning and business analytics solutions in finance, operations, marketing, healthcare, supply chain management, and IoT. Sovik has published articles and holds a patent in ML model monitoring. He has double masters degrees from the University of South Florida, University of Fribourg, Switzerland, and a bachelors degree from the Indian Institute of Technology, Kharagpur. Outside of work, Sovik enjoys traveling, taking ferry rides, and watching movies.

Mohan Musti is a Senior Technical Account Manager based out of Dallas. Mohan helps customers architect and optimize applications on AWS. Mohan has a Computer Science and Engineering degree from JNT University, India. In his spare time, he enjoys spending time with his family and camping.

Jia (Vivian) Li is a Senior Solutions Architect at AWS, with a specialization in AI/ML. She currently supports customers in the financial industry. Prior to joining AWS in 2022, she had 7 years of experience helping enterprise customers use AI/ML in the cloud to drive business results. Vivian has a BS from Peking University and a PhD from the University of Southern California. In her spare time, she enjoys all the water activities, and hiking in the beautiful mountains in her home state, Colorado.

Uchenna Egbe is an AIML Solutions Architect who enjoys building reusable AIML solutions. Uchenna has an MS from the University of Alaska Fairbanks. He spends his free time researching herbs, teas, superfoods, and how to incorporate them into his daily diet.

Navneet Tuteja is a Data Specialist at Amazon Web Services. Before joining AWS, Navneet worked as a facilitator for organizations seeking to modernize their data architectures and implement comprehensive AI/ML solutions. She holds an engineering degree from Thapar University, as well as a master’s degree in statistics from Texas A&M University.

Praful Kava is a Sr. Specialist Solutions Architect at AWS. He guides customers to design and engineer Cloud scale Analytics pipelines on AWS. Outside work, he enjoys travelling with his family and exploring new hiking trails.

Adir Sharabi is a Principal Solutions Architect with Amazon Web Services. He works with AWS customers to help them architect secure, resilient, scalable and high performance applications in the cloud. He is also passionate about Data and helping customers to get the most out of it.

Omer Haim is a Senior Startup Solutions Architect at Amazon Web Services. He helps startups with their cloud journey, and is passionate about containers and ML. In his spare time, Omer likes to travel, and occasionally game with his son.

Dmitry Zadorozhny is a data analyst at

Fuad Babaev serves as a Data Science Specialist at Virtuswap (

Dhaval Shah is a Senior Solutions Architect at AWS, specializing in Machine Learning. With a strong focus on digital native businesses, he empowers customers to leverage AWS and drive their business growth. As an ML enthusiast, Dhaval is driven by his passion for creating impactful solutions that bring positive change. In his leisure time, he indulges in his love for travel and cherishes quality moments with his family.

Ninad Joshi is a Senior Solutions Architect at AWS, helping global AWS customers design secure, scalable, and cost effective solutions in cloud to solve their complex real-world business challenges. His work in Machine Learning (ML) covers a wide range of AI/ML use cases, with a primary focus on End-to-End ML, Natural Language Processing, and Computer Vision. Prior to joining AWS, Ninad worked as a software developer for 12+ years. Outside of his professional endeavors, Ninad enjoys playing chess and exploring different gambits.

Raju Rangan is a Senior Solutions Architect at Amazon Web Services (AWS). He works with government sponsored entities, helping them build AI/ML solutions using AWS. When not tinkering with cloud solutions, you’ll catch him hanging out with family or smashing birdies in a lively game of badminton with friends.

Sherry Ding is a senior AI/ML specialist solutions architect at Amazon Web Services (AWS). She has extensive experience in machine learning with a PhD degree in computer science. She mainly works with public sector customers on various AI/ML-related business challenges, helping them accelerate their machine learning journey on the AWS Cloud. When not helping customers, she enjoys outdoor activities.

John Hwang is a Generative AI Architect at AWS with special focus on Large Language Model (LLM) applications, vector databases, and generative AI product strategy. He is passionate about helping companies with AI/ML product development, and the future of LLM agents and co-pilots. Prior to joining AWS, he was a Product Manager at Alexa, where he helped bring conversational AI to mobile devices, as well as a derivatives trader at Morgan Stanley. He holds B.S. in computer science from Stanford University.
