Watch the recording of Marc Brooker’s presentation on Firecracker, an open source virtualization platform.

AdLingo Ads Builder turns an ad into a conversation

My parents were small business owners in the U.S. Virgin Islands where I grew up. They taught me that, though advertising is important, personal relationships are the best way to get new customers and grow your business. When I started working at Google 14 years ago, online advertising was a one-way messaging channel. People couldn’t ask questions or get personalized information from an ad, so we saw an opportunity to turn an ad into a two-way conversation.

My co-founder Dario Rapisardi and I joined Area 120, Google’s in-house incubator for experimental projects, to use conversational AI technology to create such a service. In 2018 we launched AdLingo Ads for brands that leverage the Google Display & Video 360 buying platform. They can turn their ads, shown on the Google Partner Inventory, into an AI-powered conversation with potential customers. If customers are interested in the product promoted in the ad, they can ask questions to get more information.

Today, we’re announcing AdLingo Ads Builder (accessible to our beta partners), a new tool that helps advertisers and agencies build AdLingo Ads ten times faster than before. You can upload the components of your ad, as well as the conversational assistant, with just a few clicks.

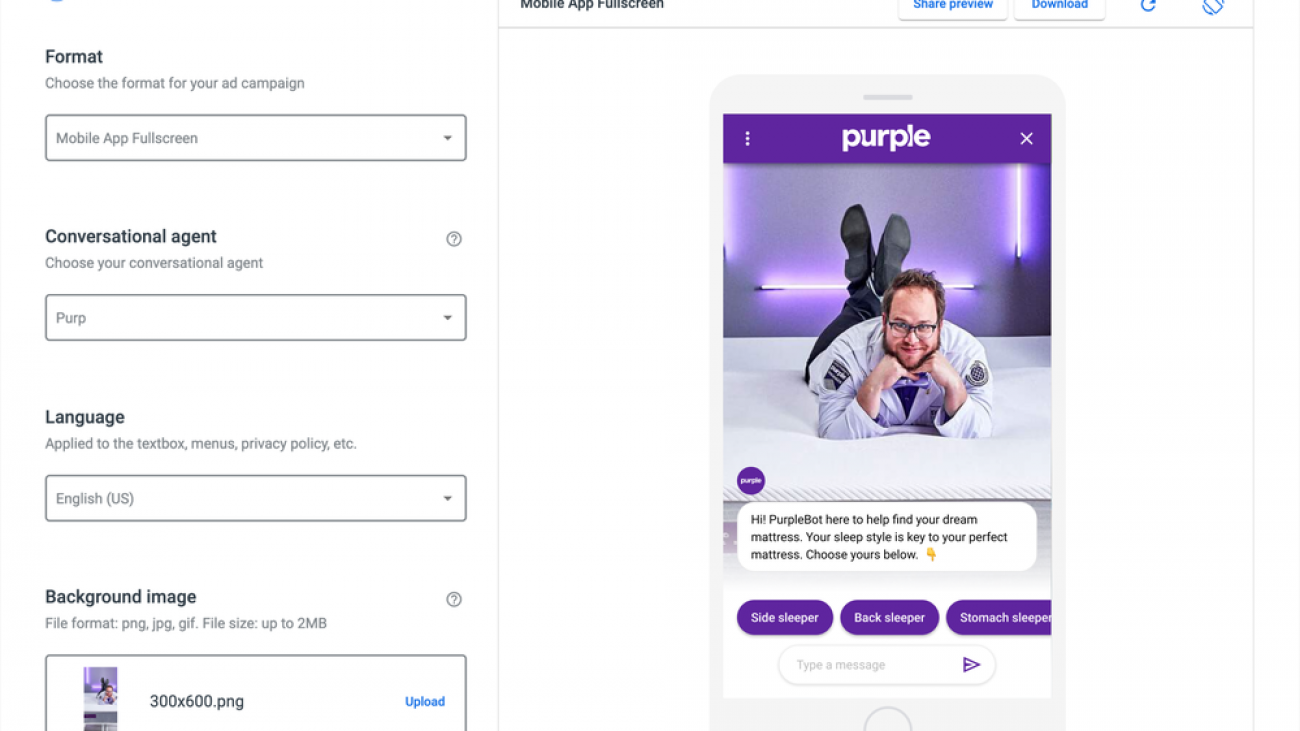

As an early example, Purple used AdLingo to help people find the best mattress based on their personal sleep preferences. People found the ad helpful, as each engaged person spent on average 1 minute and 37 seconds in the conversation.

AdLingo Ads Builder (with Purple ad): After selecting from a few simple drop-downs, the ad is ready to preview.

So far we’ve partnered with more than 30 different brands globally. Our product delivers results for advertisers by advancing potential customers from discovering a product to considering its purchase in one single ad, at a competitive cost compared to other channels. For example, Renault used AdLingo for the new ZOE electric car launch to address French drivers’ preconceptions about electric vehicles. The campaign helped position Renault as a trusted advisor to consumers.

Renault AdLingo Ad experience: Potential customers can ask questions and learn more about ZOE electric cars.

Online advertising has created huge opportunities for companies to reach customers all over the world, but when I think about my parents’ small business, I remember the importance of building a personal relationship with your customers. In creating AdLingo, we’re on a mission to use conversational AI to foster stronger relationships between customers and businesses.

OmniTact: A Multi-Directional High-Resolution Touch Sensor

Human thumb next to our OmniTact sensor, and a US penny for scale.

Touch has been shown to be important for dexterous manipulation in robotics. Recently, the GelSight sensor has attracted significant interest for learning-based robotics due to its low cost and rich signal. For example, GelSight sensors have been used for learning to insert USB cables (Li et al. 2014), rolling a die (Tian et al. 2019) or grasping objects (Calandra et al. 2017).

Learning-based methods work well with GelSight sensors because the sensors output high-resolution tactile images from which a variety of features, such as object geometry, surface texture, and normal and shear forces, can be estimated; these features often prove critical to robotic control. The tactile images can be fed into standard CNN-based computer vision pipelines, allowing the use of a variety of learning-based techniques: in Calandra et al. 2017, a grasp-success classifier is trained on GelSight data collected in a self-supervised manner; in Tian et al. 2019, Visual Foresight, a video-prediction-based control algorithm, is used to make a robot roll a die purely based on tactile images; and in Lambeta et al. 2020, a model-based RL algorithm is applied to in-hand manipulation using GelSight images.

Unfortunately, applying GelSight sensors in practical real-world scenarios is still challenging due to their large size and the fact that they are only sensitive on one side. Here we introduce a new, more compact tactile sensor design based on GelSight that allows for omnidirectional sensing, i.e., making the sensor sensitive on all sides like a human finger, and show how this opens up new possibilities for sensorimotor learning. We demonstrate this by teaching a robot to pick up electrical plugs and insert them purely based on tactile feedback.

Announcing Meta-Dataset: A Dataset of Datasets for Few-Shot Learning

Posted by Eleni Triantafillou, Student Researcher, and Vincent Dumoulin, Research Scientist, Google Research

Recently, deep learning has achieved impressive performance on an array of challenging problems, but its success often relies on large amounts of manually annotated training data. This limitation has sparked interest in learning from fewer examples. A well-studied instance of this problem is few-shot image classification: learning new classes from only a few representative images.

In addition to being scientifically interesting, due to the apparent gap between a person’s ability to learn from limited information and that of a deep learning algorithm, few-shot classification is also a very important problem from a practical perspective. Because large labeled datasets are often unavailable for tasks of interest, solving this problem would enable, for example, quick customization of models to individual users’ needs, democratizing the use of machine learning. Indeed, there has been an explosion of recent work to tackle few-shot classification, but previous benchmarks fail to reliably assess the relative merits of the different proposed models, inhibiting research progress.

In “Meta-Dataset: A Dataset of Datasets for Learning to Learn from Few Examples” (presented at ICLR 2020), we propose a large-scale and diverse benchmark for measuring the competence of different image classification models in a realistic and challenging few-shot setting, offering a framework in which one can investigate several important aspects of few-shot classification. It is composed of 10 publicly available datasets of natural images (including ImageNet, CUB-200-2011, Fungi, etc.), handwritten characters and doodles. The code is public, and includes a notebook that demonstrates how Meta-Dataset can be used in TensorFlow and PyTorch. In this blog post, we outline some results from our initial research investigation on Meta-Dataset and highlight important research directions.

Background: Few-shot Classification

In standard image classification, a model is trained on a set of images from a particular set of classes, and then tested on a held-out set of images of those same classes. Few-shot classification goes a step further and studies generalization to entirely new classes at test time, no images of which were seen in training.

Specifically, in few-shot classification, the training set contains classes that are entirely disjoint from those that will appear at test time. So the aim of training is to learn a flexible model that can be easily repurposed towards classifying new classes using only a few examples. The end goal is to perform well on test-time evaluation, which is carried out on a number of test tasks, each presenting a classification problem between previously unseen classes from a held-out test set of classes. Each test task contains a support set of a few labeled images from which the model can learn about the new classes, and a disjoint query set of examples that the model is then asked to classify.
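To make the episode structure concrete, here is a minimal Python sketch of drawing one N-way, K-shot test task from a pool of held-out classes. The `sample_episode` helper, the dictionary-of-image-IDs input, and the fixed way/shot/query values are illustrative assumptions, not the Meta-Dataset code.

```python
import random
from typing import Dict, Hashable, List, Sequence, Tuple


def sample_episode(
    images_by_class: Dict[Hashable, Sequence[str]],
    n_way: int,
    k_shot: int,
    n_query: int,
    rng: random.Random,
) -> Tuple[List[Tuple[str, Hashable]], List[Tuple[str, Hashable]]]:
    """Sample one N-way, K-shot task with disjoint support and query sets (hypothetical helper)."""
    # only classes with enough images can supply both support and query examples
    eligible = [c for c, imgs in images_by_class.items() if len(imgs) >= k_shot + n_query]
    classes = rng.sample(eligible, n_way)
    support: List[Tuple[str, Hashable]] = []
    query: List[Tuple[str, Hashable]] = []
    for label in classes:
        # draw without replacement, then split: the two sets cannot overlap
        picked = rng.sample(list(images_by_class[label]), k_shot + n_query)
        support += [(img, label) for img in picked[:k_shot]]
        query += [(img, label) for img in picked[k_shot:]]
    return support, query


# usage: a toy pool of 10 held-out classes with 20 image IDs each
held_out = {f"c{i}": [f"c{i}_img{j}" for j in range(20)] for i in range(10)}
support_set, query_set = sample_episode(held_out, n_way=5, k_shot=1, n_query=3, rng=random.Random(0))
```

Because each class's support and query examples come from a single draw without replacement, the two sets are disjoint and every query label also appears in the support set.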

In Meta-Dataset, in addition to the tough generalization challenge to new classes inherent in the few-shot learning setup described above, we also study generalization to entirely new datasets, from which no images of any class were seen in training.

Comparison of Meta-Dataset with Previous Benchmarks

A popular dataset for studying few-shot classification is mini-ImageNet, a downsampled version of a subset of classes from ImageNet. This dataset contains 100 classes in total that are divided into training, validation and test class splits. While classes encountered at test time in benchmarks like mini-ImageNet have not been seen during training, they are still visually very similar to the training classes. Recent works reveal that this allows a model to perform competitively at test time simply by re-using features learned at training time, without necessarily demonstrating the capability to learn from the few examples presented to the model in the support set. In contrast, performing well on Meta-Dataset requires absorbing diverse information at training time and rapidly adapting it to solve significantly different tasks at test time that possibly originate from entirely unseen datasets.

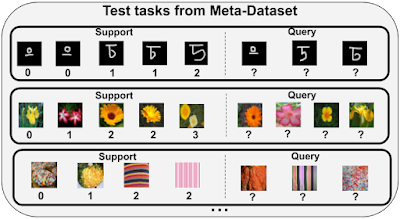

While other recent papers have investigated training on mini-ImageNet and evaluating on different datasets, Meta-Dataset represents the largest-scale organized benchmark for cross-dataset, few-shot image classification to date. It also introduces a sampling algorithm for generating tasks of varying characteristics and difficulty, by varying the number of classes in each task, the number of available examples per class, introducing class imbalances and, for some datasets, varying the degree of similarity between the classes of each task. Some example test tasks from Meta-Dataset are shown below.
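The details of Meta-Dataset's sampler are not given here. As a rough illustration only, the Python sketch below draws a random number of classes and an independent number of support shots per class, which produces class imbalance. The function name and all ranges (5 to 10 ways, up to 10 shots, 2 query images per class) are arbitrary assumptions, and the sketch does not model the class-similarity control used for some datasets.

```python
import random
from typing import Dict, Hashable, List, Sequence, Tuple


def sample_variable_episode(
    images_by_class: Dict[Hashable, Sequence[str]],
    rng: random.Random,
    min_way: int = 5,
    max_way: int = 10,
    max_shots: int = 10,
    n_query: int = 2,
) -> Tuple[List[Tuple[str, Hashable]], List[Tuple[str, Hashable]]]:
    """Sample a task whose way and per-class shots both vary (hypothetical ranges)."""
    # a class needs at least one support image on top of its query images
    eligible = [c for c, imgs in images_by_class.items() if len(imgs) > n_query]
    upper = min(max_way, len(eligible))
    if upper < min_way:
        raise ValueError("not enough eligible classes for the requested way")
    n_way = rng.randint(min_way, upper)  # varying number of classes per task
    support: List[Tuple[str, Hashable]] = []
    query: List[Tuple[str, Hashable]] = []
    for label in rng.sample(eligible, n_way):
        imgs = list(images_by_class[label])
        rng.shuffle(imgs)
        query += [(img, label) for img in imgs[:n_query]]
        # shots drawn independently per class, which introduces class imbalance
        k = rng.randint(1, min(max_shots, len(imgs) - n_query))
        support += [(img, label) for img in imgs[n_query:n_query + k]]
    return support, query


pool = {f"c{i}": [f"c{i}_{j}" for j in range(30)] for i in range(40)}
support_set, query_set = sample_variable_episode(pool, random.Random(1))
```

Keeping the query set balanced while letting the support set vary is one simple design choice; it keeps accuracy comparable across classes even when the amount of training signal per class differs.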

Initial Investigation and Findings on Meta-Dataset

We benchmark two main families of few-shot learning models on Meta-Dataset: pre-training and meta-learning.

Pre-training simply trains a classifier (a neural network feature extractor followed by a linear classifier) on the training set of classes using supervised learning. Then, the examples of a test task can be classified either by fine-tuning the pre-trained feature extractor and training a new task-specific linear classifier, or by means of nearest-neighbor comparisons, where the prediction for each query example is the label of its nearest support example. Despite its “baseline” status in the few-shot classification literature, this approach has recently enjoyed a surge of attention and competitive results.
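The nearest-neighbor variant can be sketched in a few lines of Python. This assumes the support and query images have already been mapped to feature vectors by the pre-trained extractor (not shown), and it uses Euclidean distance as an illustrative choice of metric; the function name is hypothetical.

```python
import math
from typing import Hashable, List, Sequence, Tuple


def nearest_neighbor_predict(
    support: List[Tuple[Sequence[float], Hashable]],
    queries: List[Sequence[float]],
) -> List[Hashable]:
    """Label each query feature vector with the label of its closest support example."""
    predictions = []
    for q in queries:
        # math.dist computes the Euclidean distance between two points (Python 3.8+)
        _, label = min(support, key=lambda pair: math.dist(pair[0], q))
        predictions.append(label)
    return predictions


support_feats = [([0.0, 0.0], "cat"), ([5.0, 5.0], "dog")]
preds = nearest_neighbor_predict(support_feats, [[0.5, 1.0], [4.0, 6.0]])
```

No parameters are learned at test time here, which is what makes this a cheap baseline: all the adaptation comes from the quality of the pre-trained features.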

On the other hand, meta-learners construct a number of “training tasks” and their training objective explicitly reflects the goal of performing well on each task’s query set after having adapted to that task using the associated support set, capturing the ability that is required at test time to solve each test task. Each training task is created by randomly sampling a subset of training classes and some examples of those classes to play the role of support and query sets.
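The quantity a meta-learner minimizes for one task can be sketched as "adapt on the support set, then measure loss on the query set." In the Python sketch below, adaptation is done ProtoNet-style by averaging each class's support features into a prototype, and queries are scored by negative squared Euclidean distance. This is one illustrative adaptation rule, the features are assumed to be given, and the gradient step that would update a feature extractor during meta-training is omitted.

```python
import math
from typing import Dict, Hashable, List, Sequence, Tuple

Labeled = Tuple[Sequence[float], Hashable]


def class_prototypes(support: List[Labeled]) -> Dict[Hashable, List[float]]:
    """Adapt to a task by averaging the support features of each class."""
    sums: Dict[Hashable, List[float]] = {}
    counts: Dict[Hashable, int] = {}
    for x, y in support:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def episode_query_loss(support: List[Labeled], query: List[Labeled]) -> float:
    """Mean cross-entropy on the query set after adapting with the support set."""
    protos = class_prototypes(support)
    labels = list(protos)
    total = 0.0
    for x, y in query:
        # logits are negative squared Euclidean distances to each prototype
        logits = [-sum((a - b) ** 2 for a, b in zip(x, protos[c])) for c in labels]
        m = max(logits)
        log_norm = m + math.log(sum(math.exp(l - m) for l in logits))  # stable log-sum-exp
        total += log_norm - logits[labels.index(y)]
    return total / len(query)


task_support = [([0.0, 0.0], "a"), ([0.0, 2.0], "a"), ([10.0, 10.0], "b")]
loss = episode_query_loss(task_support, [([0.0, 1.0], "a")])
```

During meta-training, this loss would be averaged over many sampled training tasks and minimized with respect to the parameters that produce the features.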

Below, we summarize some of our findings from evaluating pre-training and meta-learning models on Meta-Dataset:

1) Existing approaches have trouble leveraging heterogeneous training data sources.

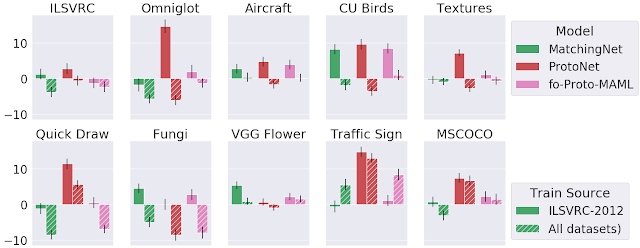

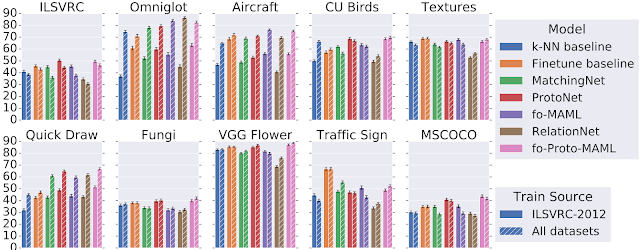

We compared training models (from both pre-training and meta-learning approaches) using only the training classes of ImageNet to using all training classes from the datasets in Meta-Dataset, in order to measure the generalization gain from using a more expansive collection of training data. We singled out ImageNet for this purpose, because the features learned on ImageNet readily transfer to other datasets. The evaluation tasks applied to all models are derived from a held-out set of classes from the datasets used in training, with at least two additional datasets that are entirely held-out for evaluation (i.e., no classes from these datasets were used for training).

One might expect that training on more data, albeit heterogeneous, would generalize better on the test set. However, this is not always the case. Specifically, the following figure displays the accuracy of different models on test tasks of Meta-Dataset’s ten datasets. We observe that the performance on test tasks coming from handwritten characters / doodles (Omniglot and Quickdraw) is significantly improved when having trained on all datasets, instead of ImageNet only. This is reasonable since these datasets are visually significantly different from ImageNet. However, for test tasks from natural image datasets, similar accuracy can be obtained by training on ImageNet only, revealing that current models cannot effectively leverage heterogeneous data to improve performance on those datasets.

Comparison of test performance on each dataset after having trained on ImageNet (ILSVRC-2012) only or on all datasets.

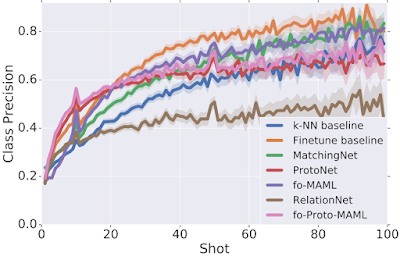

2) Some models are more capable than others of exploiting additional data at test time.

We analyzed the performance of different models as a function of the number of available examples in each test task, uncovering an interesting trade-off: different models perform best with a particular number of training (support) samples. We observe that some models outshine the rest when there are very few examples (“shots”) available (e.g., ProtoNet and our proposed fo-Proto-MAML) but don’t exhibit a large improvement when given more, while other models are not well-suited for tasks with very few examples but improve at a quicker rate as more are given (e.g., Finetune baseline). However, since in practice we might not know in advance the number of examples that will be available at test time, one would like to identify a model that can best leverage any number of examples, without disproportionately suffering in a particular regime.

3) The adaptation algorithm of a meta-learner is more heavily responsible for its performance than the fact that it is trained end-to-end (i.e., meta-trained).

We developed a new set of baselines to measure the benefit of meta-learning. Specifically, for several meta-learners, we consider a non-meta-learned counterpart that pre-trains a feature extractor and then, at evaluation time only, applies the same adaptation algorithm as the respective meta-learner on those features. When training on ImageNet only, meta-training often helps a bit or at least doesn’t hurt too much, but when training on all datasets, the results are mixed. This suggests that further work is needed to understand and improve upon meta-learning, especially across datasets.

Conclusion

Meta-Dataset introduces new challenges for few-shot classification. Our initial exploration has revealed limitations of existing methods, calling for additional research. Recent works have already reported exciting results on Meta-Dataset, for example using cleverly-designed task conditioning, more sophisticated hyperparameter tuning, a ‘meta-baseline’ that combines the benefits of pre-training and meta-learning, and finally using feature selection to specialize a universal representation for each task. We hope that Meta-Dataset will help drive research in this important sub-field of machine learning.

Acknowledgements

Meta-Dataset was developed by Eleni Triantafillou, Tyler Zhu, Vincent Dumoulin, Pascal Lamblin, Utku Evci, Kelvin Xu, Ross Goroshin, Carles Gelada, Kevin Swersky, Pierre-Antoine Manzagol and Hugo Larochelle. We would like to thank Pablo Castro for his valuable guidance on this blog post, Chelsea Finn for fruitful discussions and ensuring the correctness of fo-MAML’s implementation, as well as Zack Nado and Dan Moldovan for the initial dataset code that was adapted, Cristina Vasconcelos for spotting an issue in the ranking of models and John Bronskill for suggesting that we experiment with a larger inner-loop learning rate for MAML, which indeed significantly improved our fo-MAML results.

Making AI a planetary-scale system

Watch the ICLR 2020 keynote presentation by Michael I. Jordan, Amazon scholar and UC Berkeley professor.

Speeding Up Neural Network Training with Data Echoing

Posted by Dami Choi, Student Researcher and George Dahl, Senior Research Scientist, Google Research

Over the past decade, dramatic increases in neural network training speed have made it possible to apply deep learning techniques to many important problems. In the twilight of Moore’s law, as improvements in general purpose processors plateau, the machine learning community has increasingly turned to specialized hardware to produce additional speedups. For example, GPUs and TPUs optimize for highly parallelizable matrix operations, which are core components of neural network training algorithms. These accelerators, at a high level, can speed up training in two ways. First, they can process more training examples in parallel, and second, they can process each training example faster. We know there are limits to the speedups from processing more training examples in parallel, but will building ever faster accelerators continue to speed up training?

Unfortunately, not all operations in the training pipeline run on accelerators, so one cannot simply rely on faster accelerators to continue driving training speedups. For example, earlier stages in the training pipeline like disk I/O and data preprocessing involve operations that do not benefit from GPUs and TPUs. As accelerator improvements outpace improvements in CPUs and disks, these earlier stages will increasingly become a bottleneck, wasting accelerator capacity and limiting training speed.

An example training pipeline representative of many large-scale computer vision programs. The stages that come before applying the mini-batch stochastic gradient descent (SGD) update generally do not benefit from specialized hardware accelerators.

Consider a scenario where the code upstream of the accelerator takes twice as long as the code that runs on the accelerator, a scenario that is already realistic for some workloads today. Even if the code is pipelined to execute the upstream and downstream stages in parallel, the upstream stage will dominate training time and the accelerator will be idle 50% of the time. In this case, building a faster accelerator will not improve training speed at all. It may be possible to speed up the input pipeline by dedicating engineering effort and additional compute resources, but such efforts are time consuming and distract from the main goal of improving predictive performance. For very small datasets, one can precompute the augmented dataset offline and load the entire preprocessed dataset in memory, but this doesn’t work for most ML training scenarios.
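The 50% figure follows from a simple model in which the two stages overlap perfectly, so each training step takes as long as the slower stage. A minimal Python sketch of that arithmetic, with per-batch stage times in arbitrary units (the function names are our own):

```python
def steps_per_second(upstream_s: float, downstream_s: float) -> float:
    """Throughput of a two-stage pipeline: the slower stage sets the pace."""
    return 1.0 / max(upstream_s, downstream_s)


def accelerator_idle_fraction(upstream_s: float, downstream_s: float) -> float:
    """Fraction of each step period in which the accelerator waits for input."""
    if upstream_s <= 0 or downstream_s <= 0:
        raise ValueError("stage times must be positive")
    return 1.0 - downstream_s / max(upstream_s, downstream_s)


print(accelerator_idle_fraction(2.0, 1.0))  # 0.5: upstream takes twice as long
print(accelerator_idle_fraction(2.0, 0.5))  # 0.75: a 2x faster accelerator just idles more
print(steps_per_second(2.0, 1.0) == steps_per_second(2.0, 0.5))  # True: no training speedup
```

Halving the accelerator's step time leaves throughput unchanged and only raises the idle fraction, which is the sense in which a faster accelerator "will not improve training speed at all" here.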

In “Faster Neural Network Training with Data Echoing”, we propose a simple technique that reuses (or “echoes”) intermediate outputs from earlier pipeline stages to reclaim idle accelerator capacity. Rather than waiting for more data to become available, we simply utilize data that is already available to keep the accelerators busy.

Left: Without data echoing, downstream computational capacity is idle 50% of the time. Right: Data echoing with echoing factor 2 reclaims downstream computational capacity.

Repeating Data to Train Faster

Imagine a situation where reading and preprocessing a batch of training data takes twice as long as performing a single optimization step on that batch. In this case, after the first optimization step on the preprocessed batch, we can reuse the batch and perform a second step before the next batch is ready. In the best case scenario, where repeated data is as useful as fresh data, we would see a twofold speedup in training. In reality, data echoing provides a slightly smaller speedup because repeated data is not as useful as fresh data – but it can still provide a significant speedup compared to leaving the accelerator idle.
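The best-case arithmetic can be written out in our own notation: let $t_{\text{up}}$ be the time to read and preprocess one batch, $t_{\text{down}}$ the time for one optimization step, and $e$ the echoing factor (steps taken per fresh batch). Assuming perfect pipelining and that repeated steps are as useful as fresh ones, this is an upper bound on the real speedup:

```latex
\begin{aligned}
R &= t_{\text{up}} / t_{\text{down}} \ge 1 \\
\text{steps/s (no echoing)} &= 1 / t_{\text{up}} \\
\text{steps/s (echoing factor } e) &= \min\!\left(e / t_{\text{up}},\; 1 / t_{\text{down}}\right) \\
\text{speedup} &= \min(e,\, R) \qquad (R = 2,\ e = 2 \;\Rightarrow\; 2\times)
\end{aligned}
```

Echoing beyond $e = R$ adds no further steps per second, because the accelerator itself becomes the bottleneck.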

There are typically several ways to implement data echoing in a given neural network training pipeline. The technique we propose involves duplicating data into a shuffle buffer somewhere in the training pipeline, but we are free to insert this buffer anywhere after whichever stage produces a bottleneck in the given pipeline. When we insert the buffer before batching, we call our technique example echoing, whereas, when we insert it after batching, we call our technique batch echoing. Example echoing shuffles data at the example level, while batch echoing shuffles the sequence of duplicate batches. We can also insert the buffer before data augmentation, such that each copy of repeated data is slightly different (and therefore closer to a fresh example). Of the different versions of data echoing that place the shuffle buffer between different stages, the version that provides the greatest speedup depends on the specific training pipeline.
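The following framework-agnostic Python sketch illustrates the buffer placement idea; it is not the paper's implementation. The hypothetical `echo` helper writes `echo_factor` copies of each upstream item into a shuffle buffer and reads them back in random order, and where it sits relative to the hypothetical `batch` helper decides between example echoing and batch echoing. The buffer sizes and toy data are assumptions.

```python
import random
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def echo(stream: Iterable[T], echo_factor: int, buffer_size: int, seed: int = 0) -> Iterator[T]:
    """Emit every upstream item `echo_factor` times, mixed through a shuffle buffer."""
    if echo_factor < 1 or buffer_size < 1:
        raise ValueError("echo_factor and buffer_size must be >= 1")
    rng = random.Random(seed)
    buffer: List[T] = []
    for item in stream:
        buffer.extend([item] * echo_factor)  # reuse the expensive upstream output
        while len(buffer) >= buffer_size:
            i = rng.randrange(len(buffer))
            buffer[i], buffer[-1] = buffer[-1], buffer[i]  # O(1) random removal
            yield buffer.pop()
    rng.shuffle(buffer)  # drain what is left once the upstream stage is exhausted
    yield from buffer


def batch(stream: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream into lists of `batch_size` (the last one may be shorter)."""
    current: List[T] = []
    for item in stream:
        current.append(item)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current


examples = range(10)  # stand-in for decoded, preprocessed examples

# Example echoing: buffer before batching, so duplicates mix at the example level.
example_echoed = list(batch(echo(examples, echo_factor=2, buffer_size=8), batch_size=4))

# Batch echoing: buffer after batching, so whole batches are repeated and reordered.
batch_echoed = list(echo(batch(examples, batch_size=5), echo_factor=2, buffer_size=3))
```

To echo before data augmentation, one would apply a random augmentation function (hypothetical here) to the output of `echo`, e.g. `(augment(x) for x in echo(decoded, 2, 1000))`, so each copy is perturbed differently. A larger buffer spreads duplicates further apart at the cost of memory.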

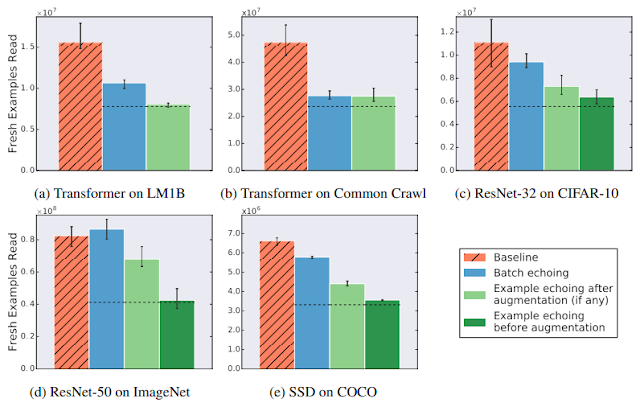

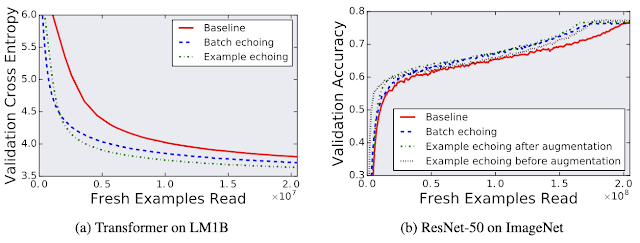

Data Echoing Across Workloads

So how useful is reusing data? We tried data echoing on five neural network training pipelines spanning three different tasks (image classification, language modeling, and object detection) and measured the number of fresh examples needed to reach a particular performance target. We chose targets to match the best result reliably achieved by the baseline during hyperparameter tuning. We found that data echoing allowed us to reach the target performance with fewer fresh examples, demonstrating that reusing data is useful for reducing disk I/O across a variety of tasks. In some cases, repeated data is nearly as useful as fresh data: in the figure below, example echoing before augmentation reduces the number of fresh examples required by almost the echoing factor.

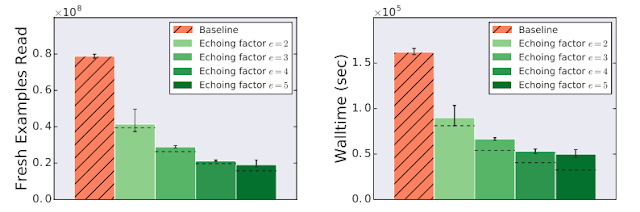

Reduction in Training Time

Data echoing can speed up training whenever computation upstream from accelerators dominates training time. We measured the training speedup achieved in a training pipeline bottlenecked by input latency due to streaming training data from cloud storage, which is realistic for many of today’s large-scale production workloads or anyone streaming training data over a network from a remote storage system. We trained a ResNet-50 model on the ImageNet dataset and found that data echoing provides a significant training speedup: in this case, training was more than 3 times faster with data echoing.

Data Echoing Preserves Predictive Performance

Although one might be concerned that reusing data would harm the model’s final performance, we found that data echoing did not degrade the quality of the final model for any of the workloads we tested.

Comparing the best-performing individual trials (by out-of-sample performance during training) with and without data echoing shows that reusing data does not harm final model quality. Here, validation cross entropy is equivalent to log perplexity.

As improvements in specialized accelerators like GPUs and TPUs continue to outpace general purpose processors, we expect data echoing and similar strategies to become increasingly important parts of the neural network training toolkit.

Acknowledgements

The Data Echoing project was conducted by Dami Choi, Alexandre Passos, Christopher J. Shallue, and George E. Dahl while Dami Choi was a Google AI Resident. We would also like to thank Roy Frostig, Luke Metz, Yiding Jiang, and Ting Chen for helpful discussions.

Announcing a New Framework for Designing Optimal Experiments with Pyro

Experimentation is one of humanity’s principal tools for learning about our complex world. Advances in knowledge from medicine to psychology require a rigorous, iterative process in which we formulate hypotheses and test them by collecting and analyzing new evidence. At …

The post Announcing a New Framework for Designing Optimal Experiments with Pyro appeared first on Uber Engineering Blog.

Meet the Googlers working to ensure tech is for everyone

During their early studies and careers, Tiffany Deng, Tulsee Doshi and Timnit Gebru found themselves asking the same questions: Why is it that some products and services work better for some than others, and why isn’t everyone represented around the table when a decision is being made? Their collective passion to create a digital world that works for everyone is what brought the three women to Google, where they lead efforts to make machine learning systems fair and inclusive.

I sat down with Tiffany, Tulsee and Timnit to discuss why working on machine learning fairness is so important, and how they came to work in this field.

How would you explain your job to someone who isn’t in tech?

Tiffany: I’d say my job is to make sure we’re not reinforcing any of the entrenched and embedded biases humans might have into products people use, and that every time you pick up a product—a Google product—you as an individual can have a good experience when using it.

Timnit: I help machines understand imagery and text. Just like a human, a machine learns patterns from the input it has been given, and if that input, or data in this case, carries societal bias, this could lead to a biased outcome or prediction made by the machine. And my work is to figure out different ways of mitigating this bias.

Tulsee: My work includes making sure everyone has positive experiences with our products, and that people don’t feel excluded or stereotyped, especially based on their identities. The products should work for you as an individual, and provide the best experience possible.

What made you want to work in this field?

Tulsee: When I started college, I was unsure of what I wanted to study. I came in with an interest in math, and quickly found myself taking a variety of classes in computer science, among other topics. But no matter which interesting courses I took, I often felt a disconnect between what I was studying and the people the work would help. I kept coming back to wanting to focus on people, and after taking classes like child psychology and philosophy of AI, I decided I wanted to take on a role where I could combine my skill sets with a people-centered approach. I think everyone has an experience of services and technology not working for them, and solving for that is a passion behind much of what I do.

Tiffany: After graduating from West Point I joined the Army as an intelligence officer before becoming a consultant and working for the State Department and the Department of Defense. I then joined Facebook as a privacy manager for a period of time, and that’s when I started working on more ML fairness-related matters. When people ask me how I ended up where I am, I’d say that there’s never a straight path to finding your passion, and all the experiences that I’ve had outside of tech are ones I bring into the work I’m doing today.

An important “aha moment” for me was about a year and a half ago, when my son had a rash all over his body and we went to the doctor to get help. They told us they weren’t able to diagnose him because his skin wasn’t red, and of course, his skin won’t turn red as he has deep brown skin. Someone telling me they can’t diagnose my son because of his skin—that’s troubling as a parent. I wanted to understand the root cause of the issue—why is this not working for me and my family, the way it does for others? Fast forwarding, when thinking about how AI will someday be ubiquitous and an important component in assisting human decision-making, I wanted to get involved and help ensure that we’re building technology that works equally as well for everyone.

Timnit: I grew up with a father and two sisters working in electrical engineering, so I followed their path and decided to also pursue studies in the field. After spending some time at Apple working as a circuit designer and starting my own company, I went back to studying image processing and completed a Ph.D. in computer vision. Towards the end of my Ph.D., I read a ProPublica article discussing racial bias in predicting crime recidivism rates. At the same time, I started thinking more about how there were very few, if any, Black people in grad school and that whenever I went to conferences, Black people weren’t represented in the decisions driving this field of work. That’s how I came to found a nonprofit organization called Black in AI, along with Rediet Abebe, to increase the visibility of Black people working in the field. After graduating with my Ph.D. I did a postdoc at Microsoft Research and soon after that, I took a role at Google as the co-lead of the ethical AI research team, which was founded by Meg Mitchell.

What are some of the main challenges in this work, and why is it so important?

Tulsee: The challenge question is interesting, and a hard one. First of all, there is the theoretical and sociological question on the notion of fairness: how does one define what is fair? Addressing fairness concerns requires multiple perspectives, and product development approaches ranging from technical to design. Because of this, even for use cases where we have a lot of experience, there are still many challenges for product teams to understand the different approaches for measuring and tackling fairness concerns. This is one of the reasons why I believe tooling and resources are so critical, and why we’re investing in them for both internal and external purposes.

Another important aspect is company culture and how companies define their values and motivate their employees. We are starting to see a growing, industry-wide shift in terms of what success looks like. The more organizations and product creators get rewarded for thinking about a broader set of people when developing products, the more companies will start fostering a diverse workforce, consulting external experts and thinking about whose voices are being represented at the table. We need to remember we’re talking about real people’s experiences, and while working on these issues can sometimes be emotionally difficult, it’s so important to get right.

Timnit: A general challenge is that people who are the most negatively affected are often the ones whose voices are not heard. Representation is an important issue, and while there are a lot of opportunities with ML technology in society, it’s important to have a diverse set of people and perspectives involved when working on the development so you don’t end up widening a gap between different groups.

This is not an issue that is specific to ML. As an example, let’s think of DNA sequencing. The African continent has the most diverse DNA in the world, but I read that it makes up less than 1 percent of the DNA studied in DNA sequencing, so there are examples of researchers who have come to the wrong conclusions based on data that was not representative. Now imagine someone is looking to develop the next generation of drugs, and the result could be that they don’t work for certain groups because their DNA hasn’t been properly represented.

Do you think ML has the potential to help complement human decision making, and drive the world to become more fair?

Timnit: It’s important to recognize the complexity of the human mind, and that humans should not be replaced when it comes to decision making. I don’t think ML can make the world more fair: Only humans can do that. And humans choose how to use this technology. In terms of opportunities, there are many ways in which we have already used ML systems to uncover societal bias, and this is something I work on as well. For example, studies by Jennifer Eberhardt and her collaborators at Stanford University including Vinodkumar Prabhakaran, who has since joined our team, used natural language processing to analyze body camera recordings of police stops in Oakland. They found a pattern of police speaking less respectfully to Black people than white people. A lot of times when you show these issues backed up by data and scientific analysis, it can help make a case. At the same time, the history of scientific racism also shows that data can be used to propagate the most harmful societal biases of the day. Blindly trusting data driven studies or decisions can be dangerous. It’s important to understand the context under which these studies are conducted and to work with affected communities and other domain experts to formulate the questions that need to be addressed.

Tiffany: I think ML will be incredibly important to help with things like climate change, sustainability and helping save endangered animals. Timnit’s work on using AI to help identify diseased cassava plants is an incredible use of AI, especially in the developing world. The range of problems AI can aid humans with is endless; we just have to ensure we continue to build technological solutions with ethics and inclusion at the forefront of our conversations.

Speech synthesizer learns expressive style from one-second voice sample

Users find speech with transferred expression 9% more natural than standard synthesized speech.

Simple Sensor Intentions for Exploration

In this paper we focus on a setting in which goal tasks are defined via simple sparse rewards, and exploration is facilitated via agent-internal auxiliary tasks. We introduce the idea of simple sensor intentions (SSIs) as a generic way to define auxiliary tasks. SSIs reduce the amount of prior knowledge that is required to define suitable rewards. They can further be computed directly from raw sensor streams and thus do not require expensive and possibly brittle state estimation on real systems.