Posted by Karol Hausman, Senior Research Scientist and Yevgen Chebotar, Research Scientist, Robotics at Google

For general-purpose robots to be most useful, they would need to be able to perform a range of tasks, such as cleaning, maintenance and delivery. But training even a single task (e.g., grasping) using offline reinforcement learning (RL), a trial-and-error learning method in which the agent trains on previously collected data, can take thousands of robot-hours, in addition to the significant engineering needed to enable autonomous operation of a large-scale robotic system. Thus, the computational costs of building general-purpose everyday robots using current robot learning methods become prohibitive as the number of tasks grows.

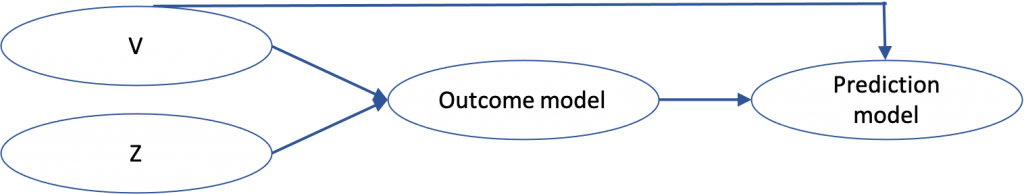

| Multi-task data collection across multiple robots where different robots collect data for different tasks. |

In other large-scale machine learning domains, such as natural language processing and computer vision, a number of strategies have been applied to amortize the effort of learning over multiple skills. For example, pre-training on large natural language datasets can enable few- or zero-shot learning of multiple tasks, such as question answering and sentiment analysis. However, because robots collect their own data, robotic skill learning presents a unique set of opportunities and challenges. Automating this process is a large engineering endeavour, and effectively reusing past robotic data collected by different robots remains an open problem.

Today we present two new advances for robotic RL at scale: MT-Opt, a new multi-task RL system for automated data collection and multi-task RL training, and Actionable Models, which leverages the acquired data for goal-conditioned RL. MT-Opt introduces a scalable data-collection mechanism that is used to collect over 800,000 episodes of various tasks on real robots and demonstrates a successful application of multi-task RL that yields ~3x average improvement over baseline. Additionally, it enables robots to master new tasks quickly through use of its extensive multi-task dataset (new task fine-tuning in <1 day of data collection). Actionable Models enables learning in the absence of specific tasks and rewards by training an implicit model of the world that is also an actionable robotic policy. This drastically increases the number of tasks the robot can perform (via visual goal specification) and enables more efficient learning of downstream tasks.

Large-Scale Multi-Task Data Collection System

The cornerstone for both MT-Opt and Actionable Models is the volume and quality of training data. To collect diverse, multi-task data at scale, users need a way to specify tasks, decide for which tasks to collect the data, and finally, manage and balance the resulting dataset. To that end, we first create a scalable and intuitive multi-task success detector using data from all of the chosen tasks. The multi-task success detector is trained using supervised learning to detect the outcome of a given task, and it allows users to quickly define new tasks and their rewards. While this success detector is being used to collect data, it is periodically updated to accommodate distribution shifts caused by various real-world factors, such as varying lighting conditions, changing background surroundings, and novel states that the robots discover.

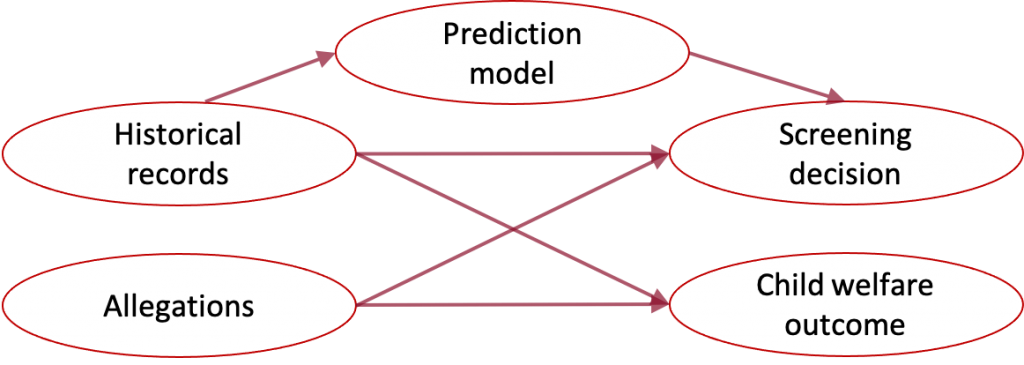

In addition, we simultaneously collect data for multiple distinct tasks across multiple robots by using solutions to easier tasks to effectively bootstrap learning of more complex tasks. This allows training of a policy for the harder tasks and improves the data collected for them. As such, the amount of per-task data and the number of successful episodes for each task grow over time. To further improve performance, we focus data collection on underperforming tasks, rather than collecting data uniformly across tasks.
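As a rough illustration of focusing collection on underperforming tasks, the sketch below turns per-task success rates into sampling probabilities. The weighting rule (`1 - success_rate` with a small floor), the function names and the task names are all assumptions made for this example; the post does not specify how tasks are actually prioritized.

```python
import random


def task_sampling_weights(success_rates, floor=0.05):
    """Map per-task success rates to data-collection probabilities.

    Assumed rule (not from the post): weight = max(1 - success_rate, floor),
    then normalize. The floor keeps well-performing tasks from being
    dropped from collection entirely.
    """
    raw = {task: max(1.0 - rate, floor) for task, rate in success_rates.items()}
    total = sum(raw.values())
    return {task: weight / total for task, weight in raw.items()}


def choose_next_task(success_rates, rng):
    """Sample the task that the next robot episode should attempt."""
    weights = task_sampling_weights(success_rates)
    tasks = list(weights)
    return rng.choices(tasks, weights=[weights[t] for t in tasks], k=1)[0]


# Hypothetical success rates, for illustration only.
rates = {"lift-any": 0.9, "place-on-plate": 0.4, "cover-object": 0.1}
weights = task_sampling_weights(rates)
next_task = choose_next_task(rates, random.Random(0))
```

With these made-up numbers, the task with the lowest success rate receives the largest share of collection time, while the easiest task is still sampled occasionally.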

This system collected 9,600 robot hours of data (from 57 continuous data collection days on seven robots). However, while this data collection strategy was effective at collecting data for a large number of tasks, the success rate and data volume were imbalanced between tasks.

Learning with MT-Opt

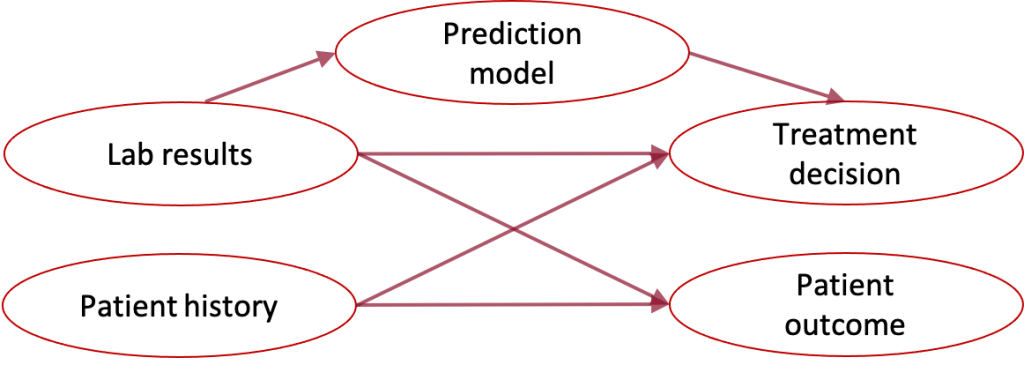

We address the data collection imbalance by transferring data across tasks and re-balancing the per-task data. The robots generate episodes that are labelled as success or failure for each task and are then copied and shared across other tasks. The balanced batch of episodes is then sent to our multi-task RL training pipeline to train the MT-Opt policy.

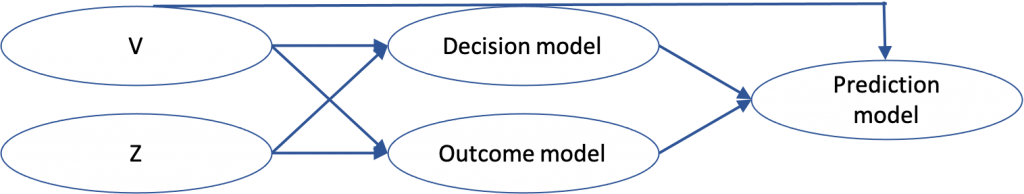

| Data sharing and task re-balancing strategy used by MT-Opt. The robots generate episodes which then get labelled as success or failure for the current task and are then shared across other tasks. |
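The sharing and re-balancing steps can be sketched in a few lines. This is a toy illustration under stated assumptions: the real success detectors are learned from images, whereas the `detectors` below are hypothetical hand-written checks on a made-up `final_state` dictionary, and drawing an equal number of samples per (task, outcome) group is just one plausible balancing rule.

```python
import random


def share_episode(episode, detectors):
    """Label one episode as success or failure for every task."""
    return [
        {"task": task, "episode": episode["id"], "success": bool(is_success(episode))}
        for task, is_success in detectors.items()
    ]


def balanced_batch(labelled, per_group, rng):
    """Draw the same number of samples from each (task, outcome) group."""
    groups = {}
    for item in labelled:
        groups.setdefault((item["task"], item["success"]), []).append(item)
    batch = []
    for key in sorted(groups):
        # Sample with replacement so that rare groups can still fill their quota.
        batch.extend(rng.choices(groups[key], k=per_group))
    return batch


# Hypothetical stand-ins for learned success detectors.
detectors = {
    "lift-any": lambda ep: ep["final_state"]["object_lifted"],
    "place-on-plate": lambda ep: ep["final_state"]["object_on_plate"],
}
episodes = [
    {"id": 0, "final_state": {"object_lifted": True, "object_on_plate": False}},
    {"id": 1, "final_state": {"object_lifted": True, "object_on_plate": True}},
    {"id": 2, "final_state": {"object_lifted": False, "object_on_plate": False}},
]
labelled = [row for ep in episodes for row in share_episode(ep, detectors)]
batch = balanced_batch(labelled, per_group=2, rng=random.Random(0))
```

Here every episode contributes one labelled example per task, so an episode collected for lifting also serves as a (mostly negative) example for placing, and the rare successful placement is oversampled in the batch.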

MT-Opt uses Q-learning, a popular RL method that learns a function that estimates the future sum of rewards, called the Q-function. The learned policy then picks the action that maximizes this learned Q-function. For multi-task policy training, we specify the task as an extra input to a large Q-learning network (inspired by our previous work on large-scale single-task learning with QT-Opt) and then train all of the tasks simultaneously with offline RL using the entire multi-task dataset. In this way, MT-Opt is able to train on a wide variety of skills that include picking specific objects, placing them into various fixtures, aligning items on a rack, rearranging and covering objects with towels, etc.
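To make the idea of a task-conditioned Q-function concrete, here is a minimal tabular sketch. It is not the MT-Opt implementation, which uses a large neural network over images and continuous actions; the discrete action set, the task and state names, and the hyperparameters are all assumptions chosen to keep the example small. The task is simply part of the key that indexes the Q-values, and training repeatedly replays a fixed dataset without collecting new data.

```python
from collections import defaultdict

ACTIONS = ["left", "right"]  # hypothetical discrete action set


def greedy_action(q, task, state):
    """Pick the action with the highest Q-value for this task and state."""
    return max(ACTIONS, key=lambda a: q[(task, state, a)])


def q_update(q, transition, lr=0.5, gamma=0.9):
    """Apply one Q-learning backup to a stored (offline) transition."""
    task, state, action, reward, next_state, done = transition
    bootstrap = 0.0 if done else max(q[(task, next_state, a)] for a in ACTIONS)
    target = reward + gamma * bootstrap
    q[(task, state, action)] += lr * (target - q[(task, state, action)])


# Tiny fixed dataset: the same state calls for different actions per task.
dataset = [
    ("reach-left", "center", "left", 1.0, "left-edge", True),
    ("reach-left", "center", "right", 0.0, "right-edge", True),
    ("reach-right", "center", "right", 1.0, "right-edge", True),
    ("reach-right", "center", "left", 0.0, "left-edge", True),
]

q = defaultdict(float)
for _ in range(20):  # repeated passes over the fixed dataset
    for transition in dataset:
        q_update(q, transition)

action_for_left = greedy_action(q, "reach-left", "center")
action_for_right = greedy_action(q, "reach-right", "center")
```

Because the task is an input, one Q-function yields a different greedy action in the same state depending on which task is requested.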

Compared to single-task baselines, MT-Opt performs similarly on the tasks that have the most data and significantly improves performance on underrepresented tasks. For instance, on a generic lifting task, which has the most supporting data, MT-Opt achieved an 89% success rate (compared to 88% for QT-Opt), and it achieved a 50% average success rate across rare tasks, compared to 1% with a single-task QT-Opt baseline and 18% using a naïve, multi-task QT-Opt baseline. MT-Opt not only enables zero-shot generalization to new but similar tasks, but can also be fine-tuned quickly (in about 1 day of data collection on seven robots) to new, previously unseen tasks. For example, when applied to an unseen towel-covering task, the system achieved a zero-shot success rate of 92% for towel-picking and 79% for object-covering, a task that wasn’t present in the original dataset.

| Example tasks that MT-Opt is able to learn, such as instance and indiscriminate grasping, chasing, placing, aligning and rearranging. |

| Towel-covering task that was not present in the original dataset. We fine-tune MT-Opt on this novel task in 1 day to achieve a high (>90%) success rate. |

Learning with Actionable Models

While supplying a rigid definition of tasks facilitates autonomous data collection for MT-Opt, it limits the number of learnable behaviors to a fixed set. To enable learning a wider range of tasks from the same data, we use goal-conditioned learning, i.e., learning to reach given goal configurations of a scene in front of the robot, which we specify with goal images. In contrast to explicit model-based methods that learn predictive models of future world observations, or approaches that employ online data collection, this approach learns goal-conditioned policies via offline model-free RL.

To learn to reach any goal state, we perform hindsight relabeling of all trajectories and sub-sequences in our collected dataset and train a goal-conditioned Q-function in a fully offline manner (in contrast to learning online using a fixed set of success examples as in recursive classification). One challenge in this setting is the distributional shift caused by learning only from “positive” hindsight relabeled examples. We address this by employing a conservative strategy that minimizes the Q-values of unseen actions using artificial negative actions. Furthermore, to enable reaching temporally extended goals, we introduce a technique for chaining goals across multiple episodes.

| Actionable Models relabel sub-sequences with all intermediate goals and regularize Q-values with artificial negative actions. |
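The relabeling idea can be sketched as follows. This is a simplified illustration, not the method from the paper: states are plain strings rather than images, the action set is hypothetical, and negative actions are drawn uniformly from the actions that were not taken, which is an assumption made here for brevity. Each state reached in a trajectory is treated as a goal for every step that precedes it, with a reward of 1 only on the step that actually reaches the goal.

```python
import random


def relabel_with_hindsight(trajectory):
    """Turn one trajectory into goal-conditioned training samples.

    trajectory: list of (state, action, next_state) tuples.
    """
    samples = []
    for end in range(len(trajectory)):
        goal = trajectory[end][2]  # state reached at the end of the sub-sequence
        for t in range(end + 1):
            state, action, next_state = trajectory[t]
            samples.append({
                "state": state,
                "action": action,
                "next_state": next_state,
                "goal": goal,
                "reward": 1.0 if t == end else 0.0,
            })
    return samples


def artificial_negatives(samples, action_space, rng):
    """Pair each sample with an action that was not taken and a Q-target of 0."""
    negatives = []
    for sample in samples:
        untaken = [a for a in action_space if a != sample["action"]]
        negatives.append({
            "state": sample["state"],
            "goal": sample["goal"],
            "action": rng.choice(untaken),  # assumed uniform choice, for illustration
            "q_target": 0.0,
        })
    return negatives


# Hypothetical three-step trajectory and action set.
trajectory = [("s0", "push", "s1"), ("s1", "grasp", "s2"), ("s2", "lift", "s3")]
positives = relabel_with_hindsight(trajectory)
negatives = artificial_negatives(
    positives, ["push", "grasp", "lift", "release"], random.Random(0)
)
```

A trajectory with three transitions yields six relabeled samples, three of which carry a reward of 1, and each is matched with one negative sample whose Q-value is pushed toward zero.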

Training with Actionable Models allows the system to learn a large repertoire of visually indicated skills, such as object grasping, container placing and object rearrangement. The model is also able to generalize to novel objects and visual objectives not seen in the training data, which demonstrates its ability to learn general functional knowledge about the world. We also show that downstream reinforcement learning tasks can be learned more efficiently by either fine-tuning a pre-trained goal-conditioned model or through a goal-reaching auxiliary objective during training.

| Example tasks (specified by goal-images) that our Actionable Model is able to learn. |

Conclusion

The results of both MT-Opt and Actionable Models indicate that it is possible to collect and then learn many distinct tasks from large diverse real-robot datasets within a single model, effectively amortizing the cost of learning across many skills. We see this as an important step towards general robot learning systems that can be further scaled up to perform many useful services and serve as a starting point for learning downstream tasks.

This post is based on two papers, “MT-Opt: Continuous Multi-Task Robotic Reinforcement Learning at Scale” and “Actionable Models: Unsupervised Offline Reinforcement Learning of Robotic Skills,” with additional information and videos on the project websites for MT-Opt and Actionable Models.

Acknowledgements

This research was conducted by Dmitry Kalashnikov, Jake Varley, Yevgen Chebotar, Ben Swanson, Rico Jonschkowski, Chelsea Finn, Sergey Levine, Yao Lu, Alex Irpan, Ben Eysenbach, Ryan Julian and Ted Xiao. We’d like to give special thanks to Josh Weaver, Noah Brown, Khem Holden, Linda Luu and Brandon Kinman for their robot operation support; Anthony Brohan for help with distributed learning and testing infrastructure; Tom Small for help with videos and project media; Julian Ibarz, Kanishka Rao, Vikas Sindhwani and Vincent Vanhoucke for their support; Tuna Toksoz and Garrett Peake for improving the bin reset mechanisms; Satoshi Kataoka, Michael Ahn, and Ken Oslund for help with the underlying control stack; and the rest of the Robotics at Google team for their overall support and encouragement. All the above contributions were incredibly enabling for this research.