Generative AI technology is improving rapidly, and it’s now possible to generate text and images based on text input. Stable Diffusion is a text-to-image model that empowers you to create photorealistic applications. You can easily generate images from text using Stable Diffusion models through Amazon SageMaker JumpStart.

The following are examples of input texts and the corresponding output images generated by Stable Diffusion. The inputs are “A boxer dancing on a table,” “A lady on the beach in swimming wear, water color style,” and “A dog in a suit.”

Although generative AI solutions are powerful and useful, they can also be vulnerable to manipulation and abuse. Customers who use them for image generation must prioritize content moderation: strong moderation practices protect users, create a safe and positive user experience, and safeguard the platform and brand reputation.

In this post, we explore using AWS AI services Amazon Rekognition and Amazon Comprehend, along with other techniques, to effectively moderate Stable Diffusion model-generated content in near-real time. To learn how to launch and generate images from text using a Stable Diffusion model on AWS, refer to Generate images from text with the stable diffusion model on Amazon SageMaker JumpStart.

Solution overview

Amazon Rekognition and Amazon Comprehend are managed AI services that provide pre-trained and customizable ML models through APIs, eliminating the need for machine learning (ML) expertise. Amazon Rekognition Content Moderation automates and streamlines image and video moderation. Amazon Comprehend uses ML to analyze text and uncover valuable insights and relationships.

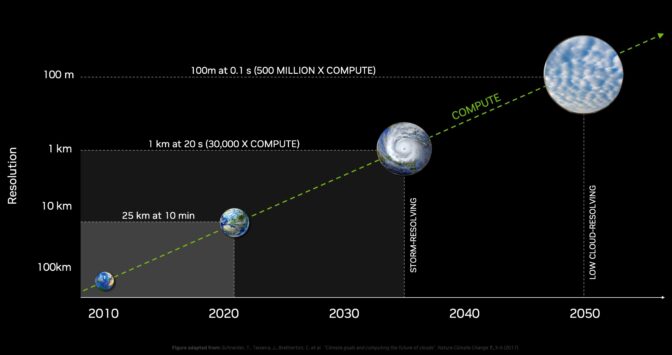

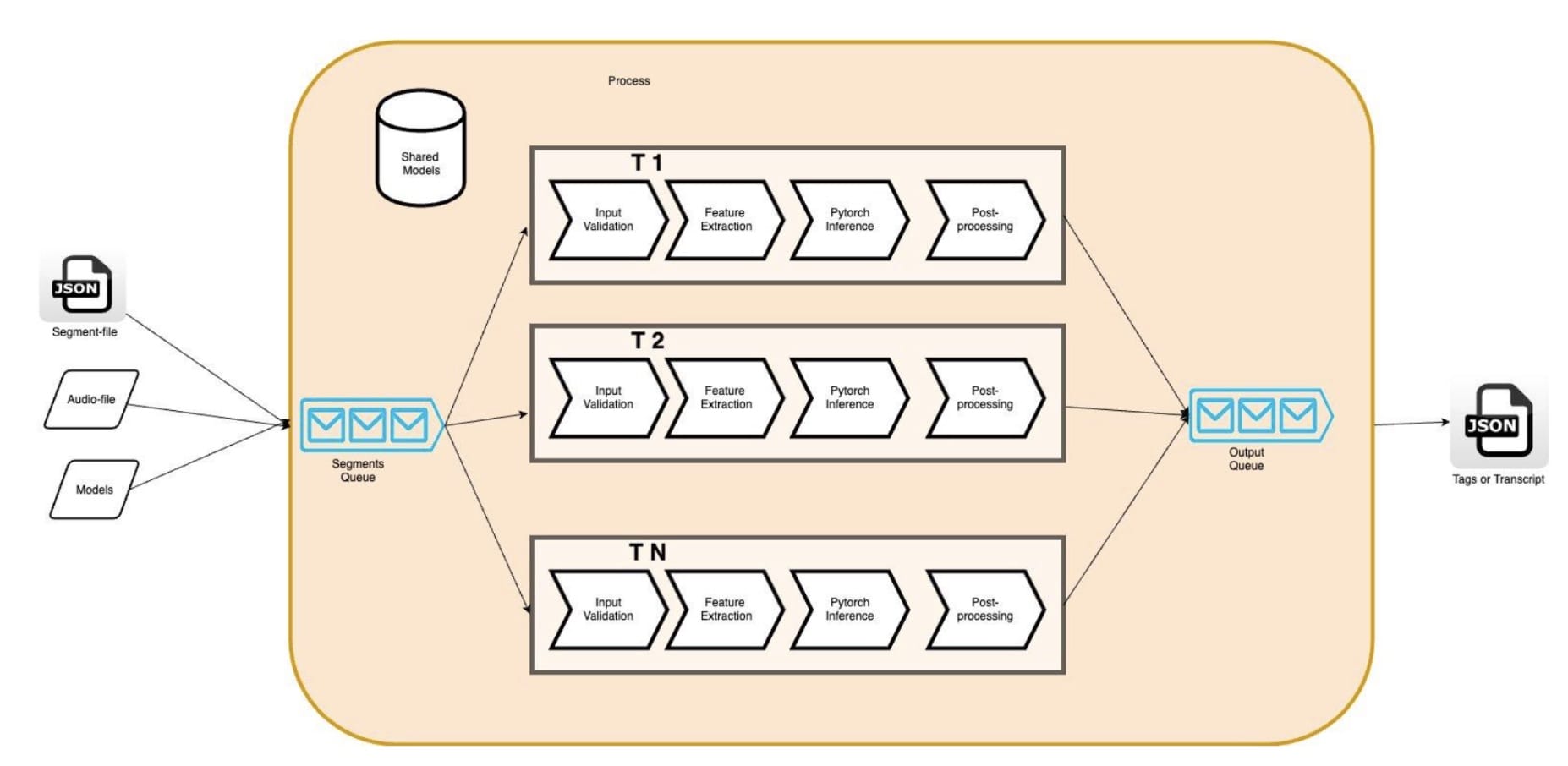

The following reference architecture illustrates the creation of a RESTful proxy API for moderating images generated by a Stable Diffusion text-to-image model in near-real time. In this solution, we launched and deployed a Stable Diffusion model (v2-1 base) using JumpStart. The solution uses negative prompts and text moderation solutions such as Amazon Comprehend and a rule-based filter to moderate input prompts. It also uses Amazon Rekognition to moderate the generated images. The RESTful API returns the generated image to the client, along with moderation warnings if unsafe information is detected.

The steps in the workflow are as follows:

- The user sends a prompt to generate an image.

- An AWS Lambda function coordinates image generation and moderation using Amazon Comprehend, JumpStart, and Amazon Rekognition:

  - Apply a rule-based condition to input prompts in the Lambda function, enforcing content moderation with forbidden word detection.

  - Use the Amazon Comprehend custom classifier to analyze the prompt text for toxicity classification.

  - Send the prompt to the Stable Diffusion model through the SageMaker endpoint, passing both the prompt from user input and negative prompts from a predefined list.

  - Send the image bytes returned from the SageMaker endpoint to the Amazon Rekognition DetectModerationLabels API for image moderation.

  - Construct a response message that includes image bytes and warnings if the previous steps detected any inappropriate information in the prompt or generated image.

  - Send the response back to the client.
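The workflow steps above can be sketched as a single coordinating function. This is a minimal illustration rather than the sample app's actual Lambda code: the function name and the four callables passed in are hypothetical stand-ins for the rule-based filter, the Amazon Comprehend classifier, the SageMaker endpoint call, and the Amazon Rekognition call.

```python
from typing import Callable, Dict, List


def moderate_and_generate(
    prompt: str,
    contains_forbidden_word: Callable[[str], bool],
    is_toxic: Callable[[str], bool],
    generate_image: Callable[[str], bytes],
    image_labels: Callable[[bytes], List[str]],
) -> Dict[str, object]:
    """Run the moderation steps in order and collect warnings (hypothetical helper)."""
    warnings: List[str] = []

    # Rule-based check first; the ML classifier is consulted only when
    # no explicit forbidden word is found.
    if contains_forbidden_word(prompt):
        warnings.append("Prompt contains a forbidden word")
    elif is_toxic(prompt):
        warnings.append("Prompt classified as toxic")

    # Generate the image; the callable is expected to add the negative prompts.
    image = generate_image(prompt)

    # Moderate the generated image and record every label returned.
    for label in image_labels(image):
        warnings.append(f"Image flagged: {label}")

    # Return the image together with any warnings for the client to display.
    return {"image": image, "warnings": warnings}
```

Passing the service calls in as parameters keeps the orchestration logic easy to test without AWS access; in a Lambda function you would bind them to real client calls.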

The following screenshot shows a sample app built using the described architecture. The web UI sends user input prompts to the RESTful proxy API and displays the image and any moderation warnings received in the response. The demo app blurs the actual generated image if it contains unsafe content. We tested the app with the sample prompt “A sexy lady.”

You can implement more sophisticated logic for a better user experience, such as rejecting the request if the prompts contain unsafe information. Additionally, you could have a retry policy to regenerate the image if the prompt is safe, but the output is unsafe.
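A retry policy of the kind mentioned above might look like the following sketch. It assumes the endpoint produces a different image on each call (for example, because the seed is not fixed); the function and parameter names are hypothetical.

```python
from typing import Callable, List, Optional


def generate_with_retry(
    prompt: str,
    generate_image: Callable[[str], bytes],
    image_labels: Callable[[bytes], List[str]],
    max_attempts: int = 3,
) -> Optional[bytes]:
    """Regenerate until the image passes moderation or attempts run out."""
    for _ in range(max_attempts):
        image = generate_image(prompt)
        # An empty label list means image moderation found nothing unsafe.
        if not image_labels(image):
            return image
    # Every attempt was flagged; the caller decides how to respond.
    return None
```

Each retry adds a full image generation to the response time, so a small `max_attempts` is usually a sensible starting point.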

Predefine a list of negative prompts

Stable Diffusion supports negative prompts, which let you specify concepts to avoid during image generation. Creating a predefined list of negative prompts is a practical and proactive approach to prevent the model from producing unsafe images. By including prompts like “naked,” “sexy,” and “nudity,” which are known to lead to inappropriate or offensive images, you steer the model away from them, reducing the risk of generating unsafe content.

The implementation can be managed in the Lambda function when calling the SageMaker endpoint to run inference of the Stable Diffusion model, passing both the prompts from user input and the negative prompts from a predefined list.
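As a rough sketch of that Lambda-side logic, the following builds a request payload that combines the user's prompt with a predefined negative prompt list and sends it to a SageMaker endpoint. The `negative_prompt` key and the list contents are assumptions for illustration; check the request schema of the model you deployed.

```python
import json
from typing import Dict, List

# Hypothetical predefined list; extend it to match your own policy.
NEGATIVE_PROMPTS: List[str] = ["naked", "sexy", "nudity"]


def build_payload(prompt: str, negative_prompts: List[str] = NEGATIVE_PROMPTS) -> Dict[str, str]:
    """Combine the user's prompt with the predefined negative prompts."""
    return {
        "prompt": prompt,
        # Assumed key name; verify it against your endpoint's input schema.
        "negative_prompt": ", ".join(negative_prompts),
    }


def invoke_stable_diffusion(endpoint_name: str, prompt: str) -> bytes:
    """Send the payload to a SageMaker endpoint and return the raw response body."""
    import boto3  # imported lazily so build_payload works without AWS access

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)).encode("utf-8"),
    )
    return response["Body"].read()
```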

Although this approach is effective, it could impact the results generated by the Stable Diffusion model and limit its functionality. It’s important to consider it as one of the moderation techniques, combined with other approaches such as text and image moderation using Amazon Comprehend and Amazon Rekognition.

Moderate input prompts

A common approach to text moderation is to use a rule-based keyword lookup method to identify whether the input text contains any forbidden words or phrases from a predefined list. This method is relatively easy to implement, with minimal performance impact and lower costs. However, the major drawback of this approach is that it’s limited to only detecting words included in the predefined list and can’t detect new or modified variations of forbidden words not included in the list. Users can also attempt to bypass the rules by using alternative spellings or special characters to replace letters.
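A minimal keyword lookup might look like the following sketch. The word list and function name are hypothetical, and the last call in the usage example illustrates the bypass weakness described above.

```python
import re
from typing import Iterable

# Hypothetical forbidden word list; a real deployment would maintain its own.
FORBIDDEN_WORDS = frozenset({"naked", "nudity"})


def contains_forbidden_word(prompt: str, forbidden: Iterable[str] = FORBIDDEN_WORDS) -> bool:
    """Return True if any whole word in the prompt is on the forbidden list."""
    forbidden_set = {word.lower() for word in forbidden}
    # Lowercase the prompt and split it into alphanumeric tokens.
    tokens = re.findall(r"[a-z0-9]+", prompt.lower())
    return any(token in forbidden_set for token in tokens)
```

For example, `contains_forbidden_word("A NAKED statue")` returns `True`, whereas `contains_forbidden_word("A n@ked statue")` returns `False` because the substituted character breaks the exact match.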

To address the limitations of rule-based text moderation, many solutions have adopted a hybrid approach that combines rule-based keyword lookup with ML-based toxicity detection. The combination of both approaches allows for a more comprehensive and effective text moderation solution, capable of detecting a wider range of inappropriate content and improving the accuracy of moderation outcomes.

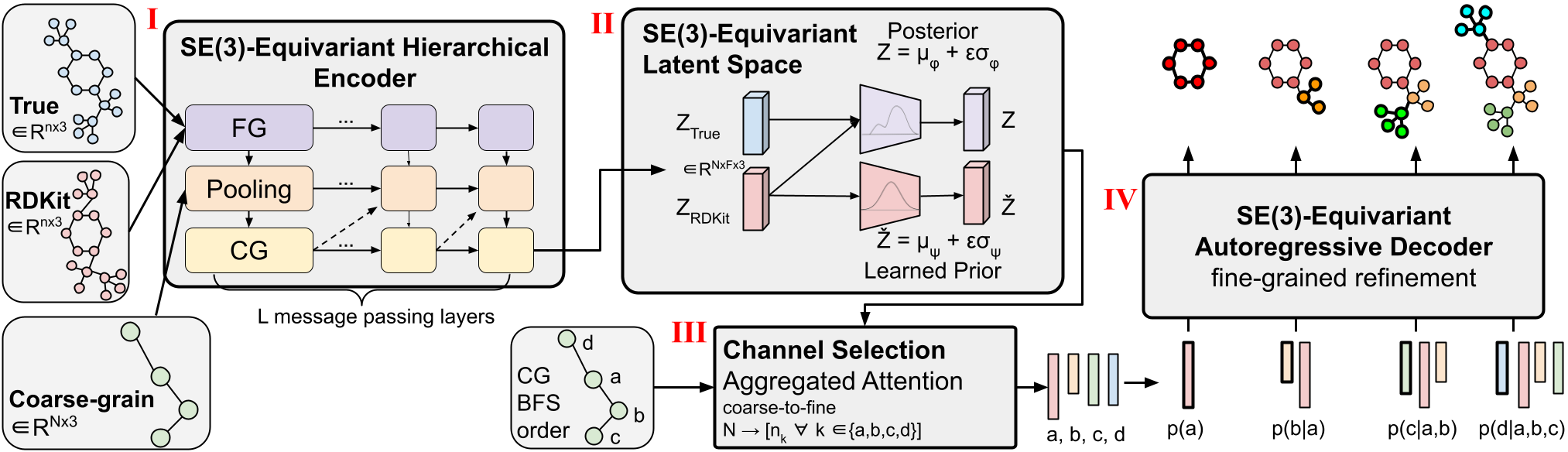

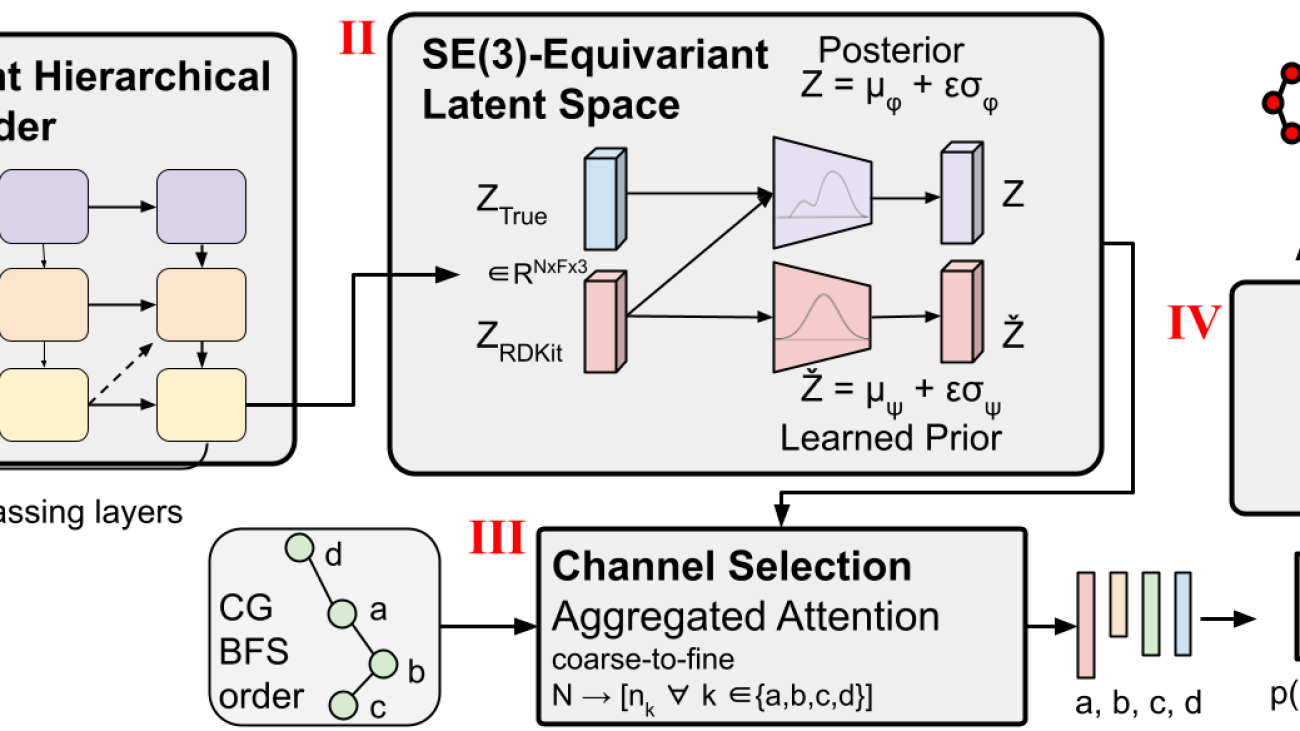

In this solution, we use an Amazon Comprehend custom classifier to train a toxicity detection model, which we use to detect potentially harmful content in input prompts in cases where no explicit forbidden words are detected. With the power of machine learning, we can teach the model to recognize patterns in text that may indicate toxicity, even when such patterns aren’t easily detectable by a rule-based approach.

With Amazon Comprehend as a managed AI service, training and inference are simplified. You can easily train and deploy Amazon Comprehend custom classification with just two steps. Check out our workshop lab for more information about the toxicity detection model using an Amazon Comprehend custom classifier. The lab provides a step-by-step guide to creating and integrating a custom toxicity classifier into your application. The following diagram illustrates this solution architecture.

This sample classifier uses a social media training dataset and performs binary classification. However, if you have more specific requirements for your text moderation needs, consider using a more tailored dataset to train your Amazon Comprehend custom classifier.
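Once the custom classifier is deployed as an Amazon Comprehend endpoint, the prompt check could be sketched as follows. The label name `toxic` and the 0.5 threshold are assumptions; they depend on the labels in your training data and on your tolerance for false positives.

```python
from typing import Any, Dict


def is_toxic(response: Dict[str, Any], toxic_label: str = "toxic", threshold: float = 0.5) -> bool:
    """Interpret a ClassifyDocument response from a binary custom classifier."""
    for item in response.get("Classes", []):
        # Each entry carries the class name and the model's confidence score.
        if item["Name"] == toxic_label and item["Score"] >= threshold:
            return True
    return False


def classify_prompt(prompt: str, endpoint_arn: str) -> Dict[str, Any]:
    """Call the real-time custom classifier endpoint for one prompt."""
    import boto3  # imported lazily so is_toxic can be tested without AWS access

    comprehend = boto3.client("comprehend")
    return comprehend.classify_document(Text=prompt, EndpointArn=endpoint_arn)
```

In the Lambda function, you would call `is_toxic(classify_prompt(prompt, endpoint_arn))` only when the rule-based filter finds no forbidden words.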

Moderate output images

Although moderating input text prompts is important, it doesn’t guarantee that all images generated by the Stable Diffusion model will be safe for the intended audience, because the model’s outputs can contain a certain level of randomness. Therefore, it’s equally important to moderate the images generated by the Stable Diffusion model.

In this solution, we use Amazon Rekognition Content Moderation, which employs pre-trained ML models to detect inappropriate content in images and videos. Specifically, we call the Amazon Rekognition DetectModerationLabels API to moderate images generated by the Stable Diffusion model in near-real time. Amazon Rekognition Content Moderation provides pre-trained APIs to detect a wide range of inappropriate or offensive content, such as violence, nudity, hate symbols, and more. For a comprehensive list of Amazon Rekognition Content Moderation taxonomies, refer to Moderating content.

The following code demonstrates how to call the Amazon Rekognition DetectModerationLabels API to moderate images within a Lambda function using the Python Boto3 library. The code takes the image bytes returned from SageMaker and sends them to the Image Moderation API for moderation.

import base64

import boto3

# Initialize the Amazon Rekognition client object
rekognition = boto3.client('rekognition')

# img_bytes is assumed to hold the base64-encoded image returned from SageMaker.
# Call the Rekognition Image moderation API and store the results
response = rekognition.detect_moderation_labels(
    Image={
        'Bytes': base64.b64decode(img_bytes)
    }
)

# Print the API response
print(response)

For additional examples of the Amazon Rekognition Image Moderation API, refer to our Content Moderation Image Lab.
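To turn the API response into warnings for the client, you might post-process the `ModerationLabels` list along these lines. The helper name, the warning format, and the 50% cutoff are illustrative choices, not part of the sample app.

```python
from typing import Any, Dict, List


def moderation_warnings(response: Dict[str, Any], min_confidence: float = 50.0) -> List[str]:
    """Convert a DetectModerationLabels response into readable warnings."""
    warnings = []
    for label in response.get("ModerationLabels", []):
        if label["Confidence"] < min_confidence:
            continue
        # Second-level labels carry their top-level category in ParentName.
        parent = label.get("ParentName") or ""
        name = f"{parent}/{label['Name']}" if parent else label["Name"]
        warnings.append(f"{name} ({label['Confidence']:.1f}%)")
    return warnings
```

The API also accepts a `MinConfidence` request parameter, so the same filtering can be done on the service side instead.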

Effective image moderation techniques for fine-tuning models

Fine-tuning is a common technique used to adapt pre-trained models to specific tasks. In the case of Stable Diffusion, fine-tuning can be used to generate images that incorporate specific objects, styles, and characters. Content moderation is critical when training a Stable Diffusion model to prevent the creation of inappropriate or offensive images. This involves carefully reviewing and filtering out any training data that could lead to the generation of such images. By doing so, the model learns only from vetted data, which reduces the risk of it propagating harmful content.

JumpStart makes fine-tuning the Stable Diffusion model easy by providing transfer learning scripts that use the DreamBooth method. You just need to prepare your training data, define the hyperparameters, and start the training job. For more details, refer to Fine-tune text-to-image Stable Diffusion models with Amazon SageMaker JumpStart.

The dataset for fine-tuning needs to be a single Amazon Simple Storage Service (Amazon S3) directory that includes your images and the instance configuration file dataset_info.json, as shown in the following directory listing. The JSON file associates the images with the instance prompt like this: {'instance_prompt':<<instance_prompt>>}.

input_directory
|---instance_image_1.png
|---instance_image_2.png
|---instance_image_3.png
|---instance_image_4.png
|---instance_image_5.png
|---dataset_info.json

You can manually review and filter the images, but this is time-consuming and can become impractical at scale across many projects and teams. In such cases, you can automate a batch process that centrally checks all the images against the Amazon Rekognition DetectModerationLabels API and automatically flags or removes unsafe images so they don’t contaminate your training data.
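A batch check of this kind could be sketched as follows, assuming the training images sit under one S3 prefix. The function name is hypothetical, and the clients are passed in so that the logic can be exercised without AWS access.

```python
from typing import Any, List

IMAGE_SUFFIXES = (".png", ".jpg", ".jpeg")


def flag_unsafe_images(
    s3: Any, rekognition: Any, bucket: str, prefix: str, min_confidence: float = 50.0
) -> List[str]:
    """Return the S3 keys of training images that Rekognition flags."""
    flagged = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            # Skip dataset_info.json and anything else that is not an image.
            if not key.lower().endswith(IMAGE_SUFFIXES):
                continue
            # Rekognition reads the image directly from S3.
            response = rekognition.detect_moderation_labels(
                Image={"S3Object": {"Bucket": bucket, "Name": key}},
                MinConfidence=min_confidence,
            )
            if response["ModerationLabels"]:
                flagged.append(key)
    return flagged
```

With real clients, you would call it as `flag_unsafe_images(boto3.client("s3"), boto3.client("rekognition"), bucket, "input_directory/")` and then remove or quarantine the returned keys before starting the training job.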

Moderation latency and cost

In this solution, text and images are moderated sequentially. Before invoking Stable Diffusion, a rule-based function and Amazon Comprehend moderate the input prompt; after the image is generated, Amazon Rekognition moderates the output. Although this approach effectively moderates input prompts and output images, it can increase the overall cost and latency of the solution.

Latency

Both Amazon Rekognition and Amazon Comprehend offer managed APIs that are highly available and have built-in scalability. Although latency varies with input size and network speed, the APIs used in this solution from both services offer near-real-time inference. In our tests with the sample application, the Amazon Comprehend custom classifier endpoint responded in less than 200 milliseconds for input text of fewer than 100 characters, and the Amazon Rekognition Image Moderation API responded in approximately 500 milliseconds for images averaging less than 1 MB. Both results meet a near-real-time requirement.

In total, the moderation API calls to Amazon Rekognition and Amazon Comprehend add about 700 milliseconds to each request. The Stable Diffusion request itself usually takes longer, depending on the complexity of the prompt and the capability of the underlying infrastructure. In the test account, using an ml.p3.2xlarge instance, the average response time for the Stable Diffusion model via a SageMaker endpoint was around 15 seconds. The latency introduced by moderation is therefore approximately 5% of the overall response time, a minimal impact on the overall performance of the system.
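The arithmetic behind the 5% estimate can be reproduced with a few lines, using the upper-bound latencies from the test described above:

```python
# Latencies observed in the test, in milliseconds.
comprehend_ms = 200          # custom classifier endpoint, upper bound
rekognition_ms = 500         # image moderation, approximate
stable_diffusion_ms = 15_000 # average image generation time

moderation_ms = comprehend_ms + rekognition_ms
# Moderation overhead relative to image generation alone.
share_of_generation = moderation_ms / stable_diffusion_ms
# Moderation overhead as a fraction of the end-to-end response time.
share_of_total = moderation_ms / (stable_diffusion_ms + moderation_ms)

print(f"{moderation_ms} ms: {share_of_generation:.1%} of generation, {share_of_total:.1%} end to end")
```

Either way of measuring rounds to roughly 5%, so the conclusion does not depend on which denominator you choose.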

Cost

The Amazon Rekognition Image Moderation API employs a pay-as-you-go model based on the number of requests. The cost varies depending on the AWS Region used and follows a tiered pricing structure. As the volume of requests increases, the cost per request decreases. For more information, refer to Amazon Rekognition pricing.

In this solution, we utilized an Amazon Comprehend custom classifier and deployed it as an Amazon Comprehend endpoint to facilitate real-time inference. This implementation incurs both a one-time training cost and ongoing inference costs. For detailed information, refer to Amazon Comprehend Pricing.

JumpStart enables you to quickly launch and deploy the Stable Diffusion model as a single package. Running inference on the Stable Diffusion model will incur costs for the underlying Amazon Elastic Compute Cloud (Amazon EC2) instance as well as inbound and outbound data transfer. For detailed information, refer to Amazon SageMaker Pricing.

Summary

In this post, we provided an overview of a sample solution that showcases how to moderate Stable Diffusion input prompts and output images using Amazon Comprehend and Amazon Rekognition. Additionally, you can define negative prompts in Stable Diffusion to prevent generating unsafe content. By implementing multiple moderation layers, the risk of producing unsafe content can be greatly reduced, ensuring a safer and more dependable user experience.

Learn more about content moderation on AWS and our content moderation ML use cases, and take the first step towards streamlining your content moderation operations with AWS.

About the Authors

Lana Zhang is a Senior Solutions Architect on the AWS WWSO AI Services team, specializing in AI and ML for content moderation, computer vision, and natural language processing. With her expertise, she is dedicated to promoting AWS AI/ML solutions and assisting customers in transforming their business solutions across diverse industries, including social media, gaming, e-commerce, and advertising & marketing.

James Wu is a Senior AI/ML Specialist Solution Architect at AWS, helping customers design and build AI/ML solutions. James’s work covers a wide range of ML use cases, with a primary interest in computer vision, deep learning, and scaling ML across the enterprise. Prior to joining AWS, James was an architect, developer, and technology leader for over 10 years, including 6 years in engineering and 4 years in marketing and advertising industries.

Kevin Carlson is a Principal AI/ML Specialist with a focus on Computer Vision at AWS, where he leads Business Development and GTM for Amazon Rekognition. Prior to joining AWS, he led Digital Transformation globally at Fortune 500 Engineering company AECOM, with a focus on artificial intelligence and machine learning for generative design and infrastructure assessment. He is based in Chicago, where outside of work he enjoys time with his family, and is passionate about flying airplanes and coaching youth baseball.

John Rouse is a Senior AI/ML Specialist at AWS, where he leads global business development for AI services focused on Content Moderation and Compliance use cases. Prior to joining AWS, he held senior-level business development and leadership roles with cutting-edge technology companies. John is working to put machine learning in the hands of every developer with the AWS AI/ML stack. Small ideas bring about small impact. John’s goal for customers is to empower them with big ideas and opportunities that open doors so they can make a major impact with their customers.

Henrik Balle is a Sr. Solutions Architect at AWS supporting the US Public Sector. He works closely with customers on a range of topics from machine learning to security and governance at scale. In his spare time, he loves road biking, motorcycling, or you might find him working on yet another home improvement project.
