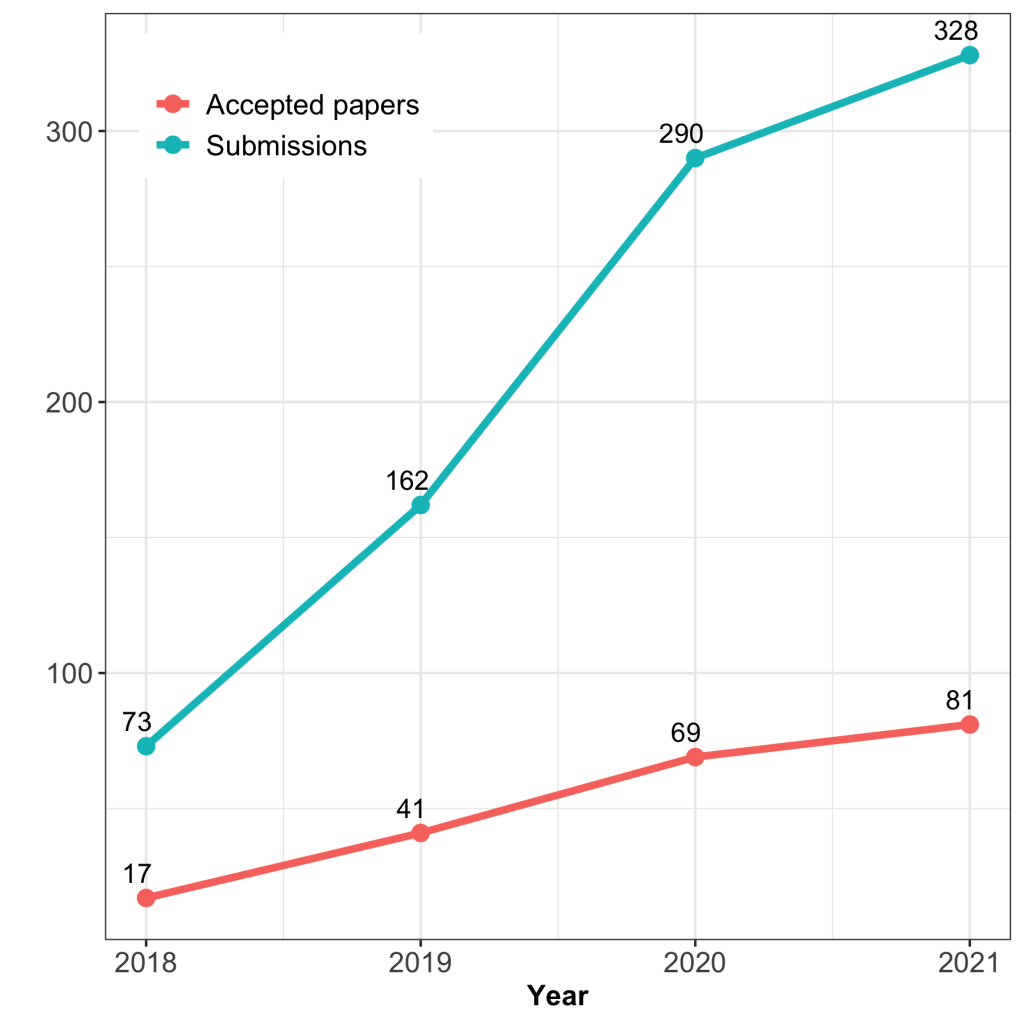

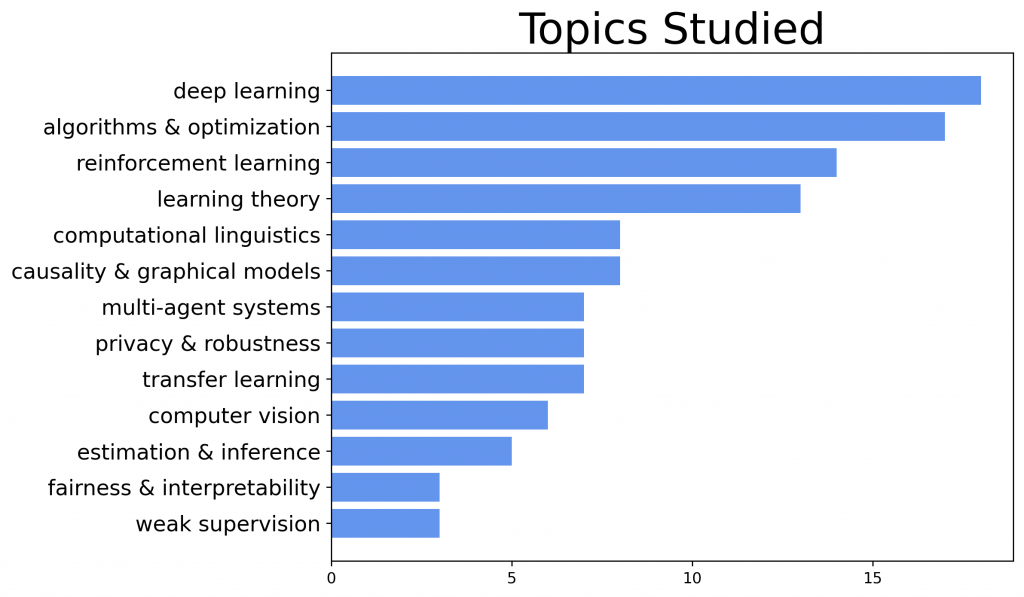

Carnegie Mellon University is proud to present 92 papers in the main conference and 9 papers in the datasets and benchmarks track at the 35th Conference on Neural Information Processing Systems (NeurIPS 2021), which will be held virtually this week. Additionally, CMU faculty and students are co-organizing 6 workshops and 1 tutorial, as well as giving 7 invited talks at the main conference and workshops. Here is a quick overview of the areas our researchers are working on:

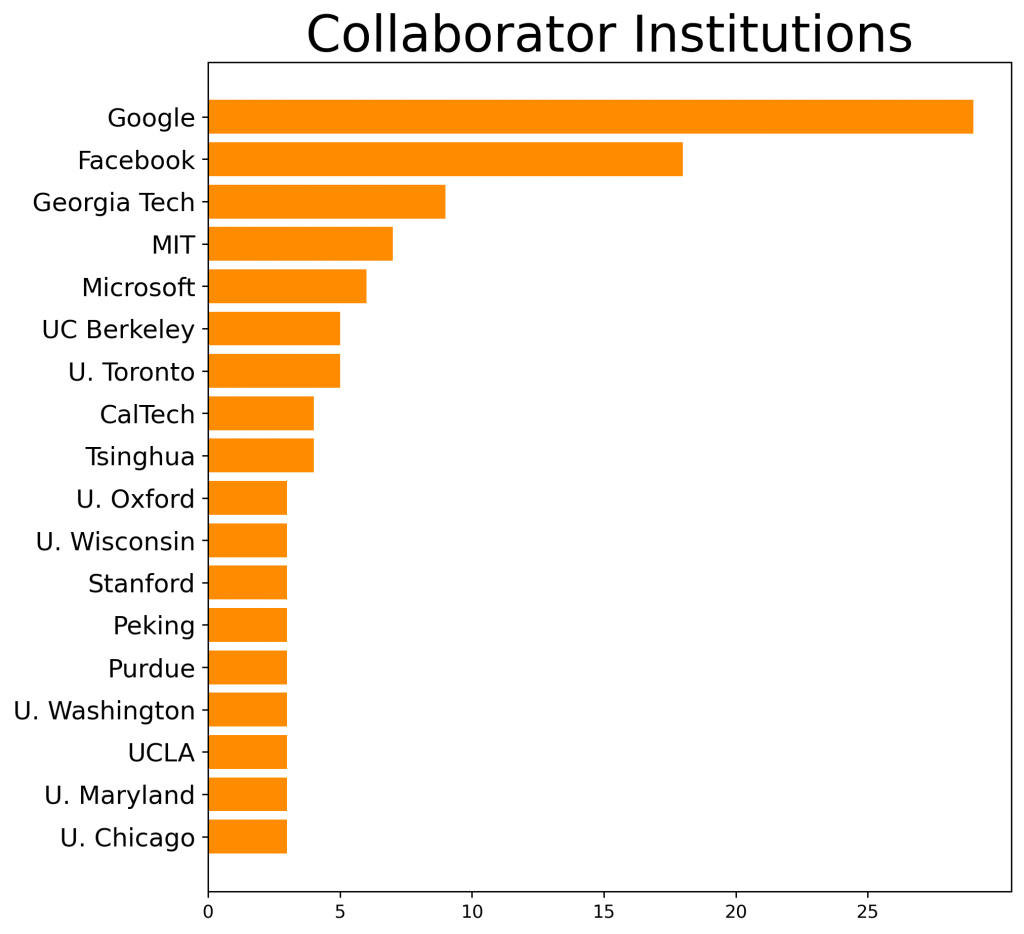

We are also proud to collaborate with many other researchers in academia and industry.

Conference Papers

Algorithms & Optimization

Adversarial Robustness of Streaming Algorithms through Importance Sampling

Vladimir Braverman (Johns Hopkins University) · Avinatan Hassidim (Google) · Yossi Matias (Tel Aviv University) · Mariano Schain (Google) · Sandeep Silwal (Massachusetts Institute of Technology) · Samson Zhou (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot G1

Global Convergence of Gradient Descent for Asymmetric Low-Rank Matrix Factorization

Tian Ye (Carnegie Mellon University) · Simon Du (University of Washington)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot F3

Convergence Rates of Stochastic Gradient Descent under Infinite Noise Variance

Hongjian Wang (Carnegie Mellon University) · Mert Gurbuzbalaban (Rutgers University) · Lingjiong Zhu (Florida State University) · Umut Simsekli (Inria Paris / ENS) · Murat Erdogdu (University of Toronto)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot E3

Few-Shot Data-Driven Algorithms for Low Rank Approximation

Piotr Indyk (MIT) · Tal Wagner (Microsoft Research Redmond) · David Woodruff (Carnegie Mellon University)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot A3

Training Certifiably Robust Neural Networks with Efficient Local Lipschitz Bounds

Yujia Huang (Caltech) · Huan Zhang (UCLA) · Yuanyuan Shi (Caltech) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A) · Anima Anandkumar (NVIDIA / Caltech)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot H2

Controlled Text Generation as Continuous Optimization with Multiple Constraints

Sachin Kumar (CMU) · Eric Malmi (Google) · Aliaksei Severyn (Google) · Yulia Tsvetkov (Department of Computer Science, University of Washington)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot D1

Greedy Approximation Algorithms for Active Sequential Hypothesis Testing

Kyra Gan (Carnegie Mellon University) · Su Jia (CMU) · Andrew Li (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot B0

Optimal Sketching for Trace Estimation

Shuli Jiang (Carnegie Mellon University) · Hai Pham (Carnegie Mellon University) · David Woodruff (Carnegie Mellon University) · Richard Zhang (Google Brain)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot A0

Federated Hyperparameter Tuning: Challenges, Baselines, and Connections to Weight-Sharing

Misha Khodak (CMU) · Renbo Tu (Carnegie Mellon University) · Tian Li (CMU) · Liam Li (Carnegie Mellon University) · Maria-Florina Balcan (Carnegie Mellon University) · Virginia Smith (Carnegie Mellon University) · Ameet S Talwalkar (CMU)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot C1

Linear and Kernel Classification in the Streaming Model: Improved Bounds for Heavy Hitters

Arvind Mahankali (Carnegie Mellon University) · David Woodruff (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot E3

On Large-Cohort Training for Federated Learning

Zachary Charles (Google Research) · Zachary Garrett (Google) · Zhouyuan Huo (Google) · Sergei Shmulyian (Google) · Virginia Smith (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D1

The Skellam Mechanism for Differentially Private Federated Learning

Naman Agarwal (Google) · Peter Kairouz (Google) · Ken Liu (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot C1

Federated Reconstruction: Partially Local Federated Learning

Karan Singhal (Google Research) · Hakim Sidahmed (Carnegie Mellon University) · Zachary Garrett (Google) · Shanshan Wu (University of Texas at Austin) · Keith Rush (Google) · Sushant Prakash (Google)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot C0

Habitat 2.0: Training Home Assistants to Rearrange their Habitat

Andrew Szot (Georgia Institute of Technology) · Alexander Clegg (Facebook (FAIR Labs)) · Eric Undersander (Facebook) · Erik Wijmans (Georgia Institute of Technology) · Yili Zhao (Facebook AI Research) · John Turner (Facebook) · Noah Maestre (Facebook) · Mustafa Mukadam (Facebook AI Research) · Devendra Singh Chaplot (Carnegie Mellon University) · Oleksandr Maksymets (Facebook AI Research) · Aaron Gokaslan (Facebook) · Vladimír Vondruš (Magnum Engine) · Sameer Dharur (Georgia Tech) · Franziska Meier (Facebook AI Research) · Wojciech Galuba (Facebook AI Research) · Angel Chang (Simon Fraser University) · Zsolt Kira (Georgia Institute of Technology) · Vladlen Koltun (Apple) · Jitendra Malik (UC Berkeley) · Manolis Savva (Simon Fraser University) · Dhruv Batra

Thu Dec 09 12:30 AM — 02:00 AM (PST) @ Poster Session 5 Spot E0

Joint inference and input optimization in equilibrium networks

Swaminathan Gurumurthy (Carnegie Mellon University) · Shaojie Bai (Carnegie Mellon University) · Zachary Manchester (Carnegie Mellon University) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot E1

On Training Implicit Models

Zhengyang Geng (Peking University) · Xin-Yu Zhang (TuSimple) · Shaojie Bai (Carnegie Mellon University) · Yisen Wang (Peking University) · Zhouchen Lin (Peking University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot G2

Robust Online Correlation Clustering

Silvio Lattanzi (Google Research) · Benjamin Moseley (Carnegie Mellon University) · Sergei Vassilvitskii (Google) · Yuyan Wang (Carnegie Mellon University) · Rudy Zhou (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot A1

Causality & Graphical Models

Can Information Flows Suggest Targets for Interventions in Neural Circuits?

Praveen Venkatesh (Allen Institute) · Sanghamitra Dutta (Carnegie Mellon University) · Neil Mehta (Carnegie Mellon University) · Pulkit Grover (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A1

Identification of Partially Observed Linear Causal Models: Graphical Conditions for the Non-Gaussian and Heterogeneous Cases

Jeff Adams (University of Copenhagen) · Niels Hansen (University of Copenhagen) · Kun Zhang (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot C0

Instance-dependent Label-noise Learning under a Structural Causal Model

Nick Yao (University of Sydney) · Tongliang Liu (The University of Sydney) · Mingming Gong (University of Melbourne) · Bo Han (HKBU / RIKEN) · Gang Niu (RIKEN) · Kun Zhang (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot G3

Learning Treatment Effects in Panels with General Intervention Patterns

Vivek Farias (Massachusetts Institute of Technology) · Andrew Li (Carnegie Mellon University) · Tianyi Peng (Massachusetts Institute of Technology)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot F3

Reliable Causal Discovery with Improved Exact Search and Weaker Assumptions

Ignavier Ng (Carnegie Mellon University) · Yujia Zheng (Carnegie Mellon University) · Jiji Zhang (Lingnan University) · Kun Zhang (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot C1

Multi-task Learning of Order-Consistent Causal Graphs

Xinshi Chen (Georgia Institute of Technology) · Haoran Sun (Georgia Institute of Technology) · Caleb Ellington (Carnegie Mellon University) · Eric Xing (Petuum Inc. / Carnegie Mellon University) · Le Song (Georgia Institute of Technology)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot C3

Efficient Online Estimation of Causal Effects by Deciding What to Observe

Shantanu Gupta (Carnegie Mellon University) · Zachary Lipton (Carnegie Mellon University) · David Childers (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot A0

Learning latent causal graphs via mixture oracles

Bohdan Kivva (University of Chicago) · Goutham Rajendran (University of Chicago) · Pradeep Ravikumar (Carnegie Mellon University) · Bryon Aragam (University of Chicago)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot C0

Computational Linguistics

BARTScore: Evaluating Generated Text as Text Generation

Weizhe Yuan (Carnegie Mellon University) · Graham Neubig (Carnegie Mellon University) · Pengfei Liu (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A3

Off-Policy Risk Assessment in Contextual Bandits

Audrey Huang (Carnegie Mellon University) · Liu Leqi (Carnegie Mellon University) · Zachary Lipton (Carnegie Mellon University) · Kamyar Azizzadenesheli (Purdue University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot C2

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers

Enze Xie (The University of Hong Kong) · Wenhai Wang (Nanjing University) · Zhiding Yu (Carnegie Mellon University) · Anima Anandkumar (NVIDIA / Caltech) · Jose M. Alvarez (NVIDIA) · Ping Luo (The University of Hong Kong)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A3

Dynamic population-based meta-learning for multi-agent communication with natural language

Abhinav Gupta (Facebook AI Research/CMU) · Marc Lanctot (DeepMind) · Angeliki Lazaridou (DeepMind)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot B2

(Implicit)^2: Implicit Layers for Implicit Representations

Zhichun Huang (Carnegie Mellon University) · Shaojie Bai (Carnegie Mellon University) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D1

Foundations of Symbolic Languages for Model Interpretability

Marcelo Arenas (Pontificia Universidad Catolica de Chile) · Daniel Báez (Universidad de Chile) · Pablo Barceló (PUC Chile & Millennium Institute for Foundational Research on Data) · Jorge Pérez (Universidad de Chile) · Bernardo Subercaseaux (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot C1

Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers

Mandela Patrick (University of Oxford) · Dylan Campbell (University of Oxford) · Yuki Asano (University of Amsterdam) · Ishan Misra (Facebook AI Research) · Florian Metze (Carnegie Mellon University) · Christoph Feichtenhofer (Facebook AI Research) · Andrea Vedaldi (University of Oxford / Facebook AI Research) · João Henriques (University of Oxford)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot C3

Computer Vision

Dynamics-regulated kinematic policy for egocentric pose estimation

Zhengyi Luo (Carnegie Mellon University) · Ryo Hachiuma (Keio University) · Ye Yuan (Carnegie Mellon University) · Kris Kitani (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot D3

NeRS: Neural Reflectance Surfaces for Sparse-view 3D Reconstruction in the Wild

Jason Zhang (Carnegie Mellon University) · Gengshan Yang (Carnegie Mellon University) · Shubham Tulsiani (UC Berkeley) · Deva Ramanan (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot D0

SEAL: Self-supervised Embodied Active Learning using Exploration and 3D Consistency

Devendra Singh Chaplot (Carnegie Mellon University) · Murtaza Dalal (Carnegie Mellon University) · Saurabh Gupta (UIUC) · Jitendra Malik (UC Berkeley) · Russ Salakhutdinov (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot A1

Interesting Object, Curious Agent: Learning Task-Agnostic Exploration

Simone Parisi (Facebook) · Victoria Dean (CMU) · Deepak Pathak (Carnegie Mellon University) · Abhinav Gupta (Facebook AI Research/CMU)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot I3

TöRF: Time-of-Flight Radiance Fields for Dynamic Scene View Synthesis

Benjamin Attal (Carnegie Mellon University) · Eliot Laidlaw (Brown University) · Aaron Gokaslan (Facebook) · Changil Kim (Facebook) · Christian Richardt (University of Bath) · James Tompkin (Brown University) · Matthew O’Toole (Carnegie Mellon University)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot E3

ViSER: Video-Specific Surface Embeddings for Articulated 3D Shape Reconstruction

Gengshan Yang (Carnegie Mellon University) · Deqing Sun (Google) · Varun Jampani (Google) · Daniel Vlasic (Massachusetts Institute of Technology) · Forrester Cole (Google Research) · Ce Liu (Microsoft) · Deva Ramanan (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot A2

Deep Learning

Can fMRI reveal the representation of syntactic structure in the brain?

Aniketh Janardhan Reddy (University of California Berkeley) · Leila Wehbe (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A3

PLUR: A Unifying, Graph-Based View of Program Learning, Understanding, and Repair

Zimin Chen (KTH Royal Institute of Technology, Stockholm, Sweden) · Vincent J Hellendoorn (CMU) · Pascal Lamblin (Google Research – Brain Team) · Petros Maniatis (Google Brain) · Pierre-Antoine Manzagol (Google) · Daniel Tarlow (Microsoft Research Cambridge) · Subhodeep Moitra (Google, Inc.)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot A3

Rethinking Neural Operations for Diverse Tasks

Nick Roberts (University of Wisconsin-Madison) · Misha Khodak (CMU) · Tri Dao (Stanford University) · Liam Li (Carnegie Mellon University) · Chris Ré (Stanford) · Ameet S Talwalkar (CMU)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot H1

Neural Additive Models: Interpretable Machine Learning with Neural Nets

Rishabh Agarwal (Google Research, Brain Team) · Levi Melnick (Microsoft) · Nicholas Frosst (Google) · Xuezhou Zhang (UW-Madison) · Ben Lengerich (Carnegie Mellon University) · Rich Caruana (Microsoft) · Geoffrey Hinton (Google)

Wed Dec 08 12:30 AM — 02:00 AM (PST) @ Poster Session 3 Spot A2

Emergent Discrete Communication in Semantic Spaces

Mycal Tucker (Massachusetts Institute of Technology) · Huao Li (University of Pittsburgh) · Siddharth Agrawal (Carnegie Mellon University) · Dana Hughes (Carnegie Mellon University) · Katia Sycara (CMU) · Michael Lewis (University of Pittsburgh) · Julie A Shah (MIT)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot A1

Why Spectral Normalization Stabilizes GANs: Analysis and Improvements

Zinan Lin (Carnegie Mellon University) · Vyas Sekar (Carnegie Mellon University) · Giulia Fanti (CMU)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot C3

Can multi-label classification networks know what they don’t know?

Haoran Wang (Carnegie Mellon University) · Weitang Liu (UC San Diego) · Alex Bocchieri (University of Wisconsin, Madison) · Sharon Li (University of Wisconsin-Madison)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D3

Influence Patterns for Explaining Information Flow in BERT

Kaiji Lu (Carnegie Mellon University) · Zifan Wang (Carnegie Mellon University) · Peter Mardziel (Carnegie Mellon University) · Anupam Datta (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot C0

Local Signal Adaptivity: Provable Feature Learning in Neural Networks Beyond Kernels

Stefani Karp (Carnegie Mellon University/Google) · Ezra Winston (Carnegie Mellon University) · Yuanzhi Li (CMU) · Aarti Singh (CMU)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot G3

Beta-CROWN: Efficient Bound Propagation with Per-neuron Split Constraints for Neural Network Robustness Verification

Shiqi Wang (Columbia) · Huan Zhang (UCLA) · Kaidi Xu (Northeastern University) · Xue Lin (Northeastern University) · Suman Jana (Columbia University) · Cho-Jui Hsieh (UCLA) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot F1

Luna: Linear Unified Nested Attention

Max Ma (University of Southern California) · Xiang Kong (Carnegie Mellon University) · Sinong Wang (Facebook AI) · Chunting Zhou (Language Technologies Institute, Carnegie Mellon University) · Jonathan May (University of Southern California) · Hao Ma (Facebook AI) · Luke Zettlemoyer (University of Washington and Facebook)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot A3

Parametric Complexity Bounds for Approximating PDEs with Neural Networks

Tanya Marwah (Carnegie Mellon University) · Zachary Lipton (Carnegie Mellon University) · Andrej Risteski (CMU)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot A1

Estimation & Inference

Leveraging Spatial and Temporal Correlations in Sparsified Mean Estimation

Divyansh Jhunjhunwala (Carnegie Mellon University) · Ankur Mallick (Carnegie Mellon University) · Advait Gadhikar (Carnegie Mellon University) · Swanand Kadhe (University of California Berkeley) · Gauri Joshi (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot G2

Beyond Pinball Loss: Quantile Methods for Calibrated Uncertainty Quantification

Youngseog Chung (Carnegie Mellon University) · Willie Neiswanger (Carnegie Mellon University) · Ian Char (Carnegie Mellon University) · Jeff Schneider (CMU)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot G0

Lattice partition recovery with dyadic CART

Oscar Hernan Madrid Padilla (University of California, Los Angeles) · Yi Yu (The University of Warwick) · Alessandro Rinaldo (CMU)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D2

Mixture Proportion Estimation and PU Learning: A Modern Approach

Saurabh Garg (CMU) · Yifan Wu (Carnegie Mellon University) · Alexander J Smola (NICTA) · Sivaraman Balakrishnan (Carnegie Mellon University) · Zachary Lipton (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot B2

Fairness & Interpretability

Fair Sortition Made Transparent

Bailey Flanigan (Carnegie Mellon University) · Greg Kehne (Harvard University) · Ariel Procaccia (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot B1

Learning Theory

A unified framework for bandit multiple testing

Ziyu Xu (Carnegie Mellon University) · Ruodu Wang (University of Waterloo) · Aaditya Ramdas (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot C1

Learning-to-learn non-convex piecewise-Lipschitz functions

Maria-Florina Balcan (Carnegie Mellon University) · Misha Khodak (CMU) · Dravyansh Sharma (CMU) · Ameet S Talwalkar (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot E1

Rebounding Bandits for Modeling Satiation Effects

Liu Leqi (Carnegie Mellon University) · Fatma Kilinc Karzan (Carnegie Mellon University) · Zachary Lipton (Carnegie Mellon University) · Alan Montgomery (Carnegie Mellon University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A3

Sharp Impossibility Results for Hyper-graph Testing

Jiashun Jin (CMU Statistics) · Tracy Ke (Harvard University) · Jiajun Liang (Purdue University)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A2

Dimensionality Reduction for Wasserstein Barycenter

Zach Izzo (Stanford University) · Sandeep Silwal (Massachusetts Institute of Technology) · Samson Zhou (Carnegie Mellon University)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot B3

Sample Complexity of Tree Search Configuration: Cutting Planes and Beyond

Maria-Florina Balcan (Carnegie Mellon University) · Siddharth Prasad (Computer Science Department, Carnegie Mellon University) · Tuomas Sandholm (CMU, Strategic Machine, Strategy Robot, Optimized Markets) · Ellen Vitercik (University of California, Berkeley)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot E1

Faster Matchings via Learned Duals

Michael Dinitz (Johns Hopkins University) · Sungjin Im (University of California, Merced) · Thomas Lavastida (Carnegie Mellon University) · Benjamin Moseley (Carnegie Mellon University) · Sergei Vassilvitskii (Google)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot A1

Breaking the Sample Complexity Barrier to Regret-Optimal Model-Free Reinforcement Learning

Gen Li (Tsinghua University) · Laixi Shi (Carnegie Mellon University) · Yuxin Chen (Caltech) · Yuantao Gu (Tsinghua University) · Yuejie Chi (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot H3

Universal Approximation Using Well-Conditioned Normalizing Flows

Holden Lee (Duke University) · Chirag Pabbaraju (Carnegie Mellon University) · Anish Sevekari (Carnegie Mellon University) · Andrej Risteski (CMU)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot B1

Boosted CVaR Classification

Runtian Zhai (Carnegie Mellon University) · Chen Dan (Carnegie Mellon University) · Arun Suggala (Carnegie Mellon University) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A) · Pradeep Ravikumar (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D0

Data driven semi-supervised learning

Maria-Florina Balcan (Carnegie Mellon University) · Dravyansh Sharma (CMU)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot C3

Multi-Agent Systems

Stateful Strategic Regression

Keegan Harris (Carnegie Mellon University) · Hoda Heidari (Carnegie Mellon University) · Steven Wu (Carnegie Mellon University)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot G0

Revenue maximization via machine learning with noisy data

Ellen Vitercik (University of California, Berkeley) · Tom Yan (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot C0

Equilibrium Refinement for the Age of Machines: The One-Sided Quasi-Perfect Equilibrium

Gabriele Farina (Carnegie Mellon University) · Tuomas Sandholm (CMU, Strategic Machine, Strategy Robot, Optimized Markets)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot A2

Subgame solving without common knowledge

Brian Zhang (Carnegie Mellon University) · Tuomas Sandholm (CMU, Strategic Machine, Strategy Robot, Optimized Markets)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot B2

Fast Policy Extragradient Methods for Competitive Games with Entropy Regularization

Shicong Cen (Carnegie Mellon University) · Yuting Wei (Carnegie Mellon University) · Yuejie Chi (Carnegie Mellon University)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot B1

Privacy & Robustness

Adversarially robust learning for security-constrained optimal power flow

Priya Donti (Carnegie Mellon University) · Aayushya Agarwal (Carnegie Mellon University) · Neeraj Vijay Bedmutha (Carnegie Mellon University) · Larry Pileggi (Carnegie Mellon University) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot B3

Robustness between the worst and average case

Leslie Rice (Carnegie Mellon University) · Anna Bair (Carnegie Mellon University) · Huan Zhang (UCLA) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot E1

Relaxing Local Robustness

Klas Leino (School of Computer Science, Carnegie Mellon University) · Matt Fredrikson (CMU)

Tue Dec 07 04:30 PM — 06:00 PM (PST) @ Poster Session 2 Spot A1

Iterative Methods for Private Synthetic Data: Unifying Framework and New Methods

Terrance Liu (Carnegie Mellon University) · Giuseppe Vietri (University of Minnesota) · Steven Wu (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot G1

Reinforcement Learning

Monte Carlo Tree Search With Iteratively Refining State Abstractions

Samuel Sokota (Carnegie Mellon University) · Caleb Y Ho (Independent Researcher) · Zaheen Ahmad (University of Alberta) · J. Zico Kolter (Carnegie Mellon University / Bosch Center for A)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot B1

Sample-Efficient Reinforcement Learning Is Feasible for Linearly Realizable MDPs with Limited Revisiting

Gen Li (Tsinghua University) · Yuxin Chen (Caltech) · Yuejie Chi (Carnegie Mellon University) · Yuantao Gu (Tsinghua University) · Yuting Wei (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot H2

When Is Generalizable Reinforcement Learning Tractable?

Dhruv Malik (Carnegie Mellon University) · Yuanzhi Li (CMU) · Pradeep Ravikumar (Carnegie Mellon University)

Wed Dec 08 04:30 PM — 06:00 PM (PST) @ Poster Session 4 Spot C2

An Exponential Lower Bound for Linearly Realizable MDP with Constant Suboptimality Gap

Yuanhao Wang (Tsinghua University) · Ruosong Wang (Carnegie Mellon University) · Sham Kakade (Harvard University & Microsoft Research)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot D3

Replacing Rewards with Examples: Example-Based Policy Search via Recursive Classification

Ben Eysenbach (Google AI Resident) · Sergey Levine (University of Washington) · Russ Salakhutdinov (Carnegie Mellon University)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot E1

Robust Predictable Control

Ben Eysenbach (Google AI Resident) · Russ Salakhutdinov (Carnegie Mellon University) · Sergey Levine (University of Washington)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot E0

Discovering and Achieving Goals via World Models

Russell Mendonca (Carnegie Mellon University) · Oleh Rybkin (University of Pennsylvania) · Kostas Daniilidis (University of Pennsylvania) · Danijar Hafner (Google) · Deepak Pathak (Carnegie Mellon University)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot F0

Functional Regularization for Reinforcement Learning via Learned Fourier Features

Alexander Li (Carnegie Mellon University) · Deepak Pathak (Carnegie Mellon University)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot I3

Accelerating Robotic Reinforcement Learning via Parameterized Action Primitives

Murtaza Dalal (Carnegie Mellon University) · Deepak Pathak (Carnegie Mellon University) · Russ Salakhutdinov (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot E1

No RL, No Simulation: Learning to Navigate without Navigating

Meera Hahn (Georgia Institute of Technology) · Devendra Singh Chaplot (Carnegie Mellon University) · Shubham Tulsiani (UC Berkeley) · Mustafa Mukadam (Facebook AI Research) · James M Rehg (Georgia Institute of Technology) · Abhinav Gupta (Facebook AI Research/CMU)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot E1

Transfer Learning

Domain Adaptation with Invariant Representation Learning: What Transformations to Learn?

Petar Stojanov (Carnegie Mellon University) · Zijian Li (Guangdong University of Technology) · Mingming Gong (University of Melbourne) · Ruichu Cai (Guangdong University of Technology) · Jaime Carbonell · Kun Zhang (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot A3

Learning Domain Invariant Representations in Goal-conditioned Block MDPs

Beining Han (Tsinghua University) · Chongyi Zheng (Carnegie Mellon University) · Harris Chan (University of Toronto, Vector Institute) · Keiran Paster (University of Toronto) · Michael Zhang (University of Toronto / Vector Institute) · Jimmy Ba (University of Toronto / Vector Institute)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot H0

Property-Aware Relation Networks for Few-Shot Molecular Property Prediction

Yaqing Wang (Baidu Research) · Abulikemu Abuduweili (Carnegie Mellon University) · Quanming Yao (4paradigm)

Thu Dec 09 04:30 PM — 06:00 PM (PST) @ Poster Session 7 Spot A0

Two Sides of Meta-Learning Evaluation: In vs. Out of Distribution

Amrith Setlur (Carnegie Mellon University) · Oscar Li (Carnegie Mellon University) · Virginia Smith (Carnegie Mellon University)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot C0

Weak Supervision

Automatic Unsupervised Outlier Model Selection

Yue Zhao (Carnegie Mellon University) · Ryan Rossi (Purdue University) · Leman Akoglu (CMU)

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Poster Session 1 Spot F3

End-to-End Weak Supervision

Salva Rühling Cachay (Technical University of Darmstadt) · Benedikt Boecking (Carnegie Mellon University) · Artur Dubrawski (Carnegie Mellon University)

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Poster Session 6 Spot D0

Robust Contrastive Learning Using Negative Samples with Diminished Semantics

Songwei Ge (University of Maryland, College Park) · Shlok Mishra (University of Maryland, College Park) · Chun-Liang Li (Google) · Haohan Wang (Carnegie Mellon University) · David Jacobs (University of Maryland, USA)

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Poster Session 8 Spot B0

Datasets and Benchmarks Track

CrowdSpeech and Vox DIY: Benchmark Dataset for Crowdsourced Audio Transcription

Nikita Pavlichenko · Ivan Stelmakh · Dmitry Ustalov

Tue Dec 07 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 1 Spot C1

MultiBench: Multiscale Benchmarks for Multimodal Representation Learning

Paul Pu Liang · Yiwei Lyu · Xiang Fan · Zetian Wu · Yun Cheng · Jason Wu · Leslie (Yufan) Chen · Peter Wu · Michelle A. Lee · Yuke Zhu · Ruslan Salakhutdinov · Louis-Philippe Morency

Wed Dec 08 12:00 AM — 02:00 AM (PST) @ Dataset and Benchmark Poster Session 2 Spot D2

The CLEAR Benchmark: Continual LEArning on Real-World Imagery

Zhiqiu Lin · Jia Shi · Deepak Pathak · Deva Ramanan

Wed Dec 08 12:00 AM — 02:00 AM (PST) @ Dataset and Benchmark Poster Session 2 Spot D2

Argoverse 2.0: Next Generation Datasets for Self-driving Perception and Forecasting

Benjamin Wilson · William Qi · Tanmay Agarwal · John Lambert · Jagjeet Singh · Siddhesh Khandelwal · Bowen Pan · Ratnesh Kumar · Andrew Hartnett · Jhony Kaesemodel Pontes · Deva Ramanan · Peter Carr · James Hays

Wed Dec 08 12:00 AM — 02:00 AM (PST) @ Dataset and Benchmark Poster Session 2 Spot D3

RB2: Robotic Manipulation Benchmarking with a Twist

Sudeep Dasari · Jianren Wang · Joyce Hong · Shikhar Bahl · Yixin Lin · Austin Wang · Abitha Thankaraj · Karanbir Chahal · Berk Calli · Saurabh Gupta · David Held · Lerrel Pinto · Deepak Pathak · Vikash Kumar · Abhinav Gupta

Thu Dec 09 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 3 Spot D3

Therapeutics Data Commons: Machine Learning Datasets and Tasks for Drug Discovery and Development

Kexin Huang · Tianfan Fu · Wenhao Gao · Yue Zhao · Yusuf Roohani · Jure Leskovec · Connor Coley · Cao Xiao · Jimeng Sun · Marinka Zitnik

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 4 Spot E1

Revisiting Time Series Outlier Detection: Definitions and Benchmarks

Henry Lai · Daochen Zha · Junjie Xu · Yue Zhao · Guanchu Wang · Xia Hu

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 4 Spot E0

TenSet: A Large-scale Program Performance Dataset for Learned Tensor Compilers

Lianmin Zheng · Ruochen Liu · Junru Shao · Tianqi Chen · Joseph Gonzalez · Ion Stoica · Ameer Haj-Ali

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 4 Spot E2

Neural Latents Benchmark ’21: Evaluating latent variable models of neural population activity

Felix Pei · Joel Ye · David M Zoltowski · Anqi Wu · Raeed Chowdhury · Hansem Sohn · Joseph O’Doherty · Krishna V Shenoy · Matthew Kaufman · Mark Churchland · Mehrdad Jazayeri · Lee Miller · Jonathan Pillow · Il Memming Park · Eva Dyer · Chethan Pandarinath

Fri Dec 10 08:30 AM — 10:00 AM (PST) @ Dataset and Benchmark Poster Session 4 Spot C2

Organized Workshops

Artificial Intelligence for Humanitarian Assistance and Disaster Response Workshop

Ritwik Gupta · Esther Rolf · Robin Murphy · Eric Heim

Mon Dec 13 09:00 AM — 05:30 PM (PST)

CtrlGen: Controllable Generative Modeling in Language and Vision

Steven Y. Feng · Drew Arad Hudson · Tatsunori Hashimoto · Dongyeop Kang · Varun Prashant Gangal · Anusha Balakrishnan · Joel Tetreault

Mon Dec 13 08:00 AM — 12:00 AM (PST)

Machine Learning for Autonomous Driving

Xinshuo Weng · Jiachen Li · Nick Rhinehart · Daniel Omeiza · Ali Baheri · Rowan McAllister

Mon Dec 13 07:50 AM — 06:30 PM (PST)

Self-Supervised Learning – Theory and Practice

Pengtao Xie · Ishan Misra · Pulkit Agrawal · Abdelrahman Mohamed · Shentong Mo · Youwei Liang · Christin Jeannette Bohg · Kristina N Toutanova

Tue Dec 14 07:00 AM — 04:30 PM (PST)

Ecological Theory of RL: How Does Task Design Influence Agent Learning?

Manfred Díaz · Hiroki Furuta · Elise van der Pol · Lisa Lee · Shixiang (Shane) Gu · Pablo Samuel Castro · Simon Du · Marc Bellemare · Sergey Levine

Tue Dec 14 05:00 AM — 02:40 PM (PST)

Math AI for Education: Bridging the Gap Between Research and Smart Education

Pan Lu · Yuhuai Wu · Sean Welleck · Xiaodan Liang · Eric Xing · James McClelland

Tue Dec 14 08:55 AM — 06:05 PM (PST)

Organized Tutorials

ML for Physics and Physics for ML

Shirley Ho · Miles Cranmer

Mon Dec 06 09:00 AM — 01:00 PM (PST)

Invited Talks

Main conference: How Duolingo Uses AI to Assess, Engage and Teach Better

Luis von Ahn

Tue Dec 07 07:00 AM — 08:30 AM (PST)

I (Still) Can’t Believe It’s Not Better: A workshop for “beautiful” ideas that “should” have worked

Cosma Shalizi

Mon Dec 13 04:50 AM — 02:40 PM (PST)

CtrlGen: Controllable Generative Modeling in Language and Vision

Yulia Tsvetkov

Mon Dec 13 08:00 AM — 12:00 AM (PST)

Machine Learning for Autonomous Driving

Jeff Schneider

Mon Dec 13 07:50 AM — 06:30 PM (PST)

New Frontiers in Federated Learning: Privacy, Fairness, Robustness, Personalization and Data Ownership

Virginia Smith

Mon Dec 13 05:30 AM — 04:00 PM (PST)

Self-Supervised Learning – Theory and Practice

Louis-Philippe Morency

Tue Dec 14 07:00 AM — 04:30 PM (PST)

Learning and Decision-Making with Strategic Feedback

Steven Wu

Tue Dec 14 07:00 AM — 02:30 PM (PST)